Background:

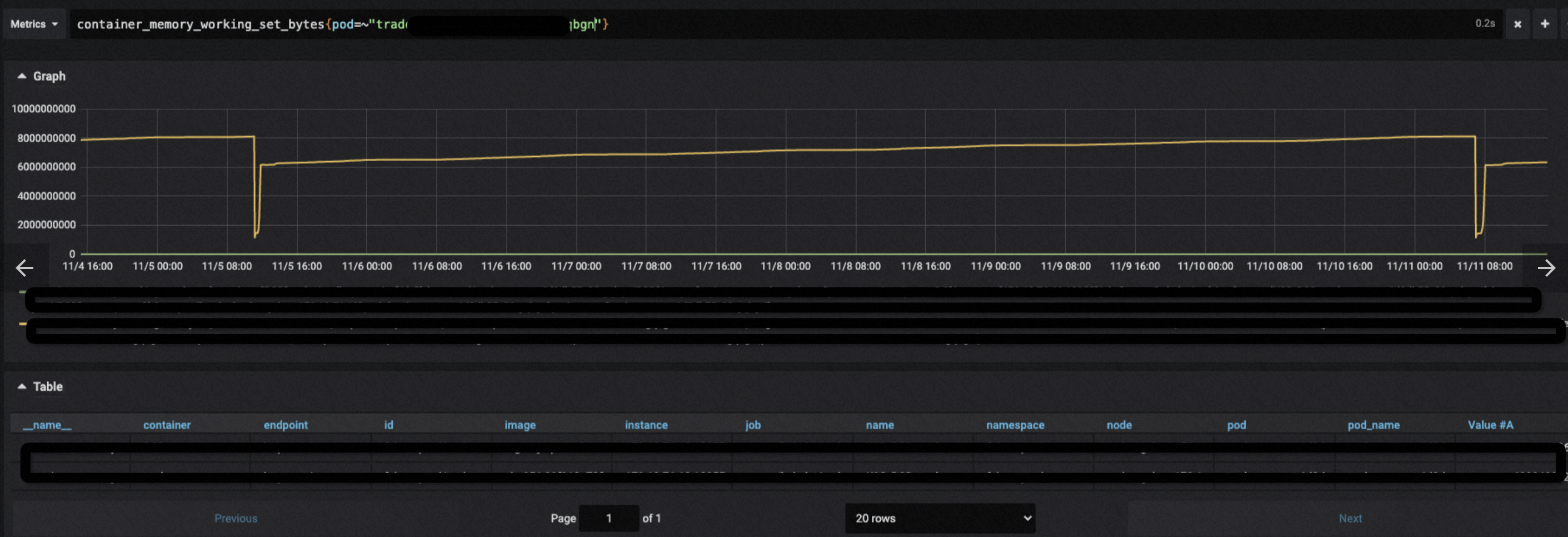

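A customer reported that their Java application pins the heap at 8 GB with -Xms/-Xmx, while the pod's memory limit is 16 GB. Even so, the pod was OOM-killed again and again. At OOM time the business process itself reported only about 8 GB, and the pod's working_set_memory in Prometheus was also just over 8 GB. So where did the remaining several GB go?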

Ways to check a pod's memory usage:

1. Checking pod memory usage via cgroup statistics:

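By default, the memory.stat you see from inside a pod is the cgroup accounting of the container's own directory. It does not include the pause container, nor the memory charged to the pod-level cgroup root directory.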

```
# cat /sys/fs/cgroup/memory/memory.stat
cache 1066070016
rss 4190208
rss_huge 0
shmem 1048363008
mapped_file 4730880
dirty 135168
writeback 0
swap 0
workingset_refault_anon 0
workingset_refault_file 405504
workingset_activate_anon 0
workingset_activate_file 0
workingset_restore_anon 0
workingset_restore_file 0
workingset_nodereclaim 0
pgpgin 267531
pgpgout 6185
pgfault 7293
pgmajfault 99
inactive_anon 528506880
active_anon 524181504
inactive_file 11718656
active_file 5677056
unevictable 0
hierarchical_memory_limit 1073741824
hierarchical_memsw_limit 1073741824
total_cache 1066070016
total_rss 4190208
total_rss_huge 0
total_shmem 1048363008
total_mapped_file 4730880
total_dirty 135168
total_writeback 0
total_swap 0
total_workingset_refault_anon 0
total_workingset_refault_file 405504
total_workingset_activate_anon 0
total_workingset_activate_file 0
total_workingset_restore_anon 0
total_workingset_restore_file 0
total_workingset_nodereclaim 0
total_pgpgin 267531
total_pgpgout 6185
total_pgfault 7293
total_pgmajfault 99
total_inactive_anon 528506880
total_active_anon 524181504
total_inactive_file 11718656
total_active_file 5677056
total_unevictable 0
```

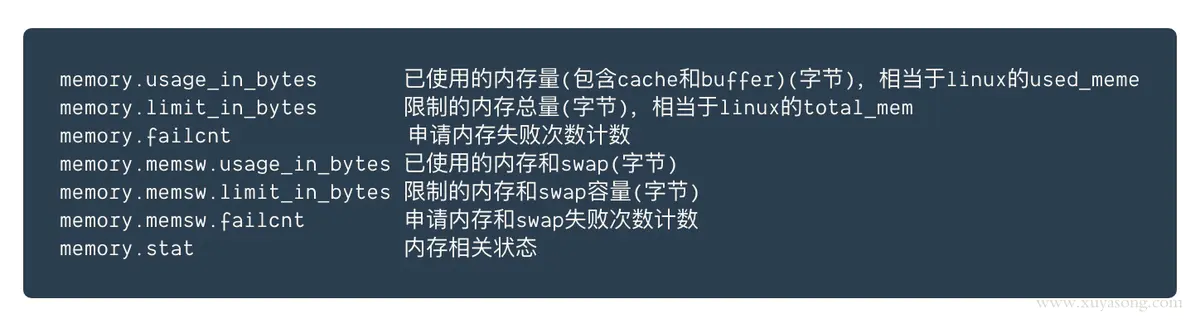

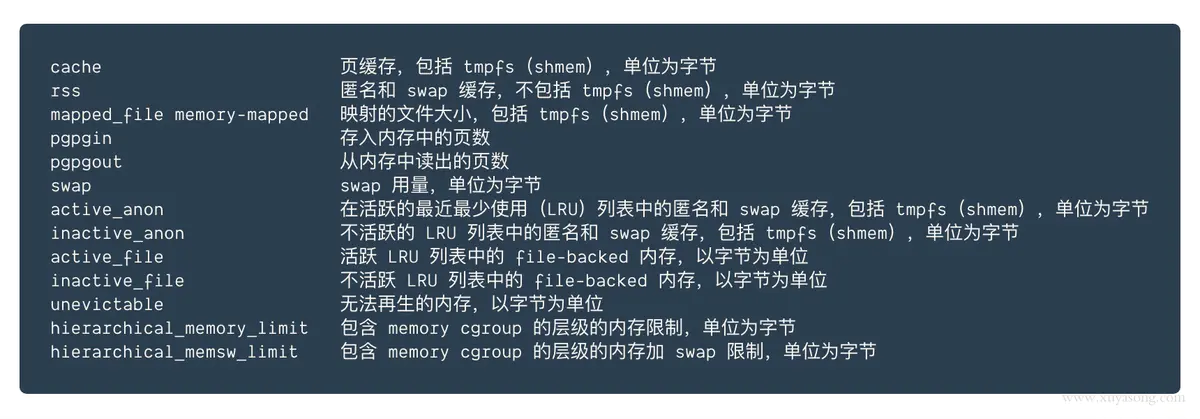

Memory categories in the cgroup:

memory.stat gives the most complete picture:

Why you should not rely on usage_in_bytes:

From section 5.5 (usage_in_bytes) of the kernel's cgroup-v1 memory documentation: "For efficiency, as other kernel components, memory cgroup uses some optimization to avoid unnecessary cacheline false sharing. usage_in_bytes is affected by the method and doesn't show 'exact' value of memory (and swap) usage, it's a fuzz value for efficient access. (Of course, when necessary, it's synchronized.) If you want to know more exact memory usage, you should use RSS+CACHE(+SWAP) value in memory.stat(see 5.2)." Reference: https://www.kernel.org/doc/Documentation/cgroup-v1/memory.txt

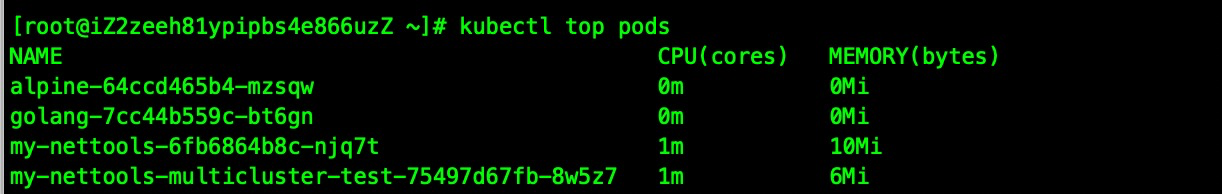

2. Checking via kubectl top pod:

```
# kubectl top pods
NAME                                             CPU(cores)   MEMORY(bytes)
alpine-64ccd465b4-mzsqw                          0m           0Mi
golang-7cc44b559c-bt6gn                          0m           0Mi
my-nettools-multicluster-test-75497d67fb-8w5z7   1m           3Mi
my-nettools-multicluster-test-75497d67fb-fmskd   1m           3Mi
my-nettools-primary-8584bffdf5-x97rx             1m           15Mi
my-wordpress-8b77b598c-ncfdm                     1m           13Mi
nginx-deployment-basic-598887494c-9jqp9          1m           2Mi
nginx-qinhexing-6cb7c848b-kqbxn                  0m           1Mi
stress-ceshi-nodename-7fdcb59799-zm275           0m           0Mi
tomcat-875bdfdc-6p725                            1m           83Mi
```

How kubectl top pod computes memory:

The memory reported by kubectl top pod is not cAdvisor's container_memory_usage_bytes. It is container_memory_working_set_bytes, computed as follows:

`container_memory_usage_bytes = container_memory_rss + container_memory_cache + kernel memory`

`container_memory_working_set_bytes = container_memory_usage_bytes - total_inactive_file`

That is, `container_memory_working_set_bytes = container_memory_rss + container_memory_cache + kernel memory (usually negligible) - total_inactive_file`. Here total_inactive_file is the inactive file-backed page cache, not anonymous pages.
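To make the formula concrete, here is a minimal shell sketch. It is not cAdvisor's actual code, and the function names are hypothetical.

The sketch reads a cgroup v1 memory.stat file and computes usage as total_rss + total_cache + total_swap. That is the RSS+CACHE(+SWAP) value the kernel documentation recommends; kernel memory is ignored. It then subtracts total_inactive_file to approximate the working set. cAdvisor itself starts from memory.usage_in_bytes, so its figures can differ slightly.

```bash
#!/usr/bin/env bash
# Sketch: approximate usage and working set from a cgroup v1 memory.stat file.

# Print the value of one key from memory.stat (0 if the key is absent).
memstat_get() {
  local file=$1 key=$2
  awk -v k="$key" '$1 == k { v = $2 } END { if (v == "") v = 0; print v }' "$file"
}

# Usage as the kernel doc recommends: RSS + CACHE (+ SWAP), hierarchical totals.
memstat_usage() {
  local file=$1
  echo $(( $(memstat_get "$file" total_rss) + $(memstat_get "$file" total_cache) + $(memstat_get "$file" total_swap) ))
}

# Working set = usage - total_inactive_file, floored at 0.
memstat_working_set() {
  local file=$1 usage inactive
  usage=$(memstat_usage "$file")
  inactive=$(memstat_get "$file" total_inactive_file)
  if (( usage > inactive )); then
    echo $(( usage - inactive ))
  else
    echo 0
  fi
}
```

Inside a pod, run it as `memstat_working_set /sys/fs/cgroup/memory/memory.stat`. For the memory.stat shown in section 1, this yields 1058541568 bytes (about 1009 MiB). Almost all of that is the 1048363008 bytes of shmem. tmpfs pages sit on the anon LRU lists rather than inactive_file, so subtracting total_inactive_file does not remove them.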

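container_memory_working_set_bytes is the closest measure of the memory a container really uses, and it is the figure to compare against the limit when judging OOM risk. Part of active_file could still be reclaimed. When an OOM does occur, however, it means nothing reclaimable was left.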

3. Checking by running top inside the pod:

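Running top inside a pod has a pitfall worth stressing. The CPU and memory figures in the upper summary section belong to the node, not the pod. Only the Tasks line and the per-PID list below it reflect the pod itself.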

```
Tasks: 8 total, 1 running, 7 sleeping, 0 stopped, 0 zombie
%Cpu0 : 53.5 us, 4.4 sy, 0.0 ni, 41.8 id, 0.0 wa, 0.0 hi, 0.3 si, 0.0 st
%Cpu1 : 52.0 us, 2.7 sy, 0.0 ni, 44.9 id, 0.0 wa, 0.0 hi, 0.3 si, 0.0 st
%Cpu2 : 41.7 us, 6.1 sy, 0.0 ni, 51.2 id, 0.7 wa, 0.0 hi, 0.3 si, 0.0 st
%Cpu3 : 41.1 us, 6.1 sy, 0.0 ni, 52.2 id, 0.3 wa, 0.0 hi, 0.3 si, 0.0 st
MiB Mem : 15752.1 total, 352.3 free, 5164.6 used, 10235.1 buff/cache
MiB Swap: 0.0 total, 0.0 free, 0.0 used. 10118.9 avail Mem

    PID USER      PR  NI    VIRT    RES    SHR S  %CPU  %MEM     TIME+ COMMAND
      1 root      20   0    2540     92      0 S   0.0   0.0   0:00.02 sleep
     23 root      20   0   58736   1520      0 S   0.0   0.0   0:00.00 nginx
     24 www-data  20   0   59064   2564    720 S   0.0   0.0   0:17.95 nginx
     25 www-data  20   0   59064   1820      0 S   0.0   0.0   0:00.00 nginx
     26 www-data  20   0   59064   1820      0 S   0.0   0.0   0:00.00 nginx
     27 www-data  20   0   59064   1820      0 S   0.0   0.0   0:00.00 nginx
    179 root      20   0    4468   3824   3168 S   0.0   0.0   0:00.00 bash
```

4. Checking memory usage with docker stats and crictl stats:

```
# docker stats e00a11510727
CONTAINER ID   NAME                                                                                               CPU %   MEM USAGE / LIMIT   MEM %   NET I/O   BLOCK I/O         PIDS
e00a11510727   k8s_my-nettools_my-nettools-primary-8584bffdf5-x97rx_default_a9d684a4-53fe-4114-b8d0-8f138b933551_1   0.00%   3.82MiB / 1GiB      0.37%   0B / 0B   21.2MB / 1.59MB   6

# crictl stats --id 72dc2a4abc659
CONTAINER       CPU %   MEM      DISK      INODES
72dc2a4abc659   0.00    3.92MB   16.68MB   9
```

5. Checking the metrics data exposed through the apiserver:

The metrics API returns values that have already been computed. What you get from it is a ready-made figure, not raw data.

```
# kubectl get --raw "/apis/metrics.k8s.io/v1beta1/namespaces/sj8***rod/pods/prod****-zj67p" | jq
{
  "kind": "PodMetrics",
  "apiVersion": "metrics.k8s.io/v1beta1",
  "metadata": {
    "name": "prod-****-zj67p",
    "namespace": "s****d",
    "selfLink": "/apis/metrics.k8s.io/v1beta1/namespaces/sj****od/pods/prod-****-zj67p",
    "creationTimestamp": "2021-11-09T13:03:36Z"
  },
  "timestamp": "2021-11-09T13:03:20Z",
  "window": "30s",
  "containers": [
    {
      "name": "prod-****ess",
      "usage": {
        "cpu": "1045813398n",
        "memory": "2513804Ki"
      }
    }
  ]
}
```

You can also query these APIs directly with kubectl, for example:

```
kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes
kubectl get --raw /apis/metrics.k8s.io/v1beta1/pods
kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes/<node-name>
kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>
```
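The metrics API returns raw Kubernetes quantities. Below is a small sketch for turning the two values above into readable units. The helper names are hypothetical, and the sketch only understands the `n` (nanocores) and `Ki` suffixes seen here.

```bash
#!/usr/bin/env bash
# Sketch: convert the two quantity formats seen in PodMetrics output.
# Only the "n" and "Ki" suffixes are handled; Mi, Gi, m, etc. are not parsed.

# "1045813398n" -> CPU cores, two decimals
nanocores_to_cores() {
  local q=${1%n}   # strip the trailing "n"
  awk -v n="$q" 'BEGIN { printf "%.2f\n", n / 1e9 }'
}

# "2513804Ki" -> MiB, one decimal
ki_to_mib() {
  local q=${1%Ki}  # strip the trailing "Ki"
  awk -v k="$q" 'BEGIN { printf "%.1f\n", k / 1024 }'
}
```

For the pod above:
- `nanocores_to_cores 1045813398n` prints 1.05, i.e. about one core.
- `ki_to_mib 2513804Ki` prints 2454.9, i.e. about 2.4 GiB.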

6. Checking the data collected by kubelet's cAdvisor:

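The cAdvisor summary is also a set of computed metrics. Someone fluent in reading cAdvisor data could already locate the answer at this point. I admit that before this incident I had never examined the cAdvisor metrics in detail.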

To view the cAdvisor summary, fetch it directly like this (annotations added as `//` comments):

```
# curl 127.0.0.1:10255/stats/summary
{
  "node": {
    "nodeName": "cn-beijing.192.168.0.237",
    "systemContainers": [
      {
        "name": "kubelet",
        "startTime": "2021-09-06T02:28:36Z",
        "cpu": { "time": "2021-11-22T06:42:50Z", "usageNanoCores": 145371200, "usageCoreNanoSeconds": 827652227406905 },
        "memory": { "time": "2021-11-22T06:42:50Z", "usageBytes": 196685824, "workingSetBytes": 161320960, "rssBytes": 126496768, "pageFaults": 5177146887, "majorPageFaults": 2343 }
      },
      {
        "name": "runtime",
        "startTime": "2021-10-09T05:58:09Z",
        "cpu": { "time": "2021-11-22T06:42:46Z", "usageNanoCores": 100393752, "usageCoreNanoSeconds": 594566183951015 },
        "memory": { "time": "2021-11-22T06:42:46Z", "usageBytes": 1394094080, "workingSetBytes": 1075867648, "rssBytes": 124157952, "pageFaults": 6155392122, "majorPageFaults": 924 }
      },
      {
        "name": "pods",
        "startTime": "2021-10-09T05:57:59Z",
        "cpu": { "time": "2021-11-22T06:43:02Z", "usageNanoCores": 1284929792, "usageCoreNanoSeconds": 4873156254419795 },
        "memory": { "time": "2021-11-22T06:43:02Z", "availableBytes": 11415199744, "usageBytes": 7008845824, "workingSetBytes": 4368039936, "rssBytes": 3479117824, "pageFaults": 0, "majorPageFaults": 0 }
      }
    ],
    "startTime": "2021-09-06T02:28:25Z",
    "cpu": { "time": "2021-11-22T06:43:02Z", "usageNanoCores": 1715353110, "usageCoreNanoSeconds": 9464334847104386 },
    "memory": { "time": "2021-11-22T06:43:02Z", "availableBytes": 6170161152, "usageBytes": 14745010176, "workingSetBytes": 10347081728, "rssBytes": 4769071104, "pageFaults": 31828203, "majorPageFaults": 132 },
...
  {
    "podRef": { "name": "my-net****x97rx", "namespace": "default", "uid": "a9d684a4-53fe-4114-b8d0-8f138b933551" },
    "startTime": "2021-11-15T12:16:16Z",
    "containers": [
      {
        "name": "my-nettools",
        "startTime": "2021-11-18T13:27:44Z",
        "cpu": { "time": "2021-11-22T06:43:02Z", "usageNanoCores": 50389, "usageCoreNanoSeconds": 38669070971 },
        "memory": {
          "time": "2021-11-22T06:43:02Z",
          "availableBytes": 1058471936,   // container level: plenty available, very little used
          "usageBytes": 34553856,
          "workingSetBytes": 15269888,
          "rssBytes": 3649536,
          "pageFaults": 24684,
          "majorPageFaults": 165
        },
        "rootfs": { "time": "2021-11-22T06:43:02Z", "device": "", "availableBytes": 57990582272, "capacityBytes": 126692048896, "usedBytes": 10391552, "inodesFree": 7291487, "inodes": 7864320, "inodesUsed": 30 },
        "logs": { "time": "2021-11-22T06:43:02Z", "device": "", "availableBytes": 57990582272, "capacityBytes": 126692048896, "usedBytes": 28672, "inodesFree": 7291487, "inodes": 7864320, "inodesUsed": 572833 }
      }
    ],
    "cpu": { "time": "2021-11-22T06:42:58Z", "usageNanoCores": 77743, "usageCoreNanoSeconds": 715087495132 },
    "memory": {
      "time": "2021-11-22T06:42:58Z",
      "availableBytes": 114749440,    // pod level: note how little is available here
      "usageBytes": 978309120,        // already over 900 MB used
      "workingSetBytes": 958992384,   // working set is also over 900 MB
      "rssBytes": 3649536,
      "pageFaults": 0,
      "majorPageFaults": 0
    },
    "network": { "time": "2021-11-22T06:42:55Z", "name": "eth0", "rxBytes": 61572501, "rxErrors": 0, "txBytes": 76220238, "txErrors": 0,
                 "interfaces": [ { "name": "eth0", "rxBytes": 61572501, "rxErrors": 0, "txBytes": 76220238, "txErrors": 0 } ] },
    "volume": [
      {
        "time": "2021-11-22T06:42:39Z", "device": "",
        "availableBytes": 7314903040, "capacityBytes": 8258621440,
        "usedBytes": 943718400,           // the tmpfs-backed emptyDir used in my test later
        "inodesFree": 2016263, "inodes": 2016265, "inodesUsed": 2,
        "name": "volume-1623324311949"    // name of my tmpfs dir
      },
      {
        "time": "2021-11-15T12:17:09Z", "device": "",
        "availableBytes": 8258609152, "capacityBytes": 8258621440, "usedBytes": 12288,
        "inodesFree": 2016256, "inodes": 2016265, "inodesUsed": 9,
        "name": "default-token-kn777"
      }
    ],
    "ephemeral-storage": { "time": "2021-11-22T06:43:02Z", "device": "", "availableBytes": 57990582272, "capacityBytes": 126692048896, "usedBytes": 10420224, "inodesFree": 7291487, "inodes": 7864320, "inodesUsed": 30 }
  },
```

You can also view cAdvisor's detailed per-metric data. It is harder to read, so the summary is usually the better place to start: it is the source of both kubectl top pod and the monitoring platform's metrics.

```
# curl http://127.0.0.1:10255/metrics/cadvisor | grep my-*****-x97rx | grep -i memory
container_memory_cache{container="",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-poda9551.slice",image="",name="",namespace="default",pod="my-nettools-primary-8584bffdf5-x97rx"} 9.74966784e+08 1637563886638
container_memory_cache{container="POD",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-poda9d51.slice/docker-6b14fd.scope",image="registry-vpc.cn-beijing.aliyuncs.com/acs/pause:3.2",name="k8s_POD_my-nettools-primary-8584bffdf5-x97rx_default_a9d684a4-53fe-4114-b8d0-8f138b933551_0",namespace="default",pod="my-nett****-x97rx"} 0 1637563884720
...
container_spec_memory_swap_limit_bytes{container="my-nettools",id="/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-poda9d651.slice/docker-e031fe3f53.scope",image="sha256:fa7b0d7ccb2a5d0174b4eb0972fad721af98d3e0e290dbfacb9b3537152c0580",name="k8s_my-nettools_****x97rx_default_a9d684a4-53fe-4114-b8d0-8f138b933551_1",namespace="default",pod="my-****97rx"} 1.073741824e+09
```

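The answer lies in the gap between the pod-level and container-level memory figures in the summary above. Here is a small arithmetic sketch with a hypothetical helper.

The sketch subtracts the containers' workingSetBytes from the pod's workingSetBytes. What remains is memory charged to the pod but to no listed container. It assumes the pause container's working set is negligible, since pause does not appear in the excerpt.

```bash
#!/usr/bin/env bash
# Sketch: memory charged at pod level but not to any listed container.
#   pod workingSetBytes - sum(container workingSetBytes)
# Usage: pod_unattributed_bytes <pod-ws> <container-ws> [<container-ws> ...]
pod_unattributed_bytes() {
  local pod_ws=$1; shift
  local sum=0 c
  for c in "$@"; do
    sum=$(( sum + c ))
  done
  echo $(( pod_ws - sum ))
}
```

`pod_unattributed_bytes 958992384 15269888` prints 943722496. That is within 4 KiB of the 943718400 usedBytes reported for the tmpfs emptyDir volume-1623324311949, so the "missing" pod memory is essentially the tmpfs content.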
Computing the number of CPU cores in use from cgroup values:

A simple calculation script:

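```bash
# cpuacct.usage: cumulative CPU time of this cgroup in nanoseconds (cgroup v1)
tstart=$(date +%s%N); cstart=$(cat /sys/fs/cgroup/cpu/cpuacct.usage)
sleep 5
tstop=$(date +%s%N); cstop=$(cat /sys/fs/cgroup/cpu/cpuacct.usage)
# CPU time consumed / wall-clock time elapsed = average number of cores in use
result=$(awk -v c=$((cstop - cstart)) -v t=$((tstop - tstart)) 'BEGIN { printf "%.2f\n", c / t }')
echo "$result"
```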

The OOM log captured when the issue occurred in this case:

```
Nov 11 06:55:00 iZb10Z kernel: GC Thread#2 invoked oom-killer: gfp_mask=0x6000c0(GFP_KERNEL), nodemask=(null), order=0, oom_score_adj=873
Nov 11 06:55:00 iZ0Z kernel: GC Thread#2 cpuset=docker-24ec5b44****338000ff2f7516.scope mems_allowed=0
Nov 11 06:55:00 iZbp15by10Z kernel: CPU: 3 PID: 213115 Comm: GC Thread#2 Tainted: G W OE 4.19.91-22.2.al7.x86_64 #1
Nov 11 06:55:00 iZbp1nenhby10Z kernel: Hardware name: Alibaba Cloud Alibaba Cloud ECS, BIOS 8a46cfe 04/01/2014
Nov 11 06:55:00 ienhby10Z kernel: Call Trace:
Nov 11 06:55:00 iZbp15y10Z kernel: dump_stack+0x66/0x8b
Nov 11 06:55:00 iZbpy10Z kernel: dump_memcg_header+0x12/0x40
Nov 11 06:55:00 iZb10Z kernel: oom_kill_process+0x219/0x310
Nov 11 06:55:00 iZbZ kernel: out_of_memory+0xf7/0x4c0
Nov 11 06:55:00 iZb10Z kernel: mem_cgroup_out_of_memory+0xc2/0xe0
Nov 11 06:55:00 iZbpy10Z kernel: try_charge+0x7b4/0x810
Nov 11 06:55:00 i0Z kernel: mem_cgroup_charge+0xfe/0x250
Nov 11 06:55:00 iZb10Z kernel: do_anonymous_page+0xe1/0x5b0
Nov 11 06:55:00 iZbp0Z kernel: __handle_mm_fault+0x665/0xa20
Nov 11 06:55:00 iZb0Z kernel: handle_mm_fault+0x106/0x1c0
Nov 11 06:55:00 iZbp10Z kernel: __do_page_fault+0x1b7/0x470
Nov 11 06:55:00 iZbpy10Z kernel: do_page_fault+0x32/0x140
Nov 11 06:55:00 iZbhby10Z kernel: ? async_page_fault+0x8/0x30
Nov 11 06:55:00 iZbpnhby10Z kernel: async_page_fault+0x1e/0x30
Nov 11 06:55:00 iZbpy10Z kernel: RIP: 0033:0x7faef467a164
Nov 11 06:55:00 iZbpy10Z kernel: Code: 43 38 49 89 45 38 48 8b 43 30 49 89 45 30 48 8b 43 28 49 89 45 28 48 8b 43 20 49 89 45 20 48 8b 43 18 49 89 45 18 48 8b 43 10 <49> 89 45 10 48 8b 43 08 49 89 45 08 48 8b 03 49 89 45 00 e9 34 ff
Nov 11 06:55:00 iZbpy10Z kernel: RSP: 002b:00007fae8e5b69c0 EFLAGS: 00010217
Nov 11 06:55:00 iZbpy10Z kernel: RAX: 0000000087a956d8 RBX: 000000077c6f5c90 RCX: 000000067d541003
Nov 11 06:55:00 iZbpy10Z kernel: RDX: ffffffffff85c208 RSI: 0000000000000001 RDI: 00007fae5c0219f0
Nov 11 06:55:00 iZbpy10Z kernel: RBP: 00007fae8e5b6a30 R08: 0000000000000000 R09: 00000000000005c7
Nov 11 06:55:00 iZbpy10Z kernel: R10: 0000000000000001 R11: 000000000000000f R12: 00007fae5c017290
Nov 11 06:55:00 iZbpy10Z kernel: R13: 000000067d541000 R14: 0000000000000003 R15: 00007fae5c021a80
Nov 11 06:55:00 iZbpy10Z kernel: Task in /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod96bc****670f9.slice/docker-24ec*5ae338000ff2f7516.scope killed as a result of limit of /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod96bc17dc_f218_4e61_be05_c945fa8670f9.slice
```

The following lines show the pod's memory limit and usage. Usage has hit the limit, so the pod was OOM-killed:

```
Nov 11 06:55:00 iZy10Z kernel: memory: usage 16777216kB, limit 16777216kB, failcnt 17883
Nov 11 06:55:00 iZy10Z kernel: memory+swap: usage 16777216kB, limit 9007199254740988kB, failcnt 0
Nov 11 06:55:00 iZbpy10Z kernel: kmem: usage 0kB, limit 9007199254740988kB, failcnt 0
```

Next, the memory cgroup prints the usage of each docker directory under the pod's cgroup root. This entry shows that a single docker scope held 8 GB of shmem, which is counted under cache:

```
Nov 11 06:55:00 iZbpy10Z kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-po*34a0838.scope: cache:8840436KB rss:388KB rss_huge:0KB shmem:8840436KB mapped_file:0KB dirty:0KB writeback:0KB swap:0KB workingset_refault_anon:0KB workingset_refault_file:0KB workingset_activate_anon:0KB workingset_activate_file:0KB workingset_restore_anon:0KB workingset_restore_file:0KB workingset_nodereclaim:0KB inactive_anon:4207500KB active_anon:4631220KB inactive_file:748KB active_file:0KB unevictable:0KB
```

The next entry is the cgroup of the container that ran the main business process at the time. Because the 8 GB of shmem above sits in a different docker scope, the cgroup had evidently recorded "leftover" pod memory usage:

```
Nov 11 06:55:00 iZbp1*10Z kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-p*8000ff2f7516.scope: cache:132KB rss:7917200KB rss_huge:7163904KB shmem:0KB mapped_file:660KB dirty:0KB writeback:0KB swap:0KB workingset_refault_anon:0KB workingset_refault_file:1716KB workingset_activate_anon:0KB workingset_activate_file:0KB workingset_restore_anon:0KB workingset_restore_file:0KB workingset_nodereclaim:0KB inactive_anon:7917060KB active_anon:0KB inactive_file:0KB active_file:0KB unevictable:0KB
```

Finally, the OOM killer output after the cgroup memory limit was hit. It scored the tasks and killed the java process (rss is counted in pages; one page is 4 KB):

```
Nov 11 06:55:00 iZbphby10Z kernel: Tasks state (memory values in pages):
Nov 11 06:55:00 iZbphby10Z kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
Nov 11 06:55:00 iZbphby10Z kernel: [2064649] 0 2064649 242 1 24576 0 -998 pause
Nov 11 06:55:00 iZbphby10Z kernel: [ 212336] 1001 212336 1095 184 49152 0 873 tini
Nov 11 06:55:00 iZbphby10Z kernel: [ 212425] 1001 212425 4025962 1985479 16760832 0 873 java
Nov 11 06:55:00 iZbphby10Z kernel: oom_reaper: reaped process 212425 (java), now anon-rss:0kB, file-rss:0kB, shmem-rss:32kB
```
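A quick arithmetic check on the log above, as a shell sketch. The helper names are hypothetical, and 4 KB pages are assumed, as stated in the log annotation.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the numbers in a memcg OOM log.

# The "Tasks state" table reports rss in pages; convert to kB (4 kB pages assumed).
pages_to_kb() {
  echo $(( $1 * 4 ))
}

# Sum per-cgroup charges (kB) taken from the "Memory cgroup stats for ..." lines.
sum_kb() {
  local total=0 v
  for v in "$@"; do
    total=$(( total + v ))
  done
  echo "$total"
}
```

- `pages_to_kb 1985479` gives 7941916 kB for java, close to the 7917200 kB of rss in its container scope.
- `sum_kb 8840436 388 132 7917200` (cache plus rss of both scopes) gives 16758156 kB. That is within about 19 MB of the 16777216 kB limit.

In other words, the old scope's roughly 8.4 GiB of shmem plus the java process's roughly 7.6 GiB fill the whole 16 GiB limit.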

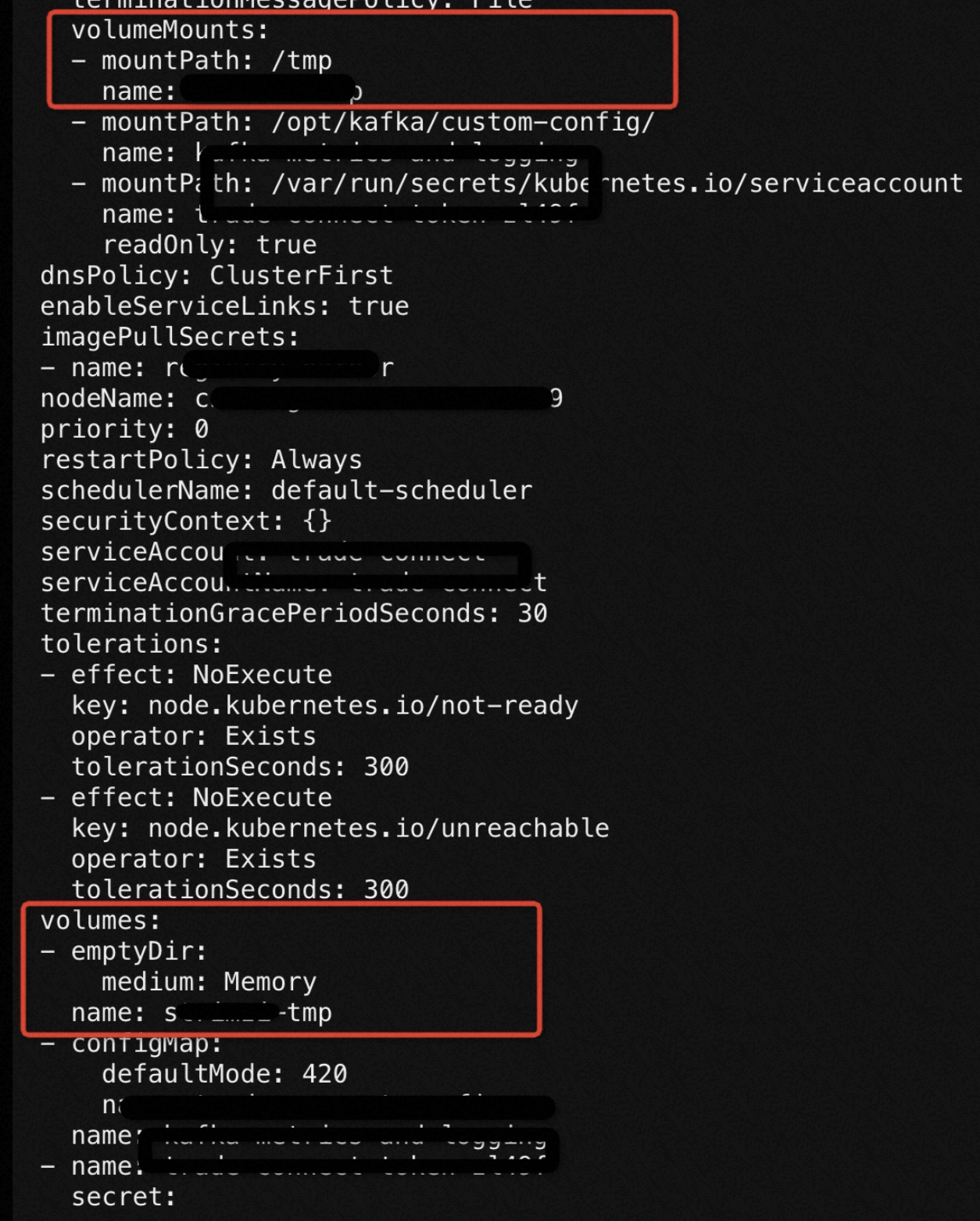

The cause of the abnormal shmem usage behind the OOM: emptyDir

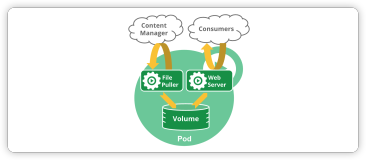

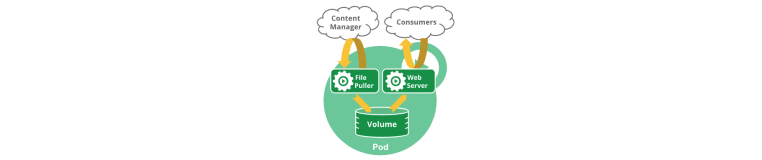

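When a Pod is assigned to a node, its emptyDir volume is created and exists for as long as the Pod runs on that node. As the name says, the volume is initially empty. All containers in the Pod can read and write the same files in the emptyDir volume, whether they mount it at the same path or at different paths. When the Pod is removed from the node for any reason, the data in the emptyDir is deleted permanently.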

Note: a container crashing does not remove the Pod from the node, so the data in an emptyDir volume is safe across container crashes.

Some uses for an emptyDir:

- Scratch space, such as for a disk-based merge sort.

- Checkpointing a long computation so it can easily resume from its pre-crash state.

- Holding files that a content-manager container fetches while a web server container serves the data.

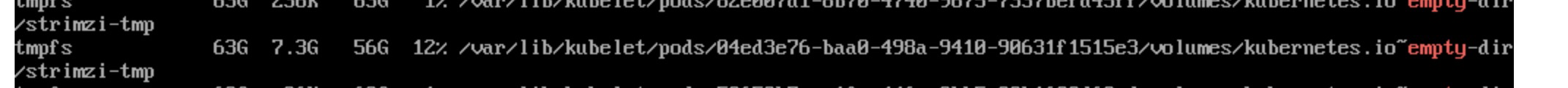

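Depending on your environment, emptyDir volumes are stored on whatever medium backs the node: disk, SSD or network storage. However, you can set the emptyDir.medium field to "Memory" to tell Kubernetes to mount a tmpfs (a RAM-backed filesystem) for you. tmpfs is very fast, but unlike a disk it is cleared on node reboot. Every file you write to it also counts toward the container's memory consumption and is subject to the container's memory limit.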

Reproducing and verifying the issue:

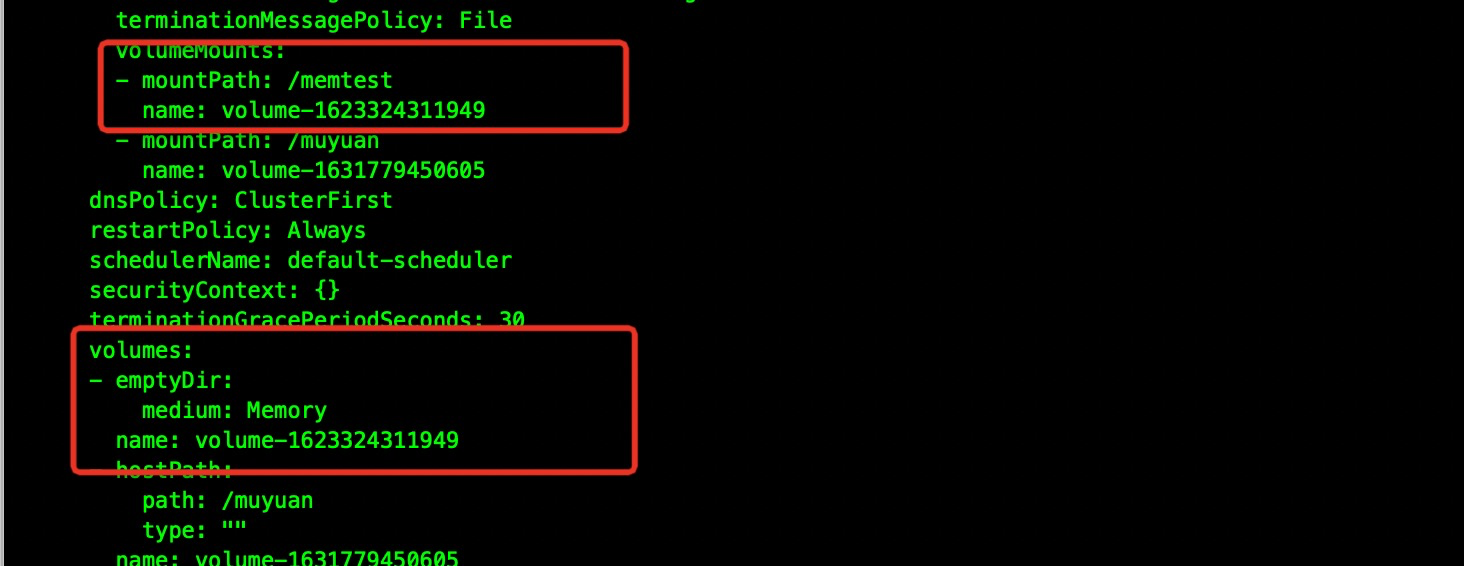

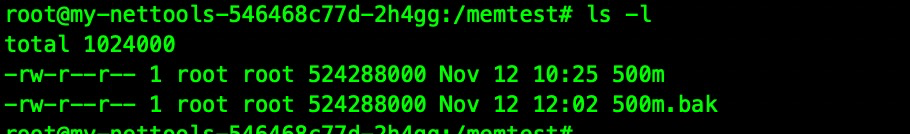

1. Create an emptyDir with medium Memory

2. Put some large files into the mount directory

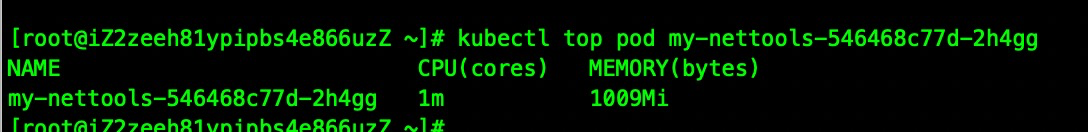

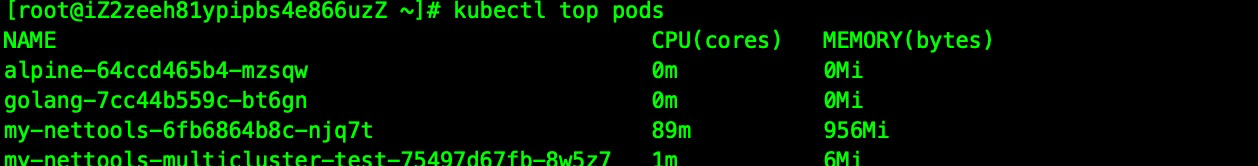

3. Observe with kubectl top pod
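The reproduction steps above can be sketched as a shell function that prints a Pod manifest with a memory-backed emptyDir. This is a hypothetical minimal version, not the manifest used in this case. The pod name, the busybox image, the container name and the /cache mount path are assumptions. `emptyDir.medium: Memory` and `resources.limits.memory` are the standard Pod spec fields.

```bash
#!/usr/bin/env bash
# Sketch: print a Pod manifest whose emptyDir is backed by tmpfs (medium: Memory).
# Usage: render_tmpfs_pod <pod-name> <memory-limit>
render_tmpfs_pod() {
  local name=$1 limit=$2
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
  - name: app
    image: busybox
    command: ["sleep", "86400"]
    resources:
      limits:
        memory: ${limit}
    volumeMounts:
    - name: cache
      mountPath: /cache
  volumes:
  - name: cache
    emptyDir:
      medium: Memory
EOF
}
```

For example:
- `render_tmpfs_pod tmpfs-demo 1Gi | kubectl apply -f -` creates the pod.
- `kubectl exec tmpfs-demo -- dd if=/dev/zero of=/cache/big bs=1M count=2048` then writes more than the 1Gi limit into tmpfs, which should end in an OOM kill like the reproduction log below.

Because the emptyDir outlives container restarts, the data written before the kill stays charged to the pod.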

A question I had at the time:

The kubepods-burstable-***0f9.slice part is identical, so both entries belong to the same pod:

docker-295fa7c9e5ed1***14d6b5180fdfc34a0838

docker-24ec5b440a5****38000ff2f7516.scope

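The docker scopes, however, differ, so these are two containers. Yet in practice the pod's cgroup directory normally holds only two docker directories, one for the pause container and one for the main business container. A "historical" docker directory showing up there is a serious red flag!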

OOM log from the reproduction, for reference:

```
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: dd invoked oom-killer: gfp_mask=0x6000c0(GFP_KERNEL), nodemask=(null), order=0, oom_score_adj=968
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: dd cpuset=docker-45c3345632cb4d19698badde97941c43f6c3593f21d98a847049b5f03805b642.scope mems_allowed=0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: CPU: 1 PID: 2992940 Comm: dd Kdump: loaded Tainted: G OE 4.19.91-22.2.al7.x86_64 #1
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Hardware name: Alibaba Cloud Alibaba Cloud ECS, BIOS 8c24b4c 04/01/2014
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Call Trace:
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: dump_stack+0x66/0x8b
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: dump_memcg_header+0x12/0x40
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: oom_kill_process+0x219/0x310
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: out_of_memory+0xf7/0x4c0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: mem_cgroup_out_of_memory+0xc2/0xe0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: try_charge+0x7b4/0x810
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: mem_cgroup_charge+0xfe/0x250
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: shmem_add_to_page_cache+0x1d6/0x340
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: ? shmem_alloc_and_acct_page+0x76/0x1d0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: shmem_getpage_gfp+0x5df/0xce0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: ? copyin+0x22/0x30
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: shmem_getpage+0x2d/0x40
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: generic_perform_write+0xb2/0x190
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: __generic_file_write_iter+0x184/0x1c0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: ? __handle_mm_fault+0x665/0xa20
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: generic_file_write_iter+0xec/0x1d0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: new_sync_write+0xdb/0x120
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: vfs_write+0xad/0x1a0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: ? vfs_read+0x110/0x130
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: ksys_write+0x4a/0xc0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: do_syscall_64+0x5b/0x1b0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: entry_SYSCALL_64_after_hwframe+0x44/0xa9
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: RIP: 0033:0x7fe35dee5c27
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Code: Bad RIP value.
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: RSP: 002b:00007ffcb77532b8 EFLAGS: 00000246 ORIG_RAX: 0000000000000001
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: RAX: ffffffffffffffda RBX: 0000000000100000 RCX: 00007fe35dee5c27
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: RDX: 0000000000100000 RSI: 00007fe35dcd9000 RDI: 0000000000000001
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: RBP: 00007fe35dcd9000 R08: 00007fe35dcd8010 R09: 00007fe35dcd8010
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: R10: 00007fe35dcd9000 R11: 0000000000000246 R12: 0000000000000000
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: R13: 0000000000000000 R14: 00007fe35dcd9000 R15: 0000000000100000
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Task in /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice/docker-45c3345632cb4d19698badde97941c43f6c3593f21d98a847049b5f03805b642.scope killed as a result of limit of /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: memory: usage 1048576kB, limit 1048576kB, failcnt 243
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: memory+swap: usage 1048576kB, limit 9007199254740988kB, failcnt 0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: kmem: usage 0kB, limit 9007199254740988kB, failcnt 0
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice: cache:0KB rss:0KB rss_huge:0KB shmem:0KB mapped_file:0KB dirty:0KB writeback:0KB swap:0KB workingset_refault_anon:0KB workingset_refault_file:0KB workingset_activate_anon:0KB workingset_activate_file:0KB workingset_restore_anon:0KB workingset_restore_file:0KB workingset_nodereclaim:0KB inactive_anon:0KB active_anon:0KB inactive_file:0KB active_file:0KB unevictable:0KB
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice/docker-27240dd20e37e46a25ae6c2219763a8123782762e981e46be2b3311620e17fcc.scope: cache:0KB rss:0KB rss_huge:0KB shmem:0KB mapped_file:0KB dirty:0KB writeback:0KB swap:0KB workingset_refault_anon:0KB workingset_refault_file:0KB workingset_activate_anon:0KB workingset_activate_file:0KB workingset_restore_anon:0KB workingset_restore_file:0KB workingset_nodereclaim:0KB inactive_anon:0KB active_anon:0KB inactive_file:0KB active_file:0KB unevictable:0KB
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice/docker-45c3345632cb4d19698badde97941c43f6c3593f21d98a847049b5f03805b642.scope: cache:1043196KB rss:5280KB rss_huge:0KB shmem:1042932KB mapped_file:132KB dirty:132KB writeback:0KB swap:0KB workingset_refault_anon:0KB workingset_refault_file:396KB workingset_activate_anon:0KB workingset_activate_file:0KB workingset_restore_anon:0KB workingset_restore_file:0KB workingset_nodereclaim:0KB inactive_anon:536316KB active_anon:511896KB inactive_file:156KB active_file:0KB unevictable:0KB
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: Tasks state (memory values in pages):
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456337] 0 2456337 242 1 28672 0 -998 pause
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456661] 0 2456661 634 22 45056 0 968 sleep
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456827] 0 2456827 14684 382 102400 0 968 nginx
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456828] 33 2456828 14766 457 106496 0 968 nginx
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456829] 33 2456829 14766 457 106496 0 968 nginx
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456830] 33 2456830 14766 457 106496 0 968 nginx
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2456831] 33 2456831 14766 457 106496 0 968 nginx
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2991826] 0 2991826 1116 165 49152 0 968 bash
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: [2992940] 0 2992940 903 272 45056 0 968 dd
Nov 12 12:46:50 iZ2zeeh81ypipbs4e866uzZ kernel: oom_reaper: reaped process 2456831 (nginx), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB
```

Mapping the container back to its cgroup with docker inspect:

```
# docker inspect 45c3345632cb | grep -i pid
"Pid": 2456661,
"PidMode": "",
"PidsLimit": null,
[root@iZ2ze***6uzZ ~]# more /proc/2456661/cgroup
12:memory:/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podc00e47e7_5c66_4885_a929_607663ed3cd4.slice/docker-45c3345632cb4d19698badde97941c43f6c3593f21d98a847049b5f03805b642.scope
...
```

Open question:

Collecting shmem data with a script showed that, from the start, the shmem was recorded in the pod's cgroup root directory:

```bash
#!/bin/bash
# Pod-level cgroup directory of the pod under observation (path redacted)
podbasedir=/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod***3551.slice
log=/root/shmem-leak-check.log

# Sample once a minute
for i in {1..86400}; do
  {
    date
    echo "$podbasedir has this docker dir"
    ls -l $podbasedir | grep docker
    echo "Find docker containers and write docker inspect name,the POD is pause"
    # docker-<id>.scope: bytes 8-15 are the first 8 characters of the container ID
    ls -l $podbasedir | grep docker | awk '{print $NF}' | cut -b 8-15 \
      | xargs -I {} docker inspect --format="{{json .Name}}" {}
    echo "Start record total memory.stat"
    # Pod-level totals: everything charged to the pod cgroup, children included
    grep -E "total_cache|total_rss|total_shmem|total_inactive_file" $podbasedir/memory.stat
    echo "Start record docker memory.stat"
    grep -E "total_cache|total_rss|total_shmem|total_inactive_file" $podbasedir/docker-*/memory.stat
  } >> "$log"
  sleep 60s
done
```

Collected output:

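The first total_shmem comes from the pod's cgroup directory, and the rest come from the docker cgroup directories. The pod-level total contains shmem, while none of the docker directories do. This differs from what the kernel reported at the actual OOM kill, where the shmem was attributed to a docker scope.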

```
Thu Nov 18 13:37:49 CST 2021
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod96bc10f9.slice has this docker dir
drwxr-xr-x 2 root root 0 Nov 17 19:42 docker-3ebefb627.scope
drwxr-xr-x 2 root root 0 Jul 14 17:28 docker-a32b86285.scope
find docker containers and write docker inspect name,the POD is pause
"/k8s_traden-prod_96bc17dc-f218-4e61-be05-c945fa8670f9_44"
"/k8s_POD_trade-c7dc-f218-4e61-be05-c945fa8670f9_0"
start record total memory.stat
total_cache 9075990528
total_rss 6361980928
total_rss_huge 0
total_shmem 9069502464
total_inactive_file 2592768
start record docker memory.stat
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-3eb2fc3dbd3c99edf0937a362fb627.scope/memory.stat:total_cache 1892352
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-3eb2fc3dbd3c99edf0937a362fb627.scope/memory.stat:total_rss 6373216256
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-3eb2fc3dbd3c99edf0937a362fb627.scope/memory.stat:total_rss_huge 5605687296
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-3eb2fc3dbd3c99edf0937a362fb627.scope/memory.stat:total_shmem 0
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-3eb2fc3dbd3c99edf0937a362fb627.scope/memory.stat:total_inactive_file 1892352
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-a32d946806ba0525a9af6cb9c86285.scope/memory.stat:total_cache 0
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-a32d946806ba0525a9af6cb9c86285.scope/memory.stat:total_rss 180224
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-a32d946806ba0525a9af6cb9c86285.scope/memory.stat:total_rss_huge 0
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-a32d946806ba0525a9af6cb9c86285.scope/memory.stat:total_shmem 0
/sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstab45fa8670f9.slice/docker-a32d946806ba0525a9af6cb9c86285.scope/memory.stat:total_inactive_file 0
```
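The comparison made by eye above can also be scripted. Below is a minimal sketch; the function name is hypothetical, and it assumes cgroup v1 and the docker-*.scope layout shown in the output.

It subtracts the children's total_shmem from the pod-level total_shmem. What remains is shmem charged to the pod cgroup root itself rather than to any live container.

```bash
#!/usr/bin/env bash
# Sketch: shmem (bytes) charged to a pod cgroup but to none of its docker-*.scope children.
# Usage: pod_orphan_shmem <pod cgroup dir>
pod_orphan_shmem() {
  local podbasedir=$1 total child_sum=0 f v
  # total_shmem at the pod level is hierarchical (includes all children)
  total=$(awk '$1 == "total_shmem" { print $2 }' "$podbasedir/memory.stat")
  for f in "$podbasedir"/docker-*/memory.stat; do
    [ -e "$f" ] || continue   # glob matched nothing
    v=$(awk '$1 == "total_shmem" { print $2 }' "$f")
    child_sum=$(( child_sum + ${v:-0} ))
  done
  echo $(( ${total:-0} - child_sum ))
}
```

Against the pod directory in the output above, it would print 9069502464. That is about 8.45 GiB of shmem belonging to no running container.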

We shared the reproduction steps with the customer, who then reproduced the issue on their side:

Final conclusion:

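When a pod hits OOM, the pod itself is not deleted and recreated; only the container is recreated. Neither the new container nor the OOM-killed container removes the content of the tmpfs, because an emptyDir is cleaned up only when the pod is deleted or the node reboots.

As a result, the new container's own docker cgroup directory records no shmem. The old container's shmem is moved to the pod's cgroup root directory and accounted there. That is why, when the OOM finally fires, the new container's directory shows comparatively little memory.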
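To check this from the node side, you can measure how much data a pod's emptyDir volumes still hold. The sketch below assumes the kubelet's default root directory, /var/lib/kubelet, under which emptyDir volumes live at pods/<pod-uid>/volumes/kubernetes.io~empty-dir/<volume-name>. Pass a different root if your kubelet runs with another --root-dir. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print "<volume-name> <size-in-KiB>" for every emptyDir volume of one pod.
# Usage: emptydir_usage <pod-uid> [kubelet-root-dir]
emptydir_usage() {
  local uid=$1 root=${2:-/var/lib/kubelet}
  local base="$root/pods/$uid/volumes/kubernetes.io~empty-dir"
  [ -d "$base" ] || { echo "no emptyDir volumes under $base" >&2; return 1; }
  local vol
  for vol in "$base"/*/; do
    [ -d "$vol" ] || continue
    # du -sk: total size of the volume in KiB; basename strips the trailing slash
    printf '%s %s\n' "$(basename "$vol")" "$(du -sk "$vol" | awk '{print $1}')"
  done
}
```

To use it:
1. Get the pod UID with `kubectl get pod <pod-name> -o jsonpath='{.metadata.uid}'`.
2. Run `emptydir_usage <uid>` on the node.

For a Memory-medium emptyDir, the size reported is tmpfs content, i.e. RAM that stays charged to the pod's memory cgroup.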

Extension: how to see what data is held in memory:

1. First check the pod's memory usage

2. Copy a large file into the pod njq7t; here I use the messages log

kubectl cp /var/log/messages my-nettools-6fb6864b8c-njq7t:/root

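Copying large files tends to fail (you can download them from inside the pod instead), and docker cp fails the same way, with the error below. The archive/tar "write too long" error generally means the file grew while it was being archived, which is likely for an actively written log like messages: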

```
# docker cp /var/log/messages c210654bd1f9:/root
ERRO[0006] Can't add file /var/log/messages to tar: archive/tar: write too long
```

3. Open the messages file inside the pod and search for various keywords; memory usage climbs visibly

4. Check the memory breakdown inside the pod

The unit here is bytes; divide by 1024 twice to get MB. Cache clearly takes up most of it:

```
~# cat /sys/fs/cgroup/memory/memory.stat
cache 442810368
rss 5812224
rss_huge 0
shmem 0
mapped_file 3649536
dirty 0
writeback 0
swap 0
workingset_refault_anon 0
workingset_refault_file 733962240
workingset_activate_anon 0
workingset_activate_file 506609664
workingset_restore_anon 0
workingset_restore_file 72720384
workingset_nodereclaim 0
pgpgin 416988
pgpgout 307447
pgfault 235191
pgmajfault 231
inactive_anon 5677056
active_anon 0
inactive_file 211652608
active_file 231276544
unevictable 0
hierarchical_memory_limit 1073741824
hierarchical_memsw_limit 1073741824
total_cache 442810368
total_rss 5812224
total_rss_huge 0
total_shmem 0
total_mapped_file 3649536
total_dirty 0
total_writeback 0
total_swap 0
total_workingset_refault_anon 0
total_workingset_refault_file 733962240
total_workingset_activate_anon 0
total_workingset_activate_file 506609664
total_workingset_restore_anon 0
total_workingset_restore_file 72720384
total_workingset_nodereclaim 0
total_pgpgin 416988
total_pgpgout 307447
total_pgfault 235191
total_pgmajfault 231
total_inactive_anon 5677056
total_active_anon 0
total_inactive_file 211652608
total_active_file 231276544
total_unevictable 0
```
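The divide-by-1024-twice conversion can be applied to several keys at once. Here is a small sketch; the function name and its default key list are hypothetical choices.

```bash
#!/usr/bin/env bash
# Sketch: print selected memory.stat fields converted from bytes to MiB (bytes / 1024 / 1024).
# Usage: memstat_mib <memory.stat file> [key ...]
memstat_mib() {
  local file=$1; shift
  local keys="${*:-cache rss shmem inactive_file active_file}"
  awk -v keys="$keys" '
    BEGIN { n = split(keys, want, " ") }
    { val[$1] = $2 }
    END { for (i = 1; i <= n; i++) printf "%s %.1f MiB\n", want[i], val[want[i]] / 1024 / 1024 }
  ' "$file"
}
```

On the output above, `memstat_mib /sys/fs/cgroup/memory/memory.stat cache rss` prints `cache 422.3 MiB` and `rss 5.5 MiB`, which confirms that the page cache dominates.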

5. So what data is actually held in this cache?

fincore can't capture it, and neither can pcstat.

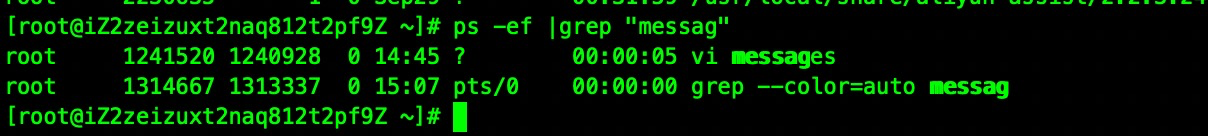

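Earlier I had opened the file with vi and run global keyword searches, which is what drove memory usage up. So the first step is to find vi's PID.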

Then dump its memory with the following script:

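```bash
# Dump each mapping of process $1 that is not a .so and has file offset 0
# into a file named <pid>_mem_<start-address>.bin in the current directory.
procdump() (
  cat /proc/$1/maps | grep -Fv ".so" | grep " 0 " | awk '{print $1}' | (
    IFS="-"
    while read a b; do
      # a/b are the hex start/end addresses; copy exactly (end - start) bytes
      dd if=/proc/$1/mem bs=$(getconf PAGESIZE) iflag=skip_bytes,count_bytes \
        skip=$(( 0x$a )) count=$(( 0x$b - 0x$a )) of="$1_mem_$a.bin"
    done
  )
)
```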

Output while dumping:

```
# procdump 1241520
dd: ‘/proc/1241520/mem’: cannot skip to specified offset
14+0 records in
14+0 records out
57344 bytes (57 kB) copied, 0.000247268 s, 232 MB/s
dd: ‘/proc/1241520/mem’: cannot skip to specified offset
226221+0 records in
226221+0 records out
926601216 bytes (927 MB) copied, 4.14441 s, 224 MB/s
......
dd: ‘/proc/1241520/mem’: cannot skip to specified offset
dd: error reading ‘/proc/1241520/mem’: Input/output error
0+0 records in
0+0 records out
0 bytes (0 B) copied, 0.000151461 s, nan kB/s
dd: ‘/proc/1241520/mem’: cannot skip to specified offset
2+0 records in
2+0 records out
8192 bytes (8.2 kB) copied, 0.00014037 s, 58.4 MB/s
```

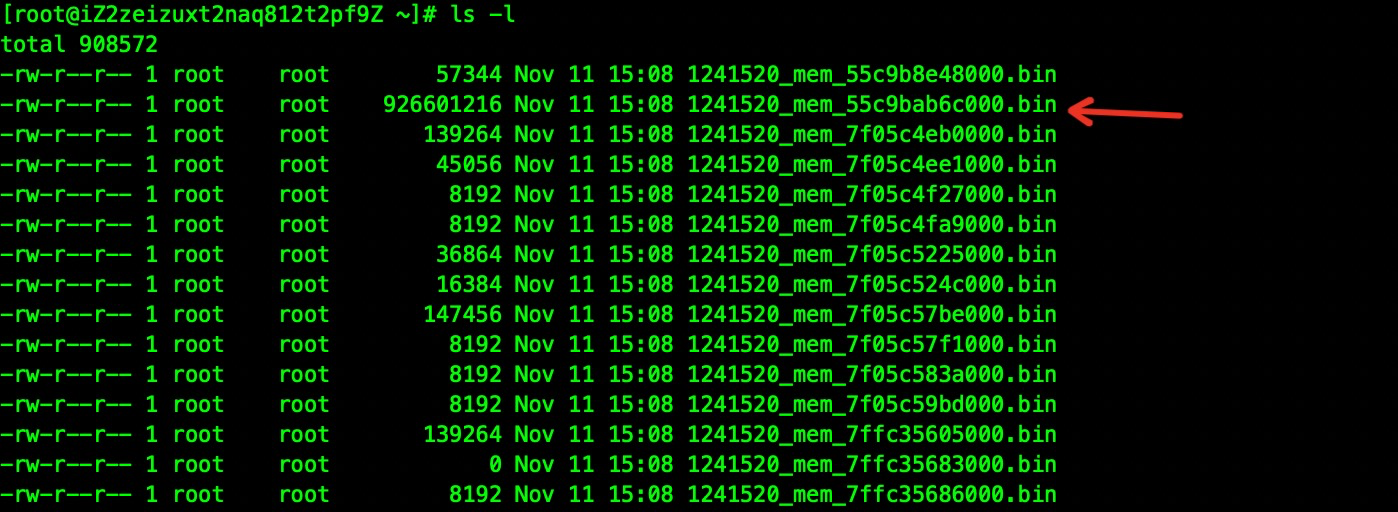

This generates a number of files in the current directory, as shown below. Let's see which one is the 900+ MB file.

Result:

The largest dump, over 900 MB, is all content from my messages file:

```
# hexdump -C -n 10000 1241520_mem_55c9bab6c000.bin |more
00000000  00 00 00 00 00 00 00 00  91 02 00 00 00 00 00 00  |................|
00000010  07 00 07 00 03 00 01 00  02 00 02 00 01 00 01 00  |................|
00000020  05 00 01 00 03 00 01 00  02 00 07 00 02 00 07 00  |................|
00000030  03 00 03 00 02 00 01 00  04 00 02 00 00 00 01 00  |................|
00000040  01 00 02 00 01 00 02 00  05 00 01 00 07 00 02 00  |................|
00000050  01 00 00 00 01 00 02 00  01 00 00 00 01 00 01 00  |................|
00000060  01 00 01 00 01 00 00 00  01 00 01 00 01 00 03 00  |................|
00000070  00 00 01 00 01 00 01 00  00 00 02 00 01 00 01 00  |................|
00000080  01 00 00 00 01 00 01 00  00 00 01 00 00 00 01 00  |................|
00000090  80 be ac c1 c9 55 00 00  60 87 b5 c2 c9 55 00 00  |.....U..`....U..|
000000a0  90 f6 b9 ba c9 55 00 00  f0 e0 e6 f1 c9 55 00 00  |.....U.......U..|
000000b0  b0 ef b9 ba c9 55 00 00  a0 b2 b7 ba c9 55 00 00  |.....U.......U..|
000000c0  e0 f8 dc ba c9 55 00 00  90 99 da ba c9 55 00 00  |.....U.......U..|
000000d0  00 34 da ba c9 55 00 00  d0 e8 b9 ba c9 55 00 00  |.4...U.......U..|
000000e0  00 75 b9 ba c9 55 00 00  10 05 c6 f1 c9 55 00 00  |.u...U.......U..|
000000f0  80 bb b9 ba c9 55 00 00  00 85 db ba c9 55 00 00  |.....U.......U..|
00000100  00 86 b9 ba c9 55 00 00  50 7b b9 ba c9 55 00 00  |.....U..P{...U..|
00000110  e0 f4 dc ba c9 55 00 00  90 f7 b9 ba c9 55 00 00  |.....U.......U..|
00000120  00 d7 b9 ba c9 55 00 00  e0 95 ea f1 c9 55 00 00  |.....U.......U..|
00000130  f0 81 da ba c9 55 00 00  30 56 e9 f1 c9 55 00 00  |.....U..0V...U..|
00000140  00 00 00 00 00 00 00 00  50 5f dc ba c9 55 00 00  |........P_...U..|
00000150  40 f7 dc ba c9 55 00 00  e0 60 dc ba c9 55 00 00  |@....U...`...U..|
00000160  c0 e9 e6 f1 c9 55 00 00  a0 dc b7 ba c9 55 00 00  |.....U.......U..|
00000170  a0 c2 b6 ba c9 55 00 00  50 e4 e6 f1 c9 55 00 00  |.....U..P....U..|
00000180  70 0c db ba c9 55 00 00  b0 ba dd ba c9 55 00 00  |p....U.......U..|
00000190  c0 b4 bb ba c9 55 00 00  00 00 00 00 00 00 00 00  |.....U..........|
000001a0  f0 ce eb f1 c9 55 00 00  a0 07 da ba c9 55 00 00  |.....U.......U..|
000001b0  a0 57 e9 f1 c9 55 00 00  00 00 00 00 00 00 00 00  |.W...U..........|
000001c0  30 1d d2 ba c9 55 00 00  00 5a e9 f1 c9 55 00 00  |0....U...Z...U..|
000001d0  80 fb c5 f1 c9 55 00 00  e0 f2 df f1 c9 55 00 00  |.....U.......U..|
000001e0  d0 99 c7 ba c9 55 00 00  00 00 00 00 00 00 00 00  |.....U..........|
000001f0  30 d1 eb f1 c9 55 00 00  b0 36 cf ba c9 55 00 00  |0....U...6...U..|
00000200  f0 8a da ba c9 55 00 00  40 e1 e6 f1 c9 55 00 00  |.....U..@....U..|
00000210  00 00 00 00 00 00 00 00  e0 41 dc ba c9 55 00 00  |.........A...U..|
00000220  b0 dd e6 f1 c9 55 00 00  20 fe c5 f1 c9 55 00 00  |.....U.. ....U..|
00000230  00 00 00 00 00 00 00 00  d0 0b da ba c9 55 00 00  |.............U..|
00000240  40 e6 e6 f1 c9 55 00 00  e0 05 c6 f1 c9 55 00 00  |@....U.......U..|
00000250  70 01 c6 f1 c9 55 00 00  00 00 00 00 00 00 00 00  |p....U..........|
00000260  b0 06 bc ba c9 55 00 00  10 d4 eb f1 c9 55 00 00  |.....U.......U..|
00000270  00 00 00 00 00 00 00 00  b0 4f e9 f1 c9 55 00 00  |.........O...U..|
00000280  00 00 00 00 00 00 00 00  80 97 ca bb c9 55 00 00  |.............U..|
00000290  00 00 00 00 00 00 00 00  e1 01 00 00 00 00 00 00  |................|
000002a0  5c 2c 22 e6 cc 55 00 00  10 c0 b6 ba c9 55 00 00  |\,"..U.......U..|
000002b0  69 5a 32 7a 65 69 7a 75  78 74 32 6e 61 71 38 31  |iZ2zeizuxt2naq81|
000002c0  32 74 32 70 66 39 5a 20  6b 75 62 65 6c 65 74 3a  |2t2pf9Z kubelet:|
000002d0  20 49 31 31 30 37 20 31  38 3a 34 36 3a 34 35 2e  | I1107 18:46:45.|
000002e0  35 35 33 37 35 33 20 20  20 20 20 35 31 34 20 6b  |553753     514 k|
000002f0  75 62 65 72 75 6e 74 69  6d 65 5f 6d 61 6e 61 67  |uberuntime_manag|
00000300  65 72 2e 67 6f 3a 36 35  30 5d 20 63 6f 6d 70 75  |er.go:650] compu|
00000310  74 65 50 6f 64 41 63 74  69 6f 6e 73 20 67 6f 74  |tePodActions got|
00000320  20 7b 4b 69 6c 6c 50 6f  64 3a 66 61 6c 73 65 20  | {KillPod:false |
00000330  43 72 65 61 74 65 53 61  6e 64 62 6f 78 3a 66 61  |CreateSandbox:fa|
00000340  6c 73 65 20 53 61 6e 64  62 6f 78 49 44 3a 31 36  |lse SandboxID:16|
00000350  39 65 65 66 37 33 36 38  38 31 65 35 39 36 36 35  |9eef736881e59665|
00000360  39 63 66 38 34 34 31 33  30 63 39 37 63 34 34 30  |9cf844130c97c440|
00000370  62 38 36 36 64 30 66 34  30 33 38 38 63 36 35 62  |b866d0f40388c65b|
00000380  66 61 63 66 62 31 31 31  65 64 65 33 37 39 20 41  |facfb111ede379 A|
00000390  74 74 65 6d 70 74 3a 32  20 4e 65 78 74 49 6e 69  |ttempt:2 NextIni|
000003a0  74 43 6f 6e 74 61 69 6e  65 72 54 6f 53 74 61 72  |tContainerToStar|
000003b0  74 3a 6e 69 6c 20 43 6f  6e 74 61 69 6e 65 72 73  |t:nil Containers|
000003c0  54 6f 53 74 61 72 74 3a  5b 5d 20 43 6f 6e 74 61  |ToStart:[] Conta|
000003d0  69 6e 65 72 73 54 6f 4b  69 6c 6c 3a 6d 61 70 5b  |inersToKill:map[|
000003e0  5d 20 45 70 68 65 6d 65  72 61 6c 43 6f 6e 74 61  |] EphemeralConta|
000003f0  69 6e 65 72 73 54 6f 53  74 61 72 74 3a 5b 5d 7d  |inersToStart:[]}|
00000400  20 66 6f 72 20 70 6f 64  20 22 6b 72 75 69 73 65  | for pod "kruise|
00000410  2d 63 6f 6e 74 72 6f 6c  6c 65 72 2d 6d 61 6e 61  |-controller-mana|
00000420  67 65 72 2d 37 62 64 66  35 34 36 37 64 38 2d 67  |ger-7bdf5467d8-g|
00000430  77 76 38 74 5f 6b 72 75  69 73 65 2d 73 79 73 74  |wv8t_kruise-syst|
00000440  65 6d 28 66 37 30 38 37  63 32 36 2d 37 33 37 64  |em(f7087c26-737d|
00000450  2d 34 64 30 36 2d 62 34  34 30 2d 32 63 66 64 63  |-4d06-b440-2cfdc|
00000460  36 30 30 31 32 35 39 29  22 00 00 00 00 00 00 00  |6001259)".......|
00000470  60 3f 22 c5 05 7f 00 00  81 00 00 00 00 00 00 00  |`?".............|
00000480  69 66 20 67 65 74 6c 69  6e 65 28 31 29 20 3d 7e  |if getline(1) =~|
00000490  20 27 5e 46 72 6f 6d 20  5b 30 2d 39 61 2d 66 5d  | '^From [0-9a-f]|
000004a0  5c 7b 34 30 5c 7d 20 4d  6f 6e 20 53 65 70 20 31  |\{40\} Mon Sep 1|
000004b0  37 20 30 30 3a 30 30 3a  30 30 20 32 30 30 31 24  |7 00:00:00 2001$|
000004c0  27 20 7c 20 20 20 73 65  74 66 20 67 69 74 73 65  |' |   setf gitse|
000004d0  6e 64 65 6d 61 69 6c 20  7c 20 65 6c 73 65 20 7c  |ndemail | else ||
```
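Paging through raw hexdump output is slow. Pulling out only the readable text, such as the kubelet log line above, makes a dump easier to skim. The `strings` utility already does this.

As a minimal sketch of the same idea, the Python below prints every run of at least `MIN_LEN` printable ASCII bytes from a dump file. The threshold of 8, the function names, and the script name `dump_strings.py` are illustrative assumptions, not part of procdump.

```python
from __future__ import annotations

import re
import sys

# Minimum run length to report (an illustrative choice, like `strings -n 8`)
MIN_LEN = 8
# A run of printable ASCII bytes (space 0x20 through tilde 0x7e)
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{%d,}" % MIN_LEN)


def extract_strings(data: bytes) -> list[str]:
    """Return every printable ASCII run of at least MIN_LEN bytes in a raw dump."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def main(path: str) -> None:
    # Read the whole dump; procdump writes one file per memory segment
    with open(path, "rb") as f:
        data = f.read()
    for s in extract_strings(data):
        print(s)


# Only run when a dump path is given on the command line
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```

For example, `python3 dump_strings.py 1241520_mem_55c9bab6c000.bin | grep kubelet` would list the kubelet log fragments left in that segment.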

Now let's come back to the memory occupied by tmpfs: can a memory dump capture it?

Files written to a tmpfs directory cannot be found by running procdump on the ECS node against the pod's PIDs. So let's also try running fincore inside the pod.

```
# kubectl exec -it my-nettools-primary-8584bffdf5-x97rx -- bash
root@my-nettools-primary-8584bffdf5-x97rx:/# ls -l /memtest/
total 921600
-rw-r--r-- 1 root root 943718400 Nov 18 13:54 900m
root@my-nettools-primary-8584bffdf5-x97rx:/#
root@my-nettools-primary-8584bffdf5-x97rx:/#
root@my-nettools-primary-8584bffdf5-x97rx:/# cd ..
root@my-nettools-primary-8584bffdf5-x97rx:/# exit
exit
[root@iZ2zeeh81ypipbs4e866uzZ ~]# docker ps |grep my-n
e00a11510727   fa7b0d7ccb2a   "sleep 360000"   3 days ago   Up 3 days   k8s_my-nettools_my-nettools-primary-8584bffdf5-x97rx_default_a9d684a4-53fe-4114-b8d0-8f138b933551_1
6bfc5310e773   registry-vpc.cn-beijing.aliyuncs.com/acs/pause:3.2   "/pause"   6 days ago   Up 6 days   k8s_POD_my-nettools-primary-8584bffdf5-x97rx_default_a9d684a4-53fe-4114-b8d0-8f138b933551_0
[root@iZ2zeeh81ypipbs4e866uzZ ~]# docker inspect e00a11510727 |grep -i pid
            "Pid": 3747629,
            "PidMode": "",
            "PidsLimit": null,
[root@iZ2zeeh81ypipbs4e866uzZ ~]# pstree -sp 3747629
systemd(1)───containerd(614)───containerd-shim(3747610)───sleep(3747629)───nginx(3747717)─┬─nginx(3747718)
                                                                                          ├─nginx(3747719)
                                                                                          ├─nginx(3747720)
                                                                                          └─nginx(3747721)
# procdump 3747610
# procdump 3747629
# ls
3747610_mem_009d6000.bin      3747610_mem_7ffcb17b2000.bin  3747610_mem_c000400000.bin    3747629_mem_7f0de51c2000.bin  3747629_mem_7ffccfdc3000.bin
3747610_mem_7fce67b20000.bin  3747610_mem_7ffcb17b5000.bin  3747629_mem_55a0c9624000.bin  3747629_mem_7f0de51cf000.bin  3747629_mem_7ffccfdc6000.bin
3747610_mem_7ffcb1771000.bin  3747610_mem_c000000000.bin    3747629_mem_7f0de4fdc000.bin  3747629_mem_7ffccfd72000.bin
[root@iZ2zeeh81ypipbs4e866uzZ dump]# for i in `ls`;do hexdump -C $i |grep 900m ;done
[root@iZ2zeeh81ypipbs4e866uzZ dump]#
```
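The `hexdump -C $i | grep 900m` loop has one caveat. grep only sees one 16-byte row of the ASCII column at a time. A string that straddles a row boundary, for example one starting at byte 14 of a row, will therefore never match.

A minimal Python sketch that searches the raw bytes of every dump file avoids this. The glob pattern, function names and script name `scan_dumps.py` are illustrative assumptions.

```python
from __future__ import annotations

import glob
import sys


def find_pattern(path: str, pattern: bytes) -> list[int]:
    """Return every byte offset at which `pattern` occurs in the file at `path`."""
    with open(path, "rb") as f:
        data = f.read()
    offsets = []
    start = data.find(pattern)
    while start != -1:
        offsets.append(start)
        # Continue one byte later so overlapping matches are also reported
        start = data.find(pattern, start + 1)
    return offsets


def scan_dumps(glob_pattern: str, pattern: bytes) -> dict[str, list[int]]:
    """Search all files matching `glob_pattern`; only files with hits are returned."""
    hits = {}
    for path in sorted(glob.glob(glob_pattern)):
        offsets = find_pattern(path, pattern)
        if offsets:
            hits[path] = offsets
    return hits


# Usage: python3 scan_dumps.py '*_mem_*.bin' 900m
if __name__ == "__main__" and len(sys.argv) > 2:
    results = scan_dumps(sys.argv[1], sys.argv[2].encode())
    for path, offsets in results.items():
        print(path, [hex(o) for o in offsets])
    if not results:
        print("no match")
```

Reading each file whole keeps the sketch short. For very large segments, a chunked search that keeps `len(pattern) - 1` bytes of overlap between chunks would use less memory.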

fincore scan results:

```
./fincore-detection.sh --pages=false --summarize --only-cached /memtest/*
filename size total pages cached pages cached size cached percentage
...
/usr/bin/bash 1404744 343 343 1404928 100.000000
...
/usr/bin/bash 1404744 343 343 1404928 100.000000
0
/tmp/cache.pids 34 1 1 4096 100.000000
0
/root/fincore-detection.sh 957 1 1 4096 100.000000
...
/usr/sbin/nginx 1199248 293 293 1200128 100.000000
...
/var/log/nginx/access.log 7598726 1856 1856 7602176 100.000000
---
total cached size: 20422656
```

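fincore cannot detect the pod's tmpfs memory usage either. tmpfs pages are still charged to the pod's memory cgroup, where they are counted under `shmem` (part of `cache`) in memory.stat, even when no process maps them. So the pod-level summary from cAdvisor, which is built from those cgroup statistics, is the most accurate place to look!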
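The cgroup v1 `memory.stat` file can also be summarized directly to see where tmpfs shows up. The sketch below is an illustration, not how cAdvisor is implemented. It reports three values:

- `total_rss + total_cache`, the RSS+CACHE sum the kernel documentation recommends over `usage_in_bytes`.
- `total_shmem`, the tmpfs and shared memory charged to the cgroup.
- A working-set estimate that subtracts `total_inactive_file`. This mirrors cAdvisor's definition (usage minus inactive file), but with RSS+CACHE standing in for usage.

Inside a container, `/sys/fs/cgroup/memory/memory.stat` covers only that container. For a pod-level view, the path would have to point at the pod's cgroup directory on the node, whose exact location depends on the cgroup driver and QoS class. The script name `memstat_summary.py` is hypothetical.

```python
from __future__ import annotations

import sys


def parse_memory_stat(text: str) -> dict[str, int]:
    """Parse the 'key value' lines of a cgroup v1 memory.stat file."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    return stats


def summarize(stats: dict[str, int]) -> dict[str, int]:
    """Derive a few memory figures (bytes) from hierarchical total_* counters."""
    rss_cache = stats["total_rss"] + stats["total_cache"]
    return {
        # RSS+CACHE, as the kernel docs suggest instead of usage_in_bytes
        "rss_plus_cache": rss_cache,
        # tmpfs / shared memory pages charged to this cgroup (a subset of cache)
        "shmem": stats["total_shmem"],
        # cAdvisor-style working set, approximated with rss+cache in place of usage
        "working_set_estimate": max(rss_cache - stats["total_inactive_file"], 0),
    }


# Usage: python3 memstat_summary.py /sys/fs/cgroup/memory/memory.stat
if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        for key, value in summarize(parse_memory_stat(f.read())).items():
            print(f"{key:22s} {value / 2**20:10.1f} MiB")
```

The tmpfs file lives in `shmem` and on the anon LRU lists rather than in `inactive_file`. It therefore is not subtracted out of the working-set estimate.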

I hope this post helps you have fun the next time you run into a memory problem!