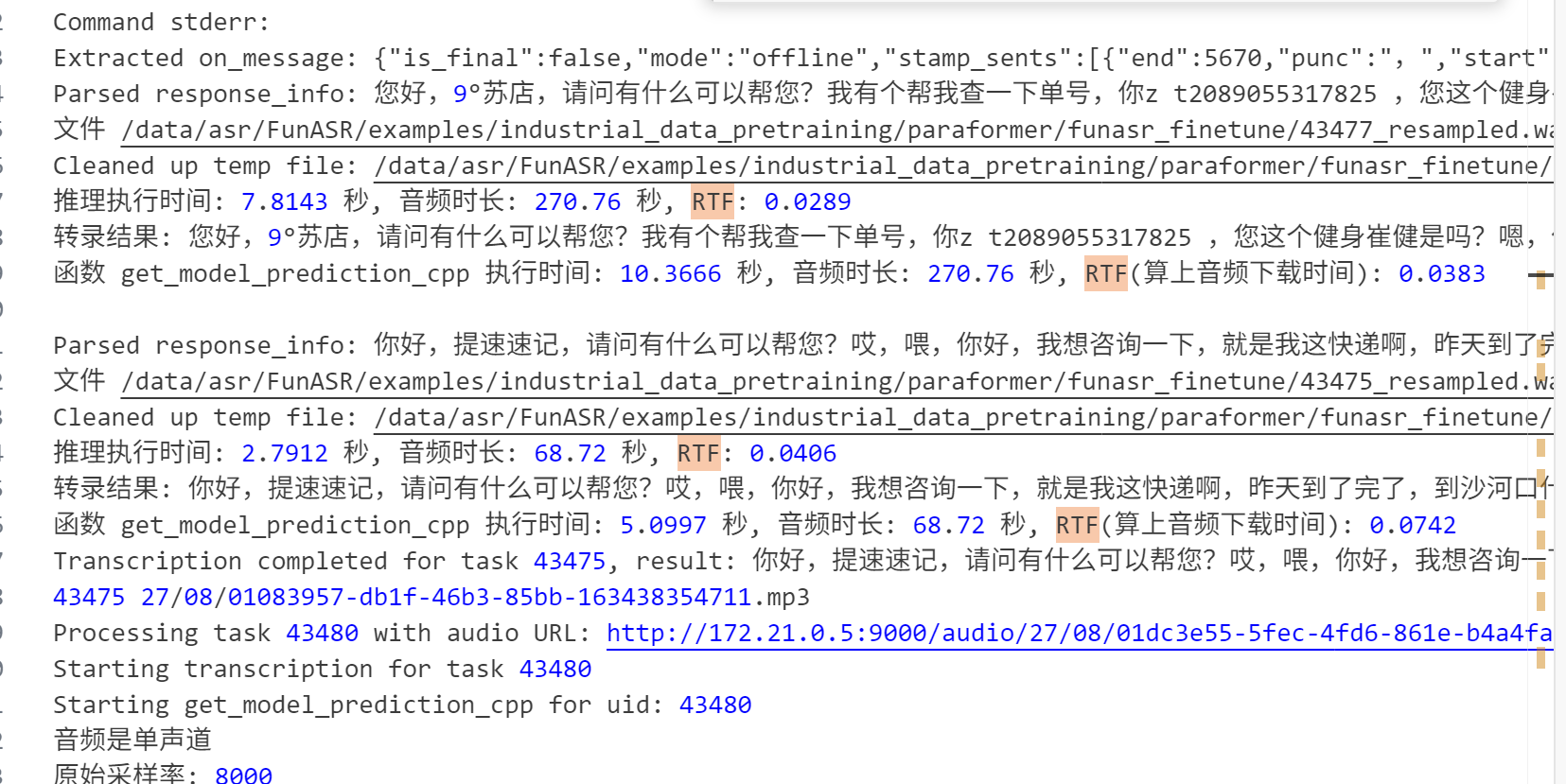

The RTF does not reach 0.0008; it does not even reach 0.0076, and stays at around 0.02-0.04.
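
For anyone comparing numbers, here is a minimal sketch of how I understand RTF (processing time divided by audio duration). The function names are my own and are not part of FunASR. It is also an assumption that the reference figures were measured as aggregate throughput over many concurrent streams; if so, a single-stream measurement would not be directly comparable.

```python
from typing import Sequence


def rtf(processing_seconds: float, audio_seconds: float) -> float:
    """Real-time factor: time spent decoding divided by audio duration."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def aggregate_rtf(wall_seconds: float, audio_durations: Sequence[float]) -> float:
    """RTF for a batch of files decoded concurrently within wall_seconds.

    Assumption: the benchmark divides total wall-clock time by the
    summed duration of all audio processed in that window.
    """
    return rtf(wall_seconds, sum(audio_durations))


def slowdown(observed_rtf: float, target_rtf: float) -> float:
    """How many times slower the observed RTF is than the target RTF."""
    return observed_rtf / target_rtf


if __name__ == "__main__":
    # Compare the observed range (0.02-0.04) against the two reference values.
    for observed in (0.02, 0.04):
        for target in (0.0008, 0.0076):
            print(f"observed={observed} target={target} "
                  f"slowdown={slowdown(observed, target):.1f}x")
```

By this measure, the observed 0.02-0.04 is a far larger gap against 0.0008 than against 0.0076, so it matters which reference figure applies to this setup.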

The deployment script is:

nohup bash run_server.sh \
--download-model-dir /workspace/models \
--vad-dir damo/speech_fsmn_vad_zh-cn-16k-common-onnx \
--model-dir damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch \
--punc-dir damo/punc_ct-transformer_cn-en-common-vocab471067-large-onnx \
--lm-dir damo/speech_ngram_lm_zh-cn-ai-wesp-fst \
--itn-dir thuduj12/fst_itn_zh \
--decoder-thread-num 20 \
--io-thread-num 200 \
--model-thread-num 10 \
--hotword /workspace/models/hotwords.txt > run_server1.log 2>&1

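GPU is enabled in run_server.sh, and the C++ build was also configured with -DGPU=ON. GPU monitoring looks normal and the logs look normal as well, but actual inference speed is off by orders of magnitude.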

Key log output:

I20250528 01:48:59.989689 255255 websocket-server.cpp:30] using TLS mode: Mozilla Intermediate

I20250528 01:49:00.036312 255255 fsmn-vad.cpp:58] Successfully load model from /workspace/models/damo/speech_fsmn_vad_zh-cn-16k-common-onnx/model_quant.onnx

I20250528 01:49:00.060171 255255 paraformer-torch.cpp:41] CUDA is available, running on GPU

I20250528 01:49:05.454563 255255 paraformer-torch.cpp:52] Successfully load model from /workspace/models/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch/model_blade.torchscript

2025-05-28 01:49:10.769925: I external/org_tensorflow/tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:424] Loaded cuDNN version 8302

I20250528 01:49:35.871071 255255 paraformer-torch.cpp:75] Successfully load lm file /workspace/models/damo/speech_ngram_lm_zh-cn-ai-wesp-fst/TLG.fst

I20250528 01:49:39.386749 255255 ct-transformer.cpp:21] Successfully load model from /workspace/models/damo/punc_ct-transformer_cn-en-common-vocab471067-large-onnx/model_quant.onnx

I20250528 01:49:43.487655 255255 tokenizer.cpp:40] Successfully load file from /workspace/models/damo/punc_ct-transformer_cn-en-common-vocab471067-large-onnx/jieba.c.dict, /workspace/models/damo/punc_ct-transformer_cn-en-common-vocab471067-large-onnx/jieba_usr_dict

I20250528 01:49:43.532100 255255 tokenizer.cpp:48] Successfully load model from /workspace/models/damo/punc_ct-transformer_cn-en-common-vocab471067-large-onnx/jieba.hmm

I20250528 01:49:43.544350 255255 itn-processor.cpp:33] Successfully load model from /workspace/models/thuduj12/fst_itn_zh/zh_itn_tagger.fst

I20250528 01:49:43.547955 255255 itn-processor.cpp:35] Successfully load model from /workspace/models/thuduj12/fst_itn_zh/zh_itn_verbalizer.fst

I20250528 01:49:43.547997 255255 websocket-server.cpp:423] model successfully inited

I20250528 01:49:43.548009 255255 websocket-server.cpp:425] initAsr run check_and_clean_connection

I20250528 01:49:43.548147 255255 websocket-server.cpp:428] initAsr run check_and_clean_connection finished

I20250528 01:49:43.548161 255255 funasr-wss-server.cpp:535] decoder-thread-num: 20

I20250528 01:49:43.548171 255255 funasr-wss-server.cpp:536] io-thread-num: 200

I20250528 01:49:43.548182 255255 funasr-wss-server.cpp:537] model-thread-num: 10