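Introduction to Pod Controllers

The Pod is the smallest manageable unit in Kubernetes. Depending on how they are created, Pods fall into two categories:

- Standalone Pods: Pods created directly, without a controller. Once such a Pod is deleted it is gone and will not be recreated.

- Controller-managed Pods: Pods created through a controller. If such a Pod is deleted, the controller automatically creates a replacement.

What is a Pod controller

A Pod controller is an intermediate layer that manages Pods. With a Pod controller you only declare how many Pods of what kind you want; the controller creates Pods that meet that description and keeps every Pod in the desired state. If a Pod fails at runtime, the controller replaces or reschedules it according to the configured policy.

Kubernetes has many types of Pod controller, each suited to particular scenarios. The common ones are:

- ReplicationController: the original Pod controller; now legacy and superseded by ReplicaSet

- ReplicaSet: keeps the number of replicas at the desired value, and supports scaling the Pod count and changing the image version in its Pod template

- Deployment: controls Pods by managing ReplicaSets, and supports rolling updates and version rollback

- Horizontal Pod Autoscaler: automatically adjusts the number of Pods horizontally based on observed load, scaling out at peaks and back in when load drops

- DaemonSet: runs exactly one replica on each selected Node in the cluster; typically used for daemon-style tasks

- Job: the Pods it creates exit as soon as their task completes and are not restarted or recreated; used for one-off tasks

- CronJob: runs Pods on a schedule for periodic tasks that do not need to keep running in the background

- StatefulSet: manages stateful applications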

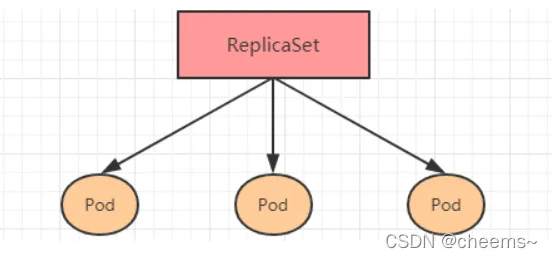

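ReplicaSet (RS)

RS overview

The main job of a ReplicaSet is to keep a specified number of Pods running. It continuously watches the state of those Pods and replaces a Pod as soon as one fails. It also supports scaling the number of Pods and changing the image version in the Pod template. Note that a template change only applies to Pods created afterwards; Pods that are already running keep their old image until they are recreated.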
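The "keep the actual state equal to the desired state" behaviour can be pictured as a reconcile loop. The following is a minimal Python sketch of that idea, not the real controller code: the `Pod` class, the `reconcile` function and the numeric name suffixes are hypothetical, and a real ReplicaSet works through the API server rather than on an in-memory list.

```python
from dataclasses import dataclass
from itertools import count
from typing import List


@dataclass
class Pod:
    name: str
    failed: bool = False


_ids = count(1)  # hypothetical stand-in for the random name suffix


def reconcile(desired: int, pods: List[Pod], owner: str = "rs-controller") -> List[Pod]:
    """Return the Pod list after one reconcile pass (simplified model)."""
    # Failed Pods do not count towards the replica total.
    active = [p for p in pods if not p.failed]
    # Too few Pods: create replacements from the template.
    while len(active) < desired:
        active.append(Pod(name=f"{owner}-{next(_ids):05d}"))
    # Too many Pods: delete the surplus.
    return active[:desired]


pods = reconcile(3, [])            # initial creation: 3 Pods
pods[0].failed = True              # simulate one Pod failing
pods = reconcile(3, pods)          # the failed Pod is replaced
scaled_down = reconcile(1, pods)   # replicas changed from 3 to 1
print([p.name for p in pods], len(scaled_down))
```

The same function covers self-healing and scaling because both are just "make the count match", which is why the manifest only needs to state the desired number.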

The ReplicaSet manifest:

    apiVersion: apps/v1 # API version
    kind: ReplicaSet # resource type
    metadata: # metadata
      name: # ReplicaSet name
      namespace: # namespace it belongs to
      labels: # labels
        controller: rs
    spec: # specification
      replicas: 3 # number of replicas
      selector: # selector; specifies which Pods this controller manages
        matchLabels: # label-matching rules
          app: nginx-pod
        matchExpressions: # expression-matching rules
          - { key: app, operator: In, values: [ nginx-pod ] }
      template: # template used to create Pod replicas when there are too few
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80

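The new configuration items to understand here are the following fields under spec:

- replicas: the number of replicas, that is, how many Pods this ReplicaSet creates; defaults to 1

- selector: the selector, which ties the Pod controller to its Pods using the Label Selector mechanism: labels are defined on the Pod template and a selector is defined on the controller, which determines the Pods the controller manages

- template: the template the controller uses to create Pods; its content is the Pod definition covered in the earlier Pod article of this series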
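To make the selector rules concrete, here is a small Python sketch of how `matchLabels` and `matchExpressions` could be evaluated against a Pod's labels. It is an illustration only: `selector_matches` is a hypothetical helper, and it assumes the usual semantics that all requirements must hold together and that the operators are `In`, `NotIn`, `Exists` and `DoesNotExist`.

```python
from typing import Dict, List, Optional


def selector_matches(
    labels: Dict[str, str],
    match_labels: Optional[Dict[str, str]] = None,
    match_expressions: Optional[List[dict]] = None,
) -> bool:
    """Return True if the labels satisfy every requirement of the selector."""
    # matchLabels: every key/value pair must be present on the Pod.
    for key, value in (match_labels or {}).items():
        if labels.get(key) != value:
            return False
    # matchExpressions: every expression must hold as well.
    for expr in match_expressions or []:
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In":
            ok = key in labels and labels[key] in values
        elif op == "NotIn":
            ok = key not in labels or labels[key] not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


pod_labels = {"app": "nginx-pod"}
print(selector_matches(
    pod_labels,
    match_labels={"app": "nginx-pod"},
    match_expressions=[{"key": "app", "operator": "In", "values": ["nginx-pod"]}],
))
```

With the manifest above, a Pod labelled `app: nginx-pod` satisfies both rules, so the ReplicaSet counts it as one of its replicas.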

Creating and deleting a ReplicaSet

Create the file rs-controller.yaml with the following content:

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: rs-controller
      namespace: dev
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx

Create the ReplicaSet

    # Create the ReplicaSet
    [root@master k8sYamlForCSDN]# kubectl apply -f rs-controller.yaml
    replicaset.apps/rs-controller created

    # Inspect the ReplicaSet
    # DESIRED: desired number of replicas
    # CURRENT: current number of replicas
    # READY: number of replicas ready to serve
    [root@master k8sYamlForCSDN]# kubectl get rs rs-controller -n dev -o wide
    NAME            DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES   SELECTOR
    rs-controller   3         3         3       75s   nginx        nginx    app=nginx-pod

    # List the Pods created by this controller
    # Each Pod name is the controller name followed by a random -xxxxx suffix
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                  READY   STATUS    RESTARTS   AGE
    rs-controller-rr598   1/1     Running   0          47s
    rs-controller-tmfd7   1/1     Running   0          47s
    rs-controller-xgrst   1/1     Running   0          47s

Delete the ReplicaSet

    # Only the first method is recommended; the second and third are for reference
    # 1. Delete using the YAML file (recommended)
    [root@master k8sYamlForCSDN]# kubectl delete -f rs-controller.yaml
    replicaset.apps "rs-controller" deleted

    # 2. kubectl delete removes the ReplicaSet together with the Pods it manages
    # Older kubectl versions first scaled the ReplicaSet to 0 replicas and waited for its Pods to be deleted before deleting the object;
    # newer versions leave the Pod cleanup to the garbage collector
    [root@master k8sYamlForCSDN]# kubectl delete rs rs-controller -n dev
    replicaset.apps "rs-controller" deleted
    [root@master k8sYamlForCSDN]# kubectl get pod -n dev
    No resources found in dev namespace.

    # 3. To delete only the ReplicaSet object and leave its Pods running, add --cascade=orphan to kubectl delete
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev
    NAME            DESIRED   CURRENT   READY   AGE
    rs-controller   6         6         3       15s
    [root@master k8sYamlForCSDN]# kubectl delete rs rs-controller -n dev --cascade=orphan
    replicaset.apps "rs-controller" deleted
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev
    No resources found in dev namespace.
    [root@master k8sYamlForCSDN]# kubectl get pod -n dev
    NAME                  READY   STATUS    RESTARTS   AGE
    rs-controller-9h5zd   1/1     Running   0          38s
    rs-controller-jk4h6   1/1     Running   0          38s
    rs-controller-lmlwn   1/1     Running   0          38s

Scaling

    # Method 1
    # Edit the YAML file, change replicas from 3 to 1, and apply it again; the ReplicaSet is updated
    [root@master k8sYamlForCSDN]# kubectl apply -f rs-controller.yaml
    replicaset.apps/rs-controller created
    [root@master k8sYamlForCSDN]# vi rs-controller.yaml
    [root@master k8sYamlForCSDN]# kubectl apply -f rs-controller.yaml
    replicaset.apps/rs-controller configured

    # Checking right after the command finishes shows 2 Pods already terminating
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                  READY   STATUS        RESTARTS   AGE
    rs-controller-h654s   0/1     Terminating   0          18s
    rs-controller-l67tb   0/1     Terminating   0          18s
    rs-controller-s4g7b   1/1     Running       0          18s

    # A moment later only 1 is left
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                  READY   STATUS    RESTARTS   AGE
    rs-controller-s4g7b   1/1     Running   0          81s

    # Method 2
    # Edit the ReplicaSet and set spec.replicas to 2; note that this does not change the number in the YAML file
    [root@master k8sYamlForCSDN]# kubectl edit rs rs-controller -n dev
    replicaset.apps/rs-controller edited
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                  READY   STATUS    RESTARTS   AGE
    rs-controller-9mf77   1/1     Running   0          26s
    rs-controller-s4g7b   1/1     Running   0          3m23s

    # Method 3
    # Use the scale command with --replicas=n to set the target count directly; this does not change the number in the YAML file either
    [root@master k8sYamlForCSDN]# kubectl scale rs rs-controller -n dev --replicas=3
    replicaset.apps/rs-controller scaled
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                  READY   STATUS              RESTARTS   AGE
    rs-controller-7d9wf   0/1     ContainerCreating   0          6s
    rs-controller-9mf77   1/1     Running             0          119s
    rs-controller-s4g7b   1/1     Running             0          4m56s

Image upgrade

    # Note: a ReplicaSet applies a changed template only to Pods it creates later;
    # Pods that are already running keep their image until they are replaced

    # Method 1 (recommended)
    # Edit the YAML file, change image from nginx to nginx:1.17.1, and apply it again; the ReplicaSet is updated
    [root@master k8sYamlForCSDN]# vi rs-controller.yaml
    [root@master k8sYamlForCSDN]# kubectl apply -f rs-controller.yaml
    replicaset.apps/rs-controller configured
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev -o wide
    NAME            DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES
    rs-controller   1         1         1       6m42s   nginx        nginx:1.17.1

    # Method 2
    # Edit the ReplicaSet and change image to nginx:1.17.2; this does not change the YAML file
    [root@master k8sYamlForCSDN]# kubectl edit rs rs-controller -n dev
    replicaset.apps/rs-controller edited
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev -o wide
    NAME            DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES
    rs-controller   1         1         1       8m41s   nginx        nginx:1.17.2

    # Method 3: the same can be done with a command
    # kubectl set image rs <rs-name> <container>=<image:version> -n <namespace>
    [root@master k8sYamlForCSDN]# kubectl set image rs rs-controller -n dev nginx=nginx:1.17.3
    replicaset.apps/rs-controller image updated
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev -o wide
    NAME            DESIRED   CURRENT   READY   AGE     CONTAINERS   IMAGES
    rs-controller   1         1         1       9m50s   nginx        nginx:1.17.3

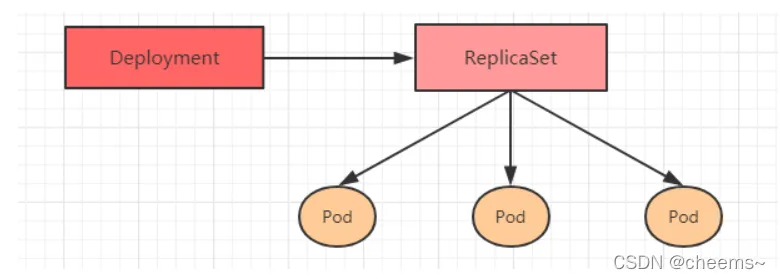

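Deployment (Deploy)

Deployment overview

To better solve the problem of service orchestration, Kubernetes introduced the Deployment controller in v1.2. This controller does not manage Pods directly; it manages them indirectly through ReplicaSets. In other words, a Deployment manages ReplicaSets, and a ReplicaSet manages Pods, which makes a Deployment more capable than a ReplicaSet.

The main features of a Deployment are:

- Everything a ReplicaSet supports

- Pausing and resuming a rollout

- Rolling updates and version rollback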

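The Deployment manifest:

    apiVersion: apps/v1 # API version
    kind: Deployment # resource type
    metadata: # metadata
      name: # Deployment name
      namespace: # namespace it belongs to
      labels: # labels
        controller: deploy
    spec: # specification
      replicas: 3 # number of replicas
      revisionHistoryLimit: 3 # number of old revisions (ReplicaSets) kept for rollback
      paused: false # whether the rollout is paused; defaults to false
      progressDeadlineSeconds: 600 # rollout timeout in seconds; defaults to 600
      strategy: # update strategy
        type: RollingUpdate # rolling update strategy
        rollingUpdate: # rolling update parameters
          maxSurge: 30% # maximum number of extra Pods above the desired count; a percentage or an integer
          maxUnavailable: 30% # maximum number of unavailable Pods; a percentage or an integer
      selector: # selector; specifies which Pods this controller manages
        matchLabels: # label-matching rules
          app: nginx-pod
        matchExpressions: # expression-matching rules
          - { key: app, operator: In, values: [ nginx-pod ] }
      template: # template used to create Pod replicas when there are too few
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80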

Create a Deployment

Create deploy-controller.yaml with the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-controller
      namespace: dev
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx

    # Create the Deployment
    [root@master k8sYamlForCSDN]# kubectl apply -f deploy-controller.yaml
    deployment.apps/deploy-controller created

    # Inspect the Deployment
    # UP-TO-DATE: number of Pods running the latest version
    # AVAILABLE: number of Pods currently available
    [root@master k8sYamlForCSDN]# kubectl get deploy deploy-controller -n dev
    NAME                READY   UP-TO-DATE   AVAILABLE   AGE
    deploy-controller   3/3     3            3           40s
    [root@master k8sYamlForCSDN]# kubectl get deployments.apps -n dev
    NAME                READY   UP-TO-DATE   AVAILABLE   AGE
    deploy-controller   3/3     3            3           47s

    # Inspect the ReplicaSet
    # Its name is the Deployment name followed by a 10-character string;
    # this is the pod-template-hash, derived from the Pod template rather than random
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev
    NAME                           DESIRED   CURRENT   READY   AGE
    deploy-controller-7f87645cd8   3         3         3       65s

    # Inspect the Pods
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS    RESTARTS   AGE
    deploy-controller-7f87645cd8-28vcs   1/1     Running   0          73s
    deploy-controller-7f87645cd8-dgnsb   1/1     Running   0          73s
    deploy-controller-7f87645cd8-qfczb   1/1     Running   0          73s

Scaling

    # Method 1
    # Edit the YAML file, change replicas from 3 to 2, and apply it again; the Deployment and its ReplicaSet are updated
    [root@master k8sYamlForCSDN]# vi deploy-controller.yaml
    [root@master k8sYamlForCSDN]# kubectl apply -f deploy-controller.yaml
    deployment.apps/deploy-controller configured
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS    RESTARTS   AGE
    deploy-controller-7f87645cd8-dgnsb   1/1     Running   0          5m53s
    deploy-controller-7f87645cd8-qfczb   1/1     Running   0          5m53s

    # Method 2
    # Edit the Deployment and set spec.replicas to 1; note that this does not change the number in the YAML file
    [root@master k8sYamlForCSDN]# kubectl edit deploy deploy-controller -n dev
    deployment.apps/deploy-controller edited
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS    RESTARTS   AGE
    deploy-controller-7f87645cd8-qfczb   1/1     Running   0          7m56s

    # Method 3
    # Use the scale command with --replicas=n to set the target count directly; this does not change the number in the YAML file either
    [root@master k8sYamlForCSDN]# kubectl scale deploy deploy-controller -n dev --replicas=3
    deployment.apps/deploy-controller scaled
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS              RESTARTS   AGE
    deploy-controller-7f87645cd8-2dwpp   0/1     ContainerCreating   0          1s
    deploy-controller-7f87645cd8-bb9xt   0/1     ContainerCreating   0          1s
    deploy-controller-7f87645cd8-qfczb   1/1     Running             0          8m45s

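Image update

A Deployment supports two update strategies, Recreate and RollingUpdate. The strategy type and its parameters are set under strategy:

    strategy: the strategy for replacing old Pods with new ones; it has two attributes:
      type: the strategy type; two strategies are supported
        Recreate: kill all existing Pods before creating the new ones
        RollingUpdate: kill some Pods and start some, step by step; two versions of the Pod coexist during the update
      rollingUpdate: takes effect when type is RollingUpdate and sets its parameters; it has two attributes:
        maxUnavailable: the maximum number of Pods that may be unavailable during the update; defaults to 25%.
        maxSurge: the maximum number of Pods that may exist above the desired count during the update; defaults to 25%.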
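Percentages for maxSurge and maxUnavailable have to be turned into whole Pods. The Python sketch below shows one way to do that arithmetic. It assumes the rounding rules documented for Deployments (maxSurge rounds up, maxUnavailable rounds down, and if both come out as 0 then maxUnavailable is treated as 1); `resolve` and `rolling_update_bounds` are hypothetical helper names, not part of any Kubernetes client.

```python
from typing import Dict, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Convert an integer or a 'NN%' string into an absolute Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas
        # Integer arithmetic avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(
    replicas: int,
    max_surge: Union[int, str] = "25%",
    max_unavailable: Union[int, str] = "25%",
) -> Dict[str, int]:
    """Return the Pod-count limits that hold during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # otherwise the rollout could never make progress
    return {"max_pods": replicas + surge, "min_available": replicas - unavailable}


print(rolling_update_bounds(3))   # {'max_pods': 4, 'min_available': 3}
print(rolling_update_bounds(10))  # {'max_pods': 13, 'min_available': 8}
```

For 3 replicas with the 25% defaults this gives at most 4 Pods and at least 3 available, so a new Pod has to become ready before an old one can be terminated.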

Recreate: all existing Pods are killed before the new Pods are created

- Edit deploy-controller.yaml and add the update strategy under the spec node

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-controller
      namespace: dev
    spec:
      strategy: # update strategy
        type: Recreate # recreate update
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx

- Create the Deployment and verify

    # Change the image
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev -o wide
    NAME                                 READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
    deploy-controller-7f87645cd8-925wr   1/1     Running   0          35s   10.244.2.65   node2   <none>           <none>
    deploy-controller-7f87645cd8-l4f82   1/1     Running   0          35s   10.244.2.64   node2   <none>           <none>
    deploy-controller-7f87645cd8-ljwfx   1/1     Running   0          35s   10.244.1.44   node1   <none>           <none>
    [root@master k8sYamlForCSDN]# kubectl set image deploy deploy-controller -n dev nginx=nginx:1.17.1
    deployment.apps/deploy-controller image updated

    # Watch the upgrade
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev -o wide -w
    NAME                                 READY   STATUS              RESTARTS   AGE   IP            NODE     NOMINATED NODE   READINESS GATES
    deploy-controller-7f87645cd8-925wr   1/1     Running             0          39s   10.244.2.65   node2    <none>           <none>
    deploy-controller-7f87645cd8-l4f82   1/1     Running             0          39s   10.244.2.64   node2    <none>           <none>
    deploy-controller-7f87645cd8-ljwfx   1/1     Running             0          39s   10.244.1.44   node1    <none>           <none>
    deploy-controller-7f87645cd8-925wr   1/1     Terminating         0          49s   10.244.2.65   node2    <none>           <none>
    deploy-controller-7f87645cd8-l4f82   1/1     Terminating         0          49s   10.244.2.64   node2    <none>           <none>
    deploy-controller-7f87645cd8-ljwfx   1/1     Terminating         0          49s   10.244.1.44   node1    <none>           <none>
    deploy-controller-6f44b7b85d-whjxq   0/1     Pending             0          0s    <none>        <none>   <none>           <none>
    deploy-controller-6f44b7b85d-4nvzz   0/1     Pending             0          0s    <none>        <none>   <none>           <none>
    deploy-controller-6f44b7b85d-hjk7x   0/1     Pending             0          0s    <none>        <none>   <none>           <none>
    deploy-controller-6f44b7b85d-whjxq   0/1     ContainerCreating   0          0s    <none>        node1    <none>           <none>
    deploy-controller-6f44b7b85d-hjk7x   0/1     ContainerCreating   0          0s    <none>        node1    <none>           <none>
    deploy-controller-6f44b7b85d-4nvzz   0/1     ContainerCreating   0          0s    <none>        node2    <none>           <none>
    deploy-controller-6f44b7b85d-4nvzz   1/1     Running             0          2s    10.244.2.66   node2    <none>           <none>
    deploy-controller-6f44b7b85d-hjk7x   1/1     Running             0          16s   10.244.1.46   node1    <none>           <none>
    deploy-controller-6f44b7b85d-whjxq   1/1     Running             0          31s   10.244.1.45   node1    <none>           <none>

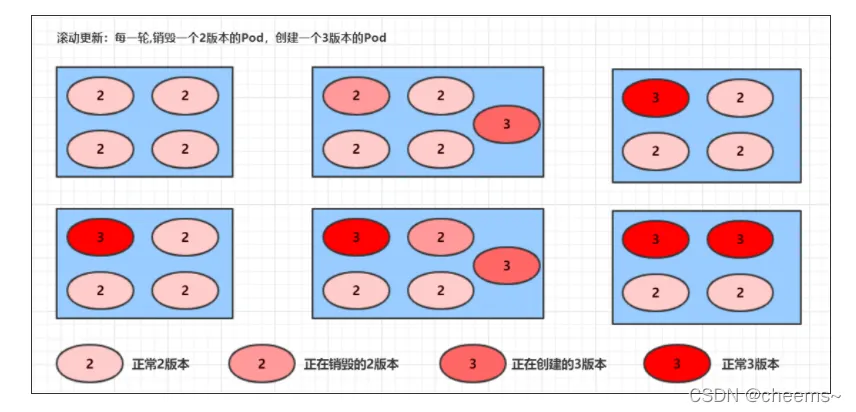

Rolling update: some Pods are killed while others are started, so two versions of the Pod coexist during the update

- Edit deploy-controller.yaml and add the update strategy under the spec node

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-controller
      namespace: dev
    spec:
      strategy: # update strategy
        type: RollingUpdate # rolling update
        rollingUpdate:
          maxSurge: 25%
          maxUnavailable: 25%
      replicas: 3
      selector:
        matchLabels:
          app: nginx-pod
      template:
        metadata:
          labels:
            app: nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx

- Create the Deployment and verify

    # Create the Deployment
    [root@master k8sYamlForCSDN]# kubectl apply -f deploy-controller.yaml
    deployment.apps/deploy-controller configured
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS    RESTARTS   AGE
    deploy-controller-7f87645cd8-4b2rf   1/1     Running   0          74s
    deploy-controller-7f87645cd8-7tqnr   1/1     Running   0          57s
    deploy-controller-7f87645cd8-cgmft   1/1     Running   0          55s

    # Update the image
    [root@master k8sYamlForCSDN]# kubectl set image deploy deploy-controller -n dev nginx=nginx:1.17.1
    deployment.apps/deploy-controller image updated

    # A rolling update does not kill everything first and then start the new Pods;
    # it replaces them one at a time, in a rolling fashion
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev -o wide -w
    NAME                                 READY   STATUS              RESTARTS   AGE     IP            NODE     NOMINATED NODE   READINESS GATES
    deploy-controller-7f87645cd8-4b2rf   1/1     Running             0          86s     10.244.2.67   node2    <none>           <none>
    deploy-controller-7f87645cd8-7tqnr   1/1     Running             0          69s     10.244.1.47   node1    <none>           <none>
    deploy-controller-7f87645cd8-cgmft   1/1     Running             0          67s     10.244.1.48   node1    <none>           <none>
    deploy-controller-6f44b7b85d-xckc9   0/1     Pending             0          0s      <none>        <none>   <none>           <none>
    deploy-controller-6f44b7b85d-xckc9   0/1     ContainerCreating   0          0s      <none>        node2    <none>           <none>
    deploy-controller-6f44b7b85d-xckc9   1/1     Running             0          16s     10.244.2.68   node2    <none>           <none>
    deploy-controller-7f87645cd8-7tqnr   1/1     Terminating         0          92s     10.244.1.47   node1    <none>           <none>
    deploy-controller-6f44b7b85d-mxm5q   0/1     Pending             0          0s      <none>        node1    <none>           <none>
    deploy-controller-6f44b7b85d-mxm5q   0/1     ContainerCreating   0          0s      <none>        node1    <none>           <none>
    deploy-controller-6f44b7b85d-mxm5q   1/1     Running             0          17s     10.244.1.49   node1    <none>           <none>
    deploy-controller-7f87645cd8-7tqnr   0/1     Terminating         0          93s     10.244.1.47   node1    <none>           <none>
    deploy-controller-7f87645cd8-cgmft   1/1     Terminating         0          107s    10.244.1.48   node1    <none>           <none>
    deploy-controller-6f44b7b85d-ftztf   0/1     Pending             0          0s      <none>        <none>   <none>           <none>
    deploy-controller-6f44b7b85d-ftztf   0/1     ContainerCreating   0          0s      <none>        node2    <none>           <none>
    deploy-controller-7f87645cd8-cgmft   0/1     Terminating         0          108s    10.244.1.48   node1    <none>           <none>
    deploy-controller-6f44b7b85d-ftztf   1/1     Running             0          17s     10.244.2.69   node2    <none>           <none>
    deploy-controller-7f87645cd8-4b2rf   1/1     Terminating         0          2m23s   10.244.2.67   node2    <none>           <none>

    # At this point the new-version Pods are all created and the old-version Pods are all destroyed
    # The process in between is rolling: Pods are destroyed and created side by side

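As the watch output above shows, a rolling update starts a new Pod, waits for it to be Running, and only then terminates an old Pod, repeating until every Pod runs the new version.

Version rollback

How the ReplicaSets change during an image update: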

    # Create the Deployment with a flag that records the change cause
    [root@master k8sYamlForCSDN]# kubectl apply -f deploy-controller.yaml --record
    Flag --record has been deprecated, --record will be removed in the future
    deployment.apps/deploy-controller created

    # Update the image
    [root@master k8sYamlForCSDN]# kubectl set image deploy deploy-controller -n dev nginx=nginx:1.17.1
    deployment.apps/deploy-controller image updated

    # Watch the ReplicaSets
    # The Pods in the original ReplicaSet are removed, and a new ReplicaSet is created
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev -w
    NAME                           DESIRED   CURRENT   READY   AGE
    deploy-controller-7f87645cd8   3         3         1       11s
    ...
    deploy-controller-6f44b7b85d   3         3         3       35s
    ...
    deploy-controller-7f87645cd8   0         0         0       90s

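A Deployment supports pausing and resuming a rollout, rolling back to an earlier version, and more. Let's look at these in detail.

kubectl rollout: rollout-related commands; it supports the following subcommands:

- status: show the current rollout status

- history: show the rollout history

- pause: pause the rollout

- resume: resume a paused rollout

- restart: restart the Pods by triggering a new rollout

- undo: roll back to the previous revision (use --to-revision to roll back to a specific revision)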

    # Check the status of the current rollout
    [root@master k8sYamlForCSDN]# kubectl rollout status deployment deploy-controller -n dev
    deployment "deploy-controller" successfully rolled out

    # Show the rollout history
    [root@master k8sYamlForCSDN]# kubectl rollout history deployment deploy-controller -n dev
    deployment.apps/deploy-controller
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=deploy-controller.yaml --record=true
    2         kubectl apply --filename=deploy-controller.yaml --record=true

    # Upgrade the image
    [root@master k8sYamlForCSDN]# kubectl set image deploy deploy-controller -n dev nginx=nginx:1.17.2
    deployment.apps/deploy-controller image updated

    # Check the status of the current rollout
    [root@master k8sYamlForCSDN]# kubectl rollout status deployment deploy-controller -n dev
    Waiting for deployment "deploy-controller" rollout to finish: 1 out of 3 new replicas have been updated...
    Waiting for deployment "deploy-controller" rollout to finish: 2 out of 3 new replicas have been updated...
    Waiting for deployment "deploy-controller" rollout to finish: 1 old replicas are pending termination...
    deployment "deploy-controller" successfully rolled out

    # Show the rollout history
    # There are three revisions, which means two upgrades have completed
    [root@master k8sYamlForCSDN]# kubectl rollout history deployment deploy-controller -n dev
    deployment.apps/deploy-controller
    REVISION  CHANGE-CAUSE
    1         kubectl apply --filename=deploy-controller.yaml --record=true
    2         kubectl apply --filename=deploy-controller.yaml --record=true
    3         kubectl apply --filename=deploy-controller.yaml --record=true

    # Roll back
    # --to-revision=1 rolls back directly to revision 1 (nginx). Without this option the rollback goes to the
    # previous revision, which is revision 2 (nginx:1.17.1); the current one is revision 3 (nginx:1.17.2)
    [root@master k8sYamlForCSDN]# kubectl rollout undo deployment deploy-controller -n dev --to-revision=1
    deployment.apps/deploy-controller rolled back

    # The nginx image version shows that we are back on the first version
    [root@master k8sYamlForCSDN]# kubectl get deploy -n dev -o wide
    NAME                READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES   SELECTOR
    deploy-controller   3/3     2            3           10m   nginx        nginx    app=nginx-pod

    # Inspect the ReplicaSets: the third one listed has 3 Pods running
    # A Deployment can roll back because it keeps its historical ReplicaSets.
    # To roll back to a given version, it only has to scale the current version's Pods down to 0
    # and scale the target version's Pods up to the desired count
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev
    NAME                           DESIRED   CURRENT   READY   AGE
    deploy-controller-67db8df7f5   0         0         0       7m36s
    deploy-controller-6f44b7b85d   0         0         0       12m
    deploy-controller-7f87645cd8   3         3         3       13m
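The bookkeeping behind the rollback can be modelled in a few lines of Python. This is a simplified sketch, not how kubectl is implemented: `rollout_undo` is a hypothetical function, the history is reduced to a mapping from revision number to image, and it assumes the documented behaviour that the restored template is recorded under a new revision number while its old entry disappears from the history.

```python
from typing import Dict, Optional


def rollout_undo(history: Dict[int, str], to_revision: Optional[int] = None) -> Dict[int, str]:
    """Return the revision history after rolling back (simplified model)."""
    revisions = sorted(history)
    if len(revisions) < 2:
        raise ValueError("no earlier revision to roll back to")
    current = revisions[-1]
    # Without an explicit target, go back to the revision before the current one.
    target = revisions[-2] if to_revision is None else to_revision
    if target not in history:
        raise KeyError(f"revision {target} not found")
    if target == current:
        return dict(history)  # already on that revision, nothing to do
    # The old ReplicaSet is reused and becomes the newest revision.
    updated = {rev: image for rev, image in history.items() if rev != target}
    updated[current + 1] = history[target]
    return updated


history = {1: "nginx", 2: "nginx:1.17.1", 3: "nginx:1.17.2"}
print(rollout_undo(history, to_revision=1))  # {2: 'nginx:1.17.1', 3: 'nginx:1.17.2', 4: 'nginx'}
print(rollout_undo(history))                 # {1: 'nginx', 3: 'nginx:1.17.2', 4: 'nginx:1.17.1'}
```

Because the old ReplicaSet is reused rather than rebuilt, a rollback is only possible for revisions still retained under revisionHistoryLimit.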

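Canary release

The Deployment controller lets you control the update process itself, for example by pausing (pause) or resuming (resume) an update.

For example, the update can be paused right after a first batch of new Pods has been created. At that point only part of the application runs the new version, while the bulk still runs the old one. A small share of user requests is then routed to the new-version Pods, and you watch whether they run stably and as expected. If everything is fine, the rolling update of the remaining Pods is resumed; otherwise the update is rolled back immediately. This is what is known as a canary release.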

    # Update the Deployment's version and pause the Deployment right away
    [root@master k8sYamlForCSDN]# kubectl set image deploy deploy-controller -n dev nginx=nginx:1.17.1 && kubectl rollout pause deploy deploy-controller -n dev
    deployment.apps/deploy-controller image updated
    deployment.apps/deploy-controller paused

    # Observe the update status
    # One new Pod has been added, but no old Pod has been removed as it normally would be,
    # because the rollout was paused
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev
    NAME                           DESIRED   CURRENT   READY   AGE
    deploy-controller-67db8df7f5   0         0         0       19m
    deploy-controller-6f44b7b85d   1         1         1       24m
    deploy-controller-7f87645cd8   3         3         3       25m
    [root@master k8sYamlForCSDN]# kubectl rollout status deploy deploy-controller -n dev
    Waiting for deployment "deploy-controller" rollout to finish: 1 out of 3 new replicas have been updated...

    # Once the updated Pod is confirmed to be fine, resume the update
    [root@master k8sYamlForCSDN]# kubectl rollout resume deploy deploy-controller -n dev
    deployment.apps/deploy-controller resumed

    # Check the final result
    [root@master k8sYamlForCSDN]# kubectl get rs -n dev -o wide
    NAME                           DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES         SELECTOR
    deploy-controller-67db8df7f5   0         0         0       23m   nginx        nginx:1.17.2
    deploy-controller-6f44b7b85d   3         3         3       28m   nginx        nginx:1.17.1
    deploy-controller-7f87645cd8   0         0         0       29m   nginx        nginx
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev
    NAME                                 READY   STATUS    RESTARTS   AGE
    deploy-controller-6f44b7b85d-7gfp7   1/1     Running   0          2m13s
    deploy-controller-6f44b7b85d-b6n2b   1/1     Running   0          5m30s
    deploy-controller-6f44b7b85d-cg4vq   1/1     Running   0          116s
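While the rollout is paused, the share of traffic reaching the canary can be estimated from the Pod counts. The Python sketch below does that under a stated assumption: a Service selecting both versions spreads requests evenly over all ready Pods. `canary_share` is a hypothetical helper, and real traffic splitting depends on how the Service or ingress layer balances requests.

```python
from fractions import Fraction


def canary_share(old_ready: int, new_ready: int) -> Fraction:
    """Fraction of requests expected to hit new-version Pods (even spread assumed)."""
    total = old_ready + new_ready
    if total == 0:
        raise ValueError("no ready Pods")
    return Fraction(new_ready, total)


# Paused state from the transcript: 3 old Pods and 1 new Pod are ready.
share = canary_share(old_ready=3, new_ready=1)
print(f"{float(share):.0%} of requests reach the new version")  # 25% of requests reach the new version
```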

Delete the Deployment

    # Delete the Deployment; the ReplicaSets and Pods under it are deleted as well
    [root@master k8sYamlForCSDN]# kubectl delete -f deploy-controller.yaml
    deployment.apps "deploy-controller" deleted

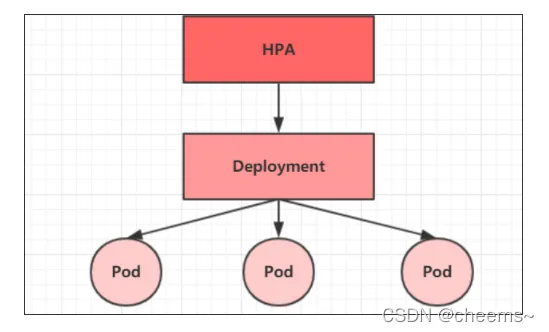

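Horizontal Pod Autoscaler (HPA)

HPA overview

We can already scale Pods manually with kubectl scale, kubectl edit, or by editing the YAML, but that clearly falls short of what Kubernetes aims for: automation and intelligence. Kubernetes wants to monitor how Pods are being used and adjust the number of Pods automatically, which is what the Horizontal Pod Autoscaler (HPA) controller is for.

The HPA obtains the resource utilization of each Pod, compares it with the target defined in the HPA, calculates how many replicas are needed, and then adjusts the number of Pods accordingly. Like the Deployment described earlier, the HPA is itself a Kubernetes resource object. It tracks and analyzes the load of all Pods managed by the target controller (for example a Deployment) to decide whether the replica count needs adjusting. That is how the HPA works.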
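The "compare and calculate" step can be written down as a small formula. The Python sketch below follows the rule given in the Kubernetes HPA documentation, desired = ceil(current replicas × current metric / target metric), clamped to the min/max bounds. The function name and the 10% tolerance default are assumptions for illustration, and scale-up/scale-down rate limits are deliberately left out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Replica count suggested by the basic HPA formula (no rate limiting)."""
    if target_utilization <= 0:
        raise ValueError("target utilization must be positive")
    ratio = current_utilization / target_utilization
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(2, 25, 3, 1, 10))  # 10 (17 wanted, capped at the maximum)
print(desired_replicas(10, 0, 3, 1, 10))  # 1 (idle, floored at the minimum)
```

The formula gives only the target. How quickly the replica count moves towards it depends on the autoscaler's scaling behaviour, so the count usually changes in several steps.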

1. Install metrics-server

metrics-server collects resource usage data from the cluster

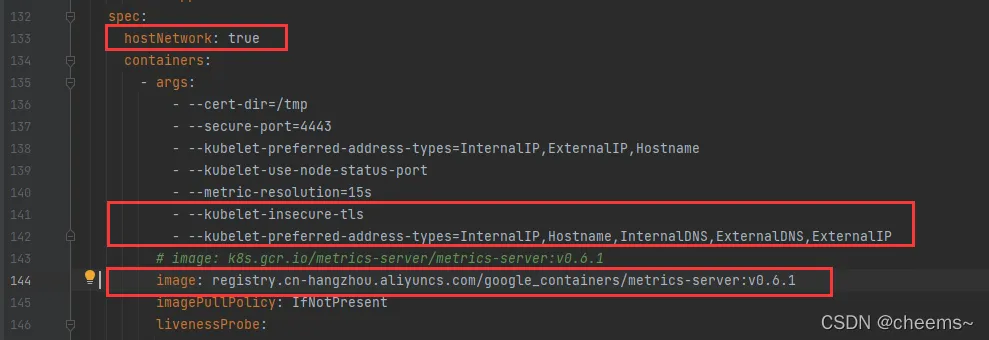

    # Download the YAML file from somewhere that can reach the external network
    https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability.yaml

    # Modify the YAML
    # Find         -> image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
    # Replace with -> image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.1
    # Then add a few more settings
    hostNetwork: true
    args:
    - --kubelet-insecure-tls
    - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
    # As shown in the figure

    # Copy the edited YAML file to the Linux host
    [root@master k8sYamlForCSDN]# vi components.yaml

    # Apply it
    [root@master k8sYamlForCSDN]# kubectl apply -f components.yaml
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
    service/metrics-server created
    deployment.apps/metrics-server created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

    # Check that the Pod is running
    [root@master k8sYamlForCSDN]# kubectl get pods -n kube-system
    NAME                              READY   STATUS    RESTARTS   AGE
    ......
    metrics-server-77ccdd66cf-h7279   1/1     Running   0          7m53s

    # Use kubectl top nodes to view resource usage
    [root@master k8sYamlForCSDN]# kubectl top nodes
    NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    master   84m          4%     1116Mi          64%
    node1    21m          1%     646Mi           37%
    node2    16m          0%     466Mi           27%
    [root@master k8sYamlForCSDN]# kubectl top pods -n kube-system
    NAME                              CPU(cores)   MEMORY(bytes)
    coredns-64897985d-7bx2c           2m           20Mi
    coredns-64897985d-w5c4q           1m           17Mi
    etcd-master                       11m          126Mi
    kube-apiserver-master             31m          306Mi
    kube-controller-manager-master    13m          84Mi
    kube-flannel-ds-78vrj             1m           20Mi
    kube-flannel-ds-lv5jf             2m           19Mi
    kube-flannel-ds-tk9c6             2m           20Mi
    kube-proxy-vxflz                  1m           30Mi
    kube-proxy-w886d                  1m           20Mi
    kube-proxy-xb7kp                  1m           19Mi
    kube-scheduler-master             2m           36Mi
    metrics-server-77ccdd66cf-h7279   4m           18Mi

    # metrics-server is now installed

Here is the already-modified YAML file I used while writing this post (as of 22:38 on March 2, 2022):

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
        rbac.authorization.k8s.io/aggregate-to-admin: "true"
        rbac.authorization.k8s.io/aggregate-to-edit: "true"
        rbac.authorization.k8s.io/aggregate-to-view: "true"
      name: system:aggregated-metrics-reader
    rules:
    - apiGroups:
      - metrics.k8s.io
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes/metrics
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      verbs:
      - get
      - list
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server-auth-reader
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server:system:auth-delegator
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        k8s-app: metrics-server
      name: system:metrics-server
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      ports:
      - name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        k8s-app: metrics-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        k8s-app: metrics-server
      name: metrics-server
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
      strategy:
        rollingUpdate:
          maxUnavailable: 0
      template:
        metadata:
          labels:
            k8s-app: metrics-server
        spec:
          hostNetwork: true
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            - --kubelet-insecure-tls
            - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
            # image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.1
            imagePullPolicy: IfNotPresent
            livenessProbe:
              failureThreshold: 3
              httpGet:
                path: /livez
                port: https
                scheme: HTTPS
              periodSeconds: 10
            name: metrics-server
            ports:
            - containerPort: 4443
              name: https
              protocol: TCP
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /readyz
                port: https
                scheme: HTTPS
              initialDelaySeconds: 20
              periodSeconds: 10
            resources:
              requests:
                cpu: 100m
                memory: 200Mi
            securityContext:
              allowPrivilegeEscalation: false
              readOnlyRootFilesystem: true
              runAsNonRoot: true
              runAsUser: 1000
            volumeMounts:
            - mountPath: /tmp
              name: tmp-dir
          nodeSelector:
            kubernetes.io/os: linux
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          volumes:
          - emptyDir: {}
            name: tmp-dir
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      labels:
        k8s-app: metrics-server
      name: v1beta1.metrics.k8s.io
    spec:
      group: metrics.k8s.io
      groupPriorityMinimum: 100
      insecureSkipTLSVerify: true
      service:
        name: metrics-server
        namespace: kube-system
      version: v1beta1
      versionPriority: 100

2. Prepare the Deployment and Service

Prepare the YAML file for the Deployment and Service

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-nginx
      namespace: dev
    spec:
      selector:
        matchLabels:
          run: "nginx"
      template:
        metadata:
          labels:
            run: "nginx"
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80
                  protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: svc-deploy-nginx
      namespace: dev
    spec:
      type: NodePort
      clusterIP: 10.109.68.72
      selector:
        run: "nginx"
      ports:
        - port: 6872
          targetPort: 80
          protocol: TCP

    [root@master k8sYamlForCSDN]# vi svc-deploy.yaml

    # Create
    [root@master k8sYamlForCSDN]# kubectl apply -f svc-deploy.yaml
    deployment.apps/deploy-nginx created

    # Inspect
    [root@master k8sYamlForCSDN]# kubectl get deploy,pods,svc -n dev
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/deploy-nginx   0/1     1            0           3s

    NAME                               READY   STATUS              RESTARTS   AGE
    pod/deploy-nginx-69ccc6c65-mbhds   0/1     ContainerCreating   0          3s

    NAME                       TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    service/svc-deploy-nginx   NodePort   10.109.68.72   <none>        6872:30709/TCP   3s
    [root@master k8sYamlForCSDN]# kubectl get deploy,pods,svc -n dev
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/deploy-nginx   1/1     1            1           62s

    NAME                               READY   STATUS    RESTARTS   AGE
    pod/deploy-nginx-69ccc6c65-mbhds   1/1     Running   0          62s

    NAME                       TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    service/svc-deploy-nginx   NodePort   10.109.68.72   <none>        6872:30709/TCP   62s

3. Deploy the HPA

Create hpa.yaml

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: hpa
      namespace: dev
    spec:
      minReplicas: 1 # minimum number of Pods
      maxReplicas: 10 # maximum number of Pods
      targetCPUUtilizationPercentage: 3 # target CPU utilization: 3%
      scaleTargetRef: # the nginx workload to control
        apiVersion: apps/v1
        kind: Deployment
        name: deploy-nginx

    [root@master k8sYamlForCSDN]# vi hpa.yaml

    # Create the HPA
    [root@master k8sYamlForCSDN]# kubectl apply -f hpa.yaml
    horizontalpodautoscaler.autoscaling/hpa created

    # Check the HPA: the target shows <unknown>. Why?
    [root@master k8sYamlForCSDN]# kubectl get hpa -n dev
    NAME   REFERENCE                 TARGETS        MINPODS   MAXPODS   REPLICAS   AGE
    hpa    Deployment/deploy-nginx   <unknown>/3%   1         10        1          21s

Now start a load test: no autoscaling happens. What is going on? Let's describe the HPA and take a look.

    [root@master k8sYamlForCSDN]# kubectl describe hpa -n dev
    Events:
      Type     Reason                        Age                     From                       Message
      ----     ------                        ----                    ----                       -------
      Warning  FailedComputeMetricsReplicas  7m5s (x12 over 9m50s)   horizontal-pod-autoscaler  invalid metrics (1 invalid out of 1), first error is: failed to get cpu utilization: missing request for cpu
      Warning  FailedGetResourceMetric       4m50s (x21 over 9m50s)  horizontal-pod-autoscaler  failed to get cpu utilization: missing request for cpu

This happens because the Pods created above declare no resource requests, so the HPA cannot compute a CPU utilization for them. For the HPA to work, the target Pods must declare requests for the resource being measured. We therefore update the Deployment's YAML file from earlier:

    # The only change from before is the added resources.requests.cpu
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: deploy-nginx
      namespace: dev
    spec:
      selector:
        matchLabels:
          run: "nginx"
      template:
        metadata:
          labels:
            run: "nginx"
        spec:
          containers:
            - name: nginx
              image: nginx
              ports:
                - containerPort: 80
                  protocol: TCP
              resources:
                requests:
                  cpu: 100m
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: svc-deploy-nginx
      namespace: dev
    spec:
      type: NodePort
      clusterIP: 10.109.68.72
      selector:
        run: "nginx"
      ports:
        - port: 6872
          targetPort: 80
          protocol: TCP

    # After the change, start everything again
    [root@master k8sYamlForCSDN]# kubectl apply -f svc-deploy.yaml
    deployment.apps/deploy-nginx created
    service/svc-deploy-nginx created
    [root@master k8sYamlForCSDN]# kubectl apply -f hpa.yaml
    horizontalpodautoscaler.autoscaling/hpa created
    [root@master k8sYamlForCSDN]# kubectl get svc -n dev -o wide
    NAME                       TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE     SELECTOR
    service/svc-deploy-nginx   NodePort   10.109.68.72   <none>        6872:30627/TCP   3m16s   run=nginx
    [root@master k8sYamlForCSDN]# kubectl get hpa -n dev -w
    NAME   REFERENCE                 TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    hpa    Deployment/deploy-nginx   0%/3%     1         10        1          75s
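The reason the request matters is that the percentage in the TARGETS column is measured relative to the requested CPU, not to the node's capacity. The Python sketch below illustrates this. `cpu_utilization_percent` is a hypothetical helper that works in millicores, and it assumes utilization is total usage divided by total requests across the target Pods.

```python
from typing import Optional, Sequence


def cpu_utilization_percent(
    usage_m: Sequence[int], request_m: Sequence[Optional[int]]
) -> int:
    """CPU usage as a percentage of the requested CPU, summed over all Pods."""
    if not usage_m or len(usage_m) != len(request_m):
        raise ValueError("need one usage and one request value per Pod")
    # Without a request there is nothing to measure the usage against.
    if any(r is None or r <= 0 for r in request_m):
        raise ValueError("missing request for cpu")
    return sum(usage_m) * 100 // sum(request_m)


# One Pod requesting 100m and currently using 25m is at 25% utilization.
print(cpu_utilization_percent([25], [100]))  # 25

# A Pod without a CPU request cannot be evaluated at all.
try:
    cpu_utilization_percent([25], [None])
except ValueError as err:
    print(err)  # missing request for cpu
```

With `cpu: 100m` requested, the 3% target in hpa.yaml corresponds to an average of about 3m of CPU per Pod, which is why even a light load test triggers scaling.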

4. Test

Use a load-testing tool against the Service address 192.168.109.100:30627, then watch how the HPA and the Pods change from the console

    # Deployment changes
    [root@master k8sYamlForCSDN]# kubectl get deploy -n dev -w
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    deploy-nginx   8/8     8            8           5m9s
    deploy-nginx   8/10    8            8           7m4s
    deploy-nginx   8/10    8            8           7m4s
    deploy-nginx   8/10    8            8           7m4s
    deploy-nginx   8/10    10           8           7m4s
    deploy-nginx   9/10    10           9           7m7s
    deploy-nginx   10/10   10           10          7m21s
    ......

    # HPA changes
    [root@master k8sYamlForCSDN]# kubectl get hpa -n dev -w
    NAME   REFERENCE                 TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    hpa    Deployment/deploy-nginx   25%/3%    1         10        2          3m45s
    hpa    Deployment/deploy-nginx   23%/3%    1         10        4          4m
    hpa    Deployment/deploy-nginx   7%/3%     1         10        8          4m15s
    hpa    Deployment/deploy-nginx   12%/3%    1         10        8          4m31s
    ......

    # Pod changes
    [root@master k8sYamlForCSDN]# kubectl get pods -n dev -w
    NAME                           READY   STATUS    RESTARTS   AGE
    deploy-nginx-dbcdd8c84-gc5tk   1/1     Running   0          3m39s
    deploy-nginx-dbcdd8c84-zm7gf   1/1     Running   0          6s
    deploy-nginx-dbcdd8c84-2whpd   0/1     Pending   0          0s
    deploy-nginx-dbcdd8c84-qf62m   0/1     Pending   0          0s
    deploy-nginx-dbcdd8c84-2whpd   0/1     Pending   0          0s
    deploy-nginx-dbcdd8c84-qf62m   0/1     Pending   0          0s
    ......

Kubernetes Controllers in Depth (Part 2): https://developer.aliyun.com/article/1417727