目录

利用boston房价数据集(PCA处理)采用线性回归和Lasso套索回归算法实现房价预测模型评估

利用boston房价数据集(PCA处理)采用线性回归和Lasso套索回归算法实现房价预测模型评估

设计思路

更新……

输出结果

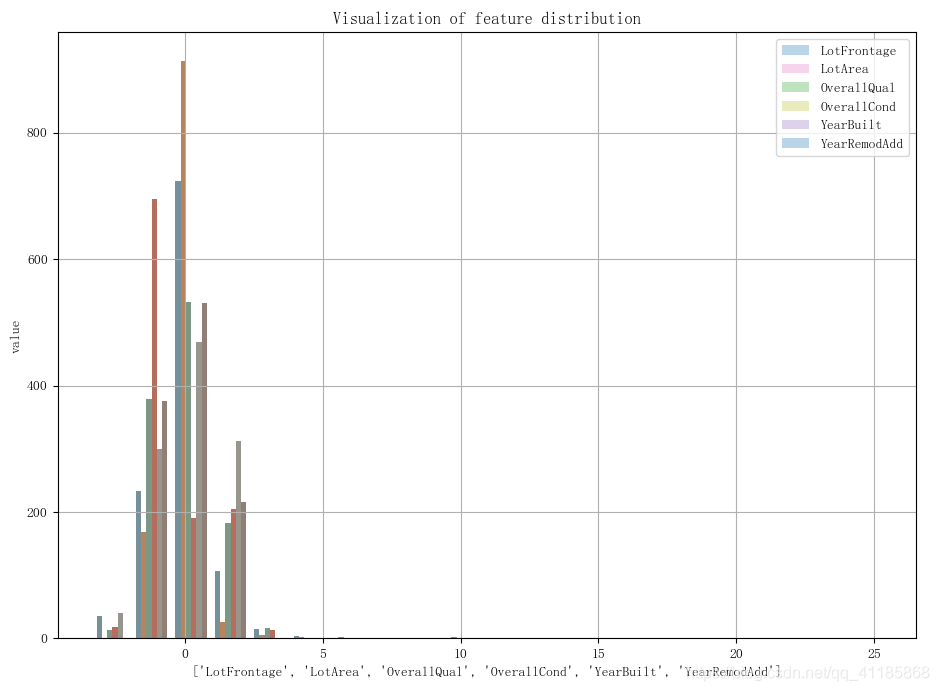

1. Id MSSubClass MSZoning ... SaleType SaleCondition SalePrice 2. 0 1 60 RL ... WD Normal 208500 3. 1 2 20 RL ... WD Normal 181500 4. 2 3 60 RL ... WD Normal 223500 5. 3 4 70 RL ... WD Abnorml 140000 6. 4 5 60 RL ... WD Normal 250000 7. 8. [5 rows x 81 columns] 9. numeric_columns 36 ['LotFrontage', 'LotArea', 'OverallQual', 'OverallCond', 'YearBuilt', 'YearRemodAdd', 'MasVnrArea', 'BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', '1stFlrSF', '2ndFlrSF', 'LowQualFinSF', 'GrLivArea', 'BsmtFullBath', 'BsmtHalfBath', 'FullBath', 'HalfBath', 'BedroomAbvGr', 'KitchenAbvGr', 'TotRmsAbvGrd', 'Fireplaces', 'GarageYrBlt', 'GarageCars', 'GarageArea', 'WoodDeckSF', 'OpenPorchSF', 'EnclosedPorch', '3SsnPorch', 'ScreenPorch', 'PoolArea', 'MiscVal', 'MoSold', 'YrSold', 'SalePrice'] 10. (1460, 36) 11. LotFrontage LotArea OverallQual ... MoSold YrSold SalePrice 12. 0 65.0 8450 7 ... 2 2008 208500 13. 1 80.0 9600 6 ... 5 2007 181500 14. 2 68.0 11250 7 ... 9 2008 223500 15. 3 60.0 9550 7 ... 2 2006 140000 16. 4 84.0 14260 8 ... 12 2008 250000 17. 18. 19. 依次统计每列缺失值元素个数: 20. 36 [259, 0, 0, 0, 0, 0, 8, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 81, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0] 21. Missing_data_Per_dict_0: (33, 0.9167, {'LotArea': 0.0, 'OverallQual': 0.0, 'OverallCond': 0.0, 'YearBuilt': 0.0, 'YearRemodAdd': 0.0, 'BsmtFinSF1': 0.0, 'BsmtFinSF2': 0.0, 'BsmtUnfSF': 0.0, 'TotalBsmtSF': 0.0, '1stFlrSF': 0.0, '2ndFlrSF': 0.0, 'LowQualFinSF': 0.0, 'GrLivArea': 0.0, 'BsmtFullBath': 0.0, 'BsmtHalfBath': 0.0, 'FullBath': 0.0, 'HalfBath': 0.0, 'BedroomAbvGr': 0.0, 'KitchenAbvGr': 0.0, 'TotRmsAbvGrd': 0.0, 'Fireplaces': 0.0, 'GarageCars': 0.0, 'GarageArea': 0.0, 'WoodDeckSF': 0.0, 'OpenPorchSF': 0.0, 'EnclosedPorch': 0.0, '3SsnPorch': 0.0, 'ScreenPorch': 0.0, 'PoolArea': 0.0, 'MiscVal': 0.0, 'MoSold': 0.0, 'YrSold': 0.0, 'SalePrice': 0.0}) 22. Missing_data_Per_dict_Not0: (3, 0.0833, {'LotFrontage': 0.177397, 'MasVnrArea': 0.005479, 'GarageYrBlt': 0.055479}) 23. Missing_data_Per_dict_under01: (2, 0.0556, {'MasVnrArea': 0.005479, 'GarageYrBlt': 0.055479}) 24. 依次计算每列缺失值元素占比: {'LotFrontage': 0.177397, 'MasVnrArea': 0.005479, 'GarageYrBlt': 0.055479} 25. data_Missing_dict {'LotFrontage': 0.1773972602739726, 'LotArea': 0.0, 'OverallQual': 0.0, 'OverallCond': 0.0, 'YearBuilt': 0.0, 'YearRemodAdd': 0.0, 'MasVnrArea': 0.005479452054794521, 'BsmtFinSF1': 0.0, 'BsmtFinSF2': 0.0, 'BsmtUnfSF': 0.0, 'TotalBsmtSF': 0.0, '1stFlrSF': 0.0, '2ndFlrSF': 0.0, 'LowQualFinSF': 0.0, 'GrLivArea': 0.0, 'BsmtFullBath': 0.0, 'BsmtHalfBath': 0.0, 'FullBath': 0.0, 'HalfBath': 0.0, 'BedroomAbvGr': 0.0, 'KitchenAbvGr': 0.0, 'TotRmsAbvGrd': 0.0, 'Fireplaces': 0.0, 'GarageYrBlt': 0.05547945205479452, 'GarageCars': 0.0, 'GarageArea': 0.0, 'WoodDeckSF': 0.0, 'OpenPorchSF': 0.0, 'EnclosedPorch': 0.0, '3SsnPorch': 0.0, 'ScreenPorch': 0.0, 'PoolArea': 0.0, 'MiscVal': 0.0, 'MoSold': 0.0, 'YrSold': 0.0, 'SalePrice': 0.0} 26. after dropna (1121, 36) 27. <class 'numpy.ndarray'> 28. LotFrontage LotArea OverallQual ... MiscVal MoSold YrSold 29. 0 -0.233570 -0.205885 0.570704 ... -0.141407 -1.615345 0.153084 30. 1 0.384834 -0.064358 -0.153825 ... -0.141407 -0.498715 -0.596291 31. 2 -0.109889 0.138702 0.570704 ... -0.141407 0.990125 0.153084 32. 3 -0.439705 -0.070512 0.570704 ... -0.141407 -1.615345 -1.345665 33. 4 0.549742 0.509132 1.295234 ... -0.141407 2.106755 0.153084 34. ... ... ... ... ... ... ... ... 35. 1116 -0.357251 -0.271480 -0.153825 ... -0.141407 0.617915 -0.596291 36. 1117 0.590968 0.375605 -0.153825 ... -0.141407 -1.615345 1.651832 37. 1118 -0.192343 -0.133030 0.570704 ... 14.947388 -0.498715 1.651832 38. 1119 -0.109889 -0.049960 -0.878355 ... -0.141407 -0.870925 1.651832 39. 1120 0.178699 -0.022885 -0.878355 ... -0.141407 -0.126505 0.153084 40. 41. [1121 rows x 35 columns] 42. 前10个主成分解释了数据中63.80%的变化 43. 经过PCA后,进行第一层主成分分析------------------------------------- 44. [(0.16970682313415306, 'LotFrontage'), (0.1211669980146095, 'LotArea'), (0.3008665261375608, 'OverallQual'), (-0.1017783758120348, 'OverallCond'), (0.23754113423286216, 'YearBuilt'), (0.21067267847804322, 'YearRemodAdd'), (0.19125461510335365, 'MasVnrArea'), (0.14136511574315347, 'BsmtFinSF1'), (-0.013552848692716916, 'BsmtFinSF2'), (0.11439764110410199, 'BsmtUnfSF'), (0.259354275741638, 'TotalBsmtSF'), (0.2591780447881022, '1stFlrSF'), (0.11504305093601253, '2ndFlrSF'), (0.004231304806602964, 'LowQualFinSF'), (0.2877802164879641, 'GrLivArea'), (0.08317879411803167, 'BsmtFullBath'), (-0.02114280846249704, 'BsmtHalfBath'), (0.25499633884283257, 'FullBath'), (0.11080279874459822, 'HalfBath'), (0.1017767099777179, 'BedroomAbvGr'), (-0.01012145139988125, 'KitchenAbvGr'), (0.23572236584667458, 'TotRmsAbvGrd'), (0.17611466785004926, 'Fireplaces'), (0.23726651555979883, 'GarageYrBlt'), (0.2831568046802727, 'GarageCars'), (0.279827792756442, 'GarageArea'), (0.13036585867815073, 'WoodDeckSF'), (0.16664693092097654, 'OpenPorchSF'), (-0.08602539908222213, 'EnclosedPorch'), (0.010532579475601184, '3SsnPorch'), (0.02556170369869493, 'ScreenPorch'), (0.06246570190310543, 'PoolArea'), (-0.015493399959318557, 'MiscVal'), (0.028399126033275164, 'MoSold'), (-0.011129722622237775, 'YrSold')] 45. [(0.3008665261375608, 'OverallQual'), (0.2877802164879641, 'GrLivArea'), (0.2831568046802727, 'GarageCars'), (0.279827792756442, 'GarageArea'), (0.259354275741638, 'TotalBsmtSF'), (0.2591780447881022, '1stFlrSF'), (0.25499633884283257, 'FullBath'), (0.23754113423286216, 'YearBuilt'), (0.23726651555979883, 'GarageYrBlt'), (0.23572236584667458, 'TotRmsAbvGrd'), (0.21067267847804322, 'YearRemodAdd'), (0.19125461510335365, 'MasVnrArea'), (0.17611466785004926, 'Fireplaces'), (0.16970682313415306, 'LotFrontage'), (0.16664693092097654, 'OpenPorchSF'), (0.14136511574315347, 'BsmtFinSF1'), (0.13036585867815073, 'WoodDeckSF'), (0.1211669980146095, 'LotArea'), (0.11504305093601253, '2ndFlrSF'), (0.11439764110410199, 'BsmtUnfSF'), (0.11080279874459822, 'HalfBath'), (0.1017767099777179, 'BedroomAbvGr'), (0.08317879411803167, 'BsmtFullBath'), (0.06246570190310543, 'PoolArea'), (0.028399126033275164, 'MoSold'), (0.02556170369869493, 'ScreenPorch'), (0.010532579475601184, '3SsnPorch'), (0.004231304806602964, 'LowQualFinSF'), (-0.01012145139988125, 'KitchenAbvGr'), (-0.011129722622237775, 'YrSold'), (-0.013552848692716916, 'BsmtFinSF2'), (-0.015493399959318557, 'MiscVal'), (-0.02114280846249704, 'BsmtHalfBath'), (-0.08602539908222213, 'EnclosedPorch'), (-0.1017783758120348, 'OverallCond')] 46. 经过PCA后,进行第二层主成分分析------------------------------------- 47. [(0.037140668512444255, 'LotFrontage'), (0.005762269875424171, 'LotArea'), (-0.02265545744738413, 'OverallQual'), (0.06797580738610676, 'OverallCond'), (-0.22034458100877843, 'YearBuilt'), (-0.11769773674122082, 'YearRemodAdd'), (-0.02330741979867707, 'MasVnrArea'), (-0.26830830083400875, 'BsmtFinSF1'), (-0.06776753790369254, 'BsmtFinSF2'), (0.10349973537774373, 'BsmtUnfSF'), (-0.2014230745261159, 'TotalBsmtSF'), (-0.14501101153644946, '1stFlrSF'), (0.43960496790131565, '2ndFlrSF'), (0.11932040000909688, 'LowQualFinSF'), (0.2706724094458561, 'GrLivArea'), (-0.2741406761479087, 'BsmtFullBath'), (-0.001880261013674545, 'BsmtHalfBath'), (0.12608264523927462, 'FullBath'), (0.23358978781221817, 'HalfBath'), (0.3864399252645517, 'BedroomAbvGr'), (0.12179545892853964, 'KitchenAbvGr'), (0.3371810668951179, 'TotRmsAbvGrd'), (0.06581774146310777, 'Fireplaces'), (-0.1834261688794573, 'GarageYrBlt'), (-0.04640661259007604, 'GarageCars'), (-0.08613653500685643, 'GarageArea'), (-0.047991361825782064, 'WoodDeckSF'), (0.03130768246434415, 'OpenPorchSF'), (0.13376424222015906, 'EnclosedPorch'), (-0.02564456693744644, '3SsnPorch'), (0.04211790221668751, 'ScreenPorch'), (0.03032238859229474, 'PoolArea'), (0.04968459727862472, 'MiscVal'), (0.02754218343139985, 'MoSold'), (-0.04555808126996797, 'YrSold')] 48. [(0.43960496790131565, '2ndFlrSF'), (0.3864399252645517, 'BedroomAbvGr'), (0.3371810668951179, 'TotRmsAbvGrd'), (0.2706724094458561, 'GrLivArea'), (0.23358978781221817, 'HalfBath'), (0.13376424222015906, 'EnclosedPorch'), (0.12608264523927462, 'FullBath'), (0.12179545892853964, 'KitchenAbvGr'), (0.11932040000909688, 'LowQualFinSF'), (0.10349973537774373, 'BsmtUnfSF'), (0.06797580738610676, 'OverallCond'), (0.06581774146310777, 'Fireplaces'), (0.04968459727862472, 'MiscVal'), (0.04211790221668751, 'ScreenPorch'), (0.037140668512444255, 'LotFrontage'), (0.03130768246434415, 'OpenPorchSF'), (0.03032238859229474, 'PoolArea'), (0.02754218343139985, 'MoSold'), (0.005762269875424171, 'LotArea'), (-0.001880261013674545, 'BsmtHalfBath'), (-0.02265545744738413, 'OverallQual'), (-0.02330741979867707, 'MasVnrArea'), (-0.02564456693744644, '3SsnPorch'), (-0.04555808126996797, 'YrSold'), (-0.04640661259007604, 'GarageCars'), (-0.047991361825782064, 'WoodDeckSF'), (-0.06776753790369254, 'BsmtFinSF2'), (-0.08613653500685643, 'GarageArea'), (-0.11769773674122082, 'YearRemodAdd'), (-0.14501101153644946, '1stFlrSF'), (-0.1834261688794573, 'GarageYrBlt'), (-0.2014230745261159, 'TotalBsmtSF'), (-0.22034458100877843, 'YearBuilt'), (-0.26830830083400875, 'BsmtFinSF1'), (-0.2741406761479087, 'BsmtFullBath')] 49. 不进行PCA的线性回归的MSE是1644140595.6636596 50. 前10个PCA主成分进行线性回归的MSE是1836601962.4751632 51. [1e-10, 1e-09, 1e-08, 1e-07, 1e-06, 1e-05, 0.0001, 0.001, 0.01, 0.1] 52. [1642818822.3530025, 1642818822.3529558, 1642818822.3524888, 1642818822.3471866, 1642818822.3005185, 1642818821.7415214, 1642818817.1179569, 1642818756.7038794, 1642818283.0732899, 1642813588.5752773] 53. [1e-10, 1e-09, 1e-08, 1e-07, 1e-06, 1e-05, 0.0001, 0.001, 0.01, 0.1] 54. [1836601962.4751682, 1836601962.4752123, 1836601962.475657, 1836601962.480097, 1836601962.5245085, 1836601962.9652405, 1836601967.4063494, 1836602011.8174434, 1836602455.9288514, 1836606882.1034737] 55. 56. 57. 58. 59. 60. 61. 62. 63. 64.

核心代码

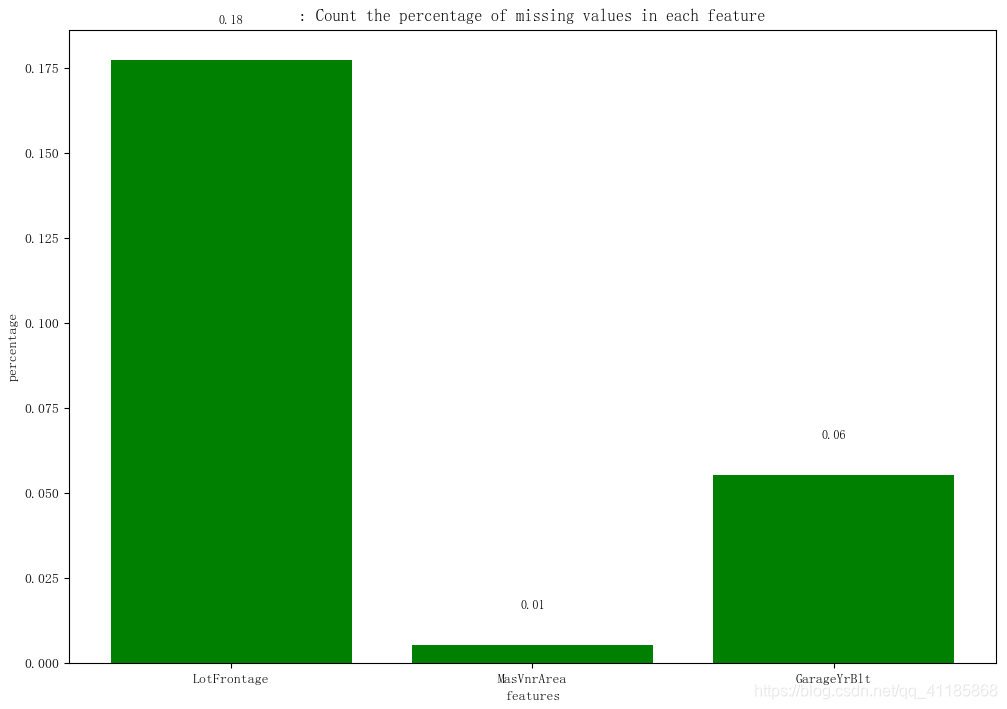

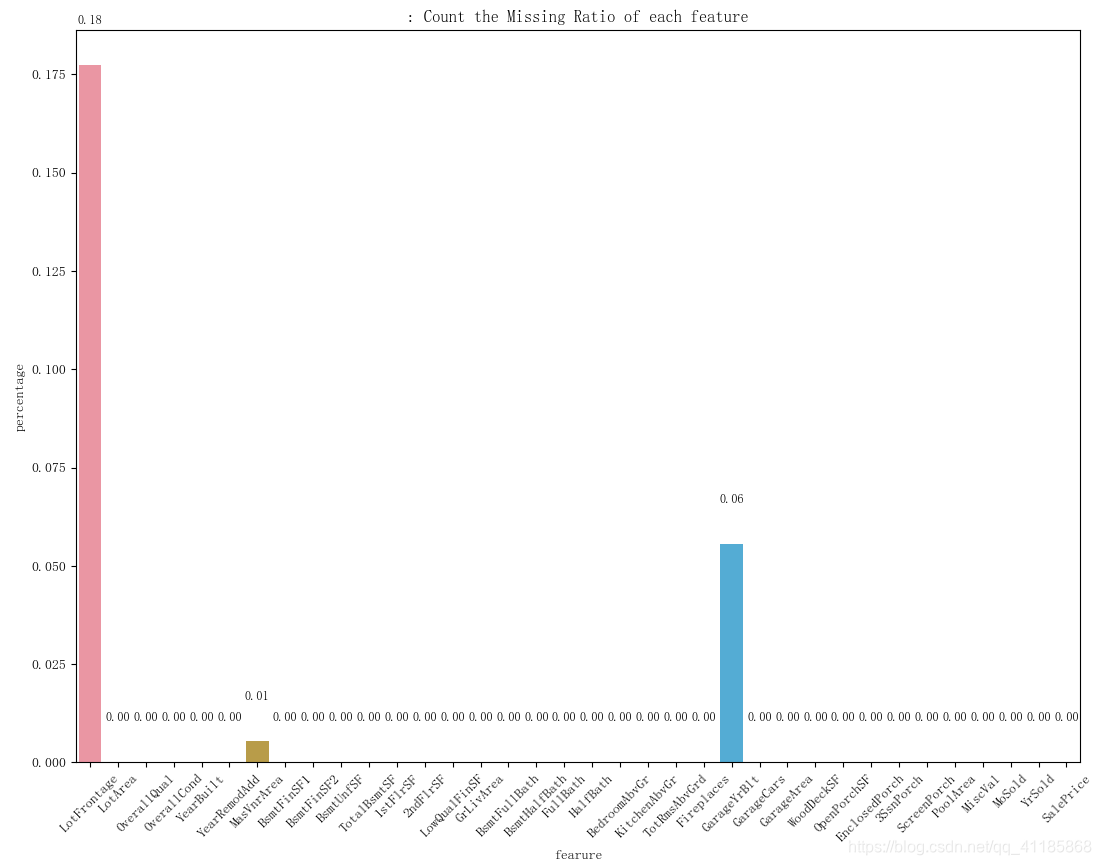

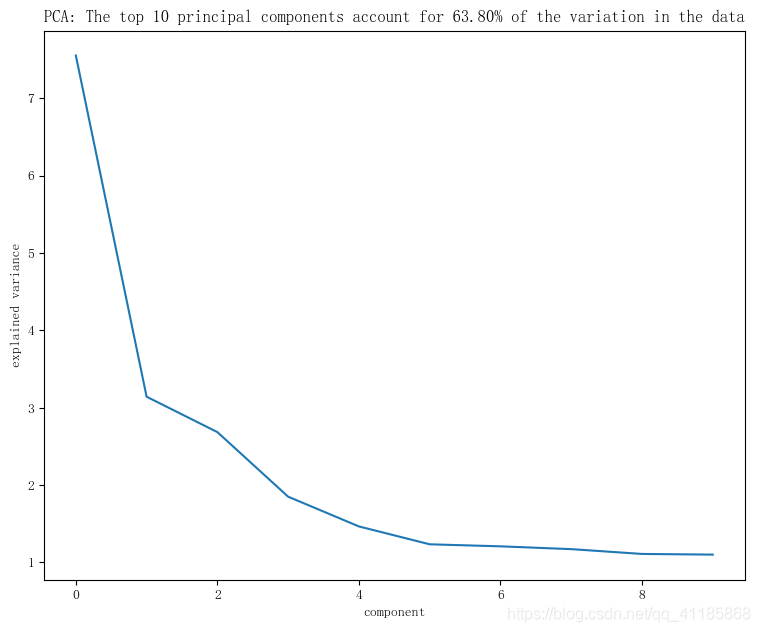

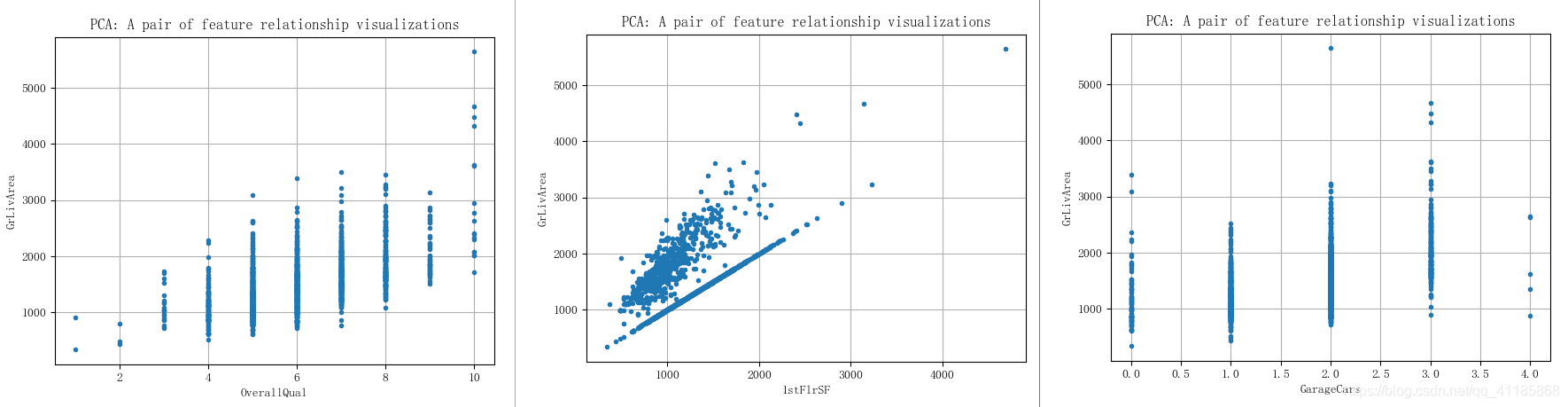

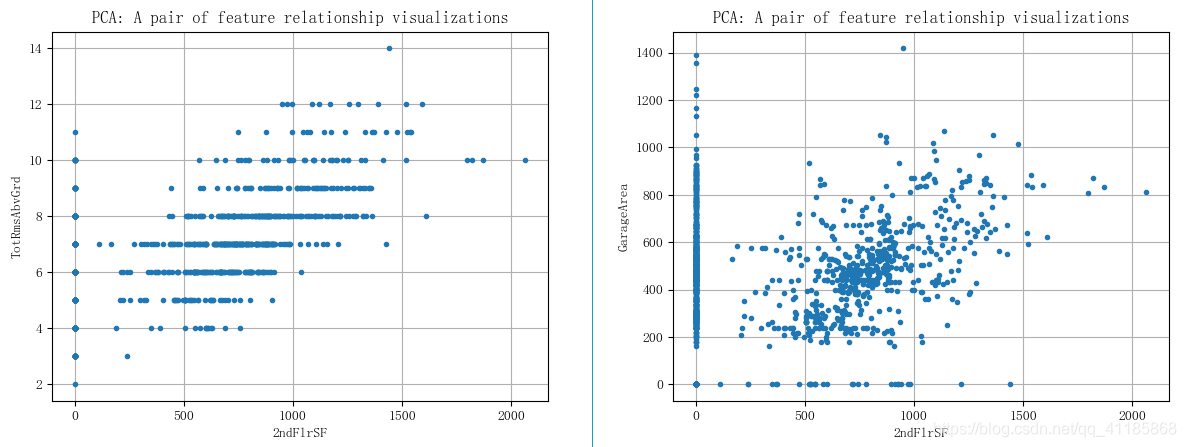

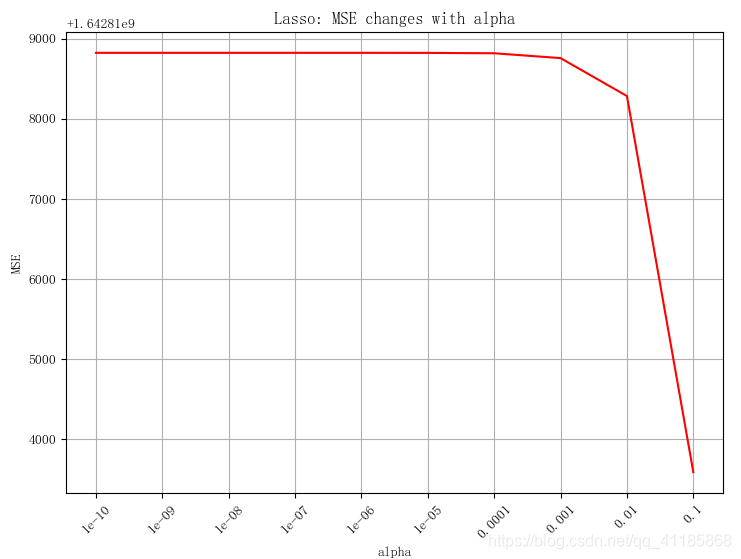

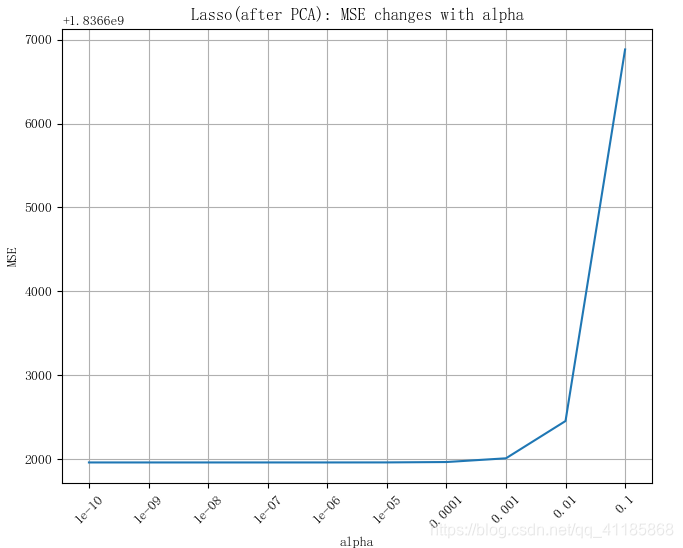

1. PCA 2. class TruncatedSVD Found at: sklearn.decomposition._truncated_svd 3. 4. class TruncatedSVD(TransformerMixin, BaseEstimator): 5. """Dimensionality reduction using truncated SVD (aka LSA). 6. 7. This transformer performs linear dimensionality reduction by means of 8. truncated singular value decomposition (SVD). Contrary to PCA, this 9. estimator does not center the data before computing the singular value 10. decomposition. This means it can work with sparse matrices 11. efficiently. 12. 13. In particular, truncated SVD works on term count/tf-idf matrices as 14. returned by the vectorizers in :mod:`sklearn.feature_extraction.text`. In 15. that context, it is known as latent semantic analysis (LSA). 16. 17. This estimator supports two algorithms: a fast randomized SVD solver, 18. and 19. a "naive" algorithm that uses ARPACK as an eigensolver on `X * X.T` or 20. `X.T * X`, whichever is more efficient. 21. 22. 23. LinearRegression 24. class LinearRegression Found at: sklearn.linear_model._base 25. 26. class LinearRegression(MultiOutputMixin, RegressorMixin, LinearModel): 27. """ 28. Ordinary least squares Linear Regression. 29. 30. LinearRegression fits a linear model with coefficients w = (w1, ..., wp) 31. to minimize the residual sum of squares between the observed targets in 32. the dataset, and the targets predicted by the linear approximation. 33. 34. 35. Lasso 36. class Lasso Found at: sklearn.linear_model._coordinate_descent 37. class Lasso(ElasticNet): 38. """Linear Model trained with L1 prior as regularizer (aka the Lasso) 39. 40. The optimization objective for Lasso is:: 41. 42. (1 / (2 * n_samples)) * ||y - Xw||^2_2 + alpha * ||w||_1 43. 44. Technically the Lasso model is optimizing the same objective function as 45. the Elastic Net with ``l1_ratio=1.0`` (no L2 penalty). 46. 47. Read more in the :ref:`User Guide <lasso>`. 48. 49. 50. 51. 52. 53. 54. 55. 56. 57. 58. 59.