Created by Wang, Jerry, last modified on Sep 22, 2015

Running on a single machine (local mode)

Running in local mode is very simple: just run the following command, assuming the current directory is $SPARK_HOME.

MASTER=local bin/spark-shell

"MASTER=local" indicates that Spark is running in single-machine mode.
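The same master setting can also be supplied from code rather than from the environment. The following is a minimal sketch, assuming the Spark core library is on the classpath; the object name `LocalModeSketch`, the helper `countMatching`, and the in-memory sample lines are hypothetical and only there for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical standalone program; not part of the original walkthrough.
object LocalModeSketch {

  // Builds a context for the given master URL and counts the sample lines
  // that contain the word "Spark".
  def countMatching(master: String): Long = {
    // "local" runs Spark inside this JVM with a single worker thread.
    val conf = new SparkConf().setMaster(master).setAppName("LocalModeSketch")
    val sc = new SparkContext(conf)
    try {
      // Assumed sample data, used instead of a file so the sketch needs no input.
      val lines = sc.parallelize(Seq("Apache Spark", "local mode", "Spark shell"))
      lines.filter(_.contains("Spark")).count()
    } finally {
      sc.stop() // always release the context, even if the job fails
    }
  }

  def main(args: Array[String]): Unit =
    println("lines containing Spark: " + countMatching("local"))
}
```

Inside spark-shell this is unnecessary, because the shell creates `sc` itself.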

scala> val textFile = sc.textFile("README.md")

val textFile = sc.textFile("jerry.test")

15/08/08 19:14:32 INFO MemoryStore: ensureFreeSpace(182712) called with curMem=664070, maxMem=278302556

15/08/08 19:14:32 INFO MemoryStore: Block broadcast_7 stored as values in memory (estimated size 178.4 KB, free 264.6 MB)

15/08/08 19:14:32 INFO MemoryStore: ensureFreeSpace(17237) called with curMem=846782, maxMem=278302556

15/08/08 19:14:32 INFO MemoryStore: Block broadcast_7_piece0 stored as bytes in memory (estimated size 16.8 KB, free 264.6 MB)

15/08/08 19:14:32 INFO BlockManagerInfo: Added broadcast_7_piece0 in memory on localhost:37219 (size: 16.8 KB, free: 265.3 MB)

15/08/08 19:14:32 INFO SparkContext: Created broadcast 7 from textFile at <console>:21

textFile: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[12] at textFile at <console>:21

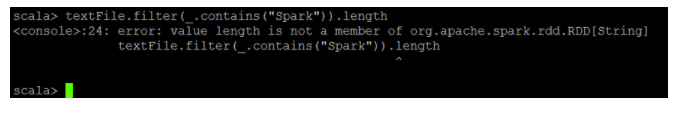

then: textFile.filter(_.contains("Spark")).count

or: textFile.flatMap(_.split(" ")).map((_, 1))

15/08/08 19:16:27 INFO FileInputFormat: Total input paths to process : 1

15/08/08 19:16:27 INFO SparkContext: Starting job: count at <console>:24

15/08/08 19:16:27 INFO DAGScheduler: Got job 0 (count at <console>:24) with 1 output partitions (allowLocal=false)

15/08/08 19:16:27 INFO DAGScheduler: Final stage: ResultStage 0(count at <console>:24)

15/08/08 19:16:27 INFO DAGScheduler: Parents of final stage: List()

15/08/08 19:16:27 INFO DAGScheduler: Missing parents: List()

15/08/08 19:16:27 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[2] at filter at <console>:24), which has no missing parents

15/08/08 19:16:27 INFO MemoryStore: ensureFreeSpace(3184) called with curMem=156473, maxMem=278302556

15/08/08 19:16:27 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 3.1 KB, free 265.3 MB)

15/08/08 19:16:27 INFO MemoryStore: ensureFreeSpace(1855) called with curMem=159657, maxMem=278302556

15/08/08 19:16:27 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 1855.0 B, free 265.3 MB)

15/08/08 19:16:27 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost:42648 (size: 1855.0 B, free: 265.4 MB)

15/08/08 19:16:27 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:874

15/08/08 19:16:27 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[2] at filter at <console>:24)

15/08/08 19:16:27 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

15/08/08 19:16:27 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, PROCESS_LOCAL, 1415 bytes)

15/08/08 19:16:27 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

15/08/08 19:16:27 INFO HadoopRDD: Input split: file:/root/devExpert/spark-1.4.1/README.md:0+3624

15/08/08 19:16:27 INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

15/08/08 19:16:27 INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

15/08/08 19:16:27 INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

15/08/08 19:16:27 INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

15/08/08 19:16:27 INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

15/08/08 19:16:27 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1830 bytes result sent to driver

15/08/08 19:16:27 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 80 ms on localhost (1/1)

15/08/08 19:16:27 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

15/08/08 19:16:27 INFO DAGScheduler: ResultStage 0 (count at <console>:24) finished in 0.093 s

15/08/08 19:16:27 INFO DAGScheduler: Job 0 finished: count at <console>:24, took 0.176689 s

res0: Long = 19
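
The log above belongs to the count call: in Spark, filter, flatMap and map only describe a new RDD, and a job starts when an action such as count is invoked. To see what the two expressions compute without starting Spark at all, the same calls can be tried on an ordinary Scala collection. This is a rough sketch; the sample lines are made up and merely stand in for the contents of README.md, and the final grouping step is one possible continuation that the walkthrough itself does not show.

```scala
// Hypothetical sample lines standing in for the file contents.
val lines = Seq(
  "# Apache Spark",
  "Spark is a fast and general cluster computing system",
  "It provides high-level APIs in Scala, Java, and Python"
)

// Same predicate as textFile.filter(_.contains("Spark")).count,
// applied to a plain Seq instead of an RDD.
val sparkLines = lines.filter(_.contains("Spark")).size

// Same shape as textFile.flatMap(_.split(" ")).map((_, 1)):
// every word becomes a (word, 1) pair.
val pairs = lines.flatMap(_.split(" ")).map((_, 1))

// Assumed next step: add up the 1s per word to get a word count.
val wordCounts = pairs.groupBy(_._1).map { case (word, ps) => (word, ps.map(_._2).sum) }
```

The block can be pasted into any Scala REPL, including spark-shell, since it uses only the standard collections.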