It has been 10 years since DPDK was first open-sourced in 2013. Starting as a simple L2 packet forwarding tool, it has kept evolving rapidly. DPDK now covers software-defined networking (SDN), host virtualization, network protocol stacks, network security, compression and encryption, and even GPU devices and deep learning frameworks. It has received extensive attention and research worldwide, and has become an important tool for building high-performance network applications.

The most important feature of DPDK is the userspace poll mode driver. Compared with the kernel's interrupt mode, polling has lower latency. Because it avoids context switches between kernel and user space, it also saves CPU overhead, which raises the maximum packet processing capability of the system.

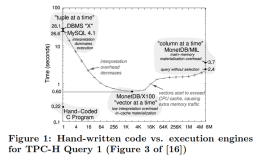

Over time, DPDK developers became dissatisfied with L2/L3 applications alone, and calls to support the TCP/IP protocol stack have never stopped. However, perhaps because a network protocol stack is so complex, DPDK still has no official solution even today, 10 years later. The open source community has made attempts of its own, such as mTCP, F-Stack, Seastar, and dpdk-ans. These solutions generally fall into two types: one ports an existing protocol stack such as FreeBSD's, and the other rewrites the stack from scratch. These projects report that they usually achieve better performance than the native sockets of the Linux kernel.

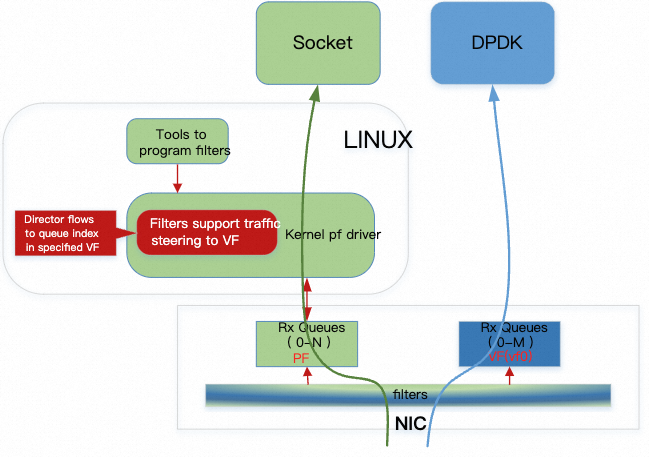

However, compared with traditional Linux network applications, DPDK development is still somewhat difficult. The first hurdle is hardware: DPDK development usually requires a physical machine. Some NICs can only be handed to DPDK through igb_uio or vfio passthrough, so it is often necessary to configure multiple IP addresses on the physical machine and enable SR-IOV. One VF device is used for debugging, while the PF (with the main IP) keeps the management network working. Mellanox NICs are an exception: their DPDK driver can coexist with the kernel driver, so the NIC does not need to be monopolized by the app.

Thanks to hardware-assisted technologies such as Flow Bifurcation, NICs can now pick out only the traffic you care about (for example a certain TCP port or a certain IP address) before it enters the kernel. The NIC classifies and filters flows and forwards the matching ones to the DPDK app, while the remaining traffic is still delivered to the kernel. Therefore there is no need to assign a separate IP to the management network, which simplifies the development process. The specific steps are covered later.

In terms of software, a DPDK program typically runs one big while loop on a CPU core. Inside the loop you call the driver API to send and receive packets, and add your own business logic to process them. The overall architecture is an async-callback event model. Because the driver polls continuously, each iteration must finish as quickly as possible so that the next one can start, so the code should generally contain no blocking calls such as sleep. Typical DPDK applications used to be gateways and firewalls, which focus purely on packet delivery. When they do need file I/O, cross-thread communication is required so that the I/O task can be handed off to dedicated I/O threads. For instance, DPDK and qemu communicate through shared memory.

Developers who are used to Linux application development may find this limiting. Although many network servers on Linux also use async-callback engines (based on epoll), developers can still achieve concurrency easily by creating a new execution unit, with the help of thread pools or coroutine pools. Moreover, legacy business code may be full of locks, semaphores, condition variables, and so on. Porting such code into the while loop of DPDK is never easy. Take another case: if the legacy code sleeps periodically for 1 second, then in the new DPDK program we have to check the current time in every poll iteration and decide whether 1 second has elapsed. And what if there are more sleeps?

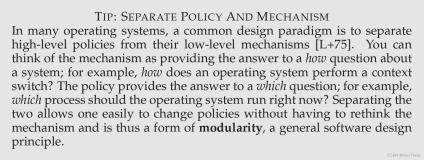

In short, these problems come from two different programming models. One is event-driven; the other is concurrency based on multiple execution units. Some people think the async event model is inherently more performant, which is a misunderstanding. In their 1979 article "On the Duality of Operating System Structures", Lauer and Needham argued that events and threads are dual, which means:

- A program written in one model can be mapped directly to an equivalent program based on another model.

- The two models are logically equivalent, although they use different concepts and provide different syntax.

- Assuming the same scheduling policy is used, the performance of programs written in both models is essentially the same.

So we try to use the coroutines from PhotonLibOS (hereafter referred to as Photon) to simplify the development of DPDK applications with this concurrency model, and to provide more functionality, such as locks, timers and file I/O. First of all, we need to choose a userspace network protocol stack. After some investigation we chose Tencent's open source F-Stack project, which ports the entire FreeBSD 11.0 network protocol stack on top of DPDK. It also trims some code and provides a set of POSIX-style APIs, such as socket, epoll and kqueue. Its epoll is emulated on top of kqueue, since the stack is essentially FreeBSD.

The following code is taken from the F-Stack example, and its style is still that of a while loop. You provide a loop function and register it with DPDK. Packet send/recv happens around each call of that function and is done automatically by the framework. Although F-Stack provides an event API (which is a prerequisite for adopting coroutines), it has no scheduler, so the code looks much like traditional DPDK code.

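```c
/* epfd, sockfd, ev, events and html are globals defined elsewhere in the example. */
int loop(void *arg)
{
    /* Poll for events without blocking (timeout is 0). */
    int nevents = ff_epoll_wait(epfd, events, MAX_EVENTS, 0);
    int i;

    for (i = 0; i < nevents; ++i) {
        if (events[i].data.fd == sockfd) {
            /* Listening socket: accept every pending connection. */
            while (1) {
                int nclientfd = ff_accept(sockfd, NULL, NULL);
                if (nclientfd < 0) {
                    break;
                }

                /* Watch the new connection for readable events. */
                ev.data.fd = nclientfd;
                ev.events = EPOLLIN;
                if (ff_epoll_ctl(epfd, EPOLL_CTL_ADD, nclientfd, &ev) != 0) {
                    printf("ff_epoll_ctl failed:%d, %s\n", errno, strerror(errno));
                    break;
                }
            }
        } else {
            if (events[i].events & EPOLLERR) {
                /* Error on the connection: just close it. */
                ff_epoll_ctl(epfd, EPOLL_CTL_DEL, events[i].data.fd, NULL);
                ff_close(events[i].data.fd);
            } else if (events[i].events & EPOLLIN) {
                char buf[256];
                /* ssize_t, so that a negative return value is not read as a huge positive length. */
                ssize_t readlen = ff_read(events[i].data.fd, buf, sizeof(buf));
                if (readlen > 0) {
                    ff_write(events[i].data.fd, html, sizeof(html) - 1);
                } else {
                    /* EOF or read error: remove and close the connection. */
                    ff_epoll_ctl(epfd, EPOLL_CTL_DEL, events[i].data.fd, NULL);
                    ff_close(events[i].data.fd);
                }
            } else {
                printf("unknown event: %8.8X\n", events[i].events);
            }
        }
    }
    return 0;
}
```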

We want to integrate Photon's scheduler with F-Stack's while loop, with the goal of running both in the same thread. Photon usually uses epoll or io_uring as the main event engine of the scheduler. The engine registers the fds of interest, whether they are file fds or sockets. When an I/O event completes, the corresponding coroutine is woken up and runs the rest of its code.

So which Photon function should serve as the while loop for F-Stack? First, we built a kqueue event engine inside Photon, with the same interface as the two existing engines, except that when it polls kqueue for F-Stack the timeout parameter is always 0. The reasoning is simple: if the NIC has produced I/O by the time of the poll, the event is recorded in the coroutine engine for a later wake-up; otherwise the poll returns immediately so that the next round gets a chance to run. Next, in addition to this zero-timeout poll in each round, we also need a function that lets a coroutine actively yield the CPU (photon::thread_yield()). Only then does the main event engine (io_uring) get a chance to run its own poll in the gaps between busy polls. The execution probabilities of the two polls are adjustable in the scheduler.

At first we worried that polling two event engines in the same thread would reduce performance. However, testing showed that when the timeout of io_uring_wait_cqe_timeout is 0, the call costs only one syscall and one atomic operation. That is a few microseconds, which is low compared with the latency of the NIC. Therefore, as long as the main event engine is not busy, the performance impact on the DPDK loop is very small. Detailed test data follows later in this article.

Let's take a look at what the Photon coroutine + F-Stack + DPDK code finally looks like. As shown below, you first initialize the FSTACK_DPDK environment on the current OS thread (Photon calls it a vcpu), as an I/O engine. When photon::init completes, the program enters busy polling. Next comes the most interesting part: we can start a new coroutine (Photon calls it a thread) anywhere in the code. Inside the coroutine we can sleep, take locks, or perform file I/O. We can also use the socket server provided by photon::net to implement an echo server similar to the F-Stack example above. For each new connection, the server creates a new coroutine to handle it. Since this is no longer an event-driven async-callback mechanism, there is no need for a long chain of if ... else to check event status, and the overall code style is simple and intuitive.

```cpp
photon::init(photon::INIT_EVENT_DEFAULT, photon::INIT_IO_FSTACK_DPDK);

// DPDK will start running from here, as an I/O engine.
// Although busy polling, the scheduler will ensure that all threads in the current vcpu
// have a chance to run when their events arrive.

auto thread = photon::thread_create11([] {
    while (true) {
        photon::thread_sleep(1);
        // Sleep every 1 second and do something ...
    }
});

auto handler = [&](photon::net::ISocketStream* sock) -> int {
    auto file = photon::fs::open_local("file", O_WRONLY, 0644, ioengine_iouring);
    char buf[buf_size];
    while (true) {
        ssize_t ret = sock->recv(buf, buf_size);
        if (ret <= 0) break;   // Stop on error or when the peer closes the connection
        file->write(buf, ret);
        sock->send(buf, ret);
    }
    return 0;
};

// Register handler and configure server ...
auto server = photon::net::new_fstack_dpdk_socket_server();
server->set_handler(handler);
server->run();

// Sleep forever ...
photon::thread_sleep(-1UL);
```

In addition, the new code is more flexible. When we don't need F-Stack + DPDK, we just drop the photon::INIT_IO_FSTACK_DPDK parameter and replace new_fstack_dpdk_socket_server with new_tcp_socket_server, which switches back to a normal server based on the Linux kernel socket.

We built an echo server with the above code for a performance test. The results are as follows:

| Server socket type | Client traffic type (Ping-Pong) | Client traffic type (Streaming) |
| --- | --- | --- |
| Linux 6.x kernel + Photon coroutine | 1.03 Gb/s | 2.60 Gb/s |
| F-Stack + DPDK + Photon coroutine | 1.23 Gb/s | 3.74 Gb/s |

Note that we separate client traffic into two types, Streaming and Ping-Pong. Streaming measures server performance under high throughput: the send coroutine and the recv coroutine each run their own infinite loop. In practice, its likely users are multiplexing protocols such as RPC and HTTP 2.0. Ping-Pong is generally used to evaluate scenarios with a large number of connections: for each connection, the client sends a packet, waits for the reply, and only then sends the next one. As for the test environment, the NIC bandwidth is 25 Gb/s, the server is single-threaded, and the packet size is 512 bytes.

The new socket on F-Stack + DPDK clearly performs better than the native kernel socket. Ping-Pong mode improves by about 20%, and Streaming mode by more than 40%. If we keep increasing the poll ratio of the main event engine (io_uring), for example by adding more sleeps or file I/O operations until the ratio of the two polls reaches 1:1, the performance of the echo server drops by about 5%. We also compared Photon on F-Stack with the original F-Stack, and the results are basically the same, indicating that Photon adds no noticeable overhead. This is because each context switch of a Photon coroutine takes less than 10 nanoseconds.

Readers familiar with Photon may know that since it was open-sourced in 2022, it has been benchmarked against many comparable projects. The performance data of the echo server implemented with each framework is listed on Photon's homepage. With its efficient scheduler, high-performance assembly code on critical paths, and use of the io_uring asynchronous engine, Photon still ranks first. The DPDK integration introduces a competitor from a different category (interrupt vs polling), and it is also a case of Photon surpassing itself.

Having discussed performance, let's return to the original intention of this article: how to use a coroutine library to make DPDK applications easier to develop and to hide the underlying differences. We believe that Photon's attempt in this area deserves more attention. With the Photon library, developers can port legacy code to the DPDK framework more easily and spend more time on business logic.

Finally, so that readers can verify the above results, we have prepared a document. It describes in detail how to install the driver for a Mellanox NIC, configure SR-IOV, install F-Stack and DPDK, configure Flow Bifurcation, and run the Photon echo server. You are welcome to check it out.