ChatGLM3-6B fine-tuning

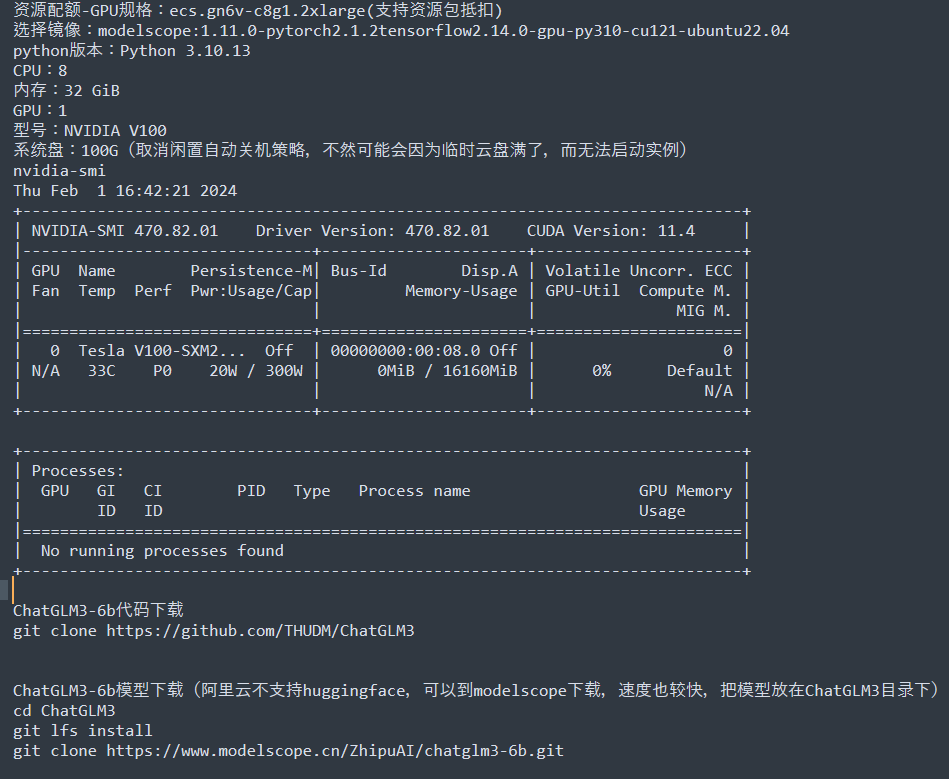

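Environment:

The model comes from ModelScope, and fine-tuning uses the official ChatGLM fine-tuning notebook, ChatGLM3/finetune_demo/lora_finetune.ipynb

Error: CUDA drivers not found

However, checking the CUDA driver shows the following: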

nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Wed_Nov_22_10:17:15_PST_2023

Cuda compilation tools, release 12.3, V12.3.107

Build cuda_12.3.r12.3/compiler.33567101_0

The full fine-tuning error output is as follows:

2024-02-02 10:41:22.129186: I tensorflow/tsl/cuda/cudart_stub.cc:28] Could not find cuda drivers on your machine, GPU will not be used.

2024-02-02 10:41:22.176394: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2024-02-02 10:41:22.176431: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2024-02-02 10:41:22.176460: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2024-02-02 10:41:22.185307: I tensorflow/tsl/cuda/cudart_stub.cc:28] Could not find cuda drivers on your machine, GPU will not be used.

2024-02-02 10:41:22.185657: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 AVX512F FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-02-02 10:41:23.415865: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

/opt/conda/lib/python3.10/site-packages/torch/cuda/__init__.py:138: UserWarning: CUDA initialization: The NVIDIA driver on your system is too old (found version 11040). Please update your GPU driver by downloading and installing a new version from the URL: http://www.nvidia.com/Download/index.aspx Alternatively, go to: https://pytorch.org to install a PyTorch version that has been compiled with your version of the CUDA driver. (Triggered internally at ../c10/cuda/CUDAFunctions.cpp:108.)

return torch._C._cuda_getDeviceCount() > 0

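Thanks to everyone for the helpful answers; as a beginner I had far too many questions. I have since worked out a little on my own: https://www.zhihu.com/column/c_1758152537000435713. Feedback is welcome.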

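According to the error message, the NVIDIA driver on your system is too old (found version 11040, which corresponds to CUDA 11.4). Either update your GPU driver, or install a PyTorch build compiled for the CUDA version your driver supports, as the warning itself suggests.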
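To make the "found version 11040" part of the warning easier to read, here is a minimal sketch that decodes it. It assumes the usual CUDA convention of encoding a version as 1000 * major + 10 * minor; the function name `decode_cuda_version` is made up for this illustration and is not part of PyTorch or CUDA.

```python
def decode_cuda_version(encoded):
    """Split a CUDA-style integer version into (major, minor).

    Assumes the encoding 1000 * major + 10 * minor, so 11040 means 11.4.
    """
    major, rest = divmod(encoded, 1000)
    minor = rest // 10
    return major, minor


# The value reported in the PyTorch warning from the question.
print(decode_cuda_version(11040))  # (11, 4)
```

Read this way, the driver supports up to CUDA 11.4, while the toolkit shown by nvcc in the question is 12.3, which is consistent with the "driver too old" warning.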

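Either a compatible NVIDIA CUDA driver is not correctly installed in your environment, or the system cannot find it. The CUDA driver is a key component for GPU-accelerated computing and is essential for running large language models such as ChatGLM3-6B.

Although your CUDA compiler reports version 12.3, TensorFlow reports that it cannot find the CUDA drivers. Note that nvcc reports the toolkit version, not the driver version. One possible cause is that environment variables are set incorrectly and the CUDA library path has not been added to the system PATH. Please check the following:

Check whether TensorFlow correctly detects the CUDA device by running the following code in Python: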

import tensorflow as tf

tf.config.list_physical_devices('GPU')

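If the problem persists, make sure your TensorFlow and PyTorch versions are compatible with your CUDA version, and configure the environment according to the official guides.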
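Since the decisive warning in the log comes from PyTorch rather than TensorFlow, a similar check on the PyTorch side may help. The following is a rough sketch; the helper name `describe_torch_cuda` is hypothetical, and it only relies on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```python
def describe_torch_cuda():
    """Report which CUDA version PyTorch was built with and whether a GPU is usable."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False}
    return {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # None for CPU-only builds, otherwise a string such as "12.1".
        "built_with_cuda": torch.version.cuda,
        # False when the driver is missing or too old for this build.
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch_cuda())
```

If `built_with_cuda` is newer than what the installed driver supports, `cuda_available` will most likely be False, which matches the situation in the question.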

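When running ChatGLM3-6B or any other GPU-based deep learning model, the error "Could not find cuda drivers" means that the runtime environment failed to detect the CUDA driver, or that the CUDA driver version is incompatible with the deep learning framework or model in use. Here are several possible steps to resolve it: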

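Confirm the CUDA installation.

Check the driver version.

Environment variables: add /usr/local/cuda/bin to PATH.

Check CUDA device visibility: use the nvidia-smi command to confirm that the GPU is recognized by the operating system and that the CUDA driver is working properly.

Check compatibility with the deep learning framework.

CUDA support in container environments: enable NVIDIA GPU support with flags such as --runtime=nvidia.

Restart the service.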
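The PATH and nvidia-smi checks above can be sketched in Python roughly as follows. The helper names `cuda_path_entries` and `driver_version_from_nvidia_smi` are made up for this example, and the sketch assumes that nvidia-smi, if present, supports the `--query-gpu=driver_version --format=csv,noheader` options.

```python
import os
import shutil
import subprocess


def cuda_path_entries(path_value=None):
    """Return the PATH entries that mention CUDA, e.g. /usr/local/cuda/bin."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    return [p for p in path_value.split(os.pathsep) if "cuda" in p.lower()]


def driver_version_from_nvidia_smi():
    """Ask nvidia-smi for the driver version; return None if it cannot be obtained."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # nvidia-smi is not on PATH
    try:
        result = subprocess.run(
            [exe, "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None  # the driver is probably not loaded or no GPU is visible
    lines = result.stdout.strip().splitlines()
    return lines[0].strip() if lines else None


print("CUDA entries on PATH:", cuda_path_entries())
print("nvcc found at:", shutil.which("nvcc"))
print("Driver version:", driver_version_from_nvidia_smi())
```

Inside a container, a missing nvidia-smi or a None driver version usually suggests the container was started without GPU support, which is where the --runtime=nvidia step comes in.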