ADS层:Application Data Service

1.ADS层保存的数据是最终的统计结果,无需做进一步的计算 --不需要列式存储,也不需要snaapy压缩 2.统计结果的目的是对外提供服务,所以表不会最终数据的存储位置 --需要将表中的数据同步到第三方存储(MySQL) --ADS层的表最好是行式存储:tsv(DataX) --压缩格式采用gzip 3.统计结果的数据量不会太多,ADS层的表无需分区设计 4.表的设计 --ODS层:表的结构依托于数据源的数据结构 --DIM层:遵循维度建模的维度表的设计理念(维度越丰富越好) --DWD层:遵循维度模型的事实表的设计概念(粒度越细越好) --ADS层:客户要啥加啥

基础概念

维度:分析数据的角度 颗粒度:分析数据的详细程度 统计周期:统计的时候,数据统计时间范围(窗口) 统计粒度:分析数据的具体角度,称为统计粒度(站在哪一个角度统计数据) 指标:客户想要的一个结果数值 --2022-06-08(最近一周):02,03,04,05,06,07,08 --2022-06-09(最近一周):03,04,05,06,07,08,09 --2022-06-10(最近一周):04,05,06,07,08,09,10(类似于spark的滑动窗口)

各品牌商品下单统计

--统计行为:下单 --分析的角度:品牌 --指标:下单数量,下单人数 --建表语句 --统计时间:以获取数据的那一天为准 ROP TABLE IF EXISTS ads_order_stats_by_tm; CREATE EXTERNAL TABLE ads_order_stats_by_tm ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `tm_id` STRING COMMENT '品牌ID', `tm_name` STRING COMMENT '品牌名称', `order_count` BIGINT COMMENT '下单数', `order_user_count` BIGINT COMMENT '下单人数' ) COMMENT '各品牌商品下单统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_stats_by_tm/';

数据装载

--统计指标分析 --将复杂的指标的分解成小的,简单的指标 --指标分析 --1.原子指标(最简单的统计值) --业务过程(行为)+ 度量值+ 聚合逻辑 下单 + (order_id)次数+count() --2.派生指标 --基于原子指标,增加其他的条件,角度之类 --派生指标=原子指标+统计周期(最近一天)+业务限定+统计粒度 --统计周期和业务限定其实都是数据筛选条件,但是不一样 --统计周期一般指的就是数据时间范围(分区字段的过滤,过滤文件夹) --业务限定一般指的就是数据约束条件(数据字段的过滤,过滤文件) select count(distanct order_id) from dwd_trade_order_detail_inc select count(distanct order_id) from dwd_trade_order_detail_inc where dt>data_sub('2022-06-08',0) and dt<='2022-06-08' and 品牌='华为' group byu 品牌 select count(distanct order_id) from dwd_trade_order_detail_inc where dt>data_sub('2022-06-08',6) and dt<='2022-06-08'

派生指标

派生指标=原子指标+统计周期+业务限定+统计粒度

最近一天各省份手机品类订单总额=订单总额+最近一天+品类为手机+省份

定义业务过程、度量值和聚合逻辑

限定统计范围,相当与SQL中的where

定义统计粒度,相当于SQL中Group by

--最近1天各个品牌下单表,下单人数统计 --统计周期其实就是数据的时间范围,一般会在where字句中添加dt条件 --业务限定其实就是数据约束,一般会在where子句中添加业务条件 --统计粒度其实就是数据的分析维度,一般会在group by 子句中添加维度字段 -- 当数据中的Hash表结构为空时抛出类型转换异常,禁用相应优化即可 set hive.mapjoin.optimized.hashtable=false; insert overwrite table ads_sku_cart_num_top3_by_cate select * from ads_sku_cart_num_top3_by_cate union select '2022-06-08' dt, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sku_id, sku_name, cart_num, rk from ( select sku_id, sku_name, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, cart_num, rank() over (partition by category1_id,category2_id,category3_id order by cart_num desc) rk from ( select sku_id, sum(sku_num) cart_num from dwd_trade_cart_full where dt='2022-06-08' group by sku_id )cart left join ( select id, sku_name, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name from dim_sku_full where dt='2022-06-08' )sku on cart.sku_id=sku.id )t1 where rk<=3; -- 优化项不应一直禁用,受影响的SQL执行完毕后打开 set hive.mapjoin.optimized.hashtable=true;

各品类商品下单统计

统计周期 |

统计粒度 |

指标 |

说明 |

最近1、7、30日 |

品类 |

下单数 |

略 |

最近1、7、30日 |

品类 |

下单人数 |

略 |

DROP TABLE IF EXISTS ads_order_stats_by_cate; CREATE EXTERNAL TABLE ads_order_stats_by_cate ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `category1_id` STRING COMMENT '一级品类ID', `category1_name` STRING COMMENT '一级品类名称', `category2_id` STRING COMMENT '二级品类ID', `category2_name` STRING COMMENT '二级品类名称', `category3_id` STRING COMMENT '三级品类ID', `category3_name` STRING COMMENT '三级品类名称', `order_count` BIGINT COMMENT '下单数', `order_user_count` BIGINT COMMENT '下单人数' ) COMMENT '各品类商品下单统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_stats_by_cate/';

insert overwrite table ads_order_stats_by_cate select * from ads_order_stats_by_cate union select * from (--最近一天 select '2022-06-08', 1, `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` , count(distinct order_id) as order_count , count(distinct user_id) as order_user_count from( select order_id, user_id, sku_id from dwd_trade_order_detail_inc where dt='2022-06-08' )od left join( select category1_id, category2_id, category3_id, category1_name, category2_name, category3_name, from dim_sku_full where dt='2022-06-08' )sku on od.sku_id=sku.id group by `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` union all --最近七天 select '2022-06-08', 7, `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` , count(distinct order_id) as order_count , count(distinct user_id) as order_user_count from( select order_id, user_id, sku_id from dwd_trade_order_detail_inc where dt>=data_sub('2022-06-08',6) and dt<='2022-06-08' )od left join( select category1_id, category2_id, category3_id, category1_name, category2_name, category3_name, from dim_sku_full where dt='2022-06-08' #维度表不用改,我们没有逻辑删除,数据不会少 )sku on od.sku_id=sku.id group by `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` union all --最近30天 select '2022-06-08', 30, `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` , count(distinct order_id) as order_count , count(distinct user_id) as order_user_count from( select order_id, user_id, sku_id from dwd_trade_order_detail_inc where dt>=data_sub('2022-06-08',29) and dt<='2022-06-08' )od left join( select category1_id, category2_id, category3_id, category1_name, category2_name, category3_name, from dim_sku_full where dt='2022-06-08' #维度表不用改,我们没有逻辑删除,数据不会少 )sku on od.sku_id=sku.id group by `category1_id` , `category1_name` , `category2_id` , `category2_name` , `category3_id` , `category3_name` )t

性能如果提升?

--减少join

--可以减少数据量

--减少重复量

--优化思路

--将最近1天的数据保存在一张表中

--最近7天和最近30天数据从最近1天的统计表中获取数据,进行进一步计算

通过预聚合中取数据

--ODS(x)

--DIM(x)

--DWD(x)

--DWS(OK)

--预聚合,保存中间计算结果

--中间计算结果表不是最终结果表

--需要进一步的计算

--存储方式:列式存储

--压缩方式:snappy

--ADS(x)

DROP TABLE IF EXISTS dws_order_stats_by_cate; CREATE EXTERNAL TABLE dws_order_stats_by_tm_1d ( `tm_id`, STRING COMMENT '品牌ID', `tm_name` STRING COMMENT '品牌名称', `order_count` BIGINT COMMENT '下单数', `order_user_count` BIGINT COMMENT '下单人数' ) COMMENT '各品类商品下单统计' partition by(`dt` String) stored as orc location '/warehourse/gmall/dws/dws_order_stats_by_tm_1d/' tblproperties('orc.compress'='snappy'); --将最近1天的统计结果保存在1d表中 insert overwriter table dws_order_stats_by_tm_1d partition(dt='2022-06-08') select `tm_id`, `tm_name`, count(distinct order_id) as `order_count_1d` , count(distinct user_id) as `order_user_count` from( select order_id, user_id, sku_id from dwd_trade_order_detail_inc where dt='2022-06-08' )od left join( select id,tm_id,tm_name from dim_sku_full where dt='2022-06-08' )sku on od.sku_id=sku.id group by tm_id, tm_name; --从1d表获取最近1天,最近7天,最近30天数据,保存到ADS层的表中 insert overwrite table ads_order_stats_by_tm select * from ads_order_status_by_tm union select * from(--最近1天 select '2022-06-08', 1, tm_id, tm_name, order_count_1d, order_user_count_1d from dws_order_stats_by_tm_1d where dt='2022-06-08' union all --最近七天 select '2022-06-08', 7, tm_id, tm_name, sum(order_count_1d), sum(order_user_count_1d) from dws_order_stats_by_tm_1d where dt>=date_sub('2022-06-08',6) and dt<='2022-06-08' group by tm_id, tm_name union all --最近30天 select '2022-06-08', 7, tm_id, tm_name, sum(order_count_1d), sum(order_user_count_1d) from dws_order_stats_by_tm_1d where dt>=date_sub('2022-06-08',29) and dt<='2022-06-08' group by tm_id, tm_name )t

ADS层-各品类商品下单统计--SQL优化

--当前的问题:读了三次相同的数据,使用三个不同的场景

--解决思路:读了一次数据,使用三个场景(炸裂)

炸裂【1,2,3】=》【1,2,3】,【1,2,3】,【1,2,3】

扁平化【1,2,3】=》1,2,3

select * from (select 'a' name) t lateral view explode(array(1,2,3)) tmp as name1

where name1!=7

1.获取时间范围最大的数据集

2.将查询的数据集在内存中进行炸裂操作(三份),变成多份,增加标记

3.将炸裂后的数据进行筛选,保存有效数据

4.将过滤的后的数据按照标记进行分组,然后进行统计

insert overwrite table ads_order_stats_by_tm select * from ads_order_status_by_tm union select '2022-06-08', days, tm_id, tm_name, sum(order_count_1d), sum(order_user_count_1d) from dws_order_stats_by_tm_1d lateral view explode(`array`(1,7,30)) tmp as days where dt>=date_sub('2022-06-08',days-1) and dt<='2022-06-08' group by days,tm_id, tm_name

新增下单用户统计

需求说明如下。

统计周期 |

指标 |

说明 |

最近1、7、30日 |

新增下单人数 |

略 |

以前这个用户没有下过订单,最近1天,第一回下订单,称为新增用户下单用户

DROP TABLE IF EXISTS ads_new_order_user_stats; CREATE EXTERNAL TABLE ads_new_order_user_stats ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `new_order_user_count` BIGINT COMMENT '新增下单人数' ) COMMENT '新增下单用户统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_new_order_user_stats/';

--新增:以前没做过,今天第一回 --判断1天前用户没有出现过 insert overwrite table ads_new_order_user_stats select * from ads_order_new_order_user_stats union select *from(select '2022-06-08', 1, count(distinct user_id) from dwd_trade_order_detail_inc where dt='2022-06-08' and user_id not in( select user_id from dwd_trade_order_detail_inc where dt<'2022-06-08' ) union all select '2022-06-08', 7, count(distinct user_id) from dwd_trade_order_detail_inc where dt>=date_sub('2022-06-08',6) and dt<='2022-06-08' and user_id not in( select user_id from dwd_trade_order_detail_inc where dt<date_sub('2022-06-07',6) ) union all select '2022-06-08', 30 count(distinct user_id) from dwd_trade_order_detail_inc where dt>=date_sub('2022-06-08',29) and dt<='2022-06-08' and user_id not in( select user_id from dwd_trade_order_detail_inc where dt<date_sub('2022-06-07',29) ))t

--需求:统计最近1天,7天,30天新增注册用户

DROP TABLE IF EXISTS ads_new_reg_user_stats; CREATE EXTERNAL TABLE ads_new_order_user_stats ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `new_reg_user_count` BIGINT COMMENT '新增注册人数' ) COMMENT '新增下单用户统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_new_reg_user_stats/';

insert overwrite table ads_new_new_user_status select * from ads_new_reg_user_stats union select *from( select '2022-06-08', 1, count(*) from dwd_user_register_inc where dt>='2022-06-08' union all select '2022-06-08', 7, count(*) from dwd_user_register_inc where date_sub(dt>='2022-06-08',6) and dt<=2022-06-08' union all select '2022-06-08', 30, count(*) from dwd_user_register_inc where date_sub(dt>='2022-06-08',29) and dt<=2022-06-08')t

--需求:统计最近1天,7天,30天新增下单用户(难)

--新增下单(用户的第一次下单)

--需求:统计最近1天,7天,30天新增注册用户(简单)

--新增注册(用户的第一次注册)

DROP TABLE IF EXISTS dws_first_order_stats; CREATE EXTERNAL TABLE dws_first_order_stats ( `user_id` String comment '用户ID', `first_order_date` Bigint common '用户首次下单时间' ) COMMENT '新增下单用户统计' partition by(`dt` String) stored as orc location '/warehourse/gmall/dws/dws_first_order_stats/' tblproperties('orc.compress'='snappy'); --取8号 insert overwrite table dws_first_order_stats partition (dt='2022-06-08') select user_id, min(date_id) from dwd_trade_order_detail_inc group by user_id --取9号 insert overwrite table dws_first_order_stats partition (dt='2022-06-09') select user_id, min(date_id) from dwd_trade_order_detail_inc group by user_id

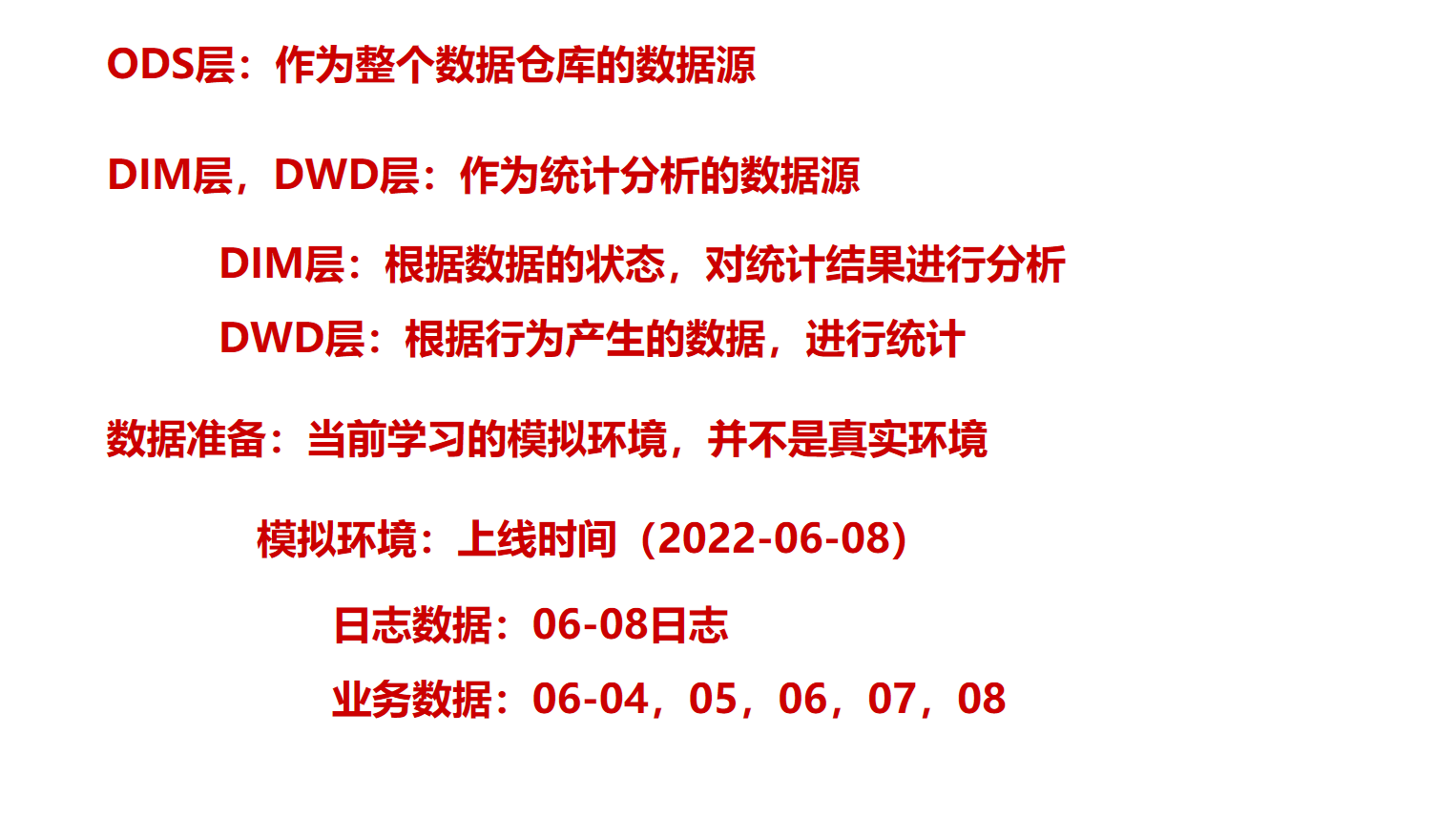

nd

各渠道流量统计(流量主题)

需求说明如下

统计周期 |

统计粒度 |

指标 |

说明 |

最近1/7/30日 |

渠道 |

访客数 |

统计访问人数 |

最近1/7/30日 |

渠道 |

会话平均停留时长 |

统计会话平均停留时长 |

最近1/7/30日 |

渠道 |

会话平均浏览页面数 |

统计会话平均浏览页面数 |

最近1/7/30日 |

渠道 |

会话总数 |

统计会话总数 |

最近1/7/30日 |

渠道 |

跳出率 |

只有一个页面的会话的比例 |

DROP TABLE IF EXISTS ads_traffic_stats_by_channel; CREATE EXTERNAL TABLE ads_traffic_stats_by_channel ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `channel` STRING COMMENT '渠道', `uv_count` BIGINT COMMENT '访客人数', `avg_duration_sec` BIGINT COMMENT '会话平均停留时长,单位为秒', `avg_page_count` BIGINT COMMENT '会话平均浏览页面数', `sv_count` BIGINT COMMENT '会话数', `bounce_rate` DECIMAL(16, 2) COMMENT '跳出率' ) COMMENT '各渠道流量统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_traffic_stats_by_channel/';

在dws_traffic_session_page_view_1d中,我们对每个会话的页面访问情况做了汇总,本节基于该表统计。

ADS层报表数据量不大,分区意义不大,通过dt字段区分统计日期即可。每日装载将历史报表数据取出,与当日统计结果union后写回该表即可。要注意,为了避免数据重复,此处要用union去重。

insert overwrite table ads_traffic_stats_by_channel --为了防止全表覆盖,所以我们需要把旧的表先取出来 select * from ads_traffic_stats_by_channel union select * from (--最近1天 select '2022-06-08',1,channel,count(distinct mid_id),avg(during_time/1000), avg(page_count_1d),count(session_id), sum(if(page_count=1,1,0))/count(session_id) from dws_traffic_session_page_view_1d where dt='2022-06-08' group by channel union all --最近七天 select '2022-06-08',7,channel,count(distinct mid_id),avg(during_time/1000), avg(page_count_1d),count(session_id), sum(if(page_count=1,1,0))/count(session_id) from dws_traffic_session_page_view_1d where dt>=date_sub('2022-06-08',6) and dt<='2022-06-08' group by channel union all select '2022-06-08',30,channel,count(distinct mid_id),avg(during_time/1000), avg(page_count_1d),count(session_id), sum(if(page_count=1,1,0))/count(session_id) from dws_traffic_session_page_view_1d where dt>=date_sub('2022-06-08',29) and dt<='2022-06-08' group by channel)t

SQL优化

--当前的问题:读了三次相同的数据,使用三个不同的场景

--解决思路:读了一次数据,使用三个场景(炸裂)

炸裂【1,2,3】=》【1,2,3】,【1,2,3】,【1,2,3】

扁平化【1,2,3】=》1,2,3

select * from (select 'a' name) t lateral view explode(array(1,2,3)) tmp as name1

where name1!=7

1.获取时间范围最大的数据集

2.将查询的数据集在内存中进行炸裂操作(三份),变成多份,增加标记

3.将炸裂后的数据进行筛选,保存有效数据

4.将过滤的后的数据按照标记进行分组,然后进行统计

insert overwrite table ads_traffic_stats_by_channel --为了防止全表覆盖,所以我们需要把旧的表先取出来 select * from ads_traffic_stats_by_channel --炸裂函数 select '2022-06-08',days,channel,count(distinct mid_id),avg(during_time/1000), avg(page_count_1d),count(session_id), sum(if(page_count=1,1,0))/count(session_id) from dws_traffic_session_page_view_1d lateral view explode(array(1,7,30)) tmp as days where dt>=date_sub('2022-06-08',days-1) and dt<='2022-06-08' group by channel,days

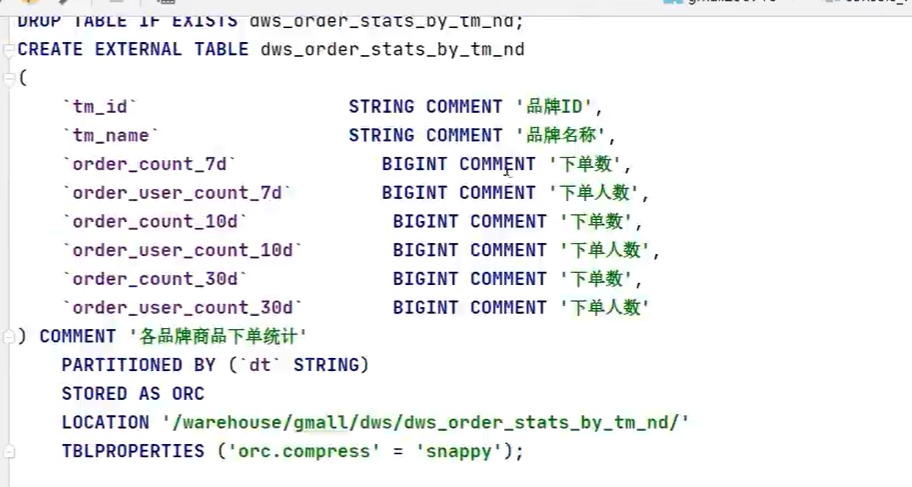

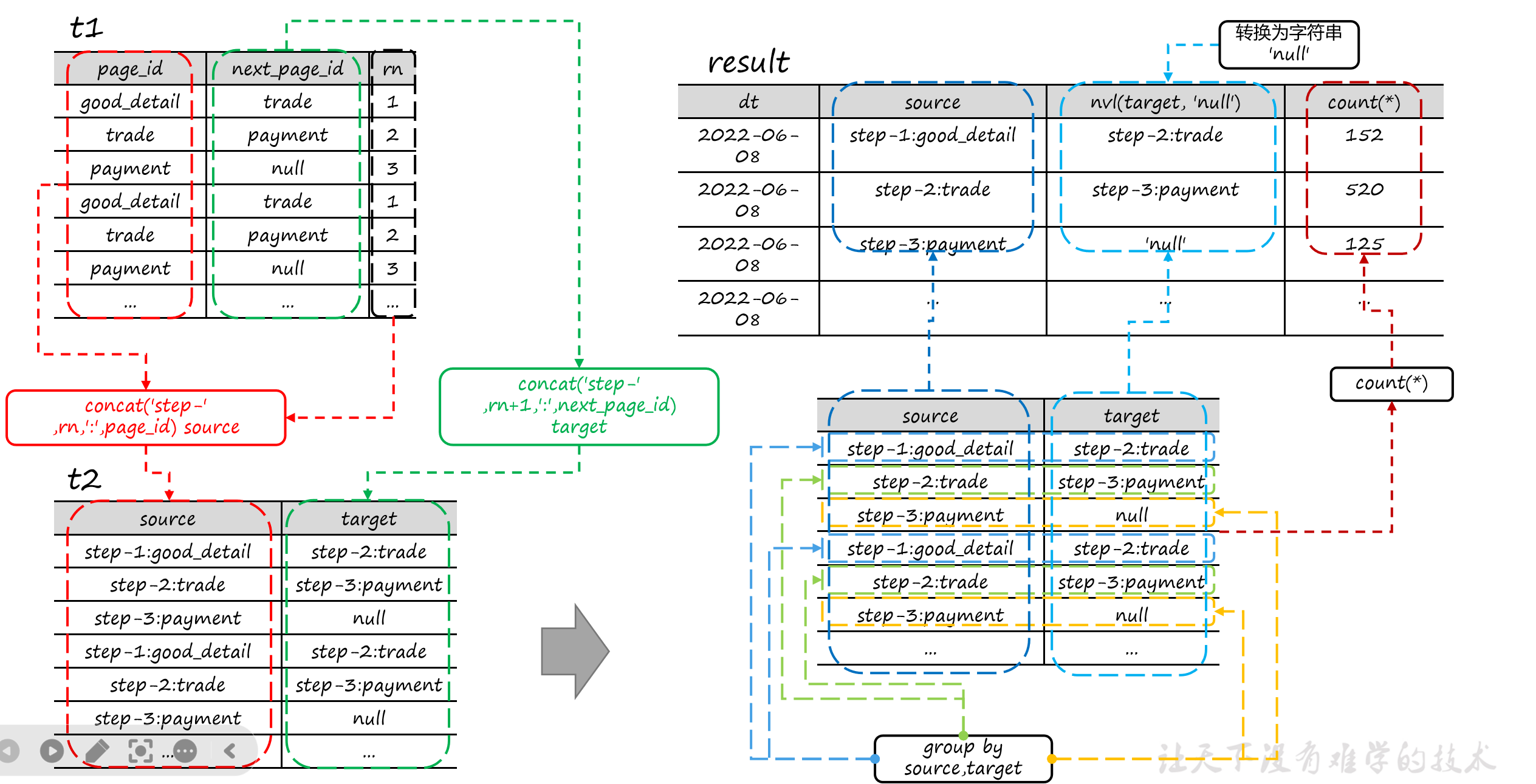

路径分析

用户路径分析,顾名思义,就是指用户在APP或网站中的访问路径。为了衡量网站优化的效果或营销推广的效果,以及了解用户行为偏好,时常要对访问路径进行分析。

用户访问路径的可视化通常使用桑基图。如下图所示,该图可真实还原用户的访问路径,包括页面跳转和页面访问次序。

桑基图需要我们提供每种页面跳转的次数,每个跳转由source/target表示,source指跳转起始页面,target表示跳转终到页面。

建表语句 DROP TABLE IF EXISTS ads_page_path; CREATE EXTERNAL TABLE ads_page_path ( `dt` STRING COMMENT '统计日期', `source` STRING COMMENT '跳转起始页面ID', `target` STRING COMMENT '跳转终到页面ID', `path_count` BIGINT COMMENT '跳转次数' ) COMMENT '页面浏览路径分析' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_page_path/';

insert overwrite table ads_page_path select * from ads_page_path union select '2022-06-08',last_page_id source, page_id target, count(*) from dwd_traffic_page_view_inc where dt='2022-06-08' group by last_page_id,page_id

问题:

桑基图不允许出现环状图形:可以在页面前增加流程标记,区分页面跳转顺序

我们需要统计页面离开的情况

当前统计的是页面路径跳转,前提条件是同一个会话

在同一个会话中,给每个页面额外增加一个标记,用于表示跳转后的页面,如果跳转后面的页面为null,表示离开

insert overwrite table ads_page_path select * from ads_page_path select '2022-06-08', source, target, count(*) from ( select concat('step-',rn,':',source) source, concat('step-',(rn1),':',target) target, target from( select page_id,source, lead(page_id,1,'out') over(partition by session_id order by view_time) target, row_number() over(partition by session_id order by view_time) rn from dwd_traffic_page_view_inc where dt='2022-06-08' )t1 )t group by source,target

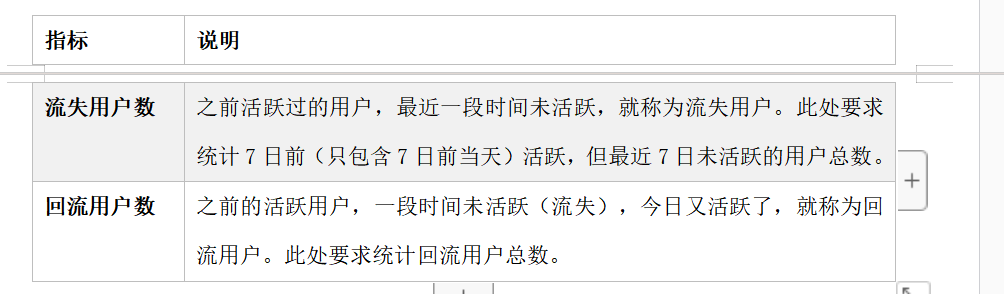

用户变动统计

该需求包括两个指标,分别为流失用户数和回流用户数,以下为对两个指标的解释说明。

流失用户数:用户的末次登录时间是7天前当天=(8-7) 回流用户数:首先要今天登录和上一次登录时间为7天前<(8-7) 上一次登录其实就是昨天统计的末次登录

DROP TABLE IF EXISTS ads_user_change; CREATE EXTERNAL TABLE ads_user_change ( `dt` STRING COMMENT '统计日期', `user_churn_count` BIGINT COMMENT '流失用户数', `user_back_count` BIGINT COMMENT '回流用户数' ) COMMENT '用户变动统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_user_change/';

--统计日期和业务日期的区别

--统计日期(dt):数据在哪一天统计的

#统计流失数据的数量 select count(*) user_churn_count from dws_user_login_td where dt='2022-06-08' and login_date_last=date_sub('2022-06-08',7)

#回流用户 insert overwrite table ads_user_change select * from ads_user_change union select '2022-06-08', user_churn_count, user_back_count from( select 1 a, count(*) user_churn_count from dws_user_login_td where dt='2022-06-08' where dt='2022-06-08' and login_date_last=date_sub('2022-06-08',7) )churn join( select 1 b, count(*) user_back_count from #把两个昨天和今天时间和在一起 (select user_id, '2022-06-08' from dws_user_login_td where dt='2022-06-08' and login_date_last='2022-06-08')new join( select user_id from dws_user_user_login_td where dt=date_sub('2022-06-08',1) #昨天统计的 )old on new.user_id=old.user_id where datediff(new.login_date,old.login_date_last)>7 )back on churn.a=back.b

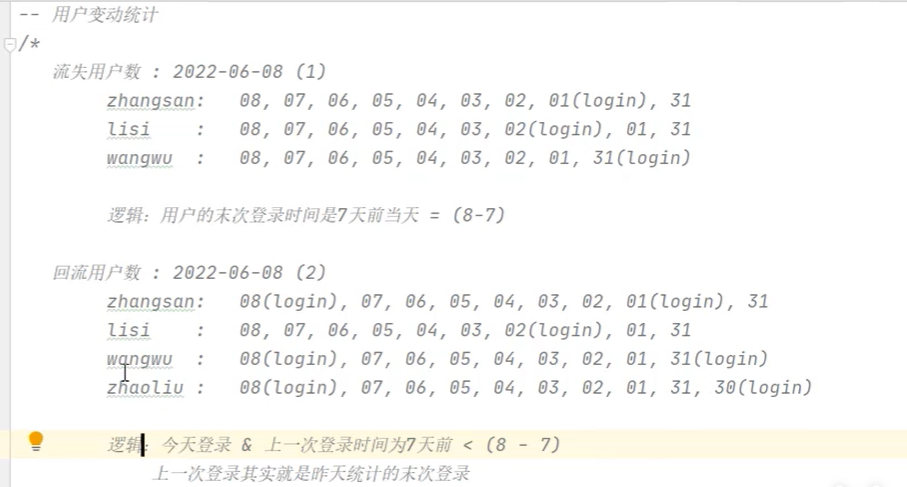

用户留存率

留存分析一般包含新增留存和活跃留存分析。

新增留存分析是分析某天的新增用户中,有多少人有后续的活跃行为。活跃留存分析是分析某天的活跃用户中,有多少人有后续的活跃行为。

留存分析是衡量产品对用户价值高低的重要指标。

此处要求统计新增留存率,新增留存率具体是指留存用户数与新增用户数的比值,例如2022-06-08新增100个用户,1日之后(2022-06-09)这100人中有80个人活跃了,那2022-06-08的1日留存数则为80,2022-06-08的1日留存率则为80%。

要求统计每天的1至7日留存率,如下图所示。

用户留存率

2022-06-08

zhangsan reg

lisi reg

wangwu reg

2022-06-09(1日留存率33.33%)

zhangsan login

lisi login

2022-06-10(2日留存率 66.66%)

zhangsan reg

lisi reg

zhangsan reg

反推

2022-06-08(1日留存率:100%,2日留存率:100%)

zhangsan login

lisi login

2022-06-07

zhangsan reg

2022-06-06

lisi reg

规律:获取1天前注册用户数量+获取1天注册且当天用户登录用户数量

获取2天前注册用户数量+获取2天注册且当天用户登录用户数量

.。。。。

用户登录历史至今表(td)

--userid+first+last

#要求统计每天的1至7日留存率 DROP TABLE IF EXISTS ads_user_retention; CREATE EXTERNAL TABLE ads_user_retention ( `dt` STRING COMMENT '统计日期', `create_date` STRING COMMENT '用户新增日期', `retention_day` INT COMMENT '截至当前日期留存天数', `retention_count` BIGINT COMMENT '留存用户数量', `new_user_count` BIGINT COMMENT '新增用户数量', `retention_rate` DECIMAL(16, 2) COMMENT '留存率' ) COMMENT '用户留存率' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_user_retention/'; 2)数据装载 --1日留存率 select '2022-06-08', date_sub('2022-06-08',1), 1, sum(if(login_date_last='2022-06-08',1,0)) retention_count, count(*) new_user_count, sum(if(login_date_last='2022-06-08',1,0))/ count(*)*100 from dws_user_user_login_td where dt='2022-06-08' and login_date_first=date_sub('2022-06-08',1) #注册里面包含着登录 --2日留存率 select '2022-06-08', date_sub('2022-06-08',2), 2, sum(if(login_date_last='2022-06-08',1,0)) retention_count, count(*) new_user_count, sum(if(login_date_last='2022-06-08',1,0))/ count(*)*100 from dws_user_user_login_td where dt='2022-06-08' and login_date_first=date_sub('2022-06-08',2) #注册里面包含着登录

insert overwrite table ads_user_retention select * from ads_user_retention union select user_id, '2022-06-08', login_date_first, datediff('2022-06-08', login_date_first) retention_day, sum(if(login_date_last = '2022-06-08', 1, 0)) retention_count, count(*) new_user_count, cast(sum(if(login_date_last = '2022-06-08', 1, 0)) / count(*) * 100 as decimal(16, 2)) retention_rate from dws_user_user_login_td where dt = '2022-06-08' and login_date_first >= date_sub('2022-06-08', 7) and login_date_first < '2022-06-08' group by login——date_first

用户新增活跃统计

本需求对活跃的定义是限定时间范围内有登录记录,可以通过限制末次登录日期在最近1/7/30日筛选活跃用户。注册日期等价于首次登录日期,可以根据首次登录日期筛选新增用户。

统计周期 |

指标 |

指标说明 |

最近1、7、30日 |

新增用户数 |

略 |

最近1、7、30日 |

活跃用户数 |

略 |

DROP TABLE IF EXISTS ads_user_stats; CREATE EXTERNAL TABLE ads_user_stats ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近n日,1:最近1日,7:最近7日,30:最近30日', `new_user_count` BIGINT COMMENT '新增用户数', `active_user_count` BIGINT COMMENT '活跃用户数' ) COMMENT '用户新增活跃统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_user_stats/';

insert overwrite table ads_user_stats select * from ads_user_stats union select * from( --最近一天 select '2022-06-08', 1 sum(if( login_date_first=date_sub('2022-06-08',1),1,0)) new_user_count, count(*) active_user_count from dws_user_login_td where dt='2022-06-08' and login_date_last=date_sub('2022-06-08',1) #昨天登录的用户数量 union all --最近七天 select '2022-06-08', 7 sum(if( login_date_firs>=date_sub('2022-06-08',6),1,0)) new_user_count, count(*) active_user_count from dws_user_login_td where dt='2022-06-08' and login_date_last>=date_sub('2022-06-08',6) and login_date_last<='2022-06-08' 七天之类的用户数量 union all --最近30天 select '2022-06-08', 30 sum(if( login_date_first>=date_sub('2022-06-08',29),1,0)) new_user_count, count(*) active_user_count from dws_user_login_td where dt='2022-06-08' and login_date_last>=date_sub('2022-06-08',29) and login_date_last<='2022-06-08' 七天之类的用户数量 )t

SQL优化

--当前的问题:读了三次相同的数据,使用三个不同的场景

--解决思路:读了一次数据,使用三个场景(炸裂)

炸裂【1,2,3】=》【1,2,3】,【1,2,3】,【1,2,3】

扁平化【1,2,3】=》1,2,3

select * from (select 'a' name) t lateral view explode(array(1,2,3)) tmp as name1

where name1!=7

1.获取时间范围最大的数据集

2.将查询的数据集在内存中进行炸裂操作(三份),变成多份,增加标记

3.将炸裂后的数据进行筛选,保存有效数据

4.将过滤的后的数据按照标记进行分组,然后进行统计

insert overwrite table ads_user_stats select * from ads_user_stats union select * from( select '2022-06-08', days sum(if( login_date_first>=date_sub('2022-06-08',days-1),1,0)) new_user_count, count(*) active_user_count from dws_user_login_td lateral view explode(array(1,7,30)) as days where dt='2022-06-08' and login_date_last>=date_sub('2022-06-08',days-1) and login_date_last<='2022-06-08' 七天之类的用户数量 group by days )t

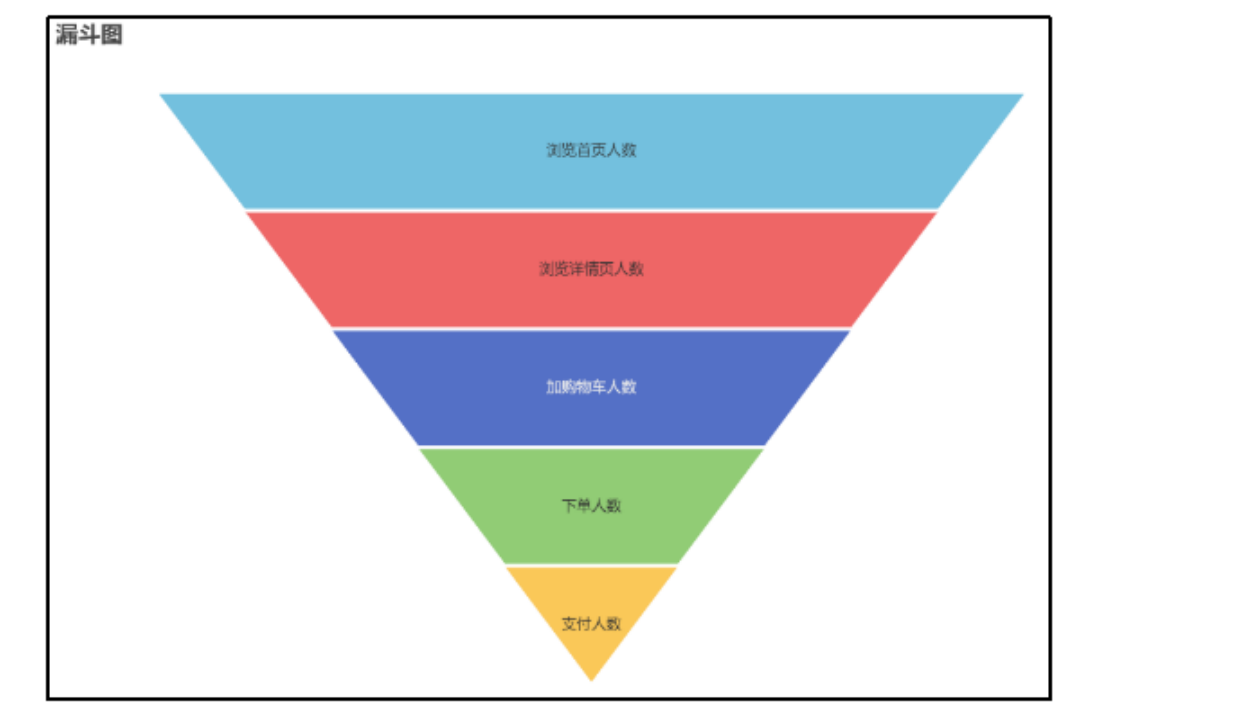

用户行为漏斗分析

漏斗分析是一个数据分析模型,它能够科学反映一个业务流程从起点到终点各阶段用户转化情况。由于其能将各阶段环节都展示出来,故哪个阶段存在问题,就能一目了然。

统计周期 |

指标 |

说明 |

最近1 日 |

首页浏览人数 |

略 |

最近1 日 |

商品详情页浏览人数 |

略 |

最近1 日 |

加购人数 |

略 |

最近1 日 |

下单人数 |

略 |

最近1 日 |

支付人数 |

支付成功人数 |

DROP TABLE IF EXISTS ads_user_action; CREATE EXTERNAL TABLE ads_user_action ( `dt` STRING COMMENT '统计日期', `home_count` BIGINT COMMENT '浏览首页人数', `good_detail_count` BIGINT COMMENT '浏览商品详情页人数', `cart_count` BIGINT COMMENT '加购人数', `order_count` BIGINT COMMENT '下单人数', `payment_count` BIGINT COMMENT '支付人数' ) COMMENT '用户行为漏斗分析' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_user_action/'; ---效率不高 select count(*) home_count from dws_traffic_page_visitor_page_view_1d where dt='2022-06-08' and page_id='home' ---效率不高 select count(*) good_detail_count from dws_traffic_page_visitor_page_view_1d where dt='2022-06-08' and page_id='good_detail' --优化,融合,读一次数据表 insert overwrite table ads_user_action select * from ads_user_action union ( select `2022-06-08` , `home_count` , `good_detail_count`, `cart_count` , `order_count` , `payment_count` from( select 1,a, sum(if(page_id ='home',1,0)) home_count, sum(if(page_id='good_detail',1,0)) good_detail_count from dws_traffic_page_visitor_page_view_1d where dt='2022-06-08' and(page_id='home' or page_id='good_detail') )pv join ( --加购人数统计 select 1 b, count(user_id) cart_count from dws_trade_user_cart_add_1d where dt='2022-06-08' )cart on pv.a=cart.b join( select 1,c count(user_id) order_count from dws_trade_user_1d where dt='2022-06-08') oi pv.a=oi.c join( select 1,d count(user_id) payment_count from dws_trade_user_1d where dt='2022-06-08 )pay on pay.a=pay.d)t

新增下单用户统计

需求说明如下。

统计周期 |

指标 |

说明 |

最近1、7、30日 |

新增下单人数 |

略 |

建表语句 DROP TABLE IF EXISTS ads_new_order_user_stats; CREATE EXTERNAL TABLE ads_new_order_user_stats ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `new_order_user_count` BIGINT COMMENT '新增下单人数' ) COMMENT '新增下单用户统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_new_order_user_stats/'; insert overwrite table ads_new_order_user_stats select * from ads_new_order_user_stats union select * from ( select '2022-06-08', 1, count(user_id) from dws_trade_user_order_td where dt='2022-06-08' and order_date_first='2022-06-08' union all select '2022-06-08', 7, count(user_id) from dws_trade_user_order_td where dt='2022-06-08' and order_date_first>=data_sub('2022-06-08',6) and order_date_first<='2022-06-08' union all select '2022-06-08', 30, count(user_id) from dws_trade_user_order_td where dt='2022-06-08' and order_date_first>=data_sub('2022-06-08',29) and order_date_first<='2022-06-08 )t

优化

select '2022-06-08', days, count(user_id) from dws_trade_user_order_td lateral view explode(array(1,7,30)) tmp as days where dt='2022-06-08' and order_date_first>=data_sub('2022-06-08',days-1) and order_date_first<='2022-06-08 group by days

最近7日内连续3日下单用户数

DROP TABLE IF EXISTS ads_order_continuously_user_count; CREATE EXTERNAL TABLE ads_order_continuously_user_count ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,7:最近7天', `order_continuously_user_count` BIGINT COMMENT '连续3日下单用户数' ) COMMENT '最近7日内连续3日下单用户数统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_continuously_user_count/';

dws_trade_user_order_1d 数据规律:1.连续三天的基本条件应该数据量大于等于3 2.连续的第三条数据的时间减去当前时间应该为2 3.一个用户可能会重复出现三次所以要去重 insert overwrite table ads_order_continuously_user_count select * from ads_order_continuously_user_count union select '2022-06-08', 7, count(distinct user) from ( select user_id datediff(lead(dt,2,'9999-12-31') over (partition by user_id order by dt),dt) diff from dws_trade_user_order_1d where dt>=date_sub('2022-06-08',1) and dt<='2022-06-08' )t where diff=2

方法二

select count(distinct(user_id)) from ( select user_id from ( select user_id, date_sub(dt,rank() over(partition by user_id order by dt)) diff from dws_trade_user_order_1d where dt>=date_add('2022-06-08',-6) )t1 group by user_id,diff having count(*)>=3 )t2;

方法三

insert overwrite table ads_order_continuously_user_count select * from ads_order_continuously_user_count union select '2022-06-08', 7, count(distinct user) from( select user_id, lead(dt,2,'999-12-31) over (partition by user_id order by dt) now, date_add(dt,2) ss from dws_trade_user_order_1d )t where now=ss

如果断一天也算连续(例如2022-06-05,2022-06-06,2022-06-08),应该如何计算?

select count(distinct(user_id)) from ( select user_id, datediff(lead(dt,2,'9999-12-31') over(partition by user_id order by dt),dt) diff from dws_trade_user_order_1d where dt>=date_add('2022-06-08',-6) )t1 where diff<=3;

商品主题

最近30日各品牌复购率

统计周期 |

统计粒度 |

指标 |

说明 |

最近30日 |

品牌 |

复购率 |

重复购买人数占购买人数比例 |

--复购率 --衍生指标(比例) --N派生指标 --重复购买人数(下单(次数>1)人数) /购买人数(下单人数) --数据来源: --DWS --DWD(下单)+DIM(SKU) --ODS(X) DROP TABLE IF EXISTS ads_repeat_purchase_by_tm; CREATE EXTERNAL TABLE ads_repeat_purchase_by_tm ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,30:最近30天', `tm_id` STRING COMMENT '品牌ID', `tm_name` STRING COMMENT '品牌名称', `order_repeat_rate` DECIMAL(16, 2) COMMENT '复购率' ) COMMENT '最近30日各品牌复购率统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_repeat_purchase_by_tm/';

粒度转化

先把我们的粒度从商品变成我们的品牌,在从品牌去掉我们的user_id,做统计

先取得某个用户对商品下单几次

--粒度变化 --数据源粒度:user+sku --统计粒度:sku --此时对另外一个粒度的数据统计不需要去重操作 --数据源粒度:user+sku --统计粒度:user+tm --粒度如果变粗,那么数据就需要进一步聚合 --nd表的问题 --nd表计算时可能出现的问题:30天有数据,但是7天没有数据,但是7天和3天会融合成一条 insert overwrite table ads_repeat_purchase_by_tm select * from ads_repeat_purchase_by_tm union select '2022-06-08', 30, tm_id, tm_name, sum(if(order_count>1,1,0))/count(user_id)*100 --cast(sum(if(order_count>=2,1,0))/sum(if(order_count>=1,1,0)) as decimal(16,2)) from(select user_id, tm_id, tm_name, sum(order_count_30d) count(distinct user_id) from dsw_trade_user_sku_order_nd where dt='2022-06-08' group by tm_id,tm_name,user_id)t group by tm_id,tm_name

各品牌商品下单统计

需求说明如下

统计周期 |

统计粒度 |

指标 |

说明 |

最近1、7、30日 |

品牌 |

下单数 |

略 |

最近1、7、30日 |

品牌 |

下单人数 |

略 |

--DWD+DIM=>1d DROP TABLE IF EXISTS ads_order_stats_by_tm; CREATE EXTERNAL TABLE ads_order_stats_by_tm ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `tm_id` STRING COMMENT '品牌ID', `tm_name` STRING COMMENT '品牌名称', `order_count` BIGINT COMMENT '下单数', `order_user_count` BIGINT COMMENT '下单人数' ) COMMENT '各品牌商品下单统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_stats_by_tm/'; insert overwrite table ads_order_stats_by_tm select * from ads_order_stats_by_tm union --最近1天 --数据源粒度:user+sku --统计粒度:tm select '2022-06-08', 1, tm_id, tm_name, sum(order_count_1d), count(distinct user_id) from dws_trade_sku_order_1d where dt='2022-06-08' group by tm_id,tm_name union all --最近七天 select '2022-06-08', 7, tm_id, tm_name, sum(order_count_7d), count(distinct user_id) from dws_trade_user_sku_order_nd where dt='2022-06-08' and oreder_count_7d>0 group by tm_id,tm_name union all select '2022-06-08', 30, tm_id, tm_name, sum(order_count_30d), count(distinct user_id) from dws_trade_user_sku_order_nd where dt='2022-06-08' and oreder_count_30d>0 group by tm_id,tm_name

优化

insert overwrite table ads_order_stats_by_tm select * from ads_order_stats_by_tm union ( select '2022-06-08', 30, tm_id, tm_name, sum(order_count_30d), count(distinct user_id) from (select days,tm_id,tm_name, if(days=7,order_count_7d,order_count_30d) order_count from dws_trade_user_sku_order_nd lateral view explode(array(7,30)) tmp as days where dt='2022-06-08')t where order_count>0 group by tm_id,tm_name,days)t

各品类商品下单统计

需求说明如下

统计周期 |

统计粒度 |

指标 |

说明 |

最近1、7、30日 |

品类 |

下单数 |

略 |

最近1、7、30日 |

品类 |

下单人数 |

略 |

DROP TABLE IF EXISTS ads_order_stats_by_cate; CREATE EXTERNAL TABLE ads_order_stats_by_cate ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `category1_id` STRING COMMENT '一级品类ID', `category1_name` STRING COMMENT '一级品类名称', `category2_id` STRING COMMENT '二级品类ID', `category2_name` STRING COMMENT '二级品类名称', `category3_id` STRING COMMENT '三级品类ID', `category3_name` STRING COMMENT '三级品类名称', `order_count` BIGINT COMMENT '下单数', `order_user_count` BIGINT COMMENT '下单人数' ) COMMENT '各品类商品下单统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_stats_by_cate/'; insert overwrite table ads_order_stats_by_cate select * from ads_order_stats_by_cate union select '2022-06-08' dt, recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, order_count, order_user_count from ( select 1 recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sum(order_count_1d) order_count, count(distinct(user_id)) order_user_count from dws_trade_user_sku_order_1d where dt='2022-06-08' group by category1_id,category1_name,category2_id,category2_name,category3_id,category3_name union all select recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sum(order_count), count(distinct(if(order_count>0,user_id,null))) from ( select recent_days, user_id, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, case recent_days when 7 then order_count_7d when 30 then order_count_30d end order_count from dws_trade_user_sku_order_nd lateral view explode(array(7,30)) tmp as recent_days where dt='2022-06-08' )t1 group by recent_days,category1_id,category1_name,category2_id,category2_name,category3_id,category3_name )odr;

各品类商品购物车存量Top3

--各品类商品购物车 --各品类商品 --购物车存量 --周期快照事实表 实现思路: 1.(统计粒度(维度)+维度)(度量值)销量统计出来 = (品类+存量)销量统计出来 2.将相同的统计粒度数据分在一个组中(数据不能减少)(开窗) 3.将组内的统计结果数据进行排序(开窗中的order by) 4.将组内排序的结果取前N条(row_number>=N) DROP TABLE IF EXISTS ads_sku_cart_num_top3_by_cate; CREATE EXTERNAL TABLE ads_sku_cart_num_top3_by_cate ( `dt` STRING COMMENT '统计日期', `category1_id` STRING COMMENT '一级品类ID', `category1_name` STRING COMMENT '一级品类名称', `category2_id` STRING COMMENT '二级品类ID', `category2_name` STRING COMMENT '二级品类名称', `category3_id` STRING COMMENT '三级品类ID', `category3_name` STRING COMMENT '三级品类名称', `sku_id` STRING COMMENT 'SKU_ID', `sku_name` STRING COMMENT 'SKU名称', `cart_num` BIGINT COMMENT '购物车中商品数量', `rk` BIGINT COMMENT '排名' ) COMMENT '各品类商品购物车存量Top3' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_sku_cart_num_top3_by_cate/'; 2)数据装载 select '2022-06-08', category1_id, category1_name,category2_id,category2_name,category3_id,category3_name,sku_id,sku_name from( select category1_id, category1_name,category2_id,category2_name,category3_id,category3_name,sku_id,sku_name rank() over (partition by category1_id,category2_id,category3_id order by cart_num) from( select category1_id, category1_name,category2_id,category2_name,category3_id,category3_name,sku_id,sku_name, sum(sku_num) cart_num from ( select sku_id from dwd_trade_cart_full where dt='2022-06-08' ) cart left join( select id, category1_id,category1_name,category2_id,category2_name,category3_id,category3_name, from dim_sku_full where dt='2022-06-08' )sku on cart.sku_id=sku.id group by category1_id,category1_name,category2_id,category2_name,category3_id,category3_name,sku_id )t )rr where rk<=3

各品牌商品收藏次数Top3

1)建表语句

DROP TABLE IF EXISTS ads_sku_favor_count_top3_by_tm; CREATE EXTERNAL TABLE ads_sku_favor_count_top3_by_tm ( `dt` STRING COMMENT '统计日期', `tm_id` STRING COMMENT '品牌ID', `tm_name` STRING COMMENT '品牌名称', `sku_id` STRING COMMENT 'SKU_ID', `sku_name` STRING COMMENT 'SKU名称', `favor_count` BIGINT COMMENT '被收藏次数', `rk` BIGINT COMMENT '排名' ) COMMENT '各品牌商品收藏次数Top3' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_sku_favor_count_top3_by_tm/'; 1.(品牌+商品,收藏次数) 统计 2.按照品类分组 3.按照次数进行排序(降序) 4.将排序后的数据取3名 --粒度的变化 --数据源粒度:sku --统计粒度:sku select '2022-06-08', 'tm_id', 'tm_name', 'sku_id', 'sku_name', 'favor_add_couunt_1d', rk (select 'tm_id', 'tm_name', 'sku_id', 'sku_name', 'favor_add_couunt_1d', favor_add_cont_1d, rank() over (partition by tm_id order by favor_add_count_1d desc) rk from dws_interation_sku_facor_add_1d where dt='2022-06-08')t where rk<=3 group by tm_id,tm_name,sku_id,sku_name

下单到支付时间间隔平均值

具体要求:最近1日完成支付的订单的下单时间到支付时间的时间间隔的平均值

DROP TABLE IF EXISTS ads_order_to_pay_interval_avg; CREATE EXTERNAL TABLE ads_order_to_pay_interval_avg ( `dt` STRING COMMENT '统计日期', `order_to_pay_interval_avg` BIGINT COMMENT '下单到支付时间间隔平均值,单位为秒' ) COMMENT '下单到支付时间间隔平均值统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_to_pay_interval_avg/';

--时间间隔1平均值:avg(datediff(支付时间,下单时间)) --datediff:用于天的时间的差值,不能用于时分秒的差值 1.时间差问题 2.累计快照事实表分区策略: --事实表会以流程中最后一个行为的时间作为分区字段值 insert overwrite table ads_order_to_pay_interval_avg select * from ads_order_to_pay_interval_avg union select '2022-06-08', cast(avg(to_unix_timestamp(payment_time)-to_unix_timestamp(order_time)) as bigint) from dwd_trade_trade_flow_acc where dt in ('9999-12-31','2022-06-08')#有可能收货也有可能收货 and payment_date_id='2022-06-08';#支付成功的时间

各省份交易统计

需求说明如下。

统计周期 |

统计粒度 |

指标 |

说明 |

最近1、7、30日 |

省份 |

订单数 |

略 |

最近1、7、30日 |

省份 |

订单金额 |

略 |

1)建表语句 DROP TABLE IF EXISTS ads_order_by_province; CREATE EXTERNAL TABLE ads_order_by_province ( `dt` STRING COMMENT '统计日期', `recent_days` BIGINT COMMENT '最近天数,1:最近1天,7:最近7天,30:最近30天', `province_id` STRING COMMENT '省份ID', `province_name` STRING COMMENT '省份名称', `area_code` STRING COMMENT '地区编码', `iso_code` STRING COMMENT '旧版国际标准地区编码,供可视化使用', `iso_code_3166_2` STRING COMMENT '新版国际标准地区编码,供可视化使用', `order_count` BIGINT COMMENT '订单数', `order_total_amount` DECIMAL(16, 2) COMMENT '订单金额' ) COMMENT '各省份交易统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_order_by_province/';

insert overwrite table ads_order_by_province select * from ads_order_by_province union select * from ( select '2022-06-08' , 1, province_id, province_name, area_code, iso_code, iso_3166_2 `iso_code_3166_2`, `order_count_1d`, `order_total_amount_1d` from dws_trade_province_order_1d where dt='2022-06-08' union all select '2022-06-08' , 7, province_id, province_name, area_code, iso_code, iso_3166_2 `iso_code_3166_2`, `order_count_7d`, `order_total_amount_7d` from dws_trade_province_order_nd where dt='2022-06-08' union all select '2022-06-08' , 30, province_id, province_name, area_code, iso_code, iso_3166_2 `iso_code_3166_2`, `order_count_30d`, `order_total_amount_30d` from dws_trade_province_order_nd where dt='2022-06-08' )t

优化

(4)代码实现 insert overwrite table ads_order_by_province select * from ads_order_by_province union select * from(select '2022-06-08' dt, 1 recent_days, province_id, province_name, area_code, iso_code, iso_3166_2, order_count_1d, order_total_amount_1d from dws_trade_province_order_1d where dt='2022-06-08' union select '2022-06-08' , days, province_id, province_name, area_code, iso_code, iso_3166_2 `iso_code_3166_2`, if(days=7,`order_count_7d`,`order_count_30d`), if(days=7,`order_total_amount_7d`,`order_total_amount_30d`) from dws_trade_province_order_nd lateral view explode(array(7,30)) tmp as days where dt='2022-06-08')t

优惠券使用统计

需求说明如下。

统计周期 |

统计粒度 |

指标 |

说明 |

最近1日 |

优惠券 |

使用次数 |

支付才算使用 |

最近1日 |

优惠券 |

使用人数 |

支付才算使用 |

--数据源:dws_tool_user_coupon_coupon_used_1d --粒度:user+coupon DROP TABLE IF EXISTS ads_coupon_stats; CREATE EXTERNAL TABLE ads_coupon_stats ( `dt` STRING COMMENT '统计日期', `coupon_id` STRING COMMENT '优惠券ID', `coupon_name` STRING COMMENT '优惠券名称', `used_count` BIGINT COMMENT '使用次数', `used_user_count` BIGINT COMMENT '使用人数' ) COMMENT '优惠券使用统计' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' LOCATION '/warehouse/gmall/ads/ads_coupon_stats/'; insert overwrite table ads_coupon_stats select * from ads_coupon_stats union select '2022-06-08' dt, coupon_id, coupon_name, cast(sum(used_count_1d) as bigint), cast(count(*) as bigint) from dws_tool_user_coupon_coupon_used_1d where dt='2022-06-08' group by coupon_id,coupon_name;

数据装载脚本

在hadoop102的/home/atguigu/bin目录下创建dws_to_ads.sh

vim dws_to_ads.sh

!/bin/bash APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$2" ] ;then do_date=$2 else do_date=`date -d "-1 day" +%F` fi ads_coupon_stats=" insert overwrite table ${APP}.ads_coupon_stats select * from ${APP}.ads_coupon_stats union select '$do_date' dt, coupon_id, coupon_name, cast(sum(used_count_1d) as bigint), cast(count(*) as bigint) from ${APP}.dws_tool_user_coupon_coupon_used_1d where dt='$do_date' group by coupon_id,coupon_name; " ads_new_order_user_stats=" insert overwrite table ${APP}.ads_new_order_user_stats select * from ${APP}.ads_new_order_user_stats union select '$do_date' dt, recent_days, count(*) new_order_user_count from ${APP}.dws_trade_user_order_td lateral view explode(array(1,7,30)) tmp as recent_days where dt='$do_date' and order_date_first>=date_add('$do_date',-recent_days+1) group by recent_days; " ads_order_by_province=" insert overwrite table ${APP}.ads_order_by_province select * from ${APP}.ads_order_by_province union select '$do_date' dt, 1 recent_days, province_id, province_name, area_code, iso_code, iso_3166_2, order_count_1d, order_total_amount_1d from ${APP}.dws_trade_province_order_1d where dt='$do_date' union select '$do_date' dt, recent_days, province_id, province_name, area_code, iso_code, iso_3166_2, case recent_days when 7 then order_count_7d when 30 then order_count_30d end order_count, case recent_days when 7 then order_total_amount_7d when 30 then order_total_amount_30d end order_total_amount from ${APP}.dws_trade_province_order_nd lateral view explode(array(7,30)) tmp as recent_days where dt='$do_date'; " ads_order_continuously_user_count=" insert overwrite table ${APP}.ads_order_continuously_user_count select * from ${APP}.ads_order_continuously_user_count union select '$do_date', 7, count(distinct(user_id)) from ( select user_id, datediff(lead(dt,2,'9999-12-31') over(partition by user_id order by dt),dt) diff from ${APP}.dws_trade_user_order_1d where dt>=date_add('$do_date',-6) )t1 where diff=2; " ads_order_stats_by_cate=" insert overwrite table ${APP}.ads_order_stats_by_cate select * from ${APP}.ads_order_stats_by_cate union select '$do_date' dt, recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, order_count, order_user_count from ( select 1 recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sum(order_count_1d) order_count, count(distinct(user_id)) order_user_count from ${APP}.dws_trade_user_sku_order_1d where dt='$do_date' group by category1_id,category1_name,category2_id,category2_name,category3_id,category3_name union all select recent_days, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sum(order_count), count(distinct(if(order_count>0,user_id,null))) from ( select recent_days, user_id, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, case recent_days when 7 then order_count_7d when 30 then order_count_30d end order_count from ${APP}.dws_trade_user_sku_order_nd lateral view explode(array(7,30)) tmp as recent_days where dt='$do_date' )t1 group by recent_days,category1_id,category1_name,category2_id,category2_name,category3_id,category3_name )odr; " ads_order_stats_by_tm=" insert overwrite table ${APP}.ads_order_stats_by_tm select * from ${APP}.ads_order_stats_by_tm union select '$do_date' dt, recent_days, tm_id, tm_name, order_count, order_user_count from ( select 1 recent_days, tm_id, tm_name, sum(order_count_1d) order_count, count(distinct(user_id)) order_user_count from ${APP}.dws_trade_user_sku_order_1d where dt='$do_date' group by tm_id,tm_name union all select recent_days, tm_id, tm_name, sum(order_count), count(distinct(if(order_count>0,user_id,null))) from ( select recent_days, user_id, tm_id, tm_name, case recent_days when 7 then order_count_7d when 30 then order_count_30d end order_count from ${APP}.dws_trade_user_sku_order_nd lateral view explode(array(7,30)) tmp as recent_days where dt='$do_date' )t1 group by recent_days,tm_id,tm_name )odr; " ads_order_to_pay_interval_avg=" insert overwrite table ${APP}.ads_order_to_pay_interval_avg select * from ${APP}.ads_order_to_pay_interval_avg union select '$do_date', cast(avg(to_unix_timestamp(payment_time)-to_unix_timestamp(order_time)) as bigint) from ${APP}.dwd_trade_trade_flow_acc where dt in ('9999-12-31','$do_date') and payment_date_id='$do_date'; " ads_page_path=" insert overwrite table ${APP}.ads_page_path select * from ${APP}.ads_page_path union select '$do_date' dt, source, nvl(target,'null'), count(*) path_count from ( select concat('step-',rn,':',page_id) source, concat('step-',rn+1,':',next_page_id) target from ( select page_id, lead(page_id,1,null) over(partition by session_id order by view_time) next_page_id, row_number() over (partition by session_id order by view_time) rn from ${APP}.dwd_traffic_page_view_inc where dt='$do_date' )t1 )t2 group by source,target; " ads_repeat_purchase_by_tm=" insert overwrite table ${APP}.ads_repeat_purchase_by_tm select * from ${APP}.ads_repeat_purchase_by_tm union select '$do_date', 30, tm_id, tm_name, cast(sum(if(order_count>=2,1,0))/sum(if(order_count>=1,1,0)) as decimal(16,2)) from ( select user_id, tm_id, tm_name, sum(order_count_30d) order_count from ${APP}.dws_trade_user_sku_order_nd where dt='$do_date' group by user_id, tm_id,tm_name )t1 group by tm_id,tm_name; " ads_sku_cart_num_top3_by_cate=" set hive.mapjoin.optimized.hashtable=false; insert overwrite table ${APP}.ads_sku_cart_num_top3_by_cate select * from ${APP}.ads_sku_cart_num_top3_by_cate union select '$do_date' dt, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, sku_id, sku_name, cart_num, rk from ( select sku_id, sku_name, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name, cart_num, rank() over (partition by category1_id,category2_id,category3_id order by cart_num desc) rk from ( select sku_id, sum(sku_num) cart_num from ${APP}.dwd_trade_cart_full where dt='$do_date' group by sku_id )cart left join ( select id, sku_name, category1_id, category1_name, category2_id, category2_name, category3_id, category3_name from ${APP}.dim_sku_full where dt='$do_date' )sku on cart.sku_id=sku.id )t1 where rk<=3; set hive.mapjoin.optimized.hashtable=true; " ads_sku_favor_count_top3_by_tm=" insert overwrite table ${APP}.ads_sku_favor_count_top3_by_tm select * from ${APP}.ads_sku_favor_count_top3_by_tm union select '$do_date' dt, tm_id, tm_name, sku_id, sku_name, favor_add_count_1d, rk from ( select tm_id, tm_name, sku_id, sku_name, favor_add_count_1d, rank() over (partition by tm_id order by favor_add_count_1d desc) rk from ${APP}.dws_interaction_sku_favor_add_1d where dt='$do_date' )t1 where rk<=3; " ads_traffic_stats_by_channel=" insert overwrite table ${APP}.ads_traffic_stats_by_channel select * from ${APP}.ads_traffic_stats_by_channel union select '$do_date' dt, recent_days, channel, cast(count(distinct(mid_id)) as bigint) uv_count, cast(avg(during_time_1d)/1000 as bigint) avg_duration_sec, cast(avg(page_count_1d) as bigint) avg_page_count, cast(count(*) as bigint) sv_count, cast(sum(if(page_count_1d=1,1,0))/count(*) as decimal(16,2)) bounce_rate from ${APP}.dws_traffic_session_page_view_1d lateral view explode(array(1,7,30)) tmp as recent_days where dt>=date_add('$do_date',-recent_days+1) group by recent_days,channel; " ads_user_action=" insert overwrite table ${APP}.ads_user_action select * from ${APP}.ads_user_action union select '$do_date' dt, home_count, good_detail_count, cart_count, order_count, payment_count from ( select 1 recent_days, sum(if(page_id='home',1,0)) home_count, sum(if(page_id='good_detail',1,0)) good_detail_count from ${APP}.dws_traffic_page_visitor_page_view_1d where dt='$do_date' and page_id in ('home','good_detail') )page join ( select 1 recent_days, count(*) cart_count from ${APP}.dws_trade_user_cart_add_1d where dt='$do_date' )cart on page.recent_days=cart.recent_days join ( select 1 recent_days, count(*) order_count from ${APP}.dws_trade_user_order_1d where dt='$do_date' )ord on page.recent_days=ord.recent_days join ( select 1 recent_days, count(*) payment_count from ${APP}.dws_trade_user_payment_1d where dt='$do_date' )pay on page.recent_days=pay.recent_days; " ads_user_change=" insert overwrite table ${APP}.ads_user_change select * from ${APP}.ads_user_change union select churn.dt, user_churn_count, user_back_count from ( select '$do_date' dt, count(*) user_churn_count from ${APP}.dws_user_user_login_td where dt='$do_date' and login_date_last=date_add('$do_date',-7) )churn join ( select '$do_date' dt, count(*) user_back_count from ( select user_id, login_date_last from ${APP}.dws_user_user_login_td where dt='$do_date' and login_date_last = '$do_date' )t1 join ( select user_id, login_date_last login_date_previous from ${APP}.dws_user_user_login_td where dt=date_add('$do_date',-1) )t2 on t1.user_id=t2.user_id where datediff(login_date_last,login_date_previous)>=8 )back on churn.dt=back.dt; " ads_user_retention=" insert overwrite table ${APP}.ads_user_retention select * from ${APP}.ads_user_retention union select '$do_date' dt, login_date_first create_date, datediff('$do_date', login_date_first) retention_day, sum(if(login_date_last = '$do_date', 1, 0)) retention_count, count(*) new_user_count, cast(sum(if(login_date_last = '$do_date', 1, 0)) / count(*) * 100 as decimal(16, 2)) retention_rate from ( select user_id, login_date_last, login_date_first from ${APP}.dws_user_user_login_td where dt = '$do_date' and login_date_first >= date_add('$do_date', -7) and login_date_first < '$do_date' ) t1 group by login_date_first; " ads_user_stats=" insert overwrite table ${APP}.ads_user_stats select * from ${APP}.ads_user_stats union select '$do_date' dt, recent_days, sum(if(login_date_first >= date_add('$do_date', -recent_days + 1), 1, 0)) new_user_count, count(*) active_user_count from ${APP}.dws_user_user_login_td lateral view explode(array(1, 7, 30)) tmp as recent_days where dt = '$do_date' and login_date_last >= date_add('$do_date', -recent_days + 1) group by recent_days; " case $1 in "ads_coupon_stats" ) hive -e "$ads_coupon_stats" ;; "ads_new_order_user_stats" ) hive -e "$ads_new_order_user_stats" ;; "ads_order_by_province" ) hive -e "$ads_order_by_province" ;; "ads_order_continuously_user_count" ) hive -e "$ads_order_continuously_user_count" ;; "ads_order_stats_by_cate" ) hive -e "$ads_order_stats_by_cate" ;; "ads_order_stats_by_tm" ) hive -e "$ads_order_stats_by_tm" ;; "ads_order_to_pay_interval_avg" ) hive -e "$ads_order_to_pay_interval_avg" ;; "ads_page_path" ) hive -e "$ads_page_path" ;; "ads_repeat_purchase_by_tm" ) hive -e "$ads_repeat_purchase_by_tm" ;; "ads_sku_cart_num_top3_by_cate" ) hive -e "$ads_sku_cart_num_top3_by_cate" ;; "ads_sku_favor_count_top3_by_tm" ) hive -e "$ads_sku_favor_count_top3_by_tm" ;; "ads_traffic_stats_by_channel" ) hive -e "$ads_traffic_stats_by_channel" ;; "ads_user_action" ) hive -e "$ads_user_action" ;; "ads_user_change" ) hive -e "$ads_user_change" ;; "ads_user_retention" ) hive -e "$ads_user_retention" ;; "ads_user_stats" ) hive -e "$ads_user_stats" ;; "all" ) hive -e "$ads_coupon_stats$ads_new_order_user_stats$ads_order_by_province$ads_order_continuously_user_count$ads_order_stats_by_cate$ads_order_stats_by_tm$ads_order_to_pay_interval_avg$ads_page_path$ads_repeat_purchase_by_tm$ads_sku_cart_num_top3_by_cate$ads_sku_favor_count_top3_by_tm$ads_traffic_stats_by_channel$ads_user_action$ads_user_change$ads_user_retention$ads_user_stats" ;;

增加脚本执行权限

[atguigu@hadoop102 bin]$ chmod +x dws_to_ads.sh

(4)脚本用法

[atguigu@hadoop102 bin]$ dws_to_ads.sh all 2022-06-08