"Traceback (most recent call last):

File ""/opt/conda/lib/python3.7/site-packages/flask/app.py"", line 2073, in wsgi_app

response = self.full_dispatch_request()

File ""/opt/conda/lib/python3.7/site-packages/flask/app.py"", line 1519, in full_dispatch_request

rv = self.handle_user_exception(e)

File ""/opt/conda/lib/python3.7/site-packages/flask/app.py"", line 1517, in full_dispatch_request

rv = self.dispatch_request()

File ""/opt/conda/lib/python3.7/site-packages/flask/app.py"", line 1503, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(req.view_args)

File ""c.py"", line 13, in identify

result = inference_pipeline(audio_in=audio_path)

File ""/opt/conda/lib/python3.7/site-packages/modelscope/pipelines/audio/asr_inference_pipeline.py"", line 260, in call

output = self.forward(output, kwargs)

File ""/opt/conda/lib/python3.7/site-packages/modelscope/pipelines/audio/asr_inference_pipeline.py"", line 540, in forward

inputs['asr_result'] = self.run_inference(self.cmd, kwargs)

File ""/opt/conda/lib/python3.7/site-packages/modelscope/pipelines/audio/asr_inference_pipeline.py"", line 619, in run_inference

cmd['fs'], cmd['param_dict'], kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/bin/asr_inference_paraformer.py"", line 741, in _forward

results = speech2text(batch)

File ""/opt/conda/lib/python3.7/site-packages/torch/autograd/grad_mode.py"", line 27, in decorate_context

return func(*args, kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/bin/asr_inference_paraformer.py"", line 227, in call

enc, enc_len = self.asr_model.encode(batch)

File ""/opt/conda/lib/python3.7/site-packages/funasr/models/e2e_asr_paraformer.py"", line 302, in encode

encoder_out, encoder_outlens, = self.encoder(feats, feats_lengths)

File ""/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py"", line 1110, in _call_impl

return forward_call(*input, kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/models/encoder/sanm_encoder.py"", line 322, in forward

encoder_outs = self.encoders(xs_pad, masks)

File ""/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py"", line 1110, in _call_impl

return forward_call(input, **kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/modules/repeat.py"", line 18, in forward

args = m(args)

File ""/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py"", line 1110, in _call_impl

return forward_call(input, **kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/models/encoder/sanm_encoder.py"", line 99, in forward

self.self_attn(x, mask, mask_shfit_chunk=mask_shfit_chunk, mask_att_chunk_encoder=mask_att_chunk_encoder)

File ""/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py"", line 1110, in _call_impl

return forward_call(input, **kwargs)

File ""/opt/conda/lib/python3.7/site-packages/funasr/modules/attention.py"", line 441, in forward

att_outs = self.forward_attention(v_h, scores, mask, mask_att_chunk_encoder)

File ""/opt/conda/lib/python3.7/site-packages/funasr/modules/attention.py"", line 410, in forward_attention

mask, 0.0

RuntimeError: [enforce fail at alloc_cpu.cpp:73] . DefaultCPUAllocator: can't allocate memory: you tried to allocate 2232373504 bytes. Error code 12 (Cannot allocate memory)

2024-07-02 22:39:23,167 (_internal:225) INFO: 172.17.0.1 - - [02/Jul/2024 22:39:23] "POST /wav2res HTTP/1.0" 500 -
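The allocation size in the `RuntimeError` is consistent with a self-attention weight matrix, whose memory grows with the square of the input length. The sketch below checks that arithmetic under assumptions the traceback does not confirm: the failing tensor is float32 with shape (1, 4, T, T) (batch 1, 4 attention heads), and each encoder frame covers about 60 ms of audio after low-frame-rate stacking. Under those assumptions the reported byte count corresponds exactly to T = 11812 frames, roughly 11.8 minutes of audio.

```python
import math

# Size reported by DefaultCPUAllocator in the RuntimeError above.
FAILED_ALLOC_BYTES = 2232373504

# Assumptions (not stated in the traceback): float32 attention weights of
# shape (batch, heads, T, T) with batch = 1 and heads = 4.
BYTES_PER_ELEMENT = 4
BATCH = 1
HEADS = 4

# Assumption: one encoder frame covers 60 ms of audio after LFR stacking.
FRAME_SECONDS = 0.06


def attention_bytes(frames: int, heads: int = HEADS, batch: int = BATCH) -> int:
    """Bytes for one float32 tensor of shape (batch, heads, frames, frames)."""
    return batch * heads * frames * frames * BYTES_PER_ELEMENT


def frames_for_bytes(num_bytes: int, heads: int = HEADS, batch: int = BATCH) -> int:
    """Sequence length T whose attention tensor needs about num_bytes."""
    per_cell = batch * heads * BYTES_PER_ELEMENT
    return int(math.sqrt(num_bytes // per_cell))


if __name__ == "__main__":
    t = frames_for_bytes(FAILED_ALLOC_BYTES)
    print("frames T:", t)  # 11812
    print("exact match:", attention_bytes(t) == FAILED_ALLOC_BYTES)  # True
    print("approx. audio minutes:", round(t * FRAME_SECONDS / 60, 1))  # 11.8
    # Quadratic scaling: half the frames need a quarter of the memory.
    print("bytes at T/2:", attention_bytes(t // 2))  # 558093376
```

Because this tensor scales with T², halving the audio length per request cuts it to a quarter of the size, which is why shorter inputs succeed while long ones exhaust RAM.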

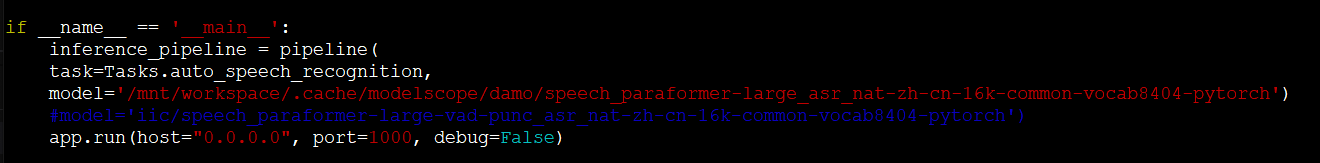

I'm using this ModelScope model. Small files are transcribed normally, but large files fail with the error above. How can I fix this?
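One common workaround is to keep each request short enough that the attention matrix fits in RAM, for example by cutting the recording into fixed-length pieces and transcribing them one at a time. The sketch below does this with Python's standard `wave` module and assumes uncompressed PCM WAV input. `split_wav`, `transcribe_long_wav`, the 60-second default, and the `sep` joiner are hypothetical names and values chosen for illustration, not ModelScope or FunASR APIs. The transcription step is passed in as a callable so the sketch does not depend on the pipeline's return format.

```python
import os
import tempfile
import wave
from typing import Callable, List


def split_wav(path: str, chunk_seconds: float, out_dir: str) -> List[str]:
    """Split a PCM WAV file into consecutive pieces of at most chunk_seconds.

    Returns the paths of the written chunk files in playback order.
    """
    chunk_paths = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = max(1, int(chunk_seconds * src.getframerate()))
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:  # readframes returns b"" at end of file
                break
            chunk_path = os.path.join(out_dir, "chunk_{:04d}.wav".format(index))
            with wave.open(chunk_path, "wb") as dst:
                # Same channels, sample width and rate as the source; the
                # frame count in the header is corrected as data is written.
                dst.setparams(params)
                dst.writeframes(frames)
            chunk_paths.append(chunk_path)
            index += 1
    return chunk_paths


def transcribe_long_wav(
    path: str,
    transcribe: Callable[[str], str],
    chunk_seconds: float = 60.0,
    sep: str = "",
) -> str:
    """Transcribe a long WAV file piece by piece and join the partial texts.

    transcribe is any function mapping a WAV path to text, for example a
    hypothetical wrapper around the ModelScope pipeline.
    """
    with tempfile.TemporaryDirectory() as tmp:
        texts = [transcribe(p) for p in split_wav(path, chunk_seconds, tmp)]
    return sep.join(t for t in texts if t)
```

With the pipeline from `c.py`, a call might look like `transcribe_long_wav(audio_path, lambda p: inference_pipeline(audio_in=p)["text"])`; the `"text"` key is an assumption about the result dictionary, so check what `result` actually contains in your version. Fixed-length cuts can split a word at a chunk boundary; choosing cut points at silences (for example with a voice activity detection model) avoids that at the cost of extra setup.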

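ModelScope aims to build a next-generation open-source Model-as-a-Service sharing platform, giving AI developers flexible, easy-to-use, low-cost one-stop model services that make applying models simpler. Join the technical discussion groups: WeChat official account: 魔搭ModelScope社区; DingTalk Q&A group: 44837352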