How do I use a Qwen1.5-4B-Chat model that was fine-tuned with ModelScope?

https://github.com/QwenLM/Qwen1.5/blob/main/docs/source/deployment/vllm.rst

    # Import the required libraries
    from modelscope import AutoTokenizer  # loads the tokenizer of a pretrained model
    from vllm import LLM, SamplingParams  # vLLM engine class and sampling-parameter class

    tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen1.5-4B-Chat")  # initialize the tokenizer

    # Decoding hyperparameters: temperature (randomness), top_p (nucleus sampling threshold),
    # repetition penalty, and maximum number of generated tokens
    sampling_params = SamplingParams(temperature=0.6, top_p=0.6, repetition_penalty=1.00, max_tokens=2048)

    # Initialize the LLM (for multiple GPUs, add e.g. tensor_parallel_size=4)
    llm = LLM(model="Qwen/Qwen1.5-4B-Chat", kv_cache_dtype="fp8_e5m2",
              gpu_memory_utilization=0.80, max_model_len=8192)

    # Prepare the input prompts
    prompt_data = ['蚂蚁', '大象', '蟑螂', '蜻蜓']  # ant, elephant, cockroach, dragonfly
    result = []
    for prompt in prompt_data:
        # Build the conversation: a system message ("animal encyclopedia") plus the user message
        messages = [{"role": "system", "content": "动物百科"}, {"role": "user", "content": prompt}]
        # Apply the chat template, append the generation prompt, and return a string rather than token IDs
        text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
        result.append(text)

    # Generate text with the configured sampling parameters
    outputs = llm.generate(result, sampling_params)

    # Print the generated outputs
    for output in outputs:
        prompt = output.prompt  # the original prompt
        generated_text = output.outputs[0].text  # the text generated by the model
        print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

    from transformers import AutoTokenizer
    from vllm import LLM, SamplingParams

    # Initialize the tokenizer
    tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen1.5-7B-Chat")

    # Pass the default decoding hyperparameters of Qwen1.5-7B-Chat.
    # max_tokens is the maximum length for generation.
    sampling_params = SamplingParams(temperature=0.7, top_p=0.8, repetition_penalty=1.05, max_tokens=512)

    # Input the model name or path. Can be GPTQ or AWQ models.
    llm = LLM(model="Qwen/Qwen1.5-7B-Chat")

    # Prepare your prompts
    prompt = "Tell me something about large language models."
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt}
    ]
    text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True
    )

    # Generate outputs
    outputs = llm.generate([text], sampling_params)

    # Print the outputs.
    for output in outputs:
        prompt = output.prompt
        generated_text = output.outputs[0].text
        print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")
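For reference, `apply_chat_template(..., tokenize=False, add_generation_prompt=True)` returns a plain string, which is why it can be passed straight to `llm.generate`. The sketch below builds such a string by hand, assuming the ChatML layout that Qwen1.5 chat models use (`<|im_start|>role`, the content, then `<|im_end|>`). `build_chatml_prompt` is a hypothetical helper for illustration only; the template shipped with the tokenizer remains the authoritative source.

```python
def build_chatml_prompt(messages, add_generation_prompt=True):
    """Format chat messages as a ChatML string (assumed layout, for illustration)."""
    parts = []
    for message in messages:
        # Each turn is wrapped in <|im_start|> ... <|im_end|> and followed by a newline.
        parts.append(f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n")
    if add_generation_prompt:
        # An open assistant turn tells the model to start answering.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Tell me something about large language models."},
]
text = build_chatml_prompt(messages)
print(text)
```

Comparing this string with the tokenizer's output is a quick way to check that a fine-tuned checkpoint still carries the expected chat template.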

The examples above load the base models. How do I load the Qwen1.5-4B-Chat that I fine-tuned with ModelScope instead?

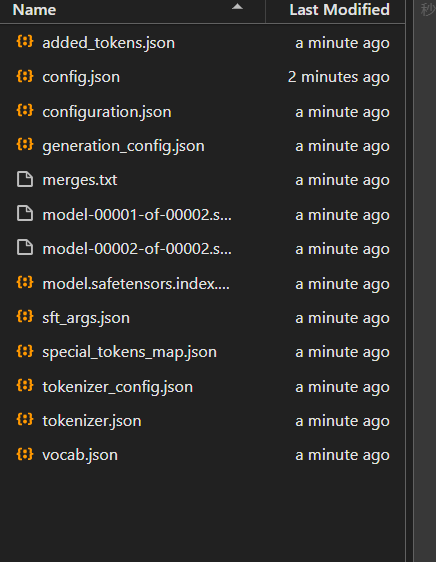

Local path: /mnt/workspace/output/qwen1half-4b-chat/v0-20240412-204934/checkpoint-97-merged

-

Answer from: Beijing Alibaba Cloud ACE President

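    # Import AutoTokenizer to load the pretrained model's tokenizer
    from modelscope import AutoTokenizer
    from vllm import LLM, SamplingParams

    # Load the tokenizer of the Qwen1.5-4B-Chat model
    tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen1.5-4B-Chat")

    # Set the sampling parameters
    sampling_params = SamplingParams(
        temperature=0.6,  # controls the randomness of the generated text
        top_p=0.6,  # controls the diversity of token selection
        repetition_penalty=1.00,  # 1.0 applies no penalty; values above 1.0 discourage repetition
        max_tokens=2048  # maximum length of the generated text
    )

    # Initialize the LLM. vLLM's LLM class is constructed directly (it has no from_pretrained method),
    # and the sampling parameters are passed to generate() rather than to the constructor.
    model = LLM(model="Qwen/Qwen1.5-4B-Chat")

    # The model object can now be used for text generation, e.g. model.generate(prompts, sampling_params)

Posted 2024-04-17 09:08:15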
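None of the snippets so far touches the fine-tuned weights. Since the `model` argument of `LLM` accepts a name or a path, one plausible approach is to pass the merged checkpoint directory from the question to both the tokenizer and `LLM`. The sketch below assumes that directory is a complete Hugging Face-style checkpoint (a `config.json` plus weight files). `missing_checkpoint_files` and `generate_from_checkpoint` are hypothetical helpers, and the file check is only a sanity heuristic, not something vLLM requires you to run.

```python
from pathlib import Path

# Merged checkpoint directory quoted in the question.
MERGED_CKPT = "/mnt/workspace/output/qwen1half-4b-chat/v0-20240412-204934/checkpoint-97-merged"


def missing_checkpoint_files(ckpt_dir):
    """Heuristic check: list what looks missing from a Hugging Face-style checkpoint directory."""
    root = Path(ckpt_dir)
    missing = []
    if not (root / "config.json").is_file():
        missing.append("config.json")
    # Assumption: weights are stored as *.safetensors or *.bin files at the top level.
    if not (any(root.glob("*.safetensors")) or any(root.glob("*.bin"))):
        missing.append("weights (*.safetensors or *.bin)")
    return missing


def generate_from_checkpoint(ckpt_dir, user_prompts, system_prompt="动物百科"):
    """Load tokenizer and model from a local directory and return one completion per prompt."""
    # Imported lazily so the directory check above can run without vLLM or a GPU.
    from transformers import AutoTokenizer
    from vllm import LLM, SamplingParams

    problems = missing_checkpoint_files(ckpt_dir)
    if problems:
        raise FileNotFoundError(f"{ckpt_dir} is missing: {problems}")

    # Point both the tokenizer and the engine at the local directory instead of a hub model ID.
    tokenizer = AutoTokenizer.from_pretrained(ckpt_dir)
    llm = LLM(model=ckpt_dir, gpu_memory_utilization=0.80, max_model_len=8192)
    sampling_params = SamplingParams(temperature=0.6, top_p=0.6, repetition_penalty=1.0, max_tokens=2048)

    texts = [
        tokenizer.apply_chat_template(
            [{"role": "system", "content": system_prompt}, {"role": "user", "content": p}],
            tokenize=False,
            add_generation_prompt=True,
        )
        for p in user_prompts
    ]
    outputs = llm.generate(texts, sampling_params)
    return [output.outputs[0].text for output in outputs]
```

On a machine with vLLM installed, calling `generate_from_checkpoint(MERGED_CKPT, ['蚂蚁', '大象'])` should return one generated string per prompt.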
