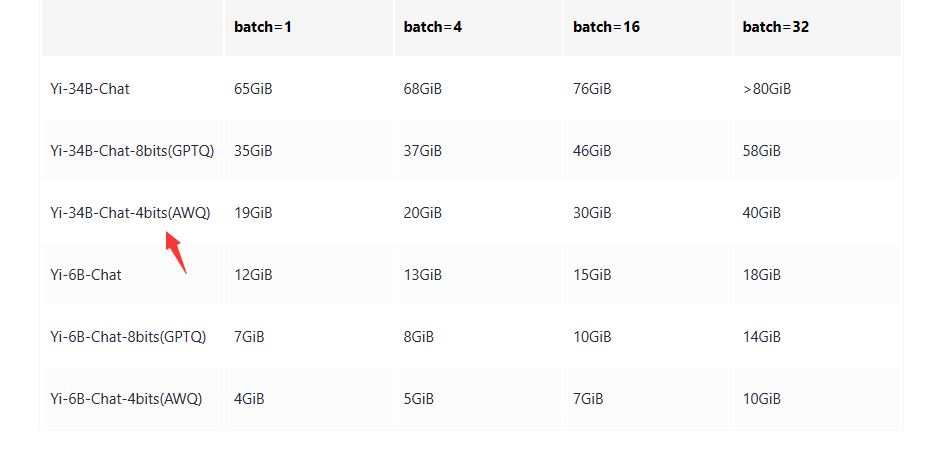

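Is ModelScope running out of GPU memory, and how should I fix it? I am using the free example instance, which shows 22 GB of GPU memory remaining. When I load the Yi-34B-Chat-4bits model, the kernel keeps restarting.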

from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

tokenizer = AutoTokenizer.from_pretrained("01ai/Yi-6B-Chat", revision='master', trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("01ai/Yi-6B-Chat", revision='master', device_map="auto", trust_remote_code=True).eval()

messages = [{"role": "user", "content": "hi"}]

input_ids = tokenizer.apply_chat_template(conversation=messages, tokenize=True, add_generation_prompt=True, return_tensors='pt')

output_ids = model.generate(input_ids.to('cuda'))

response = tokenizer.decode(output_ids[0][input_ids.shape[1]:], skip_special_tokens=True)

print(response)
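To judge whether 22 GB is enough, it helps to estimate the size of the weights alone. The sketch below is a back-of-the-envelope calculation, not a measurement. The helper `estimate_weight_gib` is hypothetical, and the parameter counts (34B and 6B) are assumed from the model names. It ignores quantization metadata, the KV cache, activations, and the CUDA context, so real usage will be higher.

```python
def estimate_weight_gib(num_params: float, bits_per_param: float) -> float:
    """Rough size of the model weights alone, in GiB (hypothetical helper)."""
    return num_params * bits_per_param / 8 / 1024**3


# Parameter counts are assumptions read off the model names.
yi_34b_4bit = estimate_weight_gib(34e9, 4)    # Yi-34B-Chat-4bits
yi_6b_fp16 = estimate_weight_gib(6e9, 16)     # Yi-6B-Chat in 16-bit precision

print(f"Yi-34B 4-bit weights: ~{yi_34b_4bit:.1f} GiB")
print(f"Yi-6B 16-bit weights: ~{yi_6b_fp16:.1f} GiB")
```

If the estimate already sits close to the free GPU memory, there is little headroom left for generation. A kernel that restarts without raising a CUDA out-of-memory error may also point to host RAM being exhausted while the checkpoint is loaded, which is worth checking separately.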