CBeann

Member of the developer community for 1,810 days

Badges

Expert Blogger

Star Blogger

Tech Blogger

Rising Newcomer


Areas of interest

- Databases

No cloud-product technical skills listed yet.

The you of tomorrow will thank the you of today for working hard...


February 2023

-

02.25 23:38:13

Published an article

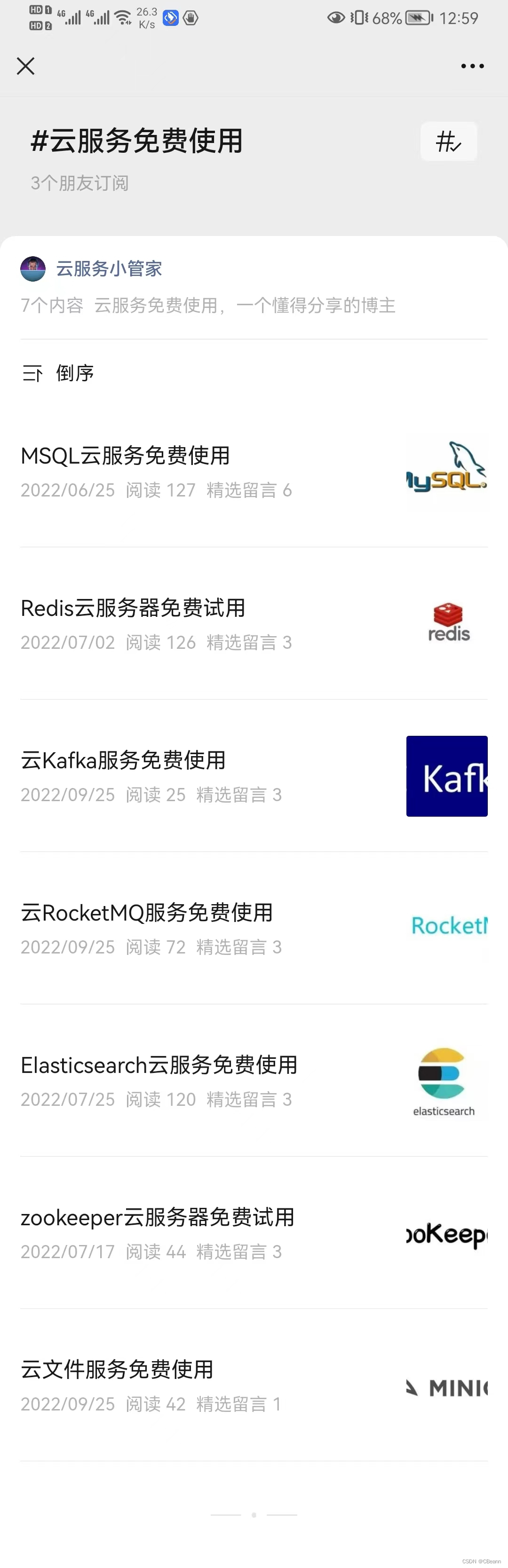

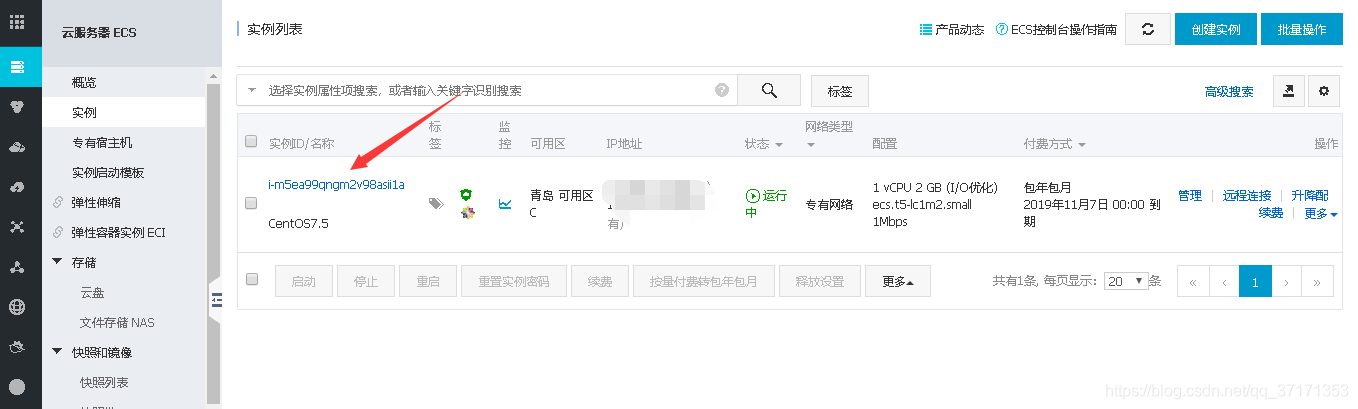

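Cloud Services & Servers, Free to Use

Why I wrote this: I recently bought a cloud server with 2 cores and 4 GB of RAM, but I only use it to write the occasional demo. The resources felt wasted, so I set up some test environments that others are welcome to use.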

-

02.25 23:34:54

Published an article

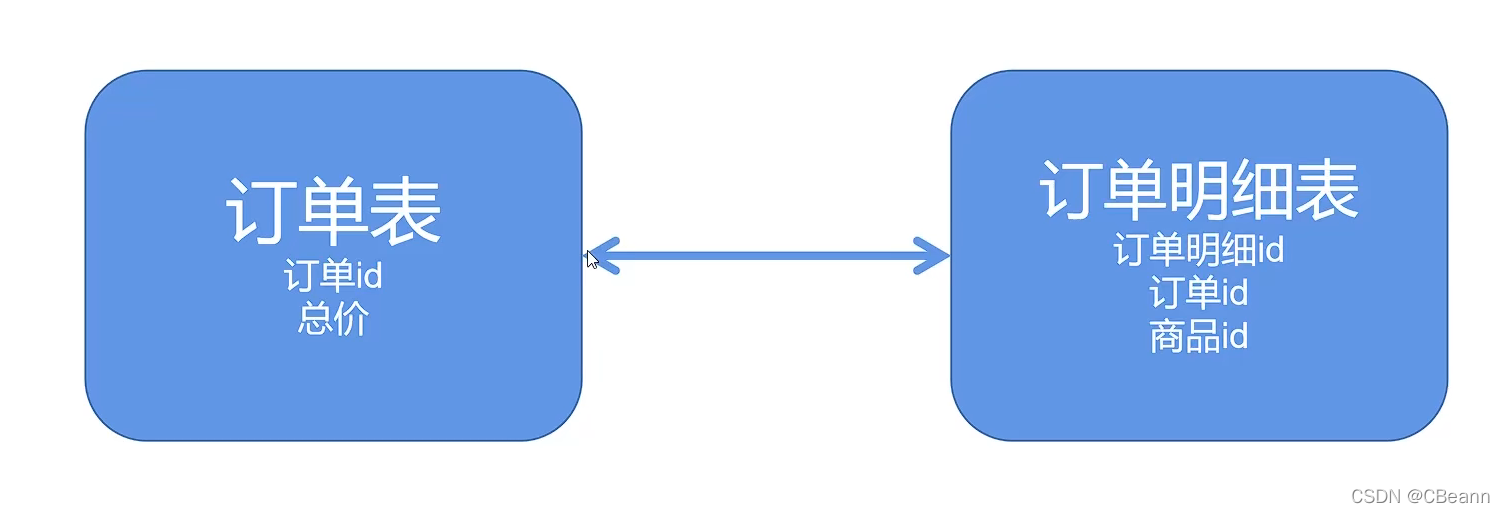

Why Development Guidelines Prohibit Foreign Key Constraints

-

02.25 23:32:55

Published an article

Pitfalls I've Hit in Java Development

-

02.25 23:30:19

Published an article

I Spent a Week Writing a Simple RPC Framework

-

02.25 23:20:40

Published an article

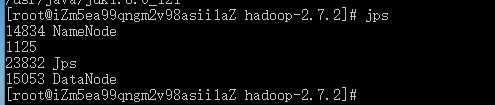

Setting Up Pseudo-Distributed Hadoop on an ECS Server (Part 2)

-

02.25 23:13:04

Published an article

Setting Up Pseudo-Distributed Hadoop on an ECS Server (Part 1)

-

02.25 22:56:56

Published an article

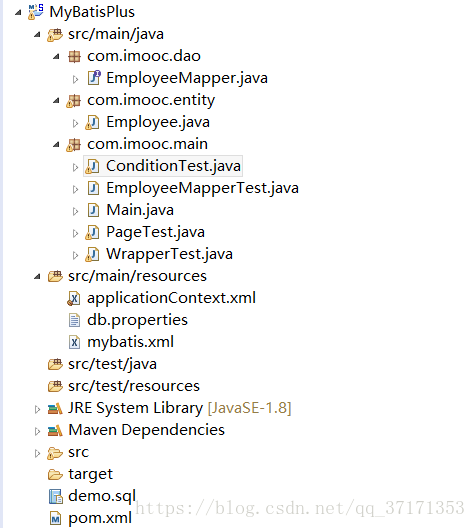

MyBatis-Plus + Spring Integration Demo

-

02.25 22:52:14

Published an article

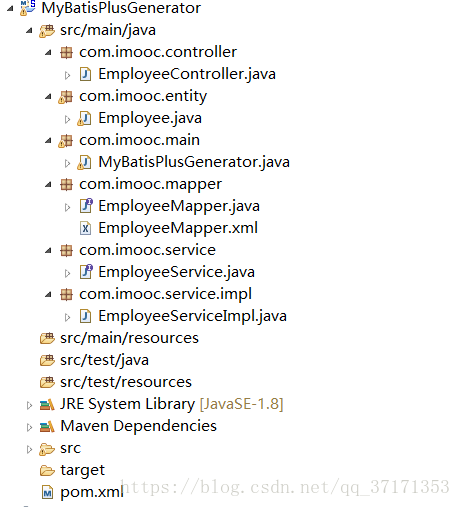

MyBatis-Plus Code Generator

-

02.25 22:49:32

Published an article

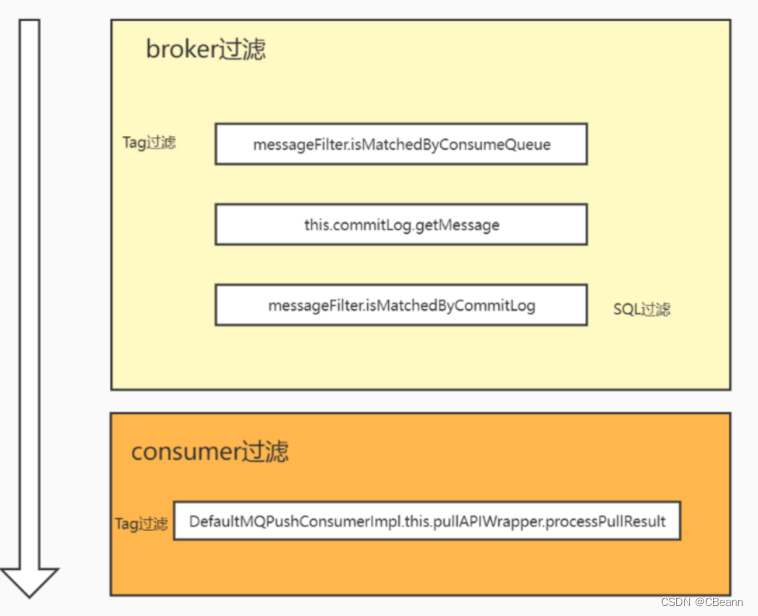

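TAG Filtering and SQL Filtering in RocketMQ

Why I wrote this: In our project, all the middle-platform services share one database. Middleware listens to that database's binlog and converts the changes into MQ messages, and each downstream middle platform pulls only the messages it cares about from MQ.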

-

02.25 22:41:09

Published an article

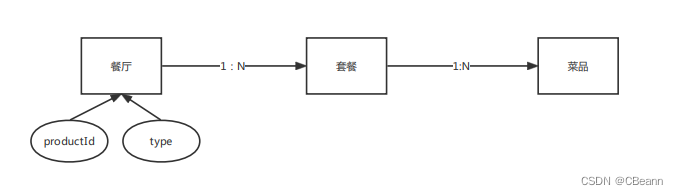

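easy-rules Rule Engine: Best Practices for Real-World Adoption

Why I wrote this: This is a problem at a leading internet company. There are many business product lines, and it is hard for a newcomer or someone outside the team to tell them apart, so each product line needs a description. As the business grows, though, a product line may be split further by some field, so a sub-product line is identified by its parent product line plus that field. Later it gets split by two fields... It's mind-numbing, because every combination follows a different processing path. So for each product we need to provide a concrete, rule-based description of it, to cut down on the questions we have to answer.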

-

02.25 22:35:30

Published an article

Why Is MySQL's Default Isolation Level RR (Repeatable Read) While Big Tech Companies Use RC (Read Committed)?

-

02.25 22:30:48

Published an article

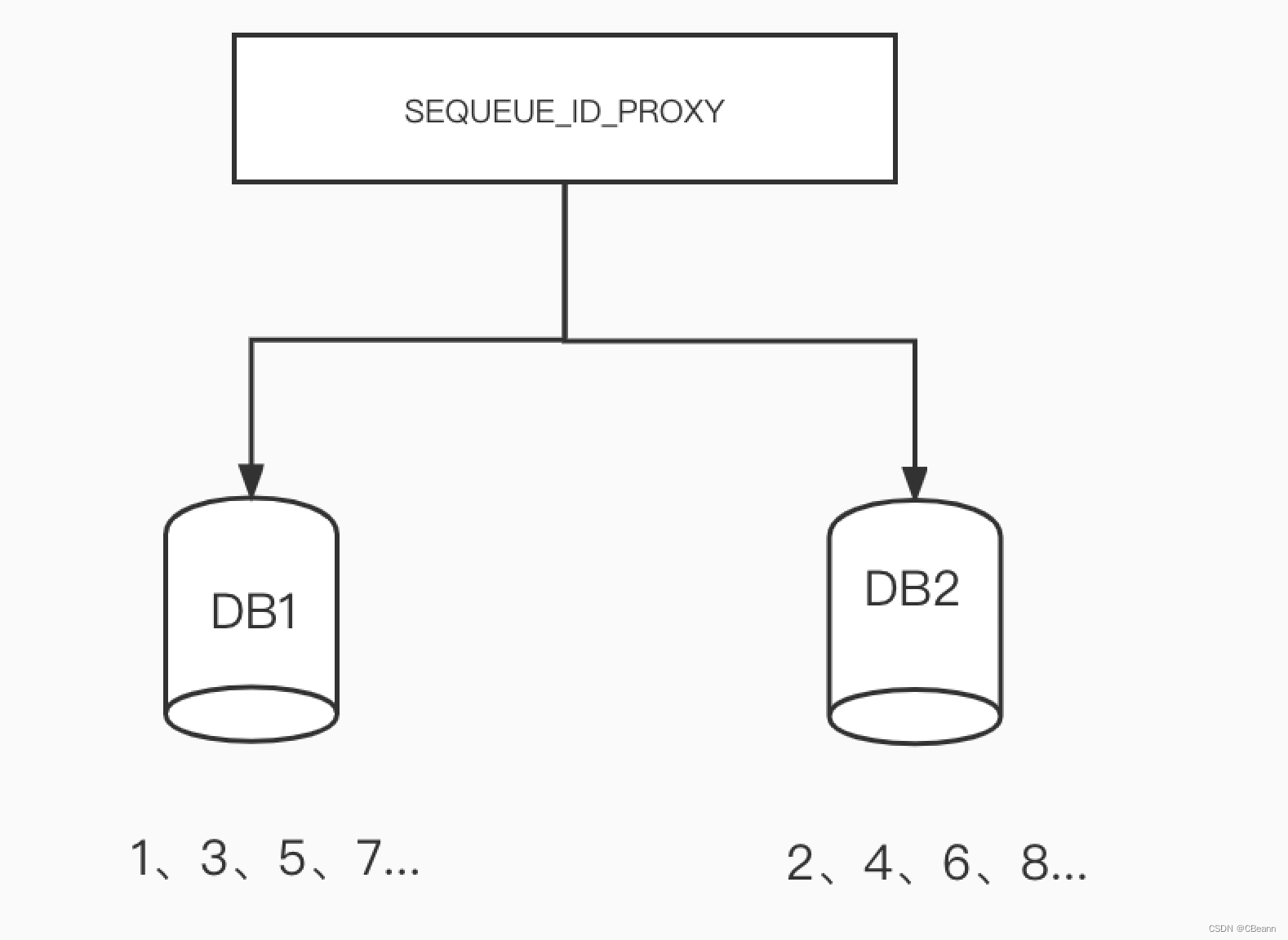

Design Strategies for Distributed Primary Key Generation

-

02.25 22:21:10

Published an article

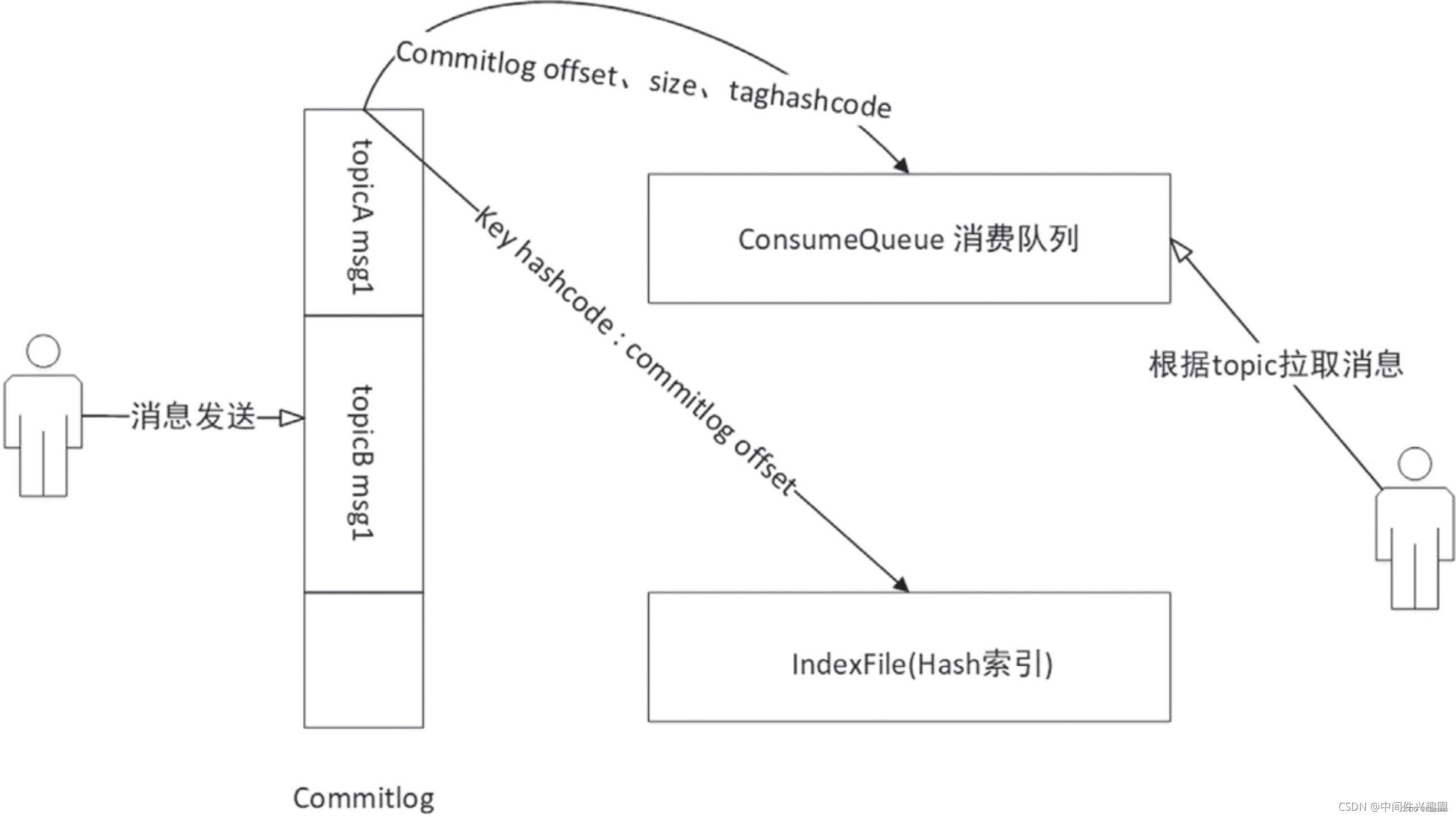

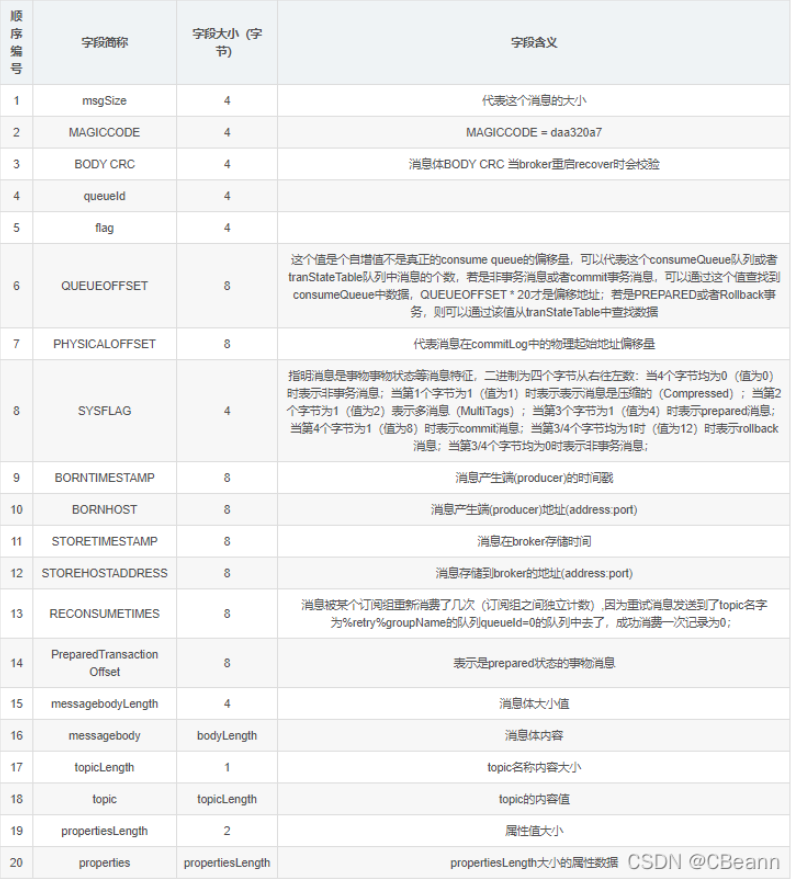

The Lifecycle of msg & tag in RocketMQ

-

02.25 22:12:27

Published an article

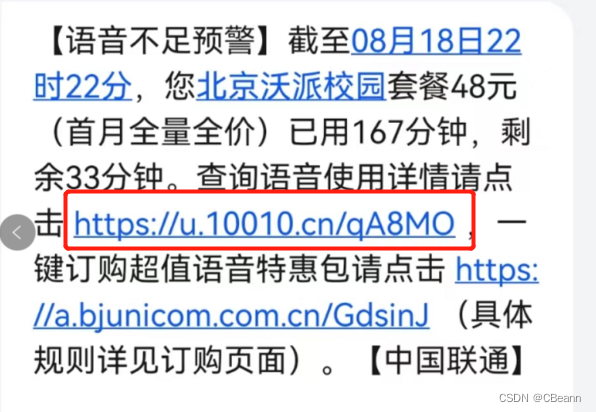

Designing and Implementing a Short URL Service

-

02.25 22:08:54

Published an article

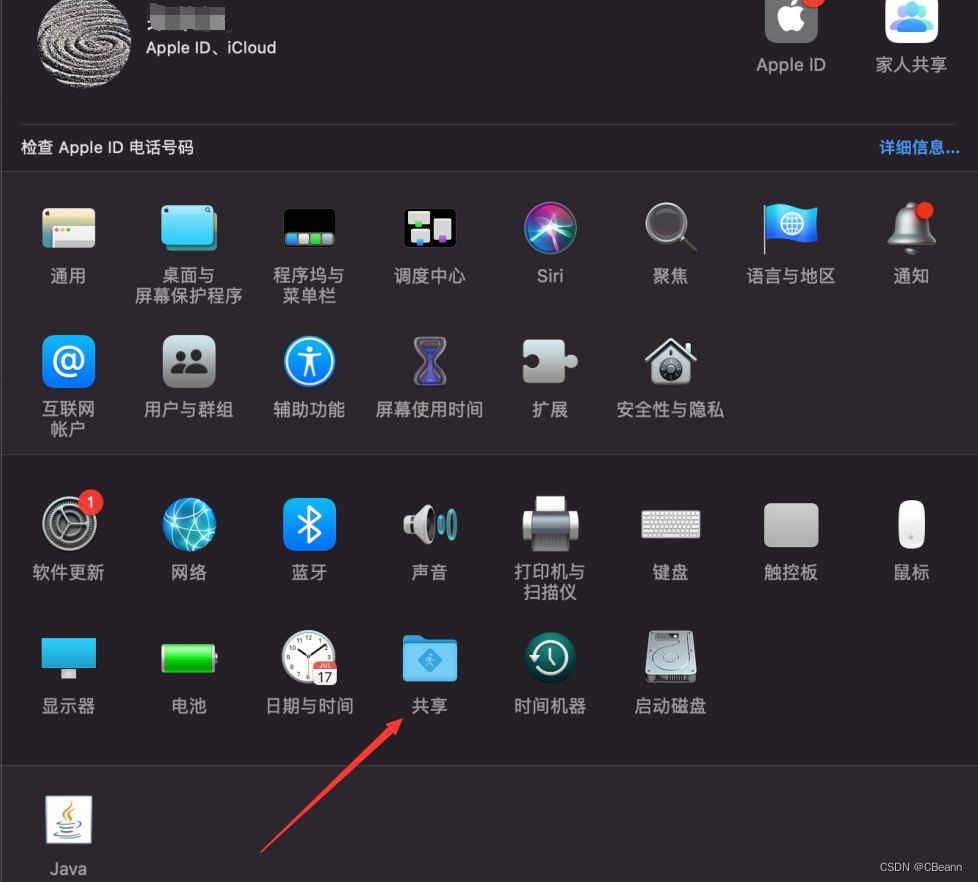

Using a Mac as a Cloud Server: Do You Really Know How?

-

02.25 22:03:09

Published an article

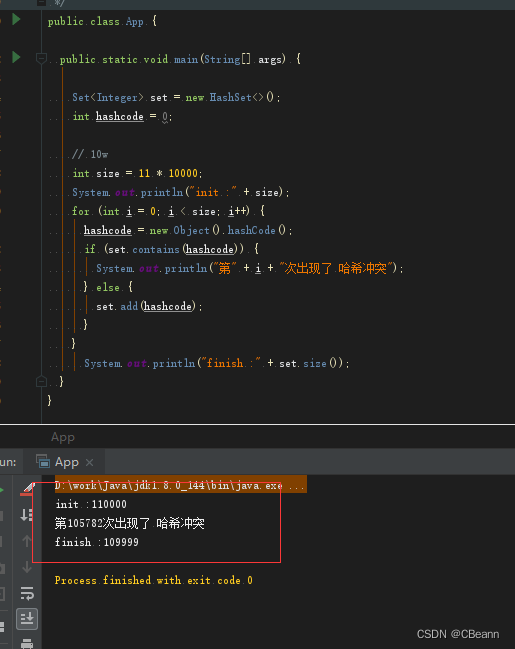

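At What Scale Do Hash Collisions Appear?

Why I wrote this: Today I came across an interesting question online: how much data does it take before hash collisions appear? My first thought was 2^32, since hashCode is an int, haha. Still, it's easy enough to write a demo and try it. The actual answer: collisions show up at around 105,000 items.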
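The reason the number is so much smaller than 2^32 is the birthday paradox: with a 32-bit hash, the first collision is expected after roughly sqrt(pi/2 * 2^32), about 82,000 inputs, and individual runs scatter widely around that, so a result near 105,000 is in the expected range. The post's own demo isn't reproduced here; this Java sketch assumes random UUID strings as input and a seeded `Random` for reproducibility, and the class and method names are hypothetical.

```java
import java.util.HashSet;
import java.util.Random;
import java.util.Set;
import java.util.UUID;

public class HashCollisionDemo {

    /**
     * Hashes random UUID strings until two of them share a String.hashCode()
     * value, and returns how many strings had been hashed at that point.
     * The input distribution (random UUIDs) is an assumption of this sketch.
     */
    static int firstCollision(long seed) {
        Random random = new Random(seed); // seeded so each run is reproducible
        Set<Integer> seenHashes = new HashSet<>();
        int count = 0;
        while (true) {
            String key = new UUID(random.nextLong(), random.nextLong()).toString();
            count++;
            // Set.add returns false when this hash code has been seen before
            if (!seenHashes.add(key.hashCode())) {
                return count;
            }
        }
    }

    public static void main(String[] args) {
        for (long seed = 1; seed <= 5; seed++) {
            System.out.println("seed " + seed + ": first collision after "
                    + firstCollision(seed) + " strings");
        }
    }
}
```

Running it for several seeds shows how much the count varies from run to run, which is why a single experiment lands anywhere from the tens of thousands to somewhat over a hundred thousand.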

-

02.25 22:01:47

Published an article

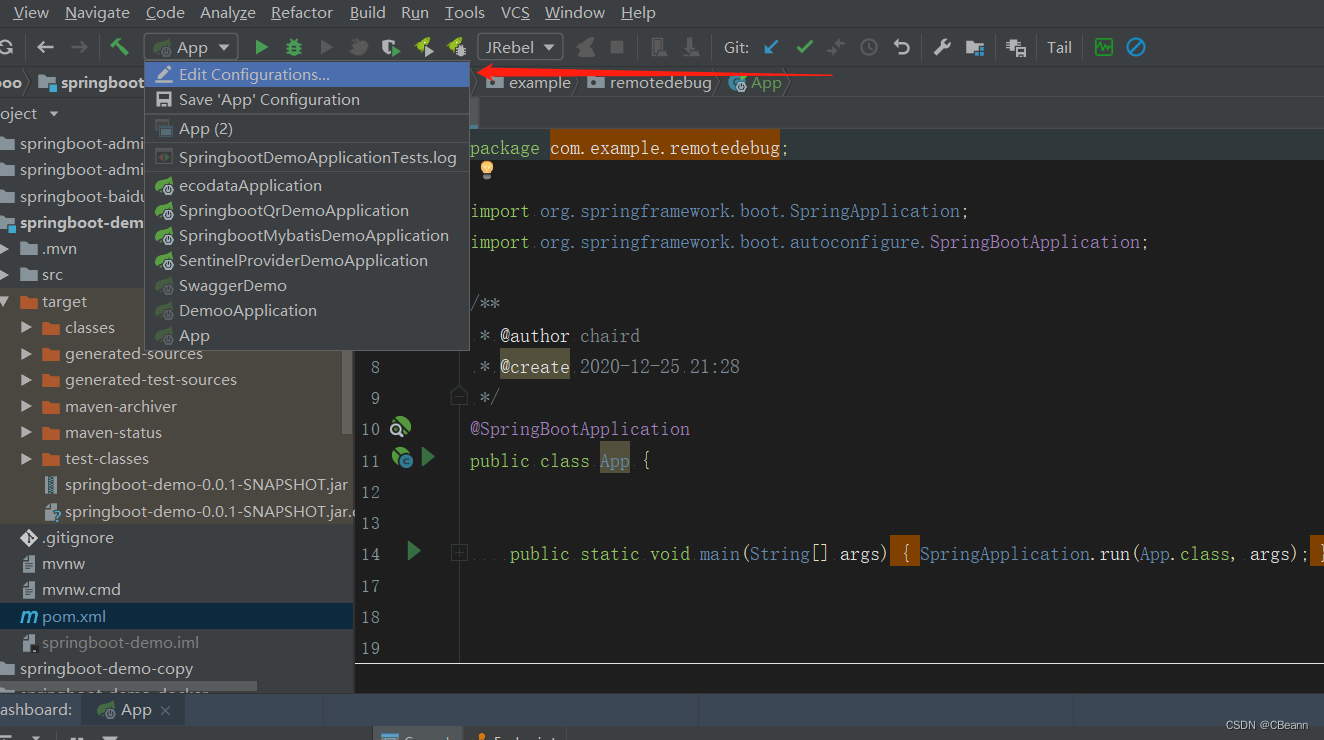

Setting Up Remote Debugging in IDEA

-

02.25 21:57:55

Published an article

Measuring Code Execution Time with StopWatch

-

02.25 21:56:39

Published an article

Docker's Four Network Modes

-

02.25 21:51:18

Published an article

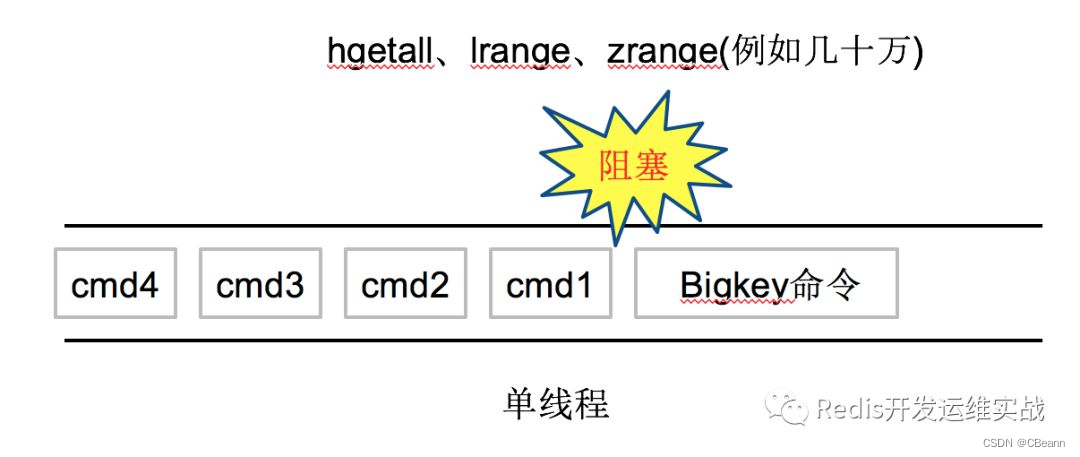

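Do You Know Redis bigkeys? Leave the Interviewer with Nothing Left to Ask [Interview Question]

Why I wrote this: I hadn't updated the blog in a long time. Recently an expert whose motto is "theory, hands-on practice, short summary" gave me some pointers. He asked whether I knew about bigkeys, then said: "Don't tell me your answer. Just tell me, have you actually tried it hands-on???" Me: ...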

-

02.25 16:59:37

Published an article

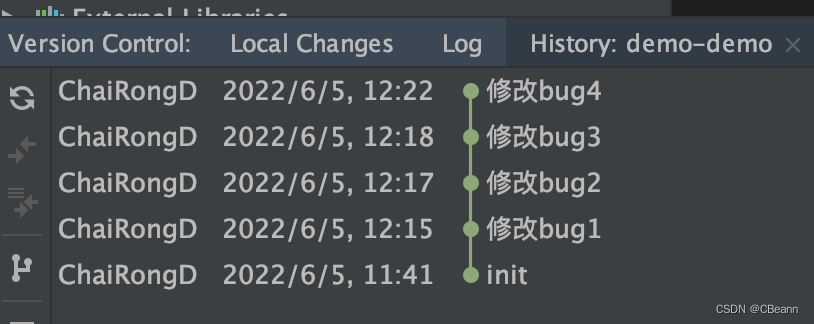

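Squashing Multiple Commits with git rebase -i

Why I wrote this: While coding, I committed every time I fixed a bug, and the commit history ended up a mess. Squashing multiple commits into one is worth learning.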

-

02.25 16:56:17

Published an article

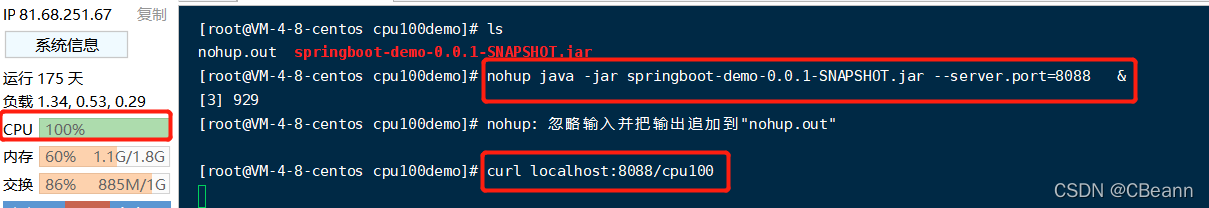

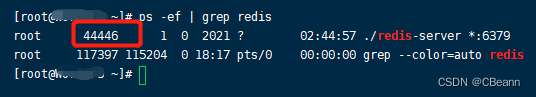

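Troubleshooting 100% CPU in a Java Service: A Practical Summary

Why I wrote this: I recently came across the question "What do you do when CPU hits 100%?" on Nowcoder (牛客网). The key is locating and fixing the cause, which involves many Linux and Java commands, so I wrote this post to record and summarize them.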

-

02.25 16:53:19

Published an article

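Finding the Full Path of the Launching Program from a PID on Linux

The problem: Sometimes you want to restart a service but don't know where its startup command lives, which is awkward. It would be great to work backwards from the process ID to the location of the startup script, and it turns out you can.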
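The post's exact commands aren't reproduced here. On Linux the usual approach relies on `/proc/<PID>/exe` and `/proc/<PID>/cwd` being symlinks to the process's executable and working directory. As a minimal Java sketch of the same lookup (assuming Java 9+ for the JDK's `ProcessHandle` API; the class and method names are hypothetical):

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;

public class PidLookup {

    /** Full path of the executable that started the process, if the OS exposes it. */
    static Optional<String> executableOf(long pid) {
        return ProcessHandle.of(pid).flatMap(h -> h.info().command());
    }

    /** Full command line (executable plus arguments), if available. */
    static Optional<String> commandLineOf(long pid) {
        return ProcessHandle.of(pid).flatMap(h -> h.info().commandLine());
    }

    /** Linux only: the working directory, read from the /proc/PID/cwd symlink. */
    static Optional<Path> workingDirOf(long pid) {
        try {
            return Optional.of(Files.readSymbolicLink(Paths.get("/proc", Long.toString(pid), "cwd")));
        } catch (IOException | UnsupportedOperationException | SecurityException e) {
            return Optional.empty(); // not Linux, no such process, or no permission
        }
    }

    public static void main(String[] args) {
        long pid = args.length > 0 ? Long.parseLong(args[0]) : ProcessHandle.current().pid();
        System.out.println("pid:        " + pid);
        System.out.println("executable: " + executableOf(pid).orElse("<unavailable>"));
        System.out.println("cmdline:    " + commandLineOf(pid).orElse("<unavailable>"));
        System.out.println("cwd:        " + workingDirOf(pid).map(Path::toString).orElse("<unavailable>"));
    }
}
```

Pass the target PID as the first argument; with no argument it inspects its own JVM. Inspecting another user's process may require elevated permissions, in which case the fields come back as `<unavailable>`.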

-

02.25 16:50:02

Published an article

Feign Source Code Analysis: How Interfaces Are Discovered and Proxy Classes Generated

-

02.25 16:45:25

Published an article

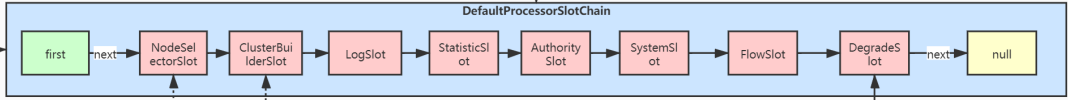

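Sentinel Source Code Analysis: A Summary

Why I wrote this: I've been reading the Sentinel source code recently and ran into a few issues. I'm recording and sharing them here so that readers who find this post don't have to keep searching.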

-

02.25 16:38:08

Published an article

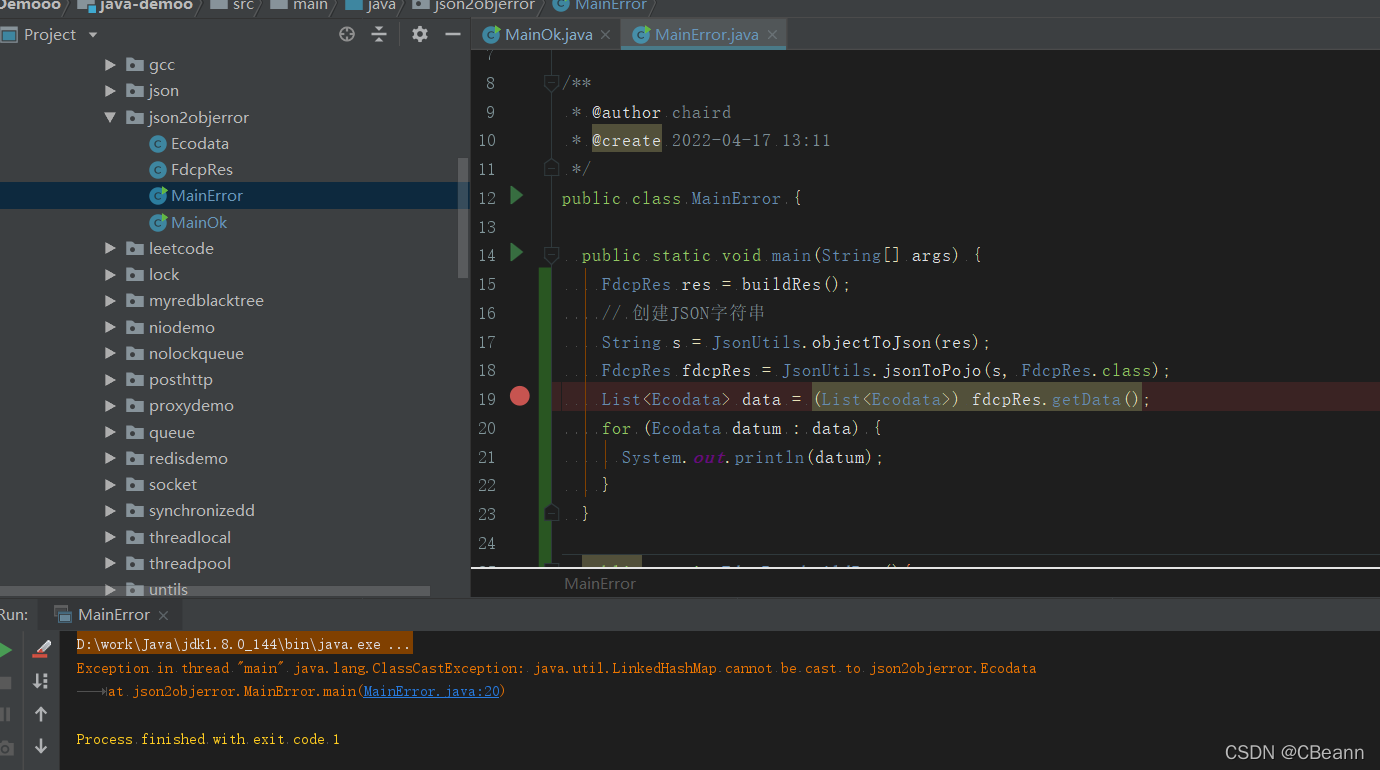

java.util.LinkedHashMap cannot be cast to

-

02.25 16:36:40

Published an article

Installing and Using SkyWalking

-

02.25 16:31:52

Published an article

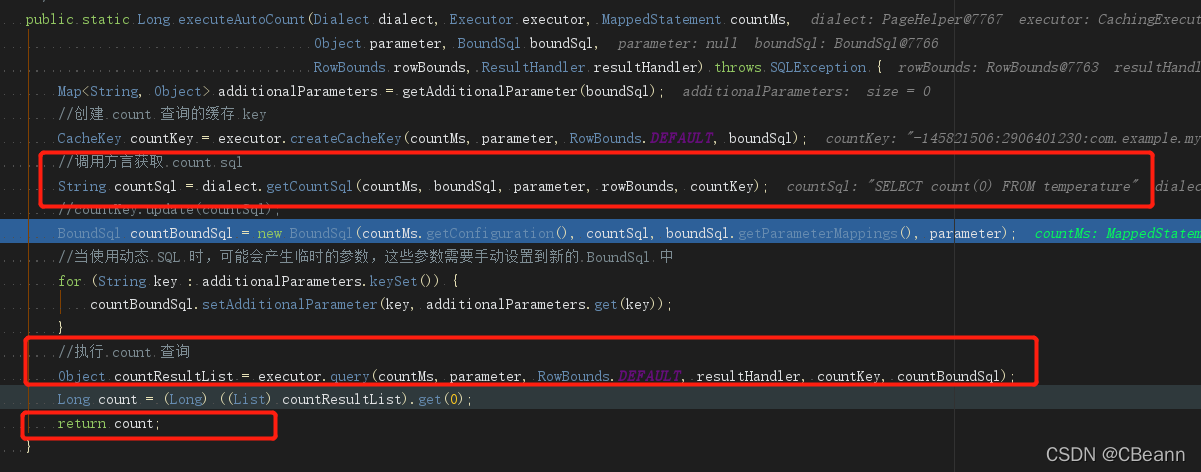

PageHelper Pagination Still Returns Data Even Though the Next Page Should Be Empty

-

02.25 16:29:38

Published an article

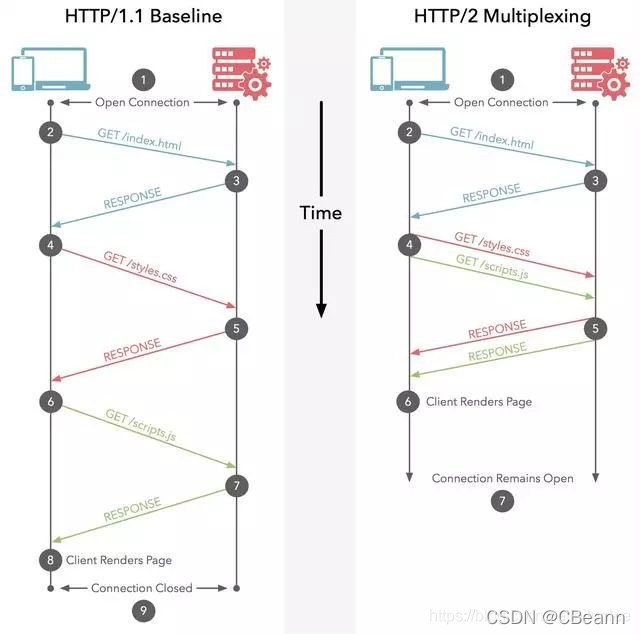

Differences Between HTTP/1.0, HTTP/1.1, HTTP/2, and HTTP/3 [Interview Question]

-

02.25 16:26:42

Published an article

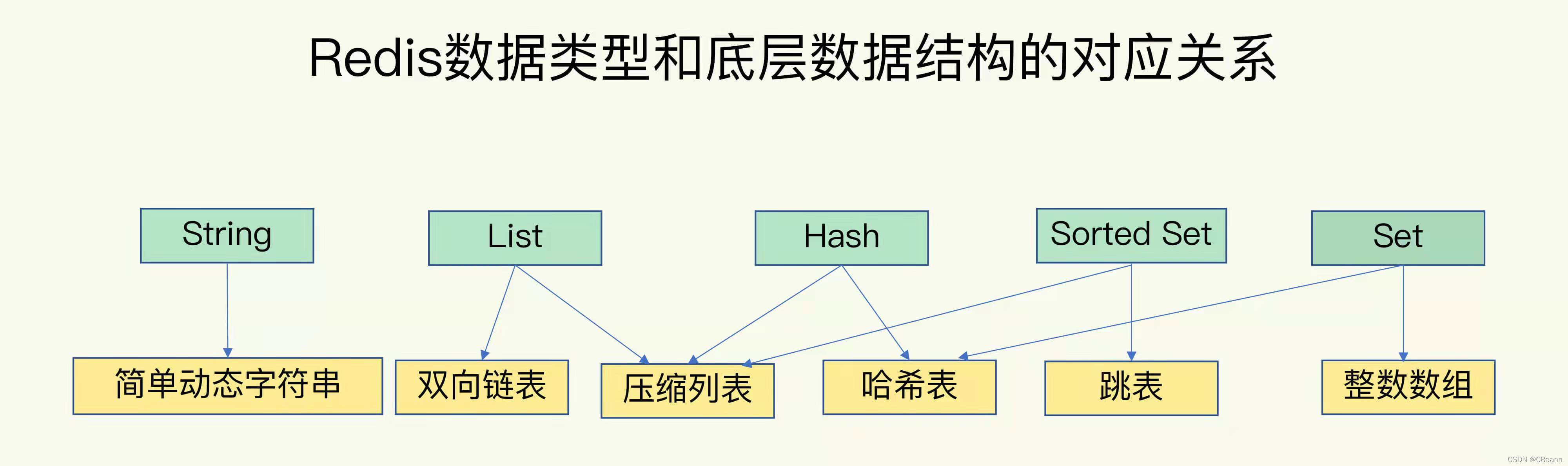

Redis Basic Types and Their Underlying Data Structures [Interview Question]

-

02.25 16:21:18

Published an article

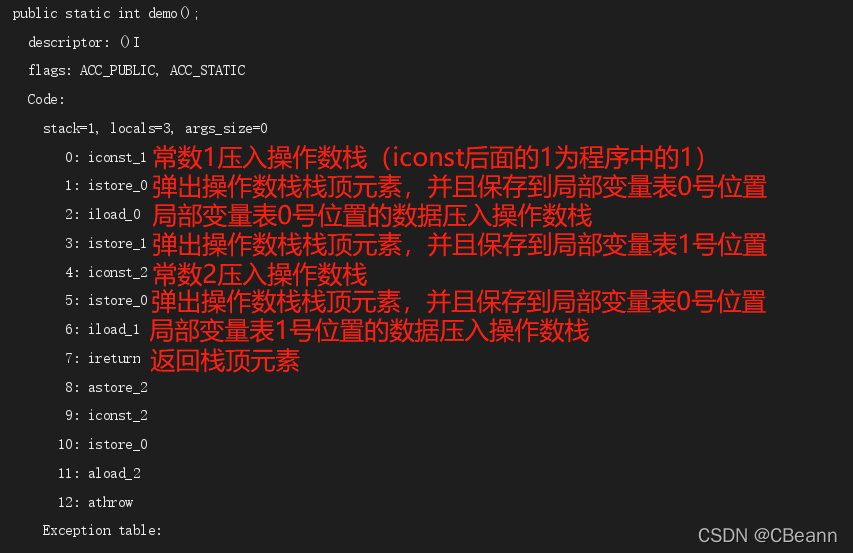

So Many Twists from a Single try-catch [Interview Question]

-

02.25 16:15:58

Published an article

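How MyBatis Pagination Works

Why I wrote this: I recently read an article on how MyBatis pagination is implemented, which said it uses ThreadLocal. Mainly I wanted to see this clever use of ThreadLocal, and also how the pagination itself is done.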
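A minimal sketch of the ThreadLocal pattern, assuming a PageHelper-style flow: a static `startPage` call stashes the page parameters on the current thread, and the step that later sees the SQL (an interceptor, in MyBatis) consumes and clears them. All names here are hypothetical, and the SQL rewriting is deliberately naive (MySQL-style `LIMIT offset, size`).

```java
public final class PageContext {

    /** Page parameters for one query; pageNum is 1-based. */
    private static final class Page {
        final int pageNum;
        final int pageSize;

        Page(int pageNum, int pageSize) {
            this.pageNum = pageNum;
            this.pageSize = pageSize;
        }

        int offset() {
            return (pageNum - 1) * pageSize;
        }
    }

    // One pending page request per thread, consumed by the next query
    private static final ThreadLocal<Page> LOCAL_PAGE = new ThreadLocal<>();

    /** Business code calls this right before the query it wants paginated. */
    public static void startPage(int pageNum, int pageSize) {
        LOCAL_PAGE.set(new Page(pageNum, pageSize));
    }

    /** Where an interceptor sees the SQL: append LIMIT if a page is pending, then clear it. */
    public static String applyPaging(String sql) {
        Page page = LOCAL_PAGE.get();
        if (page == null) {
            return sql; // nothing requested on this thread
        }
        try {
            return sql + " LIMIT " + page.offset() + ", " + page.pageSize;
        } finally {
            LOCAL_PAGE.remove(); // one-shot: never leak into a later query on a pooled thread
        }
    }

    public static void main(String[] args) {
        startPage(2, 10);
        System.out.println(applyPaging("SELECT * FROM user"));   // SELECT * FROM user LIMIT 10, 10
        System.out.println(applyPaging("SELECT * FROM orders")); // SELECT * FROM orders
    }
}
```

The `finally { remove(); }` is the important design choice: request threads are pooled, so a page left on the thread would silently paginate some unrelated later query.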

-

02.25 16:11:37

Published an article

How RocketMQ Transactions Are Implemented

-

02.25 16:05:56

Published an article

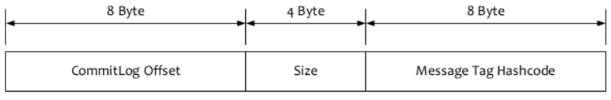

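The Role of the Tag in ConsumeQueue

The problem: Whatever exists must exist for a reason. So what is the purpose of storing the hash code of a message's tag in the ConsumeQueue?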
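One commonly given answer, sketched here under assumptions: each ConsumeQueue unit is 20 bytes (8-byte CommitLog offset, 4-byte message size, 8-byte tag hash code), so the broker can discard non-matching messages by comparing hash codes without reading the CommitLog at all. Because different tags can share a hash code, the consumer then re-checks the actual tag string. This Java code simulates both stages; the class and method names are hypothetical, and the tags `"Aa"` and `"BB"` are chosen because they have the same `String.hashCode()`.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class TagFilterSketch {

    // 8-byte CommitLog offset + 4-byte message size + 8-byte tag hash code
    static final int UNIT_SIZE = 20;

    /** Append one ConsumeQueue-style unit; only the tag's hash is stored, not the tag. */
    static void writeEntry(ByteBuffer queue, long commitLogOffset, int size, String tag) {
        queue.putLong(commitLogOffset);
        queue.putInt(size);
        queue.putLong(tag.hashCode());
    }

    /** Broker stage: match on hash codes only, without reading the CommitLog. */
    static List<Long> brokerFilter(ByteBuffer queue, Set<String> subscribedTags) {
        Set<Long> codes = new HashSet<>();
        for (String tag : subscribedTags) {
            codes.add((long) tag.hashCode());
        }
        List<Long> candidates = new ArrayList<>();
        ByteBuffer view = queue.duplicate(); // same bytes, independent position
        view.flip();                         // read back what has been written so far
        while (view.remaining() >= UNIT_SIZE) {
            long offset = view.getLong();
            view.getInt();                   // message size is not needed for filtering
            long tagCode = view.getLong();
            if (codes.contains(tagCode)) {
                candidates.add(offset);
            }
        }
        return candidates;
    }

    /** Consumer stage: drop hash-collision false positives by comparing the real tag. */
    static List<Long> clientFilter(List<Long> candidates, Map<Long, String> tagsByOffset,
                                   Set<String> subscribedTags) {
        List<Long> delivered = new ArrayList<>();
        for (Long offset : candidates) {
            String tag = tagsByOffset.get(offset);
            if (tag != null && subscribedTags.contains(tag)) {
                delivered.add(offset);
            }
        }
        return delivered;
    }

    public static void main(String[] args) {
        String[] tags = {"Aa", "BB", "TagC"}; // "Aa" and "BB" share String.hashCode() == 2112
        ByteBuffer queue = ByteBuffer.allocate(UNIT_SIZE * tags.length);
        Map<Long, String> tagsByOffset = new HashMap<>(); // stands in for the messages in the CommitLog
        for (int i = 0; i < tags.length; i++) {
            long offset = i * 100L;
            writeEntry(queue, offset, 100, tags[i]);
            tagsByOffset.put(offset, tags[i]);
        }
        Set<String> subscription = Set.of("Aa");
        List<Long> candidates = brokerFilter(queue, subscription);
        System.out.println("broker candidates: " + candidates);                                   // [0, 100]
        System.out.println("delivered: " + clientFilter(candidates, tagsByOffset, subscription)); // [0]
    }
}
```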

-

02.25 16:01:33

Published an article

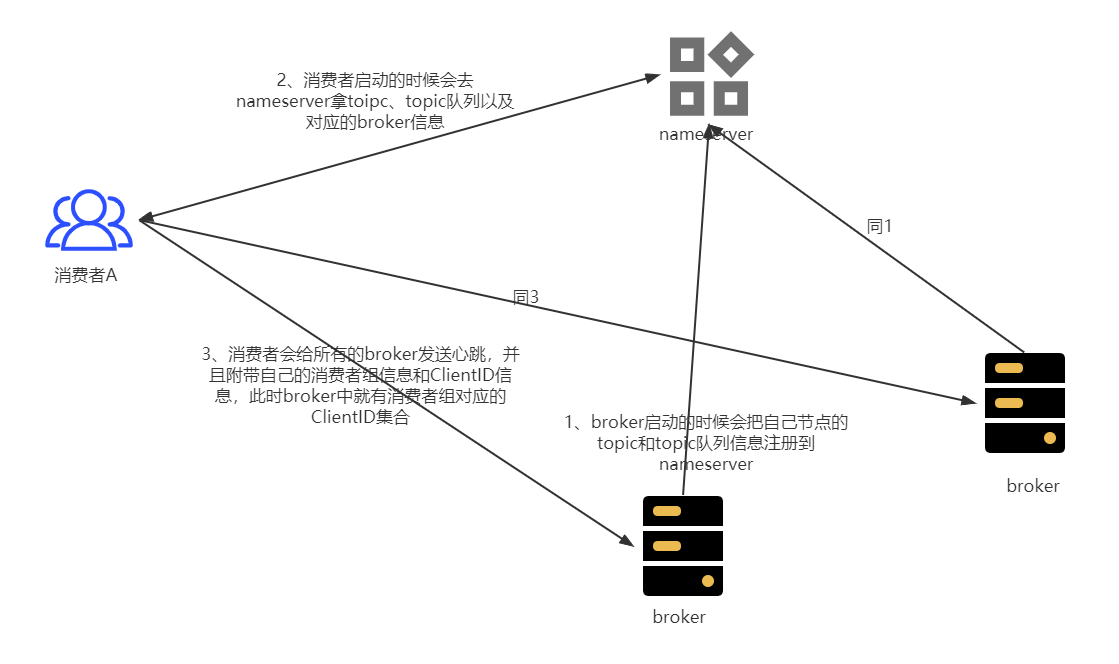

RocketMQ Consumer Startup Process

Question: When a consumer starts up, where does it get its messages from?

-

02.25 15:39:55

Published an article

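Determining the CommitLog Write Position When Sending Messages to a RocketMQ Broker

Question: When a client sends a message to the broker, how does it know at which byte of the CommitLog to start writing?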

-

02.25 15:36:04

Published an article

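RocketMQ's Automatic Topic Creation Mechanism

Questions: While learning RocketMQ, I had a few questions. If a topic doesn't exist, where does the client send the message? And when a message is sent to a nonexistent topic, when does the topic actually get created?

-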

02.25 15:29:29

Published an article

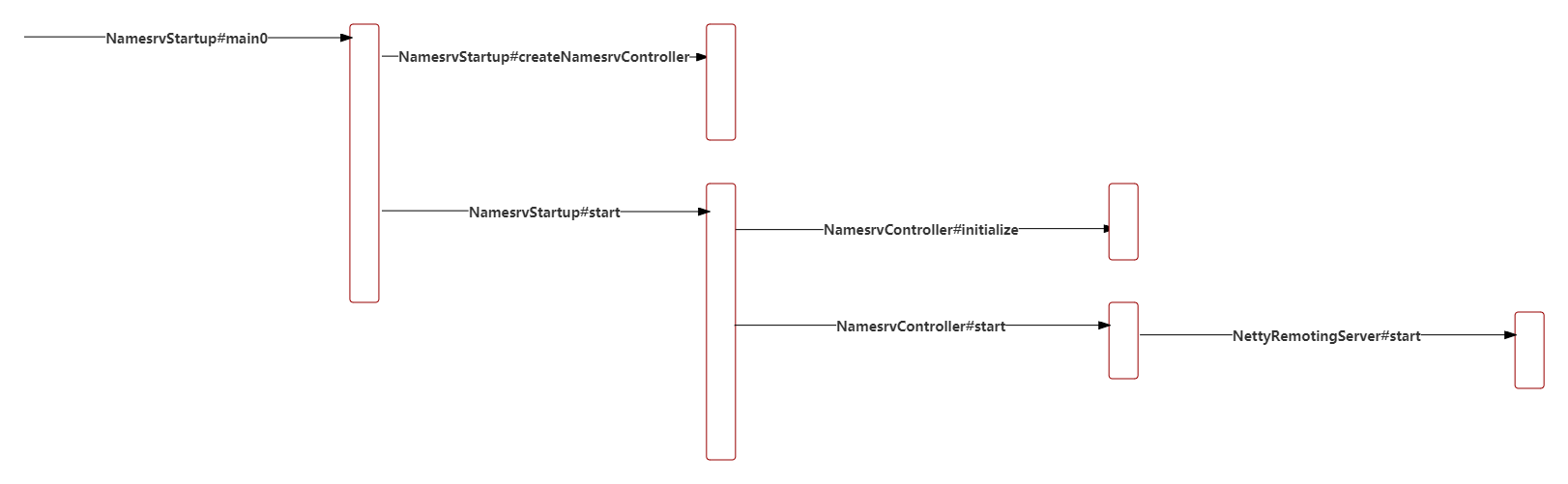

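Source Code Analysis: How a Broker Registers with the NameServer

Why I wrote this: RocketMQ is an open-source project written in Java and open-sourced by Alibaba, so I wanted to study its design and some of its details. This post records how the Broker registers with the NameServer, along with the heartbeat logic.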
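The heartbeat idea can be summarized as: the broker re-registers with every NameServer on a fixed period, each registration refreshes a "last seen" timestamp, and the NameServer periodically evicts brokers it hasn't heard from in a while. Here is a simplified Java sketch with timestamps passed in explicitly so it is deterministic. The 30 s registration period and 120 s expiry (with the NameServer scanning roughly every 10 s) are the RocketMQ 4.x defaults as I understand them, so verify them against your version's source; all names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class BrokerLivenessSketch {

    // Broker -> NameServer registration period (assumed default)
    static final long REGISTER_PERIOD_MS = 30_000;
    // A broker silent for longer than this is dropped from the route table (assumed default)
    static final long BROKER_EXPIRED_MS = 120_000;

    // brokerAddr -> time of its last registration (heartbeat)
    private final Map<String, Long> lastUpdate = new ConcurrentHashMap<>();

    /** Each periodic registration doubles as a heartbeat. */
    public void register(String brokerAddr, long nowMs) {
        lastUpdate.put(brokerAddr, nowMs);
    }

    /** What the NameServer's periodic scan does: evict silent brokers and return how many. */
    public int evictSilentBrokers(long nowMs) {
        int before = lastUpdate.size();
        lastUpdate.entrySet().removeIf(e -> nowMs - e.getValue() > BROKER_EXPIRED_MS);
        return before - lastUpdate.size();
    }

    public boolean isAlive(String brokerAddr) {
        return lastUpdate.containsKey(brokerAddr);
    }

    public static void main(String[] args) {
        BrokerLivenessSketch nameServer = new BrokerLivenessSketch();
        nameServer.register("broker-a:10911", 0);
        nameServer.register("broker-b:10911", 0);
        // broker-a keeps heartbeating; broker-b has gone silent
        nameServer.register("broker-a:10911", REGISTER_PERIOD_MS * 3);
        System.out.println(nameServer.evictSilentBrokers(130_000)); // 1
        System.out.println(nameServer.isAlive("broker-a:10911"));   // true
        System.out.println(nameServer.isAlive("broker-b:10911"));   // false
    }
}
```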

-

02.25 15:24:12

Published an article

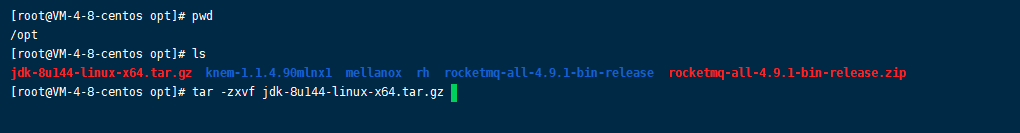

Installing RocketMQ in Standalone Mode

-

02.25 15:17:57

Published an article

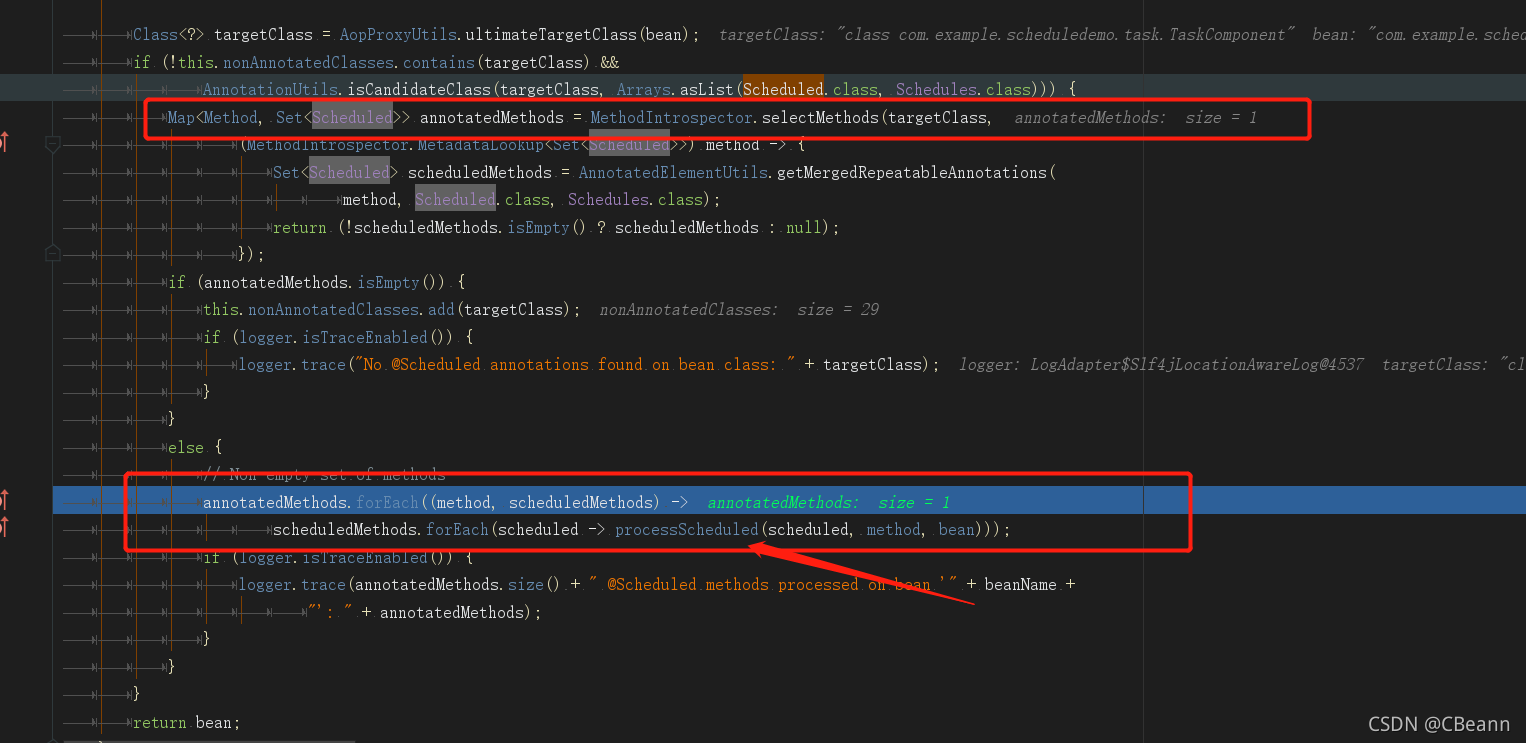

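Spring Boot Scheduled Tasks: Source Code Analysis

Why I wrote this: I recently read a blog post titled "Dynamically Adding, Removing, Starting, and Stopping Scheduled Tasks in Spring Boot." The prerequisite for that is understanding how scheduled tasks are implemented; only then can you add, remove, start, and stop them dynamically. So this post traces how Spring Boot scheduled tasks work, starting from the source code.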
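The core idea behind dynamic add/remove/start/stop can be illustrated without Spring: keep the `ScheduledFuture` returned for each task and cancel it when the task should stop. This plain-JDK sketch is an analogy, assuming (as I understand it) that Spring's default schedulers ultimately delegate to a `ScheduledExecutorService`; the registry class and its methods are hypothetical, not Spring's API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class DynamicTaskRegistry {

    // Daemon threads so a demo JVM can exit even if shutdown() is never called
    private final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(2, r -> {
        Thread t = new Thread(r, "dynamic-task");
        t.setDaemon(true);
        return t;
    });
    // task id -> handle used to stop the task later
    private final Map<String, ScheduledFuture<?>> tasks = new HashMap<>();

    /** Start a task at a fixed rate; returns false if the id is already scheduled. */
    public synchronized boolean add(String id, Runnable job, long periodMs) {
        if (tasks.containsKey(id)) {
            return false;
        }
        tasks.put(id, scheduler.scheduleAtFixedRate(job, 0, periodMs, TimeUnit.MILLISECONDS));
        return true;
    }

    /** Stop a task; a run already in progress is allowed to finish. */
    public synchronized boolean remove(String id) {
        ScheduledFuture<?> handle = tasks.remove(id);
        if (handle == null) {
            return false;
        }
        handle.cancel(false);
        return true;
    }

    /** Change a task's period by stopping it and scheduling it again. */
    public synchronized boolean reschedule(String id, Runnable job, long periodMs) {
        remove(id);
        return add(id, job, periodMs);
    }

    public synchronized Set<String> scheduledIds() {
        return new TreeSet<>(tasks.keySet());
    }

    public void shutdown() {
        scheduler.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        DynamicTaskRegistry registry = new DynamicTaskRegistry();
        AtomicInteger ticks = new AtomicInteger();
        registry.add("report", ticks::incrementAndGet, 50);
        Thread.sleep(300);
        registry.remove("report");
        System.out.println("ran " + ticks.get() + " times; still scheduled: " + registry.scheduledIds());
        registry.shutdown();
    }
}
```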

-

02.25 15:13:52

Published an article

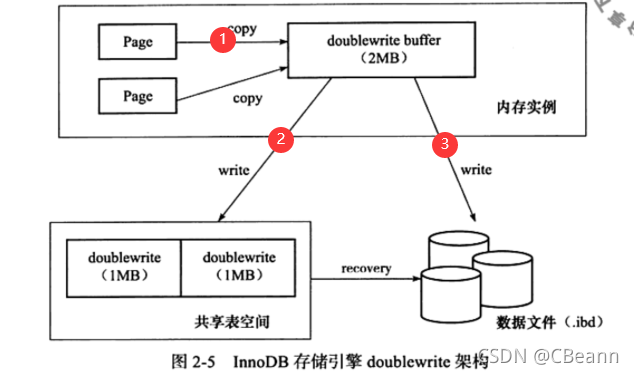

Key Features of InnoDB in MySQL

-

02.25 15:11:24

Published an article

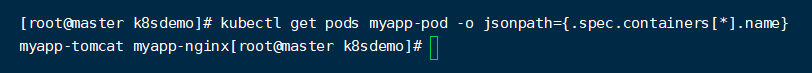

Viewing the Number and Names of Containers in a k8s Pod

-

02.25 15:08:58

Published an article

Copying Files with Docker

-

02.25 15:07:42

Published an article

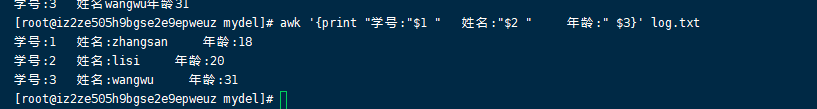

Basic Usage of the Linux awk Command

-

02.25 15:05:36

Published an article

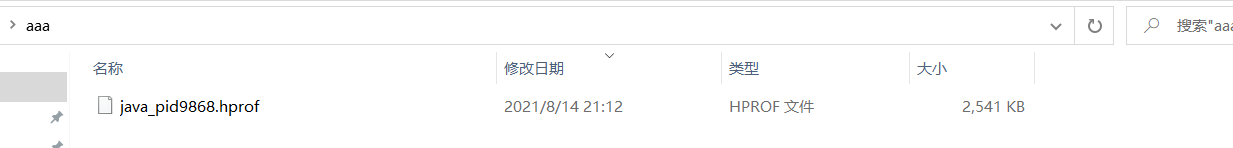

A Small OOM Troubleshooting Case

Why I wrote this: "Have you ever troubleshot an OOM problem?" Um... no.

-

02.25 15:00:28

Published an article

Converting Between Wide and Long Tables in MySQL

-

02.25 14:58:23

Published an article

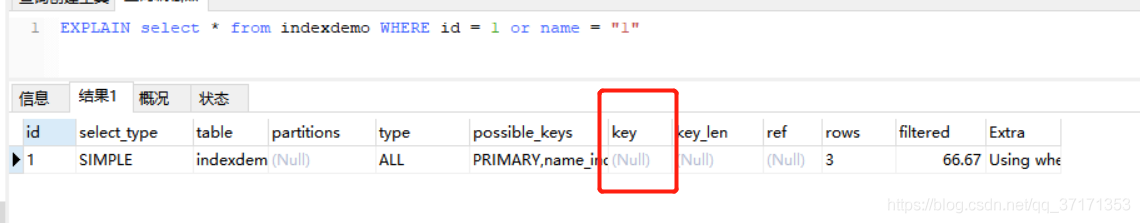

Analyzing the Cases Where MySQL Indexes Stop Being Used

-

02.25 14:56:01

Published an article

Understanding the volatile Keyword

-

02.25 14:51:21

Published an article

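Uploading Files with RestTemplate

Why I wrote this: I'm maintaining a project that uses RestTemplate for calls between services. Recently a requirement came up to parse Excel files, and the few articles I found on Baidu were incomplete, so I wrote this post to record the approach. That said, I still recommend Feign: it's interface-oriented, which makes it more convenient.

-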

02.25 14:50:37

Published an article

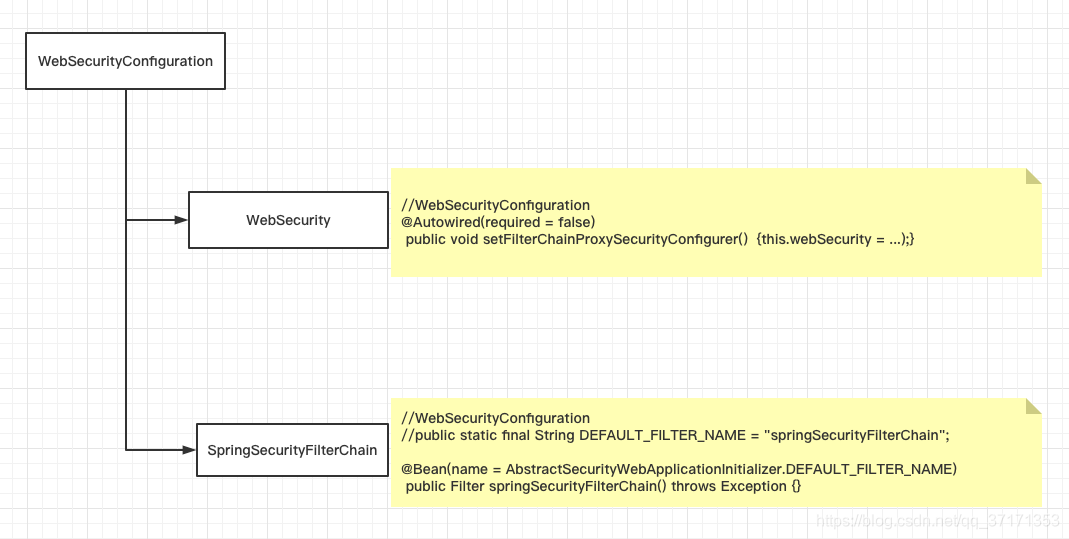

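The Spring Security Authentication Flow

Why I wrote this: I've been learning Spring Security recently and ran into a question. The first time I entered localhost:8080/hello in the browser, it told me I wasn't logged in and redirected me to the login page. After logging in successfully, I entered localhost:8080/hello again and could access it. So where is the authentication information stored for that second check? After tracing the source code, I found that it is stored in the session, and on every request it is taken out of the session and placed in a ThreadLocal.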
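A plain-Java sketch of the flow the post describes: the authenticated identity lives in the session, and a filter copies it into a ThreadLocal for the duration of each request. To my understanding, the real counterparts are `HttpSessionSecurityContextRepository` (default session attribute `SPRING_SECURITY_CONTEXT`) and `SecurityContextHolder`; everything here is a hypothetical, simplified stand-in that stores a username instead of a full `SecurityContext`.

```java
import java.util.HashMap;
import java.util.Map;

public class SessionContextSketch {

    // Session attribute name Spring Security uses by default for the security context
    private static final String CONTEXT_KEY = "SPRING_SECURITY_CONTEXT";

    // Stand-in for SecurityContextHolder's default ThreadLocal strategy
    private static final ThreadLocal<String> CURRENT_USER = new ThreadLocal<>();

    // Stand-in for servlet sessions: sessionId -> session attributes
    private final Map<String, Map<String, Object>> sessions = new HashMap<>();

    /** After credentials are verified, remember the user in the session. */
    public void login(String sessionId, String username) {
        sessions.computeIfAbsent(sessionId, id -> new HashMap<>()).put(CONTEXT_KEY, username);
    }

    /** Per request: load from the session, expose through the ThreadLocal, always clear. */
    public String handle(String sessionId, String path) {
        Map<String, Object> attributes = sessions.getOrDefault(sessionId, Map.of());
        Object user = attributes.get(CONTEXT_KEY);
        if (user == null) {
            return "302 redirect to /login"; // first visit: nothing in the session yet
        }
        CURRENT_USER.set((String) user);
        try {
            return controller(path);
        } finally {
            CURRENT_USER.remove(); // servlet threads are pooled; don't leak into the next request
        }
    }

    /** Business code reads the user from the ThreadLocal, never from the session directly. */
    private String controller(String path) {
        return "200 " + path + " for " + CURRENT_USER.get();
    }

    public static void main(String[] args) {
        SessionContextSketch app = new SessionContextSketch();
        System.out.println(app.handle("s1", "/hello")); // 302 redirect to /login
        app.login("s1", "alice");
        System.out.println(app.handle("s1", "/hello")); // 200 /hello for alice
    }
}
```

The two `handle` calls mirror the two visits in the post: the first finds nothing in the session and redirects to the login page, the second finds the stored identity and serves the page.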
