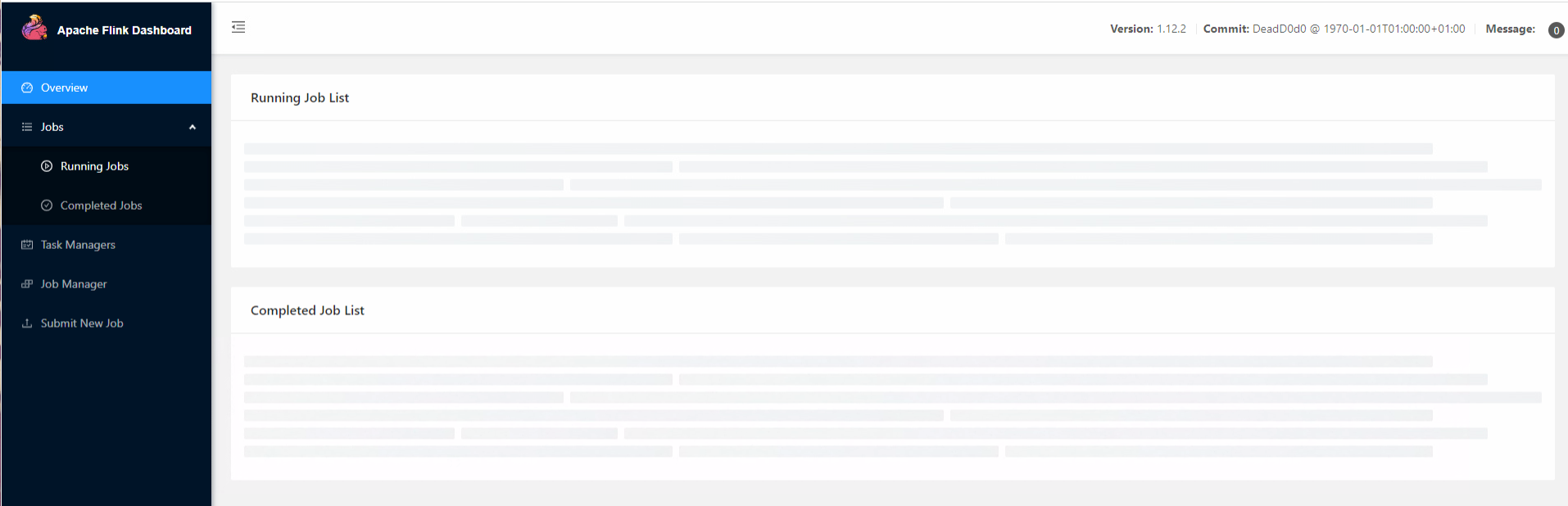

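Following the official documentation (https://nightlies.apache.org/flink/flink-docs-release-1.12/deployment/resource-providers/standalone/kubernetes.html#session-mode), deploying Flink 1.11 or later on Kubernetes produces a strange problem (1.10 and earlier work fine): after a job is submitted, the Dashboard hangs in a loading state and never shows the job information: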

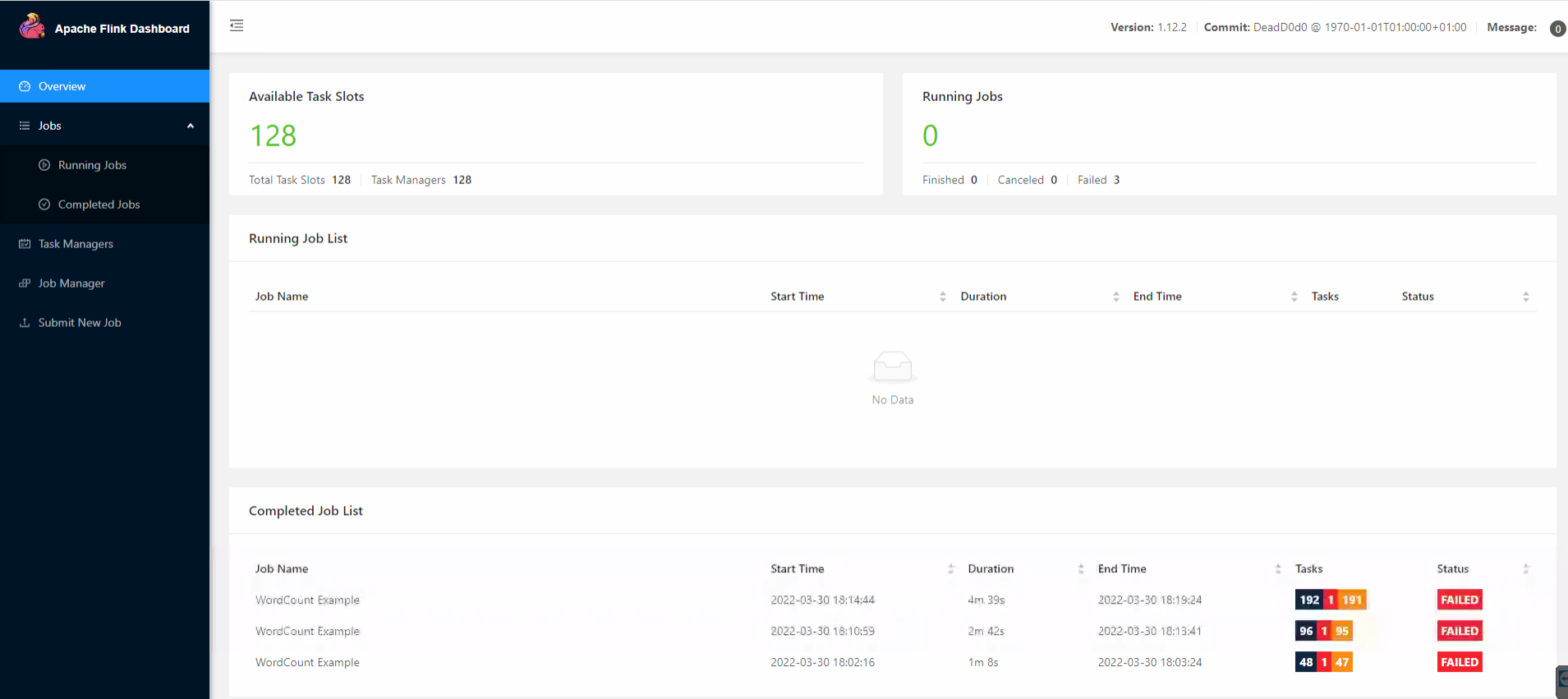

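This continues until the job fails. The length of the hang depends on the parallelism of the data source: the higher the parallelism, the longer the hang: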

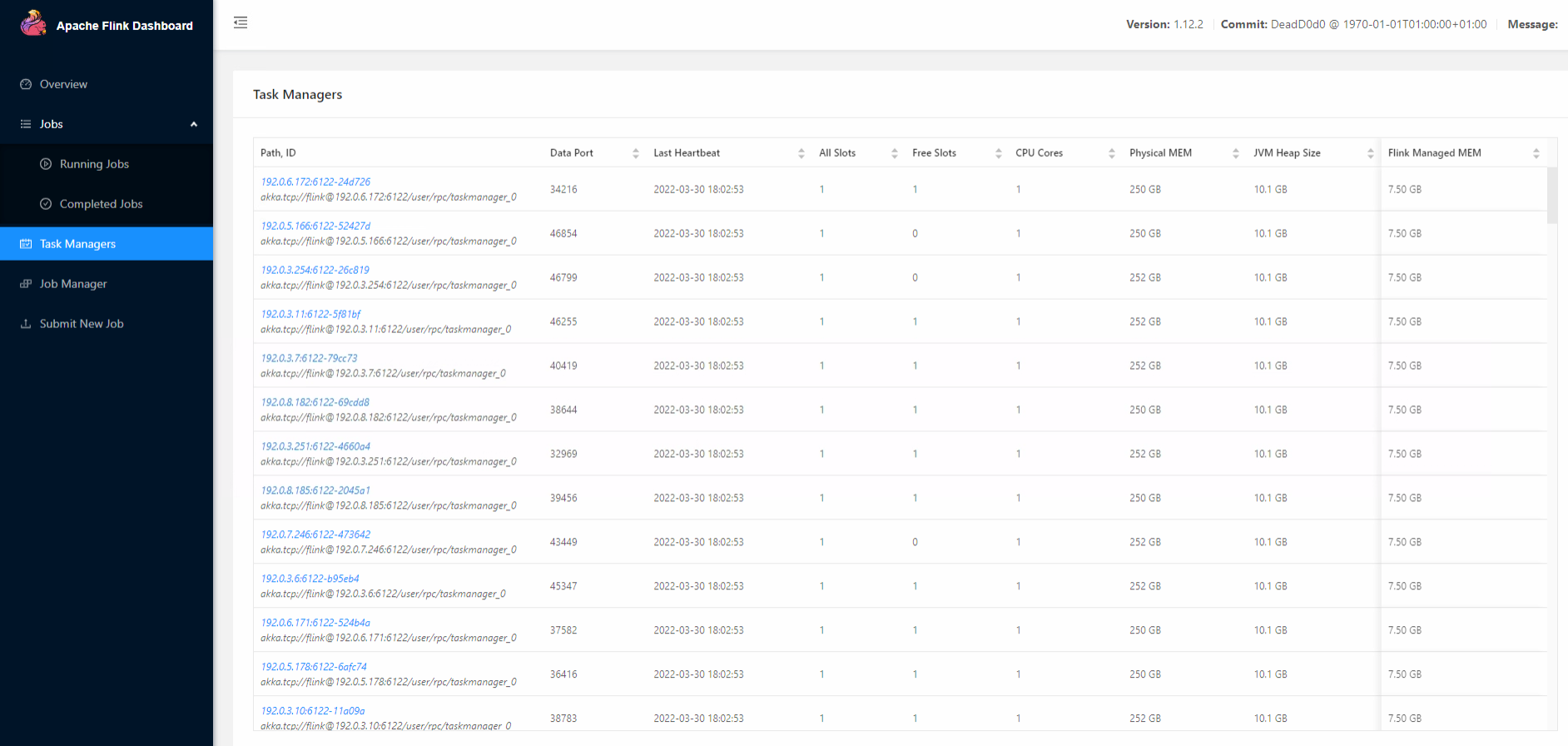

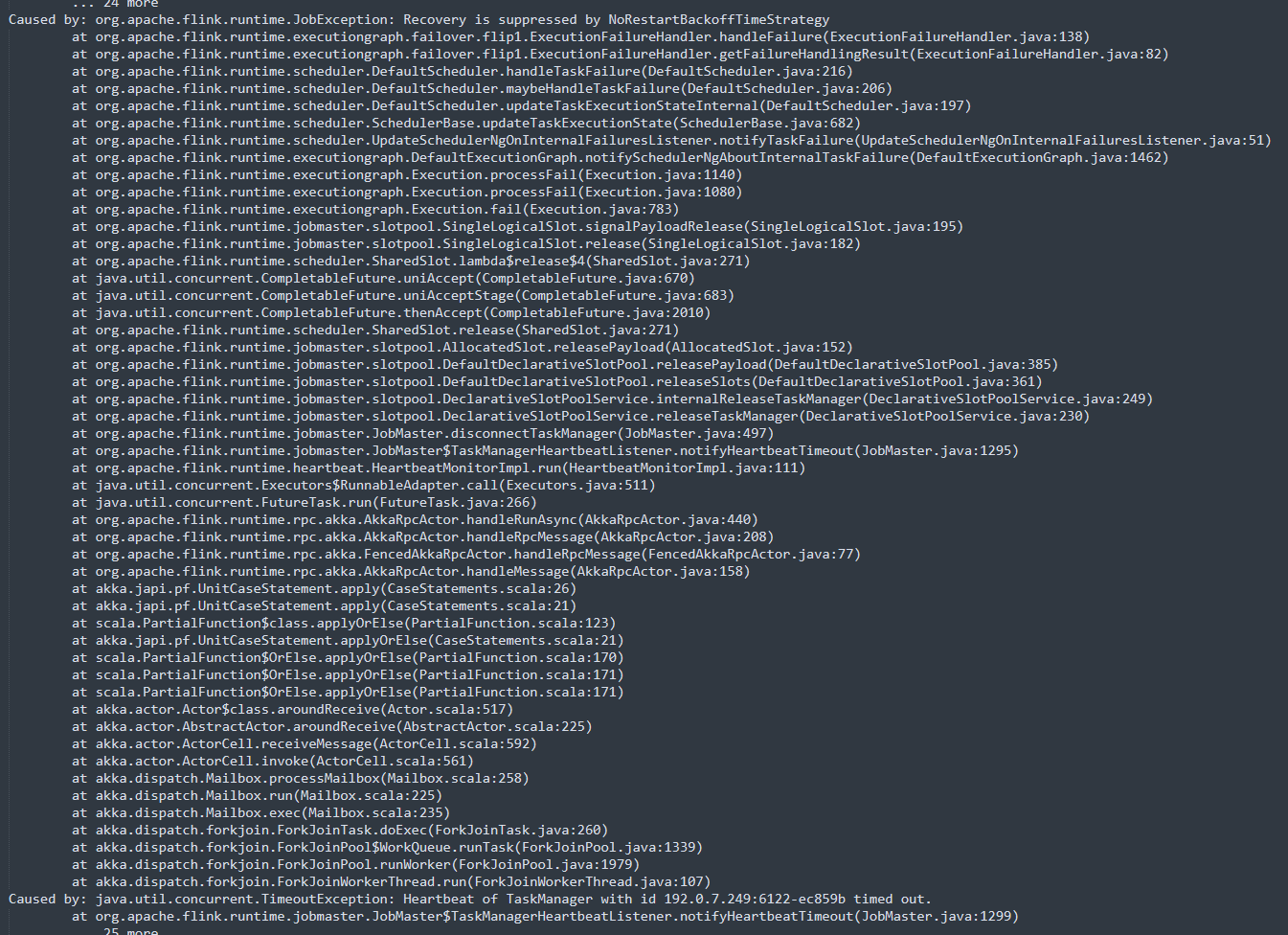

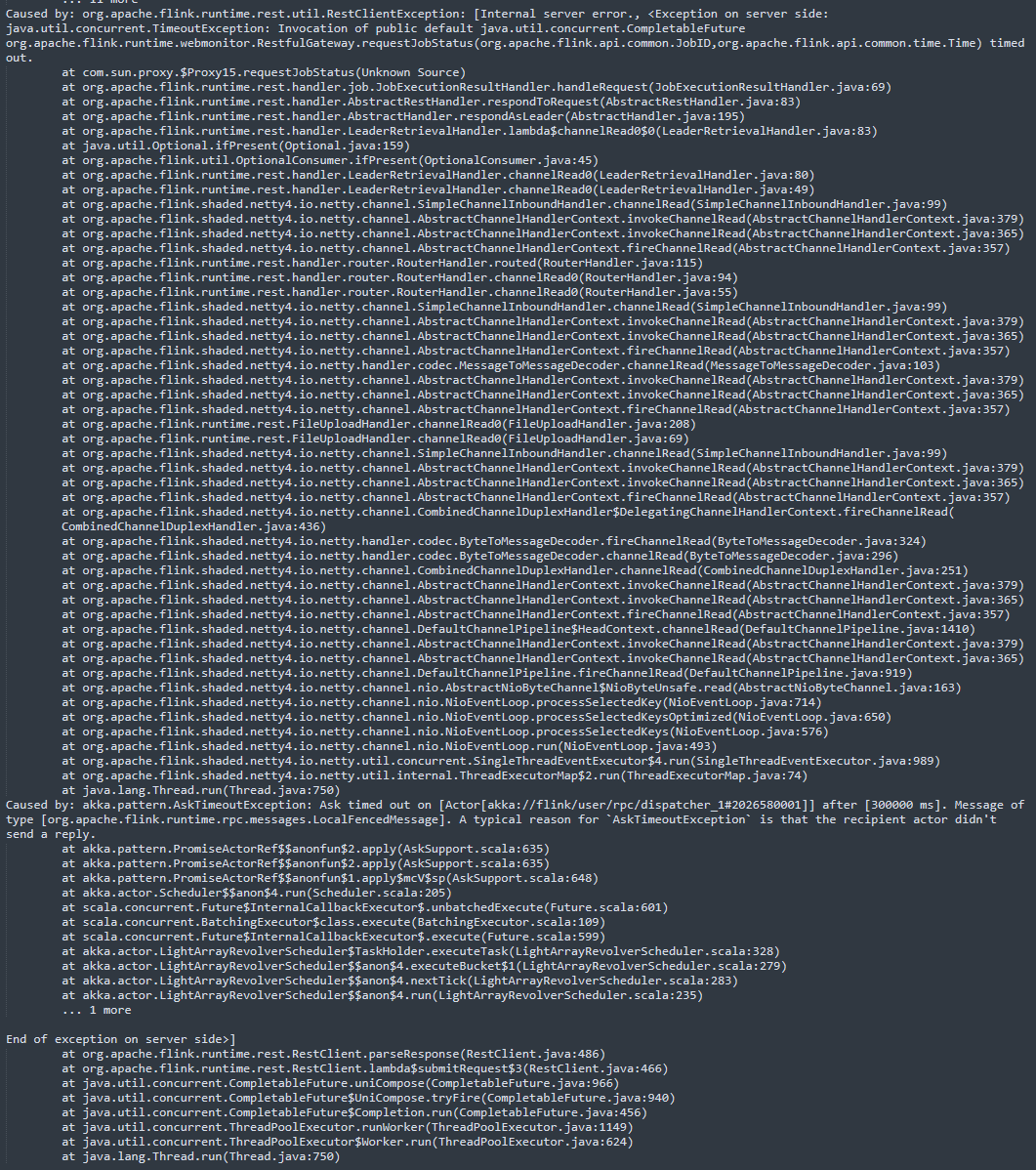

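Two error messages are attached at the end. They are mostly communication errors such as heartbeat timeouts. However, while the Dashboard is loading it can still display the TaskManager list, and the individual TaskManager pages show no abnormal GC pauses. Besides, if the network environment were the problem, why do versions before 1.10 work fine?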
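One way to narrow this down is to time the JobManager REST API directly while the Dashboard is stuck, to see whether the job endpoint itself is slow or only the UI. The following is a minimal sketch, not something taken from the Flink documentation: `BASE_URL`, `timed_get`, `summarize`, and `probe` are hypothetical names, and the address assumes the `ui` port (8081) has been made reachable locally, for example through the NodePort service or a port-forward.

```python
import json
import time
import urllib.request

# Assumption: the JobManager REST/UI port 8081 is reachable at this address.
BASE_URL = "http://localhost:8081"


def timed_get(path, base_url=BASE_URL, timeout=30.0):
    """Fetch one REST path and return (elapsed_seconds, parsed_json)."""
    start = time.monotonic()
    with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
        body = json.load(resp)
    return time.monotonic() - start, body


def summarize(overview, taskmanagers):
    """Reduce the two REST payloads to job-state counts and a TaskManager count."""
    states = {}
    for job in overview.get("jobs", []):
        states[job["state"]] = states.get(job["state"], 0) + 1
    return {
        "job_states": states,
        "taskmanagers": len(taskmanagers.get("taskmanagers", [])),
    }


def probe(base_url=BASE_URL):
    """Time /jobs/overview and /taskmanagers once and print the result."""
    t_jobs, jobs = timed_get("/jobs/overview", base_url)
    t_tms, tms = timed_get("/taskmanagers", base_url)
    print(f"/jobs/overview: {t_jobs:.2f}s  /taskmanagers: {t_tms:.2f}s")
    print(summarize(jobs, tms))
```

Calling `probe()` a few times after submitting the job would show whether `/jobs/overview` is slow or times out while `/taskmanagers` still answers quickly, which would match what the Dashboard shows.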

Could the deployment method be wrong? Below are the cluster environment, the deployment files, and the exception information:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: flink-config-new
      namespace: gaia
      labels:
        app: flink-new
    data:
      flink-conf.yaml: |+
        jobmanager.rpc.address: flink-jobmanager-new
        taskmanager.numberOfTaskSlots: 1
        blob.server.port: 6124
        jobmanager.rpc.port: 6123
        taskmanager.rpc.port: 6122
        queryable-state.proxy.ports: 6125
        jobmanager.memory.process.size: 1024m
        taskmanager.memory.process.size: 10240m
        akka.ask.timeout: 60s
        web.timeout: 300000
        heartbeat.timeout: 60000
      log4j-console.properties: |+
        log4j.rootLogger=INFO, file
        log4j.logger.akka=INFO
        log4j.logger.org.apache.kafka=INFO
        log4j.logger.org.apache.hadoop=INFO
        log4j.logger.org.apache.zookeeper=INFO
        log4j.appender.file=org.apache.log4j.FileAppender
        log4j.appender.file.file=${log.file}
        log4j.appender.file.layout=org.apache.log4j.PatternLayout
        log4j.appender.file.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n
        log4j.logger.org.apache.flink.shaded.akka.org.jboss.netty.channel.DefaultChannelPipeline=ERROR, file

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: flink-jobmanager-new
      namespace: gaia
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: flink-new
      template:
        metadata:
          labels:
            app: flink-new
            component: jobmanager
        spec:
          containers:
          - name: jobmanager
            image: flink:1.12.2
            args:
            - jobmanager
            ports:
            - containerPort: 6123
              name: rpc
            - containerPort: 6124
              name: blob
            - containerPort: 6125
              name: query
            - containerPort: 8081
              name: ui
            env:
            - name: JOB_MANAGER_RPC_ADDRESS
              value: flink-jobmanager-new
            volumeMounts:
            - name: flink-config-volume-new
              mountPath: /opt/flink/conf/
            - name: gaia-volume-new
              mountPath: /opt/data/
          volumes:
          - name: flink-config-volume-new
            configMap:
              name: flink-config-new
              items:
              - key: flink-conf.yaml
                path: flink-conf.yaml
              - key: log4j-console.properties
                path: log4j-console.properties
              - key: log4j-cli.properties
                path: log4j-cli.properties
          - name: gaia-volume-new
            persistentVolumeClaim:
              claimName: gaia-pvc

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: flink-taskmanager-new
      namespace: gaia
    spec:
      replicas: 128
      selector:
        matchLabels:
          app: flink-new
      template:
        metadata:
          labels:
            app: flink-new
            component: taskmanager
        spec:
          containers:
          - name: taskmanager
            image: flink:1.12.2
            args:
            - taskmanager
            ports:
            - containerPort: 6121
              name: data
            - containerPort: 6122
              name: rpc
            - containerPort: 6125
              name: query
            env:
            - name: JOB_MANAGER_RPC_ADDRESS
              value: flink-jobmanager-new
            volumeMounts:
            - name: flink-config-volume-new
              mountPath: /opt/flink/conf/
            - name: gaia-volume-new
              mountPath: /opt/data
          volumes:
          - name: flink-config-volume-new
            configMap:
              name: flink-config-new
              items:
              - key: flink-conf.yaml
                path: flink-conf.yaml
              - key: log4j-console.properties
                path: log4j-console.properties
              - key: log4j-cli.properties
                path: log4j-cli.properties
          - name: gaia-volume-new
            persistentVolumeClaim:
              claimName: gaia-pvc

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: flink-jobmanager-new
      namespace: gaia
    spec:
      ports:
      - name: rpc
        port: 6123
      - name: blob
        port: 6124
      - name: query
        port: 6125
      - name: ui
        port: 8081
      selector:
        app: flink-new
        component: jobmanager
      type: NodePort

(The full text could not be submitted, so only screenshots of the key parts are included.)
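For reference when reading the heartbeat errors: the three timeout settings in the ConfigMap above are written in different units. In Flink 1.12, `akka.ask.timeout` takes a duration string, while `web.timeout` and `heartbeat.timeout` are plain millisecond values. The rough sketch below converts them to seconds so they can be compared side by side. The helper names are hypothetical, and the unit table covers only a small subset of the duration suffixes Flink accepts.

```python
import re

# Keys whose values are plain millisecond counts in Flink 1.12.
MILLISECOND_KEYS = {"web.timeout", "heartbeat.timeout"}
# Assumption: only these duration suffixes are handled in this sketch.
UNIT_SECONDS = {"ms": 0.001, "s": 1.0, "min": 60.0, "h": 3600.0}


def to_seconds(key, value):
    """Convert one timeout value to seconds, based on the key's unit convention."""
    value = value.strip()
    if key in MILLISECOND_KEYS:
        return int(value) / 1000.0
    match = re.fullmatch(r"(\d+)\s*([a-z]+)", value)
    if match is None or match.group(2) not in UNIT_SECONDS:
        raise ValueError(f"unsupported duration for {key}: {value!r}")
    return int(match.group(1)) * UNIT_SECONDS[match.group(2)]


def timeouts_in_seconds(conf_text):
    """Collect every `*timeout` key from flink-conf.yaml text, in seconds."""
    result = {}
    for line in conf_text.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key.endswith("timeout"):
            result[key] = to_seconds(key, value)
    return result


# The timeout-related lines from the flink-conf.yaml in the ConfigMap.
CONF = """\
jobmanager.rpc.address: flink-jobmanager-new
akka.ask.timeout: 60s
web.timeout: 300000
heartbeat.timeout: 60000
"""

print(timeouts_in_seconds(CONF))
```

With these values, the RPC ask timeout and the heartbeat timeout are both 60 seconds, and the web timeout is 300 seconds.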


Create jobmanager-checkpoint-pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: jobmanager-checkpoint-pvc
      namespace: flink-ha
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 30Gi
      storageClassName: nfs

Create taskmanager-checkpoint-pvc.yaml
