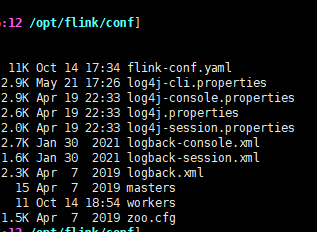

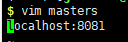

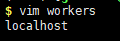

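Submitting a job in Flink standalone mode fails with an error, although debugging the same job in IDEA works fine. Flink is deployed on one node of the Hadoop cluster (the cluster has several nodes), and both master and worker under `flink/conf` are set to `localhost`.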

Running `$ ../bin/flink run -c ** *.jar` fails with the following error:

    2021-10-14 19:23:45
    java.io.IOException: Cannot instantiate file system for URI: hdfs://nameservice1/checkpoint/galileo
        at org.apache.flink.runtime.fs.hdfs.HadoopFsFactory.create(HadoopFsFactory.java:196)
        at org.apache.flink.core.fs.FileSystem.getUnguardedFileSystem(FileSystem.java:526)
        at org.apache.flink.core.fs.FileSystem.get(FileSystem.java:407)
        at org.apache.flink.core.fs.Path.getFileSystem(Path.java:274)
        at org.apache.flink.runtime.state.filesystem.FsCheckpointStorageAccess.<init>(FsCheckpointStorageAccess.java:64)
        at org.apache.flink.runtime.state.filesystem.FsStateBackend.createCheckpointStorage(FsStateBackend.java:527)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:341)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.<init>(StreamTask.java:308)
        at org.apache.flink.streaming.runtime.tasks.SourceStreamTask.<init>(SourceStreamTask.java:76)
        at org.apache.flink.streaming.runtime.tasks.SourceStreamTask.<init>(SourceStreamTask.java:72)
        at sun.reflect.GeneratedConstructorAccessor29.newInstance(Unknown Source)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.flink.runtime.taskmanager.Task.loadAndInstantiateInvokable(Task.java:1524)
        at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:730)
        at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: java.lang.NumberFormatException: For input string: "30s"
        at java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)
        at java.lang.Long.parseLong(Long.java:589)
        at java.lang.Long.parseLong(Long.java:631)
        at org.apache.hadoop.conf.Configuration.getLong(Configuration.java:1381)
        at org.apache.hadoop.hdfs.DFSClient$Conf.<init>(DFSClient.java:507)
        at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:647)
        at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:628)
        at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)
        at org.apache.flink.runtime.fs.hdfs.HadoopFsFactory.create(HadoopFsFactory.java:168)
        ... 16 more

Hadoop was installed through CDH. I went through the relevant Hadoop configuration and found no file that sets a parameter to `30s`. I have no idea where to start; any pointers would be much appreciated!
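The `Caused by` section shows the failure point: `Configuration.getLong` hands the raw string `"30s"` to `Long.parseLong`, which only accepts a bare integer. One likely explanation (an assumption; the thread does not confirm the versions involved) is a Hadoop version mismatch: newer Hadoop releases write some time settings with unit suffixes such as `30s` and read them with `Configuration.getTimeDuration`, while an older Hadoop client on Flink's classpath reads the same key with `getLong`. Such defaults can sit in `hdfs-default.xml` packaged inside a Hadoop jar rather than in a site file under the config directory, which would also explain why scanning the configuration found nothing. The Java sketch below uses only the JDK to contrast the two kinds of read; `parseDurationSeconds` is a hypothetical, simplified suffix parser, not Hadoop's implementation.

```java
import java.util.concurrent.TimeUnit;

/** Contrasts a plain long read with a suffix-aware duration read of "30s". */
class DurationParseDemo {

    /** Mimics a getLong-style read: the raw string must be a bare integer. */
    static long parsePlainLong(String raw) {
        return Long.parseLong(raw.trim()); // throws NumberFormatException for "30s"
    }

    /**
     * Hypothetical, simplified suffix-aware parser (NOT Hadoop's code).
     * Accepts "ms", "s", "m", "h", "d" suffixes; a bare number is treated as seconds.
     */
    static long parseDurationSeconds(String raw) {
        String v = raw.trim().toLowerCase();
        // Check "ms" first so "500ms" is not misread as minutes or seconds.
        if (v.endsWith("ms")) {
            long millis = Long.parseLong(v.substring(0, v.length() - 2));
            return TimeUnit.MILLISECONDS.toSeconds(millis);
        }
        char unit = v.charAt(v.length() - 1);
        if (Character.isDigit(unit)) {
            return Long.parseLong(v); // no suffix: assume seconds
        }
        long amount = Long.parseLong(v.substring(0, v.length() - 1));
        switch (unit) {
            case 's': return amount;
            case 'm': return TimeUnit.MINUTES.toSeconds(amount);
            case 'h': return TimeUnit.HOURS.toSeconds(amount);
            case 'd': return TimeUnit.DAYS.toSeconds(amount);
            default: throw new IllegalArgumentException("Unknown time unit in: " + raw);
        }
    }

    public static void main(String[] args) {
        String value = "30s"; // the value from the stack trace
        System.out.println("suffix-aware read: " + parseDurationSeconds(value) + " s");
        try {
            parsePlainLong(value);
        } catch (NumberFormatException e) {
            System.out.println("plain long read failed: " + e.getMessage());
        }
    }
}
```

Running `main` prints 30 seconds for the suffix-aware read, then the same `For input string: "30s"` message that appears in the trace for the plain read.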


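Copyright notice: The content of this article was contributed voluntarily by real-name registered Alibaba Cloud users, and the copyright belongs to the original authors. The Alibaba Cloud Developer Community does not own the copyright and assumes no related legal liability. For the detailed rules, see the "Alibaba Cloud Developer Community User Service Agreement" and the "Alibaba Cloud Developer Community Intellectual Property Protection Guidelines". If you find content in this community suspected of plagiarism, fill in the infringement complaint form to report it; once verified, the community will remove the infringing content immediately.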

I think I ran into this before. Try setting these in the Hadoop configuration (`hadoopConfig`) when connecting: `"dfs.namenode.decommission.interval": "30"`, `"dfs.client.datanode-restart.timeout": "30"`.
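The suggested workaround amounts to giving those keys bare numbers of seconds so that a `getLong`-style read can parse them. The sketch below models this with a plain `Map` standing in for `org.apache.hadoop.conf.Configuration` (an assumption made so the example runs without Hadoop jars); with Hadoop on the classpath the equivalent call would be `conf.set("dfs.client.datanode-restart.timeout", "30")`. The class and method names are hypothetical. Whether such overrides actually reach Flink's TaskManagers depends on which Hadoop configuration Flink loads, which the thread does not say.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical sketch of the suggested workaround. A plain Map stands in for
 * org.apache.hadoop.conf.Configuration so the example runs without Hadoop.
 */
class PlainNumberOverrides {

    /** The two overrides from the answer: bare numbers (seconds), no "s" suffix. */
    static Map<String, String> overrides() {
        Map<String, String> m = new HashMap<>();
        m.put("dfs.namenode.decommission.interval", "30");
        m.put("dfs.client.datanode-restart.timeout", "30");
        return m;
    }

    /** Returns a copy of conf with the overrides applied on top; conf itself is not modified. */
    static Map<String, String> applyOverrides(Map<String, String> conf) {
        Map<String, String> result = new HashMap<>(conf);
        result.putAll(overrides());
        return result;
    }

    /** Mimics a getLong-style read: throws NumberFormatException on values like "30s". */
    static long readLong(Map<String, String> conf, String key, long defaultValue) {
        String raw = conf.get(key);
        return raw == null ? defaultValue : Long.parseLong(raw.trim());
    }

    public static void main(String[] args) {
        // Hypothetical input: a suffixed value as a newer Hadoop default file might supply it.
        Map<String, String> conf = new HashMap<>();
        conf.put("dfs.client.datanode-restart.timeout", "30s");

        Map<String, String> patched = applyOverrides(conf);
        long timeout = readLong(patched, "dfs.client.datanode-restart.timeout", 30L);
        System.out.println("dfs.client.datanode-restart.timeout = " + timeout);
    }
}
```

Calling `readLong` on the unpatched map would throw the same `NumberFormatException` as in the trace; after `applyOverrides` the read returns 30.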