Canal Admin + Server subscribes to MySQL and sends the data to Kafka: Canal reports no errors, but Kafka never receives any data, and the MySQL replica is healthy

Canal version: v1.1.5

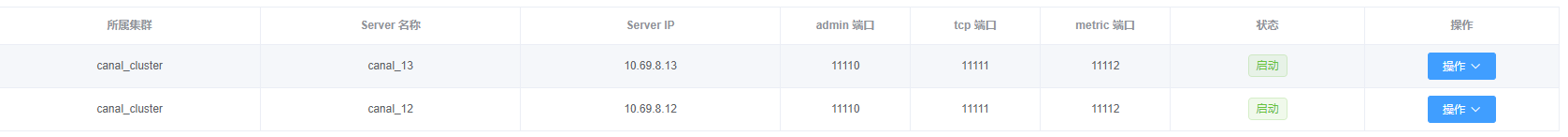

Cluster status:

Cluster configuration (canal.properties):

#################################################
######### common argument #############
#################################################

canal.ip =

canal.register.ip =
canal.port = 11111
canal.metrics.pull.port = 11112

#canal.admin.manager = 127.0.0.1:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

#canal.admin.register.auto = true
#canal.admin.register.cluster =
#canal.admin.register.name =

canal.zkServers = 10.69.8.12:2181,10.69.8.13:2181,10.69.8.14:2181

canal.zookeeper.flush.period = 1000
canal.withoutNetty = false

canal.serverMode = tcp

canal.file.data.dir = ${canal.conf.dir}
canal.file.flush.period = 1000

canal.instance.memory.buffer.size = 16384

canal.instance.memory.buffer.memunit = 1024

canal.instance.memory.batch.mode = MEMSIZE
canal.instance.memory.rawEntry = true

canal.instance.detecting.enable = false
#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()
canal.instance.detecting.sql = select 1
canal.instance.detecting.interval.time = 3
canal.instance.detecting.retry.threshold = 3
canal.instance.detecting.heartbeatHaEnable = false

canal.instance.transaction.size = 1024

canal.instance.fallbackIntervalInSeconds = 60

canal.instance.network.receiveBufferSize = 16384
canal.instance.network.sendBufferSize = 16384
canal.instance.network.soTimeout = 30

canal.instance.filter.druid.ddl = true
canal.instance.filter.query.dcl = false
canal.instance.filter.query.dml = false
canal.instance.filter.query.ddl = false
canal.instance.filter.table.error = false
canal.instance.filter.rows = false
canal.instance.filter.transaction.entry = false
canal.instance.filter.dml.insert = false
canal.instance.filter.dml.update = false
canal.instance.filter.dml.delete = false

canal.instance.binlog.format = ROW,STATEMENT,MIXED
canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

canal.instance.get.ddl.isolation = false

canal.instance.parser.parallel = true

#canal.instance.parser.parallelThreadSize = 16

canal.instance.parser.parallelBufferSize = 256

canal.instance.tsdb.enable = true
canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}
canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;
canal.instance.tsdb.dbUsername = canal
canal.instance.tsdb.dbPassword = canal

canal.instance.tsdb.snapshot.interval = 24

canal.instance.tsdb.snapshot.expire = 360

#################################################
######### destinations #############
#################################################
canal.destinations =

canal.conf.dir = ../conf

canal.auto.scan = true
canal.auto.scan.interval = 5

canal.auto.reset.latest.pos.mode = false

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml
#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = manager
canal.instance.global.lazy = false
canal.instance.global.manager.address = ${canal.admin.manager}
#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml
canal.instance.global.spring.xml = classpath:spring/file-instance.xml
#canal.instance.global.spring.xml = classpath:spring/default-instance.xml

##################################################
######### MQ Properties #############
##################################################

canal.mq.flatMessage = false
canal.mq.canalBatchSize = 50
canal.mq.canalGetTimeout = 100

canal.mq.accessChannel = local

canal.mq.database.hash = true
canal.mq.send.thread.size = 30
canal.mq.build.thread.size = 8

##################################################
######### Kafka #############
##################################################
kafka.bootstrap.servers = 10.69.8.12:9092,10.69.8.13:9092,10.69.8.14:9092
kafka.acks = all
kafka.compression.type = none
kafka.batch.size = 16384
kafka.linger.ms = 1
kafka.max.request.size = 1048576
kafka.buffer.memory = 33554432
kafka.max.in.flight.requests.per.connection = 1
kafka.retries = 0

kafka.kerberos.enable = false
kafka.kerberos.krb5.file = "../conf/kerberos/krb5.conf"
kafka.kerberos.jaas.file = "../conf/kerberos/jaas.conf"

Instance configuration (instance.properties):

#################################################

canal.instance.gtidon=false

canal.instance.master.address=10.69.8.12:3309
canal.instance.master.journal.name=
canal.instance.master.position=
canal.instance.master.timestamp=
canal.instance.master.gtid=

canal.instance.rds.accesskey=
canal.instance.rds.secretkey=
canal.instance.rds.instanceId=

canal.instance.tsdb.enable=true
#canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb
#canal.instance.tsdb.dbUsername=canal
#canal.instance.tsdb.dbPassword=canal

#canal.instance.standby.address =
#canal.instance.standby.journal.name =
#canal.instance.standby.position =
#canal.instance.standby.timestamp =
#canal.instance.standby.gtid=

canal.instance.dbUsername=root
canal.instance.dbPassword=xjVRZi40GMt6TNFO
canal.instance.connectionCharset = UTF-8

canal.instance.enableDruid=false
#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

canal.instance.filter.regex=chirp.dynamic_tb,chirp.post_tb

canal.instance.filter.black.regex=

#canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch

#canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch

canal.mq.topic=chirp_default

canal.mq.dynamicTopic=chirp_dynamic:chirp.dynamic_tb,chirp_post:chirp.post_tb
canal.mq.partitionsNum=10
canal.mq.partitionHash=.\..:id
canal.mq.dynamicTopicPartitionNum=chirp.dynamic_tb:10,chirp.post_tb:10
#################################################

Original question by GitHub user jaceding


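The problem is that you never told Canal to use Kafka: set canal.serverMode = kafka. With canal.serverMode = tcp, Canal only serves data to TCP clients and does not write anything to Kafka, which is why there is no error.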
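A minimal sketch of the relevant part of the cluster canal.properties after the fix, assuming everything else stays as posted in the question. Only `canal.serverMode` changes; the Kafka keys are copied from the question as-is:

```properties
# Deliver parsed binlog events to Kafka instead of serving TCP clients
canal.serverMode = kafka

# Kafka producer settings (unchanged from the question)
kafka.bootstrap.servers = 10.69.8.12:9092,10.69.8.13:9092,10.69.8.14:9092
kafka.acks = all
```

Since this cluster runs with `canal.instance.global.mode = manager`, the change presumably has to be made in the cluster configuration edited through Canal Admin rather than in a local file.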

Your filter condition also has a problem; check the official documentation, which describes the DBname.tableName format.
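The Canal docs describe `canal.instance.filter.regex` as a comma-separated list of Perl regular expressions in `schema.table` form, with the escape written as a double backslash. The question's filter already uses `schema.table`, so the difference is presumably the escaping: an unescaped `.` matches any character, which makes the filter looser than intended. A sketch of the same two tables written in the documented style:

```properties
# Capture only chirp.dynamic_tb and chirp.post_tb; "\\." matches a literal dot
canal.instance.filter.regex=chirp\\.dynamic_tb,chirp\\.post_tb
```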

Original answer by GitHub user mrchicn