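Outline

1. Startup process of the standalone zk server

(1) Pre-startup phase

(2) Initialization phase

2. Startup process of the clustered zk server

(1) Pre-startup phase

(2) Initialization phase

(3) Leader election phase

(4) Leader and Follower startup phase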

1. Startup process of the standalone zk server

(1) Pre-startup phase

(2) Initialization phase

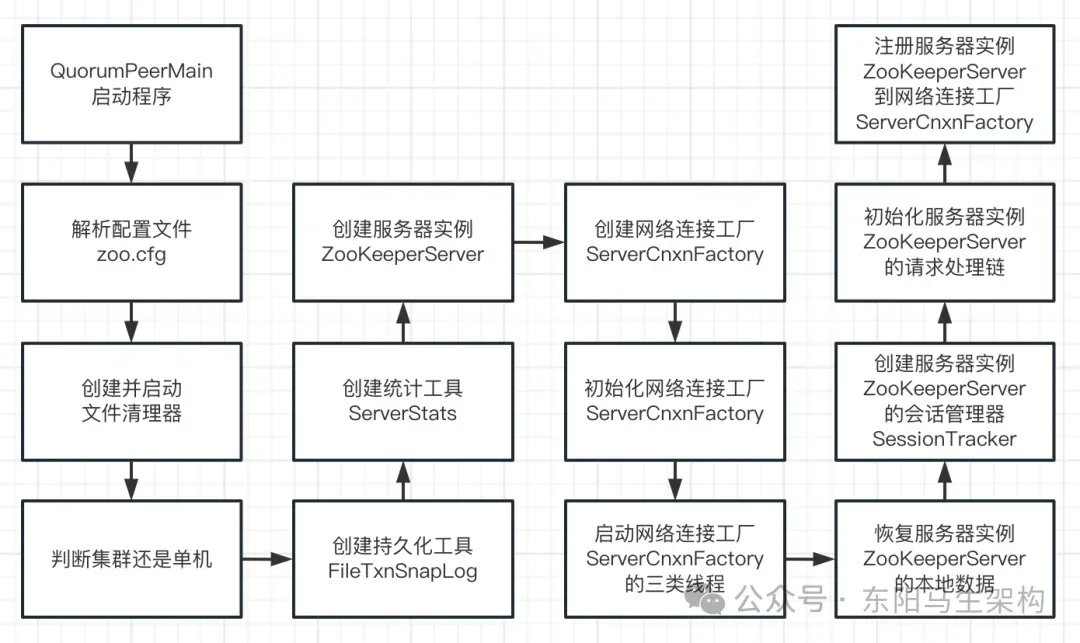

单机版zk服务端的启动,主要分为两个阶段:预启动阶段和初始化阶段,其启动流程图如下:

接下来介绍zk服务端的预启动阶段(启动管理)与初始化阶段的具体流程,也就是单机版的zk服务端是如何从初始化到对外提供服务的。

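(1) Pre-startup phase

Before the zk server initializes, it first parses and loads the configuration file and related information. The main steps of the pre-startup phase are:

I. Start the QuorumPeerMain entry program

II. Parse the zoo.cfg configuration file

III. Create and start the historical file cleaner

IV. Determine from the configuration whether to run in cluster mode or standalone mode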

```java
public class QuorumPeerMain {
    protected QuorumPeer quorumPeer;
    ...
    // 1. Program entry point
    public static void main(String[] args) {
        QuorumPeerMain main = new QuorumPeerMain();
        try {
            // Start the program
            main.initializeAndRun(args);
        } catch (IllegalArgumentException e) {
            ...
        }
        LOG.info("Exiting normally");
        System.exit(0);
    }

    protected void initializeAndRun(String[] args) {
        QuorumPeerConfig config = new QuorumPeerConfig();
        if (args.length == 1) {
            // 2. Parse the configuration file
            config.parse(args[0]);
        }
        // 3. Create and start the historical file cleaner
        DatadirCleanupManager purgeMgr = new DatadirCleanupManager(config.getDataDir(),
                config.getDataLogDir(), config.getSnapRetainCount(), config.getPurgeInterval());
        purgeMgr.start();
        // 4. Decide between cluster mode and standalone mode based on the configuration
        if (args.length == 1 && config.isDistributed()) {
            // Cluster mode
            runFromConfig(config);
        } else {
            // Standalone mode
            ZooKeeperServerMain.main(args);
        }
    }
    ...
}

public class ZooKeeperServerMain {
    private ServerCnxnFactory cnxnFactory;
    ...
    public static void main(String[] args) {
        ZooKeeperServerMain main = new ZooKeeperServerMain();
        try {
            // Start the program
            main.initializeAndRun(args);
        } catch (IllegalArgumentException e) {
            ...
        }
        LOG.info("Exiting normally");
        System.exit(0);
    }

    protected void initializeAndRun(String[] args) {
        ...
        ServerConfig config = new ServerConfig();
        // 2. Parse the configuration file
        if (args.length == 1) {
            config.parse(args[0]);
        } else {
            config.parse(args);
        }
        runFromConfig(config);
    }

    // The initialization phase starts here
    public void runFromConfig(ServerConfig config) {
        ...
        txnLog = new FileTxnSnapLog(config.dataLogDir, config.dataDir);
        final ZooKeeperServer zkServer = new ZooKeeperServer(txnLog, config.tickTime,
                config.minSessionTimeout, config.maxSessionTimeout, null);
        ...
    }
    ...
}

public class DatadirCleanupManager {
    // autopurge.snapRetainCount and autopurge.purgeInterval in zoo.cfg drive periodic purging of snapshot files
    private final File snapDir;    // snapshot directory
    private final File dataLogDir; // transaction log directory
    // autopurge.snapRetainCount in zoo.cfg: number of files to keep, 3 by default
    private final int snapRetainCount; // number of files to keep
    // autopurge.purgeInterval in zoo.cfg: purge interval in hours; the default 0 disables automatic purging
    private final int purgeInterval;   // purge interval
    private Timer timer;
    ...
}
```

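I. Start the program

QuorumPeerMain is the entry point of the zk service, analogous to a Java main function. When we start zk with the zkServer.sh script, this is the class that runs. QuorumPeerMain's main() method calls its initializeAndRun() method to start the program.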

II. Parse the zoo.cfg configuration file

QuorumPeerMain's main() method calls its initializeAndRun() method, and that method parses the zoo.cfg configuration file.

ZooKeeperServerMain's initializeAndRun() method also parses zoo.cfg.

zoo.cfg holds zk's basic runtime parameters, such as tickTime and dataDir.

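III. Create and start the historical file cleaner

The file cleaner matters a great deal in day-to-day operation. Under heavy traffic, zk produces large amounts of data. If the disk holds too much data or runs out of space, the zk server may stop working properly. zk therefore uses the DatadirCleanupManager class to clean up historical files.

As the code above shows, DatadirCleanupManager has five fields. Once autopurge.purgeInterval is set to a positive value, it periodically purges transaction log and snapshot files. This automatic cleanup of historical data files helps keep zk from wasting disk space.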
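The retention rule behind snapRetainCount can be illustrated with a small sketch. It assumes snapshot files are named `snapshot.<zxid in hex>` and shows only which snapshots would be deleted, plus a Timer-based schedule that stays off when the interval is 0. `PurgeSketch` and its methods are hypothetical names. This is not zk's actual purge code, which also deals with transaction log files.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Timer;
import java.util.TimerTask;

// Hypothetical helper illustrating the autopurge idea; not zk's real purge implementation.
class PurgeSketch {
    // Parse the zxid from a name such as "snapshot.1a2b" (hexadecimal suffix).
    static long zxidOf(String name) {
        return Long.parseLong(name.substring(name.indexOf('.') + 1), 16);
    }

    // Return the snapshot names to delete, keeping the newest retainCount by zxid.
    static List<String> filesToPurge(List<String> snapshots, int retainCount) {
        List<String> sorted = new ArrayList<>(snapshots);
        sorted.sort(Comparator.comparingLong(PurgeSketch::zxidOf)); // oldest first
        int toDelete = Math.max(0, sorted.size() - retainCount);
        return new ArrayList<>(sorted.subList(0, toDelete));
    }

    // Schedule a periodic purge; purgeIntervalHours <= 0 means automatic purging is disabled.
    static Timer schedule(Runnable purge, int purgeIntervalHours) {
        if (purgeIntervalHours <= 0) {
            return null;
        }
        Timer timer = new Timer("PurgeTask", true); // daemon timer thread
        long periodMs = purgeIntervalHours * 60L * 60L * 1000L;
        timer.scheduleAtFixedRate(new TimerTask() {
            @Override
            public void run() {
                purge.run();
            }
        }, 0, periodMs);
        return timer;
    }
}
```

For example, with the default retain count of 3 and four snapshots, only the one with the smallest zxid would be returned for deletion.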

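IV. Determine cluster mode or standalone mode

zk decides whether to run standalone based on the list of cluster server addresses parsed from zoo.cfg. In standalone mode, ZooKeeperServerMain's main() method is called to start the server. In cluster mode, QuorumPeerMain's runFromConfig() method is called instead.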
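The decision can be approximated as follows. This simplified sketch treats the configuration as distributed when zoo.cfg lists more than one `server.N` entry. `ModeSketch` is a hypothetical name, and the returned strings only label which entry point would be used. The real QuorumPeerConfig.isDistributed() works from the parsed quorum configuration and also considers settings such as standaloneEnabled, so treat this as an approximation.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical, simplified version of the standalone-vs-cluster decision.
class ModeSketch {
    static boolean isDistributed(String zooCfg) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(zooCfg)); // zoo.cfg is key=value text
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        long servers = props.stringPropertyNames().stream()
                .filter(k -> k.startsWith("server."))
                .count();
        // More than one server.N entry: treat this node as an ensemble member
        return servers > 1;
    }

    // Mirrors the shape of the check in QuorumPeerMain.initializeAndRun()
    static String chooseEntry(String[] args, String zooCfg) {
        if (args.length == 1 && isDistributed(zooCfg)) {
            return "QuorumPeerMain.runFromConfig";
        }
        return "ZooKeeperServerMain.main";
    }
}
```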

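(2) Initialization phase

The initialization phase creates and initializes the server instance from the configuration parsed during pre-startup. Its main steps are:

I. Create the data persistence utility instance FileTxnSnapLog

II. Create the server statistics utility instance ServerStats

III. Create the standalone server instance from these two utility instances

IV. Create the network connection factory instance

V. Initialize the network connection factory instance

VI. Start the network connection factory's threads

VII. Restore the standalone server instance's local data

VIII. Create and start the server instance's session manager

IX. Initialize the standalone server instance's request processing chain

X. Register the standalone server instance with the network connection factory instance

Standalone server instance: ZooKeeperServer

Network connection factory instance: ServerCnxnFactory

Session manager: SessionTracker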

```java
public class ZooKeeperServerMain {
    private ServerCnxnFactory cnxnFactory;
    ...
    // The initialization phase starts here
    public void runFromConfig(ServerConfig config) {
        ...
        // 1. Create zk's data manager: an instance of the persistence class FileTxnSnapLog
        txnLog = new FileTxnSnapLog(config.dataLogDir, config.dataDir);
        // 2. Create zk's runtime statistics: a ServerStats instance (created inside the ZooKeeperServer constructor)
        // 3. Create the server instance ZooKeeperServer
        final ZooKeeperServer zkServer = new ZooKeeperServer(txnLog, config.tickTime,
                config.minSessionTimeout, config.maxSessionTimeout, null);
        txnLog.setServerStats(zkServer.serverStats());
        ...
        // 4. Create the server-side network connection factory ServerCnxnFactory
        cnxnFactory = ServerCnxnFactory.createFactory();
        // 5. Initialize the factory
        cnxnFactory.configure(config.getClientPortAddress(), config.getMaxClientCnxns(), false);
        // 6-10. Start the factory's threads, restore data, start the session tracker,
        //       set up the request processing chain and register the server
        cnxnFactory.startup(zkServer);
        ...
    }
    ...
}

public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    private final ServerStats serverStats;
    private FileTxnSnapLog txnLogFactory = null;
    private ZKDatabase zkDb;
    protected int tickTime = DEFAULT_TICK_TIME;
    private final ZooKeeperServerListener listener;
    ...
    public ZooKeeperServer(FileTxnSnapLog txnLogFactory, int tickTime,
            int minSessionTimeout, int maxSessionTimeout, ZKDatabase zkDb) {
        // 2. Create the server statistics utility ServerStats
        serverStats = new ServerStats(this);
        this.txnLogFactory = txnLogFactory;
        this.txnLogFactory.setServerStats(this.serverStats);
        this.zkDb = zkDb;
        this.tickTime = tickTime;
        setMinSessionTimeout(minSessionTimeout);
        setMaxSessionTimeout(maxSessionTimeout);
        listener = new ZooKeeperServerListenerImpl(this);
    }
    ...
}
```

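I. Create the data persistence utility instance FileTxnSnapLog

FileTxnSnapLog persists the zk server's in-memory data. It writes that data to the transaction log files and snapshot files in the directories given in the configuration file.

So when ZooKeeperServerMain's runFromConfig() method starts the zk server, it first calls "new FileTxnSnapLog()" to create a FileTxnSnapLog instance. It passes in the dataDir (snapshot directory) and dataLogDir (transaction log directory) from zoo.cfg.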

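II. Create the server statistics utility instance ServerStats

ServerStats collects runtime statistics of the zk server. The main figures are:

- the number of response packets sent to clients
- the number of request packets received from clients
- request processing latency
- the number of client requests processed

During ZooKeeperServerMain.runFromConfig(), the ServerStats instance is the first thing the ZooKeeperServer constructor creates.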
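The statistics listed above boil down to a few counters and a latency tracker. The sketch below assumes AtomicLong packet counters and a synchronized min/max/average latency record. `StatsSketch` and its method names are hypothetical and are not meant to reproduce the fields of zk's actual ServerStats.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical counters modeled on the kinds of statistics ServerStats tracks.
class StatsSketch {
    private final AtomicLong packetsSent = new AtomicLong();
    private final AtomicLong packetsReceived = new AtomicLong();
    private long minLatency = Long.MAX_VALUE;
    private long maxLatency = 0;
    private long totalLatency = 0;
    private long count = 0; // number of processed requests

    void incrementPacketsSent() { packetsSent.incrementAndGet(); }
    void incrementPacketsReceived() { packetsReceived.incrementAndGet(); }
    long getPacketsSent() { return packetsSent.get(); }
    long getPacketsReceived() { return packetsReceived.get(); }

    // Record how long one request took, given its creation time and the current time (ms).
    synchronized void updateLatency(long requestCreateTimeMs, long nowMs) {
        long latency = nowMs - requestCreateTimeMs;
        totalLatency += latency;
        count++;
        minLatency = Math.min(minLatency, latency);
        maxLatency = Math.max(maxLatency, latency);
    }

    synchronized long getMinLatency() { return count == 0 ? 0 : minLatency; }
    synchronized long getMaxLatency() { return maxLatency; }
    synchronized long getAvgLatency() { return count == 0 ? 0 : totalLatency / count; }
    synchronized long getRequestCount() { return count; }
}
```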

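III. Create the standalone server instance from these two utility instances

ZooKeeperServer is the core entity class of the standalone server. In ZooKeeperServerMain.runFromConfig(), the FileTxnSnapLog instance (zk's data manager) is created first. After that, "new ZooKeeperServer()" creates the standalone server instance.

The tickTime and session timeouts parsed from zoo.cfg are passed in when the server instance is created. Only after the ZooKeeperServer instance exists does further initialization happen. This covers components such as the network connection factory, the in-memory database and the request processors.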

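IV. Create the network connection factory instance

Network communication between zk clients and servers is ultimately built on Java I/O. zk originally used only its own networking implementation built on Java NIO. It later added support for the Netty framework to cover different usage scenarios.

In ZooKeeperServerMain.runFromConfig(), after the ZooKeeperServer instance has been created, ServerCnxnFactory.createFactory() creates the server-side network connection factory instance.

createFactory() first reads a configuration value to decide between NIO and Netty. It then instantiates the chosen network connection factory via reflection.

The zookeeper.serverCnxnFactory system property selects the implementation used to build the ServerCnxnFactory: zk's own NIO implementation or Netty. If the property is not set, NIOServerCnxnFactory is used.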

```java
public abstract class ServerCnxnFactory {
    ...
    static public ServerCnxnFactory createFactory() throws IOException {
        // Read the zookeeper.serverCnxnFactory system property
        String serverCnxnFactoryName = System.getProperty(ZOOKEEPER_SERVER_CNXN_FACTORY);
        if (serverCnxnFactoryName == null) {
            // Default to zk's own NIO implementation
            serverCnxnFactoryName = NIOServerCnxnFactory.class.getName();
        }
        // Instantiate the chosen factory class via reflection
        ServerCnxnFactory serverCnxnFactory = (ServerCnxnFactory) Class.forName(serverCnxnFactoryName)
                .getDeclaredConstructor().newInstance();
        return serverCnxnFactory;
    }
    ...
}
```
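The property-plus-reflection pattern in createFactory() can be tried out in isolation. In this sketch `CnxnFactorySketch`, `NioFactorySketch` and `NettyFactorySketch` are hypothetical stand-ins for ServerCnxnFactory and its two implementations. The property name is passed in as a parameter so the example does not depend on zk's constant. Unlike the excerpt above, the sketch wraps reflection failures in one unchecked exception.

```java
// Hypothetical stand-ins for ServerCnxnFactory and its NIO/Netty implementations.
abstract class CnxnFactorySketch {
    abstract String kind();

    static CnxnFactorySketch createFactory(String propertyName) {
        String className = System.getProperty(propertyName);
        if (className == null) {
            className = NioFactorySketch.class.getName(); // default: the NIO implementation
        }
        try {
            // Load the class by name and call its no-arg constructor
            return (CnxnFactorySketch) Class.forName(className)
                    .getDeclaredConstructor()
                    .newInstance();
        } catch (ReflectiveOperationException | ClassCastException e) {
            throw new IllegalStateException("Couldn't instantiate " + className, e);
        }
    }
}

class NioFactorySketch extends CnxnFactorySketch {
    String kind() { return "nio"; }
}

class NettyFactorySketch extends CnxnFactorySketch {
    String kind() { return "netty"; }
}
```

In a real deployment the equivalent switch is a JVM flag such as `-Dzookeeper.serverCnxnFactory=org.apache.zookeeper.server.NettyServerCnxnFactory`.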

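V. Initialize the network connection factory instance

In ZooKeeperServerMain.runFromConfig(), after the ServerCnxnFactory instance has been created, its configure() method is called to initialize it.

Take NIOServerCnxnFactory.configure() as an example. This method mainly binds the NIO server socket and creates, but does not yet start, three kinds of threads:

1) An AcceptThread that handles client connections

2) A group of SelectorThreads that handle client requests

3) A ConnectionExpirerThread that handles expired connections

Once ServerCnxnFactory has been initialized, the NIO server's port is already open and clients can reach the client port (2181 by default). However, the zk server still cannot process client requests properly at this point.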
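The default thread counts in configure() follow simple arithmetic that is easy to misread. The code shown next computes sqrt(numCores / 2) selector threads, floored at 1, and 2 × numCores worker threads. The sketch reproduces those formulas. `NioThreadDefaults` is a hypothetical name, and the two property names are assumed to be the string values of the ZOOKEEPER_NIO_NUM_SELECTOR_THREADS and ZOOKEEPER_NIO_NUM_WORKER_THREADS constants.

```java
// Reproduces the default thread-count arithmetic used in NIOServerCnxnFactory.configure().
class NioThreadDefaults {
    // Selector threads: sqrt(numCores / 2), truncated, but at least 1
    static int defaultSelectorThreads(int numCores) {
        return Math.max((int) Math.sqrt((float) numCores / 2), 1);
    }

    // Worker threads: twice the number of cores
    static int defaultWorkerThreads(int numCores) {
        return 2 * numCores;
    }

    // Integer.getInteger returns the system property's int value, or the default if unset
    static int selectorThreads(int numCores) {
        return Integer.getInteger("zookeeper.nio.numSelectorThreads", defaultSelectorThreads(numCores));
    }

    static int workerThreads(int numCores) {
        return Integer.getInteger("zookeeper.nio.numWorkerThreads", defaultWorkerThreads(numCores));
    }
}
```

For example, an 8-core machine gets 2 selector threads and 16 worker threads, and a 32-core machine gets 4 selector threads.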

```java
public class NIOServerCnxnFactory extends ServerCnxnFactory {
    // Maximum number of client connections
    protected int maxClientCnxns = 60;
    // Thread that handles expired connections
    private ConnectionExpirerThread expirerThread;
    // Thread that accepts client connections
    private AcceptThread acceptThread;
    // Threads that handle client requests
    private final Set<SelectorThread> selectorThreads = new HashSet<SelectorThread>();
    // Timeout for connections that have not yet established a session
    int sessionlessCnxnTimeout;
    // Connection expiry queue
    private ExpiryQueue<NIOServerCnxn> cnxnExpiryQueue;
    // Number of selector threads; defaults to sqrt(numCores / 2), at least 1
    private int numSelectorThreads;
    // Number of worker threads
    private int numWorkerThreads;
    ...
    public void configure(InetSocketAddress addr, int maxcc, boolean secure) throws IOException {
        ...
        maxClientCnxns = maxcc;
        sessionlessCnxnTimeout = Integer.getInteger(ZOOKEEPER_NIO_SESSIONLESS_CNXN_TIMEOUT, 10000);
        // Connection expiry queue
        cnxnExpiryQueue = new ExpiryQueue<NIOServerCnxn>(sessionlessCnxnTimeout);
        // Create a ConnectionExpirerThread that automatically closes expired connections
        expirerThread = new ConnectionExpirerThread();
        int numCores = Runtime.getRuntime().availableProcessors();
        numSelectorThreads = Integer.getInteger(ZOOKEEPER_NIO_NUM_SELECTOR_THREADS,
                Math.max((int) Math.sqrt((float) numCores / 2), 1));
        numWorkerThreads = Integer.getInteger(ZOOKEEPER_NIO_NUM_WORKER_THREADS, 2 * numCores);
        ...
        // Create a group of SelectorThreads
        for (int i = 0; i < numSelectorThreads; ++i) {
            selectorThreads.add(new SelectorThread(i));
        }
        // Open the ServerSocketChannel
        this.ss = ServerSocketChannel.open();
        ss.socket().setReuseAddress(true);
        // Bind the port, bringing up the NIO server
        ss.socket().bind(addr);
        ss.configureBlocking(false);
        // Create an AcceptThread
        acceptThread = new AcceptThread(ss, addr, selectorThreads);
    }
    ...
}
```

VI. Start the network connection factory's threads

In ZooKeeperServerMain.runFromConfig(), configure() first initializes the ServerCnxnFactory instance. Then ServerCnxnFactory's startup() method is called to start the factory's threads.

Note: expired connections are handled by a single ConnectionExpirerThread.

```java
public class NIOServerCnxnFactory extends ServerCnxnFactory {
    private ExpiryQueue<NIOServerCnxn> cnxnExpiryQueue; // connection expiry queue
    ...
    public void startup(ZooKeeperServer zks, boolean startServer) {
        // 6. Start the various threads
        start();
        // 10. Register the zk server instance with this factory
        setZooKeeperServer(zks);
        if (startServer) {
            // 7. Restore local data
            zks.startdata();
            // 8. Create and start the session manager SessionTracker
            // 9. Initialize zk's request processing chain
            zks.startup();
        }
    }

    public void start() {
        stopped = false;
        if (workerPool == null) {
            workerPool = new WorkerService("NIOWorker", numWorkerThreads, false);
        }
        for (SelectorThread thread : selectorThreads) {
            if (thread.getState() == Thread.State.NEW) {
                thread.start();
            }
        }
        if (acceptThread.getState() == Thread.State.NEW) {
            acceptThread.start();
        }
        if (expirerThread.getState() == Thread.State.NEW) {
            expirerThread.start();
        }
    }
    ...
    // Handles expired connections: a timeout-checking thread that closes connections once they expire
    private class ConnectionExpirerThread extends ZooKeeperThread {
        ConnectionExpirerThread() {
            super("ConnnectionExpirer");
        }

        @Override
        public void run() {
            while (!stopped) {
                // Uses a bucketing strategy
                long waitTime = cnxnExpiryQueue.getWaitTime();
                if (waitTime > 0) {
                    Thread.sleep(waitTime);
                    continue;
                }
                for (NIOServerCnxn conn : cnxnExpiryQueue.poll()) {
                    conn.close();
                }
            }
        }
    }

    // Handles OP_ACCEPT events from clients that want to connect
    private class AcceptThread extends AbstractSelectThread {
        private final ServerSocketChannel acceptSocket;
        private final SelectionKey acceptKey;
        private final Collection<SelectorThread> selectorThreads;
        private Iterator<SelectorThread> selectorIterator;
        ...
        public AcceptThread(ServerSocketChannel ss, InetSocketAddress addr,
                Set<SelectorThread> selectorThreads) throws IOException {
            super("NIOServerCxnFactory.AcceptThread:" + addr);
            this.acceptSocket = ss;
            this.acceptKey = acceptSocket.register(selector, SelectionKey.OP_ACCEPT);
            this.selectorThreads = Collections.unmodifiableList(
                    new ArrayList<SelectorThread>(selectorThreads));
            selectorIterator = this.selectorThreads.iterator();
        }

        @Override
        public void run() {
            ...
            while (!stopped && !acceptSocket.socket().isClosed()) {
                select();
            }
            ...
        }

        private void select() {
            selector.select();
            Iterator<SelectionKey> selectedKeys = selector.selectedKeys().iterator();
            while (!stopped && selectedKeys.hasNext()) {
                SelectionKey key = selectedKeys.next();
                selectedKeys.remove();
                if (!key.isValid()) {
                    continue;
                }
                if (key.isAcceptable()) {
                    if (!doAccept()) {
                        // Accepting failed: stop accepting for 10 ms
                        pauseAccept(10);
                    }
                } else {
                    LOG.warn("Unexpected ops in accept select " + key.readyOps());
                }
            }
        }

        private void pauseAccept(long millisecs) {
            acceptKey.interestOps(0);
            selector.select(millisecs);
            acceptKey.interestOps(SelectionKey.OP_ACCEPT);
        }

        private boolean doAccept() {
            boolean accepted = false;
            SocketChannel sc = null;
            sc = acceptSocket.accept();
            accepted = true;
            InetAddress ia = sc.socket().getInetAddress();
            int cnxncount = getClientCnxnCount(ia);
            ...
            sc.configureBlocking(false);
            // Round-robin assign this connection to a selector thread
            if (!selectorIterator.hasNext()) {
                selectorIterator = selectorThreads.iterator();
            }
            SelectorThread selectorThread = selectorIterator.next();
            ...
            acceptErrorLogger.flush();
            return accepted;
        }
    }

    // Handles client connections that the AcceptThread has already accepted
    class SelectorThread extends AbstractSelectThread {
        private final int id;
        private final Queue<SocketChannel> acceptedQueue;
        private final Queue<SelectionKey> updateQueue;

        public SelectorThread(int id) throws IOException {
            super("NIOServerCxnFactory.SelectorThread-" + id);
            this.id = id;
            acceptedQueue = new LinkedBlockingQueue<SocketChannel>();
            updateQueue = new LinkedBlockingQueue<SelectionKey>();
        }

        public boolean addAcceptedConnection(SocketChannel accepted) {
            if (stopped || !acceptedQueue.offer(accepted)) {
                return false;
            }
            wakeupSelector();
            return true;
        }
        ...
        @Override
        public void run() {
            while (!stopped) {
                select();
                processAcceptedConnections();
                processInterestOpsUpdateRequests();
            }
            ...
        }

        private void select() {
            selector.select();
            Set<SelectionKey> selected = selector.selectedKeys();
            ArrayList<SelectionKey> selectedList = new ArrayList<SelectionKey>(selected);
            Collections.shuffle(selectedList);
            Iterator<SelectionKey> selectedKeys = selectedList.iterator();
            while (!stopped && selectedKeys.hasNext()) {
                SelectionKey key = selectedKeys.next();
                selected.remove(key);
                ...
            }
        }

        private void processAcceptedConnections() {
            SocketChannel accepted;
            while (!stopped && (accepted = acceptedQueue.poll()) != null) {
                SelectionKey key = null;
                key = accepted.register(selector, SelectionKey.OP_READ);
                NIOServerCnxn cnxn = createConnection(accepted, key, this);
                key.attach(cnxn);
                addCnxn(cnxn);
            }
        }
        ...
    }
    ...
    protected NIOServerCnxn createConnection(SocketChannel sock, SelectionKey sk,
            SelectorThread selectorThread) {
        return new NIOServerCnxn(zkServer, sock, sk, this, selectorThread);
    }

    private void addCnxn(NIOServerCnxn cnxn) throws IOException {
        ...
        // Activate the connection
        touchCnxn(cnxn);
    }

    public void touchCnxn(NIOServerCnxn cnxn) {
        // cnxnExpiryQueue is what manages connection expiry
        cnxnExpiryQueue.update(cnxn, cnxn.getSessionTimeout());
    }
}
```
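Both ConnectionExpirerThread and SessionTrackerImpl rely on ExpiryQueue's bucketing strategy. Each deadline is rounded up to the next multiple of a fixed interval, so many connections share one bucket, and the expirer thread only wakes once per interval to close a whole bucket. The sketch below is simplified and single-threaded, and it takes "now" as a parameter so it can be tested deterministically. `ExpiryQueueSketch` is a hypothetical name. The real ExpiryQueue is concurrent and reads the clock itself.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Simplified, single-threaded sketch of bucketed expiry; not zk's concurrent ExpiryQueue.
class ExpiryQueueSketch<E> {
    private final long expirationInterval;
    private final Map<E, Long> elemMap = new HashMap<>();        // element -> bucket time
    private final Map<Long, Set<E>> expiryMap = new HashMap<>(); // bucket time -> elements
    private long nextExpirationTime;

    ExpiryQueueSketch(long expirationInterval, long nowMs) {
        this.expirationInterval = expirationInterval;
        this.nextExpirationTime = roundToNextInterval(nowMs);
    }

    // Round up to the next bucket boundary so that many elements share one bucket.
    long roundToNextInterval(long time) {
        return (time / expirationInterval + 1) * expirationInterval;
    }

    // (Re)schedule an element to expire timeoutMs from now, moving it between buckets if needed.
    void update(E elem, long timeoutMs, long nowMs) {
        long newBucket = roundToNextInterval(nowMs + timeoutMs);
        Long oldBucket = elemMap.put(elem, newBucket);
        if (oldBucket != null && oldBucket != newBucket) {
            Set<E> old = expiryMap.get(oldBucket);
            if (old != null) {
                old.remove(elem);
            }
        }
        expiryMap.computeIfAbsent(newBucket, k -> new HashSet<>()).add(elem);
    }

    // How long the expirer thread should sleep before the next bucket is due.
    long getWaitTime(long nowMs) {
        long wait = nextExpirationTime - nowMs;
        return wait > 0 ? wait : 0;
    }

    // Remove and return the whole bucket that is due, then advance to the next bucket.
    Set<E> poll(long nowMs) {
        if (nowMs < nextExpirationTime) {
            return Collections.emptySet();
        }
        long due = nextExpirationTime;
        nextExpirationTime += expirationInterval;
        Set<E> set = expiryMap.remove(due);
        if (set == null) {
            return Collections.emptySet();
        }
        for (E e : set) {
            elemMap.remove(e);
        }
        return set;
    }
}
```

Touching a connection (as touchCnxn() does) just moves it to a later bucket. A connection that stays active therefore never reaches the front of the queue.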

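VII. Restore the standalone server instance's local data

Starting the zk server requires restoring data from the local snapshot files and transaction log files. This happens inside ServerCnxnFactory.startup(). Once the factory's threads have been started, the ZooKeeperServer instance's startdata() method is called to restore local data.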

```java
public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    private ZKDatabase zkDb;
    private FileTxnSnapLog txnLogFactory = null;
    ...
    // 7. Restore local data
    public void startdata() throws IOException, InterruptedException {
        if (zkDb == null) {
            zkDb = new ZKDatabase(this.txnLogFactory);
        }
        if (!zkDb.isInitialized()) {
            loadData();
        }
    }

    public void loadData() throws IOException, InterruptedException {
        if (zkDb.isInitialized()) {
            setZxid(zkDb.getDataTreeLastProcessedZxid());
        } else {
            // Load the snapshot and replay the transaction log; returns the last processed zxid
            setZxid(zkDb.loadDataBase());
        }
        // Clean up dead sessions
        LinkedList<Long> deadSessions = new LinkedList<Long>();
        for (Long session : zkDb.getSessions()) {
            if (zkDb.getSessionWithTimeOuts().get(session) == null) {
                deadSessions.add(session);
            }
        }
        for (long session : deadSessions) {
            killSession(session, zkDb.getDataTreeLastProcessedZxid());
        }
        // Make a clean snapshot
        takeSnapshot();
    }

    public void takeSnapshot() {
        try {
            txnLogFactory.save(zkDb.getDataTree(), zkDb.getSessionWithTimeOuts());
        } catch (IOException e) {
            LOG.error("Severe unrecoverable error, exiting", e);
            System.exit(10);
        }
    }
    ...
}
```
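The idea behind loadDataBase() is to start from the latest snapshot, then replay only the logged transactions newer than the snapshot's zxid. The resulting zxid is what setZxid() receives. The sketch models the data tree as a map, each transaction as a simple "set key to value" operation, and the log as sorted by zxid. `RecoverySketch` and `Txn` are hypothetical names. This is not zk's FileTxnSnapLog.restore().

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of "restore snapshot, then replay newer transactions".
class RecoverySketch {
    // One logged transaction: set key to value, stamped with a zxid.
    static final class Txn {
        final long zxid;
        final String key;
        final String value;

        Txn(long zxid, String key, String value) {
            this.zxid = zxid;
            this.key = key;
            this.value = value;
        }
    }

    final Map<String, String> dataTree = new HashMap<>();
    long lastProcessedZxid;

    // Assumes txnLog is sorted by zxid.
    long restore(Map<String, String> snapshot, long snapshotZxid, List<Txn> txnLog) {
        dataTree.clear();
        dataTree.putAll(snapshot);
        lastProcessedZxid = snapshotZxid;
        for (Txn t : txnLog) {
            if (t.zxid <= lastProcessedZxid) {
                continue; // already reflected in the snapshot
            }
            dataTree.put(t.key, t.value);
            lastProcessedZxid = t.zxid;
        }
        return lastProcessedZxid; // the value that would be passed to setZxid()
    }
}
```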

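VIII. Create and start the server instance's session manager

The session manager SessionTracker handles session management on the zk server. After startdata() has restored local data, ZooKeeperServer's startup() method is called. startup() creates and starts the session manager by calling createSessionTracker() and startSessionTracker(). SessionTracker, implemented by SessionTrackerImpl, is itself a thread: SessionTrackerImpl extends ZooKeeperCriticalThread, which in turn extends ZooKeeperThread.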

```java
public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    protected SessionTracker sessionTracker;
    private FileTxnSnapLog txnLogFactory = null;
    private ZKDatabase zkDb;
    ...
    public synchronized void startup() {
        startupWithServerState(State.RUNNING);
    }

    private void startupWithServerState(State state) {
        // 8. Create and start the session manager SessionTracker
        if (sessionTracker == null) {
            createSessionTracker();
        }
        startSessionTracker();
        // 9. Initialize the ZooKeeperServer instance's request processing chain
        setupRequestProcessors();
        // Register the JMX beans
        registerJMX();
        // Start the thread that monitors JVM pauses
        startJvmPauseMonitor();
        setState(state);
        notifyAll();
    }

    protected void createSessionTracker() {
        sessionTracker = new SessionTrackerImpl(this, zkDb.getSessionWithTimeOuts(), tickTime,
                createSessionTrackerServerId, getZooKeeperServerListener());
    }

    protected void startSessionTracker() {
        ((SessionTrackerImpl) sessionTracker).start();
    }
    ...
}

public class SessionTrackerImpl extends ZooKeeperCriticalThread implements SessionTracker {
    private final ExpiryQueue<SessionImpl> sessionExpiryQueue;
    private final SessionExpirer expirer;
    ...
    @Override
    public void run() {
        while (running) {
            // Uses a bucketing strategy
            long waitTime = sessionExpiryQueue.getWaitTime();
            if (waitTime > 0) {
                Thread.sleep(waitTime);
                continue;
            }
            for (SessionImpl s : sessionExpiryQueue.poll()) {
                setSessionClosing(s.sessionId);
                expirer.expire(s);
            }
        }
    }
    ...
}
```

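IX. Initialize the standalone server instance's request processing chain

After the session manager has been created and started, ZooKeeperServer's startup() method goes on to initialize the request processing chain. It does this by calling setupRequestProcessors().

zk handles requests with the classic chain-of-responsibility pattern: the server passes each client request through several request processors in turn. At startup, therefore, the server links these processors together into a request processing chain.

The standalone server's request processing chain consists of three request processors:

First request processor: PrepRequestProcessor

Second request processor: SyncRequestProcessor

Third request processor: FinalRequestProcessor

The zk server calls these three processors strictly in order. PrepRequestProcessor and SyncRequestProcessor each run as their own thread. Incoming client requests are continuously added to a processor's request queue. Once a processor thread has started, it keeps taking requests off that queue and processing them.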

```java
public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    protected RequestProcessor firstProcessor;
    ...
    public synchronized void startup() {
        startupWithServerState(State.RUNNING);
    }

    private void startupWithServerState(State state) {
        // 8. Create and start the session manager SessionTracker
        if (sessionTracker == null) {
            createSessionTracker();
        }
        startSessionTracker();
        // 9. Initialize the ZooKeeperServer instance's request processing chain
        setupRequestProcessors();
        // Register the JMX beans
        registerJMX();
        // Start the thread that monitors JVM pauses
        startJvmPauseMonitor();
        setState(state);
        notifyAll();
    }

    protected void setupRequestProcessors() {
        // Build the chain back to front: Prep -> Sync -> Final
        RequestProcessor finalProcessor = new FinalRequestProcessor(this);
        RequestProcessor syncProcessor = new SyncRequestProcessor(this, finalProcessor);
        ((SyncRequestProcessor) syncProcessor).start();
        firstProcessor = new PrepRequestProcessor(this, syncProcessor);
        ((PrepRequestProcessor) firstProcessor).start();
    }
    ...
}

public interface RequestProcessor {
    void processRequest(Request request) throws RequestProcessorException;

    void shutdown();
}

public class PrepRequestProcessor extends ZooKeeperCriticalThread implements RequestProcessor {
    LinkedBlockingQueue<Request> submittedRequests = new LinkedBlockingQueue<Request>();
    private final RequestProcessor nextProcessor;
    ZooKeeperServer zks;
    ...
    public PrepRequestProcessor(ZooKeeperServer zks, RequestProcessor nextProcessor) {
        super("ProcessThread(sid:" + zks.getServerId() + " cport:" + zks.getClientPort() + "):",
                zks.getZooKeeperServerListener());
        this.nextProcessor = nextProcessor;
        this.zks = zks;
    }

    public void processRequest(Request request) {
        // Only enqueue; the processor thread handles the request asynchronously
        submittedRequests.add(request);
    }

    @Override
    public void run() {
        ...
        while (true) {
            Request request = submittedRequests.take();
            pRequest(request);
        }
        ...
    }

    protected void pRequest(Request request) throws RequestProcessorException {
        ...
        // Handle transactional requests
        pRequest2Txn(request.type, zks.getNextZxid(), request, setDataRequest, true);
        ...
        // Hand off to the next processor
        nextProcessor.processRequest(request);
    }
    ...
}
```
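The queue-plus-thread chain can be reduced to a small runnable model. Here a "prep" stage and a "sync" stage each own a LinkedBlockingQueue and a thread, and a "final" stage runs on the sync thread. The stages are wired back to front, as setupRequestProcessors() does. A sentinel request shuts the chain down stage by stage. All class names here are hypothetical. Requests are plain strings, and the shared trace list must be thread-safe (for example a CopyOnWriteArrayList).

```java
import java.util.List;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical stand-in for zk's RequestProcessor interface.
interface RequestProcessorSketch {
    void processRequest(String request);
    void shutdown();
}

// A processor with its own thread and queue, like PrepRequestProcessor / SyncRequestProcessor.
class QueuedProcessor extends Thread implements RequestProcessorSketch {
    // Unique sentinel object, compared by identity so no real request can match it
    private static final String POISON = new String("shutdown");
    private final LinkedBlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final String stage;
    private final RequestProcessorSketch next;
    private final List<String> trace;

    QueuedProcessor(String stage, RequestProcessorSketch next, List<String> trace) {
        super(stage);
        this.stage = stage;
        this.next = next;
        this.trace = trace;
    }

    @Override
    public void processRequest(String request) {
        queue.add(request); // only enqueue; this processor's thread does the work
    }

    @Override
    public void run() {
        try {
            while (true) {
                String r = queue.take();
                if (r == POISON) {
                    break;
                }
                trace.add(stage + ":" + r);
                next.processRequest(r); // hand off to the next processor
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        next.shutdown(); // propagate shutdown down the chain
    }

    @Override
    public void shutdown() {
        queue.add(POISON);
    }
}

// Last stage: processes synchronously on the caller's thread.
class FinalProcessorSketch implements RequestProcessorSketch {
    private final List<String> trace;

    FinalProcessorSketch(List<String> trace) {
        this.trace = trace;
    }

    public void processRequest(String request) { trace.add("final:" + request); }

    public void shutdown() { }
}

class ChainSketch {
    // Wire back to front and start the threaded stages; returns {prep, sync}.
    static QueuedProcessor[] setup(List<String> trace) {
        RequestProcessorSketch fin = new FinalProcessorSketch(trace);
        QueuedProcessor sync = new QueuedProcessor("sync", fin, trace);
        sync.start();
        QueuedProcessor prep = new QueuedProcessor("prep", sync, trace);
        prep.start();
        return new QueuedProcessor[] {prep, sync};
    }
}
```

Because each threaded stage has its own queue, entries from different stages can interleave in the trace. For any single request, though, the order is always prep, then sync, then final.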

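X. Register the standalone server instance with the network connection factory instance

This step calls setZooKeeperServer() inside ServerCnxnFactory.startup(). It registers the initialized standalone server instance ZooKeeperServer with the network connection factory instance ServerCnxnFactory. At the same time, the ServerCnxnFactory instance is registered with the ZooKeeperServer instance.

Note that setZooKeeperServer() actually runs right after start() and before startdata(), even though it is numbered last here. The zk server can serve clients normally only after zks.startup() has finished and set the server state to RUNNING.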

```java
public class NIOServerCnxnFactory extends ServerCnxnFactory {
    ...
    public void startup(ZooKeeperServer zks, boolean startServer) {
        // 6. Start the various threads
        start();
        // 10. Register the zk server instance
        setZooKeeperServer(zks);
        if (startServer) {
            // 7. Restore local data
            zks.startdata();
            // 8. Create and start the session manager SessionTracker
            // 9. Initialize zk's request processing chain
            zks.startup();
        }
    }
    ...
}

public abstract class ServerCnxnFactory {
    ...
    protected ZooKeeperServer zkServer;

    final public void setZooKeeperServer(ZooKeeperServer zks) {
        // Register the server with the factory...
        this.zkServer = zks;
        if (zks != null) {
            // ...and the factory with the server
            if (secure) {
                zks.setSecureServerCnxnFactory(this);
            } else {
                zks.setServerCnxnFactory(this);
            }
        }
    }
    ...
}

public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    ...
    protected ServerCnxnFactory serverCnxnFactory;
    protected ServerCnxnFactory secureServerCnxnFactory;

    public void setServerCnxnFactory(ServerCnxnFactory factory) {
        serverCnxnFactory = factory;
    }

    public void setSecureServerCnxnFactory(ServerCnxnFactory factory) {
        secureServerCnxnFactory = factory;
    }
    ...
}
```

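2. Startup process of the clustered zk server

(1) Pre-startup phase

(2) Initialization phase

(3) Leader election phase

(4) Leader and Follower startup phase

What is a cluster:

A cluster is a system made up of different machines on a network. Work in the cluster is coordinated by a scheduler server within the cluster.

The scheduler server in a cluster:

When the cluster receives a client request, the scheduler looks at how busy each machine in the cluster is. It then decides which server or network node should handle the request.

Cluster mode in zk:

A zk cluster divides its servers into three roles: Leader, Follower and Observer. Each role is responsible for different work while the cluster is running.

I. Leader (handles transactional requests + manages the other servers)

Handles transactional requests and manages the other servers in the cluster. The Leader is the cluster's work assigner and scheduler.

II. Follower (handles non-transactional requests + elects the Leader)

Handles non-transactional requests and takes part in electing the Leader. Transactional requests a Follower receives are forwarded to the Leader. During a Leader election, one Follower is chosen as the new Leader by majority vote.

III. Observer (handles non-transactional requests + neither votes nor can be elected)

Handles non-transactional requests. It does not vote in Leader elections and can never be elected Leader.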

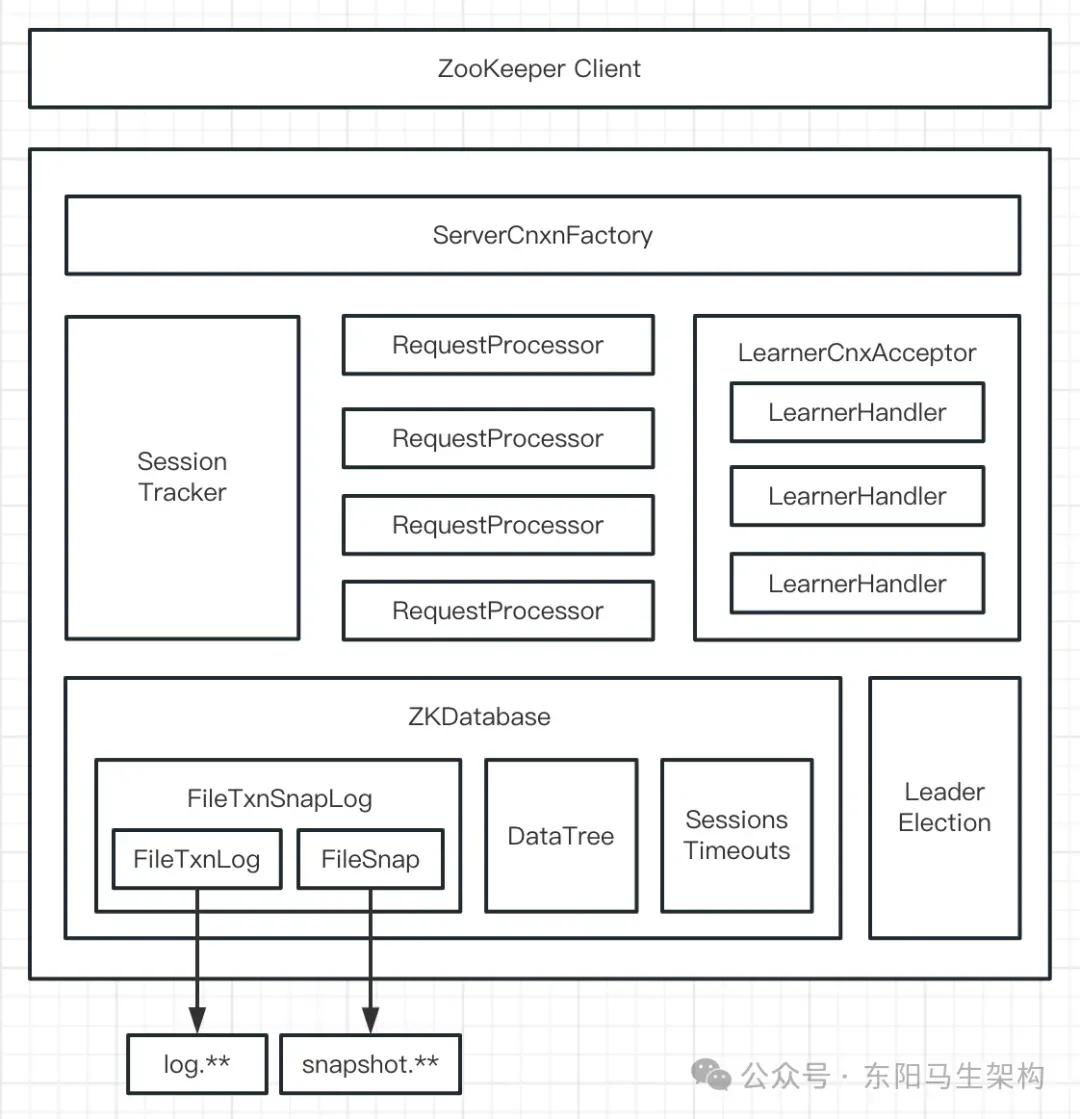

zk服务端整体架构图如下:

集群版zk服务端的启动分为四个阶段:

预启动阶段、初始化阶段、Leader选举阶段、Leader和Follower启动阶段

集群版zk服务端的启动流程图如下:

接下来介绍集群版的zk服务端是如何从初始化到对外提供服务的。

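(1) Pre-startup phase

Before the zk server initializes, it first parses and loads the configuration file and related information. The main steps of the pre-startup phase are:

I. QuorumPeerMain starts the program

II. Parse the zoo.cfg configuration file

III. Create and start the historical file cleaner

IV. Determine from the configuration whether to run in cluster mode or standalone mode

The zk server first calls QuorumPeerMain's main() method. QuorumPeerMain's initializeAndRun() method then parses zoo.cfg and creates and starts the historical file cleaner. It also decides whether the server starts in cluster mode or standalone mode. That decision uses the startup arguments (the args array) and the configuration (config.isDistributed()). If exactly one argument, the configuration file path, is passed and the configuration describes a distributed setup, runFromConfig() is called to complete cluster-mode initialization.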

```java
public class QuorumPeerMain {
    protected QuorumPeer quorumPeer;
    ...
    // 1. Program entry point
    public static void main(String[] args) {
        QuorumPeerMain main = new QuorumPeerMain();
        try {
            // Start the program
            main.initializeAndRun(args);
        } catch (IllegalArgumentException e) {
            ...
        }
        LOG.info("Exiting normally");
        System.exit(0);
    }

    protected void initializeAndRun(String[] args) {
        QuorumPeerConfig config = new QuorumPeerConfig();
        if (args.length == 1) {
            // 2. Parse the configuration file
            config.parse(args[0]);
        }
        // 3. Create and start the historical file cleaner
        DatadirCleanupManager purgeMgr = new DatadirCleanupManager(config.getDataDir(),
                config.getDataLogDir(), config.getSnapRetainCount(), config.getPurgeInterval());
        purgeMgr.start();
        // 4. Decide between cluster mode and standalone mode based on the configuration
        if (args.length == 1 && config.isDistributed()) {
            // Cluster mode
            runFromConfig(config);
        } else {
            // Standalone mode
            ZooKeeperServerMain.main(args);
        }
    }
    ...
}
```

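(2) Initialization phase

I. Create the network connection factory instance ServerCnxnFactory

II. Initialize the network connection factory instance ServerCnxnFactory

III. Create the clustered server instance QuorumPeer

IV. Create the data persistence utility FileTxnSnapLog and set it on the QuorumPeer instance

V. Create the in-memory database ZKDatabase and set it on the QuorumPeer instance

VI. Initialize the clustered server instance QuorumPeer

VII. Restore the QuorumPeer instance's local data

VIII. Start the ServerCnxnFactory's main threads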

```java
public class QuorumPeerMain {
    protected QuorumPeer quorumPeer;
    ...
    public void runFromConfig(QuorumPeerConfig config) {
        ...
        ServerCnxnFactory cnxnFactory = null;
        if (config.getClientPortAddress() != null) {
            // 1. Create the network connection factory instance ServerCnxnFactory
            cnxnFactory = ServerCnxnFactory.createFactory();
            // 2. Initialize the network connection factory instance ServerCnxnFactory
            cnxnFactory.configure(config.getClientPortAddress(), config.getMaxClientCnxns(), false);
        }
        // Next, initialize the clustered server instance QuorumPeer
        // 3. Create the clustered server instance QuorumPeer
        quorumPeer = getQuorumPeer();
        // 4. Create zk's data manager FileTxnSnapLog
        quorumPeer.setTxnFactory(new FileTxnSnapLog(config.getDataLogDir(), config.getDataDir()));
        quorumPeer.enableLocalSessions(config.areLocalSessionsEnabled());
        quorumPeer.enableLocalSessionsUpgrading(config.isLocalSessionsUpgradingEnabled());
        quorumPeer.setElectionType(config.getElectionAlg());
        quorumPeer.setMyid(config.getServerId());
        quorumPeer.setTickTime(config.getTickTime());
        quorumPeer.setMinSessionTimeout(config.getMinSessionTimeout());
        quorumPeer.setMaxSessionTimeout(config.getMaxSessionTimeout());
        quorumPeer.setInitLimit(config.getInitLimit());
        quorumPeer.setSyncLimit(config.getSyncLimit());
        quorumPeer.setConfigFileName(config.getConfigFilename());
        // 5. Create and initialize the in-memory database ZKDatabase
        quorumPeer.setZKDatabase(new ZKDatabase(quorumPeer.getTxnFactory()));
        quorumPeer.setQuorumVerifier(config.getQuorumVerifier(), false);
        if (config.getLastSeenQuorumVerifier() != null) {
            quorumPeer.setLastSeenQuorumVerifier(config.getLastSeenQuorumVerifier(), false);
        }
        quorumPeer.initConfigInZKDatabase();
        quorumPeer.setCnxnFactory(cnxnFactory);
        quorumPeer.setSslQuorum(config.isSslQuorum());
        quorumPeer.setUsePortUnification(config.shouldUsePortUnification());
        quorumPeer.setLearnerType(config.getPeerType());
        quorumPeer.setSyncEnabled(config.getSyncEnabled());
        quorumPeer.setQuorumListenOnAllIPs(config.getQuorumListenOnAllIPs());
        if (config.sslQuorumReloadCertFiles) {
            quorumPeer.getX509Util().enableCertFileReloading();
        }
        // sets quorum sasl authentication configurations
        quorumPeer.setQuorumSaslEnabled(config.quorumEnableSasl);
        if (quorumPeer.isQuorumSaslAuthEnabled()) {
            quorumPeer.setQuorumServerSaslRequired(config.quorumServerRequireSasl);
            quorumPeer.setQuorumLearnerSaslRequired(config.quorumLearnerRequireSasl);
            quorumPeer.setQuorumServicePrincipal(config.quorumServicePrincipal);
            quorumPeer.setQuorumServerLoginContext(config.quorumServerLoginContext);
            quorumPeer.setQuorumLearnerLoginContext(config.quorumLearnerLoginContext);
        }
        quorumPeer.setQuorumCnxnThreadsSize(config.quorumCnxnThreadsSize);
        // 6. Initialize the clustered server instance QuorumPeer
        quorumPeer.initialize();
        // Start QuorumPeer (steps 7 onward happen inside start())
        quorumPeer.start();
        // join() suspends the current thread until the QuorumPeer thread finishes
        quorumPeer.join();
    }

    protected QuorumPeer getQuorumPeer() throws SaslException {
        return new QuorumPeer();
    }
}

public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    ServerCnxnFactory cnxnFactory;
    ServerCnxnFactory secureCnxnFactory;
    ...
    public synchronized void start() {
        // 7. Restore the QuorumPeer instance's local data
        loadDataBase();
        // 8. Start the ServerCnxnFactory's main threads
        startServerCnxnFactory();
        adminServer.start();
        // 9. Initialize Leader election
        startLeaderElection();
        // Start the thread that monitors JVM pauses
        startJvmPauseMonitor();
        // 10. Start the QuorumPeer thread itself
        super.start();
    }

    private void startServerCnxnFactory() {
        if (cnxnFactory != null) {
            cnxnFactory.start();
        }
        if (secureCnxnFactory != null) {
            secureCnxnFactory.start();
        }
    }
    ...
}

public abstract class ServerCnxnFactory {
    ...
    static public ServerCnxnFactory createFactory() throws IOException {
        String serverCnxnFactoryName = System.getProperty(ZOOKEEPER_SERVER_CNXN_FACTORY);
        if (serverCnxnFactoryName == null) {
            serverCnxnFactoryName = NIOServerCnxnFactory.class.getName();
        }
        ServerCnxnFactory serverCnxnFactory = (ServerCnxnFactory) Class.forName(serverCnxnFactoryName)
                .getDeclaredConstructor().newInstance();
        return serverCnxnFactory;
    }
    ...
}

public class NIOServerCnxnFactory extends ServerCnxnFactory {
    // Maximum number of client connections
    protected int maxClientCnxns = 60;
    // Thread that handles expired connections
    private ConnectionExpirerThread expirerThread;
    // Thread that accepts client connections
    private AcceptThread acceptThread;
    // Threads that handle client requests
    private final Set<SelectorThread> selectorThreads = new HashSet<SelectorThread>();
    // Timeout for connections that have not yet established a session
    int sessionlessCnxnTimeout;
    private ExpiryQueue<NIOServerCnxn> cnxnExpiryQueue;
    // Number of selector threads; defaults to sqrt(numCores / 2), at least 1
    private int numSelectorThreads;
    // Number of worker threads
    private int numWorkerThreads;
    ...
    public void configure(InetSocketAddress addr, int maxcc, boolean secure) throws IOException {
        ...
        maxClientCnxns = maxcc;
        sessionlessCnxnTimeout = Integer.getInteger(ZOOKEEPER_NIO_SESSIONLESS_CNXN_TIMEOUT, 10000);
        cnxnExpiryQueue = new ExpiryQueue<NIOServerCnxn>(sessionlessCnxnTimeout);
        // Create a ConnectionExpirerThread that automatically closes expired connections
        expirerThread = new ConnectionExpirerThread();
        int numCores = Runtime.getRuntime().availableProcessors();
        numSelectorThreads = Integer.getInteger(ZOOKEEPER_NIO_NUM_SELECTOR_THREADS,
                Math.max((int) Math.sqrt((float) numCores / 2), 1));
        numWorkerThreads = Integer.getInteger(ZOOKEEPER_NIO_NUM_WORKER_THREADS, 2 * numCores);
        ...
        // Create a group of SelectorThreads
        for (int i = 0; i < numSelectorThreads; ++i) {
            selectorThreads.add(new SelectorThread(i));
        }
        // Open the ServerSocketChannel
        this.ss = ServerSocketChannel.open();
        ss.socket().setReuseAddress(true);
        // Bind the port, bringing up the NIO server
        ss.socket().bind(addr);
        ss.configureBlocking(false);
        // Create an AcceptThread
        acceptThread = new AcceptThread(ss, addr, selectorThreads);
    }

    public void start() {
        stopped = false;
        if (workerPool == null) {
            workerPool = new WorkerService("NIOWorker", numWorkerThreads, false);
        }
        for (SelectorThread thread : selectorThreads) {
            if (thread.getState() == Thread.State.NEW) {
                thread.start();
            }
        }
        if (acceptThread.getState() == Thread.State.NEW) {
            acceptThread.start();
        }
        if (expirerThread.getState() == Thread.State.NEW) {
            expirerThread.start();
        }
    }
}
```

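I. Create the network connection factory instance ServerCnxnFactory

In QuorumPeerMain.runFromConfig(), ServerCnxnFactory.createFactory() is called first to create the server-side network connection factory.

createFactory() first reads a configuration value to decide between NIO and Netty. It then instantiates the chosen network connection factory via reflection.

The zookeeper.serverCnxnFactory system property selects the implementation used to build the factory: zk's own NIO implementation or Netty.

II. Initialize the network connection factory instance ServerCnxnFactory

In QuorumPeerMain.runFromConfig(), after the ServerCnxnFactory instance has been created, its configure() method is called to initialize it.

Take NIOServerCnxnFactory.configure() as an example. This method mainly binds the NIO server socket and creates, but does not yet start, three kinds of threads:

1) An AcceptThread that handles client connections

2) A group of SelectorThreads that handle client requests

3) A ConnectionExpirerThread that handles expired connections

Once ServerCnxnFactory has been initialized, the NIO server's port is already open and clients can reach the client port (2181 by default). However, the zk server still cannot process client requests properly at this point.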

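III. Create the clustered server instance QuorumPeer

In QuorumPeerMain.runFromConfig(), once the ServerCnxnFactory instance has been created and initialized, QuorumPeerMain's getQuorumPeer() method is called to create the clustered server instance.

ZooKeeperServer is the core entity class of the standalone server.

QuorumPeer is the core entity class of the clustered server.

Each QuorumPeer instance can be thought of as one server in the cluster. In zk cluster mode, a QuorumPeer instance is generally in one of three states:

State 1: taking part in Leader election

State 2: acting as a Follower that syncs data from the Leader

State 3: acting as the Leader that manages the cluster's Followers

In QuorumPeerMain.runFromConfig(), after the QuorumPeer instance has been created, the core utility classes the clustered server needs at runtime are registered with it. These are the same utilities the standalone server needs, such as the persistence class FileTxnSnapLog and the NIO factory class ServerCnxnFactory. Settings from the configuration file are also applied to the QuorumPeer instance, such as the server address list, the Leader election algorithm and the session timeouts.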
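These states drive a loop in QuorumPeer.run(): while LOOKING it runs an election, and the election result decides which role the server takes next. The sketch models that loop. `PeerState` and `PeerLoopSketch` are hypothetical names, and `electionResult` stands in for lookForLeader(). In the real code, the FOLLOWING and LEADING branches block for as long as the role lasts, and the server drops back to LOOKING when that role ends. Here each role simply ends after one step so the behavior is easy to trace.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical model of the state loop in QuorumPeer.run().
enum PeerState { LOOKING, FOLLOWING, LEADING, OBSERVING }

class PeerLoopSketch {
    private PeerState state = PeerState.LOOKING;
    final List<String> log = new ArrayList<>();

    // electionResult stands in for lookForLeader(): it returns the state to enter next.
    void run(Supplier<PeerState> electionResult, int maxRounds) {
        for (int round = 0; round < maxRounds; round++) {
            switch (state) {
                case LOOKING:
                    log.add("election");
                    state = electionResult.get();
                    break;
                case FOLLOWING:
                    log.add("follow leader");   // sync data from the Leader
                    state = PeerState.LOOKING;  // e.g. lost contact with the Leader
                    break;
                case LEADING:
                    log.add("lead followers");  // manage the Followers
                    state = PeerState.LOOKING;
                    break;
                case OBSERVING:
                    log.add("observe leader");
                    state = PeerState.LOOKING;
                    break;
            }
        }
    }
}
```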

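IV. Create the data persistence utility FileTxnSnapLog and set it on the QuorumPeer instance

FileTxnSnapLog persists the zk server's in-memory data. It writes that data to the transaction log files and snapshot files in the directories given in the configuration file.

In QuorumPeerMain.runFromConfig(), right after the QuorumPeer instance has been created, "new FileTxnSnapLog()" creates a FileTxnSnapLog instance. It uses the dataDir (snapshot directory) and dataLogDir (transaction log directory) from zoo.cfg. The instance is then set on the QuorumPeer instance.

V. Create the in-memory database ZKDatabase and set it on the QuorumPeer instance

ZKDatabase is zk's in-memory database. It manages all of zk's session records as well as the DataTree and the storage of transaction logs.

In QuorumPeerMain.runFromConfig(), the QuorumPeer instance is created first and FileTxnSnapLog is set on it. A ZKDatabase instance is then created with FileTxnSnapLog as its argument and set on the QuorumPeer instance.

VI. Initialize the clustered server instance QuorumPeer

Besides having core components registered with it, the QuorumPeer instance also needs some parameters from zoo.cfg, such as the server address list, the Leader election algorithm and the session timeouts. The core components are the data persistence utility FileTxnSnapLog, the server-side network connection factory ServerCnxnFactory and the in-memory database ZKDatabase.

Once the QuorumPeer instance has been initialized and the QuorumPeer thread started, QuorumPeer's join() method suspends the main thread until the QuorumPeer thread exits.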

VII. Restore the QuorumPeer instance's local data

In QuorumPeerMain.runFromConfig(), after the QuorumPeer instance has been initialized, QuorumPeer's start() method is called to start this server in the cluster.

QuorumPeer.start() first calls loadDataBase() to restore data.

VIII. Start the ServerCnxnFactory's main threads

In QuorumPeer.start(), after loadDataBase() has restored local data, startServerCnxnFactory() is called to start the network connection factory's main threads.

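(3) Leader election phase

I. Initialize Leader election (initialize the current vote + listen on the election port + start the election daemon threads)

II. Start the QuorumPeer thread to check the current server state

III. Run the Leader election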

```java
public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    private volatile boolean running = true;
    private ServerState state = ServerState.LOOKING;
    volatile private Vote currentVote;
    Election electionAlg;
    ...
    public synchronized void start() {
        // 7. Restore the QuorumPeer instance's local data
        loadDataBase();
        // 8. Start the ServerCnxnFactory's main threads
        startServerCnxnFactory();
        adminServer.start();
        // 9. Initialize Leader election (initialize the current vote + listen on the
        //    election port + start the election daemon threads)
        startLeaderElection();
        // Start the thread that monitors JVM pauses
        startJvmPauseMonitor();
        // 10. Start the thread, which executes QuorumPeer.run()
        super.start();
    }

    synchronized public void startLeaderElection() {
        if (getPeerState() == ServerState.LOOKING) {
            // Build an initial vote from this server's ID, latest zxid and current epoch
            currentVote = new Vote(myid, getLastLoggedZxid(), getCurrentEpoch());
        }
        ...
        // Create the election algorithm (FastLeaderElection); each QuorumPeer instance has one
        this.electionAlg = createElectionAlgorithm(electionType);
    }

    protected Election createElectionAlgorithm(int electionAlgorithm) {
        Election le = null;
        QuorumCnxManager qcm = createCnxnManager();
        QuorumCnxManager.Listener listener = qcm.listener;
        // Start listening on the Leader election port and wait for other servers to connect
        listener.start();
        // Create the election algorithm; each QuorumPeer instance has its own instance
        FastLeaderElection fle = new FastLeaderElection(this, qcm);
        // Start the algorithm's daemon threads; the sender thread keeps sending out the current vote
        fle.start();
        le = fle;
        return le;
    }

    public QuorumCnxManager createCnxnManager() {
        return new QuorumCnxManager(this, this.getId(), this.getView(), this.authServer,
                this.authLearner, this.tickTime * this.syncLimit, this.getQuorumListenOnAllIPs(),
                this.quorumCnxnThreadsSize, this.isQuorumSaslAuthEnabled());
    }
    ...
    public synchronized ServerState getPeerState() {
        return state;
    }

    @Override
    public void run() {
        ...
        while (running) {
            // Check the current server state + run Leader election
            switch (getPeerState()) {
            case LOOKING:
                LOG.info("LOOKING");
                ...
                // Set the current vote to the election result
                setCurrentVote(electionAlg.lookForLeader());
                break;
            case OBSERVING:
                ...
            ...
            }
        }
    }
    ...
}

// Sets up the server used for elections; listens on the election port (3888 in a typical configuration)
public class QuorumCnxManager {
    ...
    public class Listener extends ZooKeeperThread {
        volatile ServerSocket ss = null; // blocking (BIO) ServerSocket
        ...
        @Override
        public void run() {
            int numRetries = 0;
            InetSocketAddress addr;
            Socket client = null;
            Exception exitException = null;
            while ((!shutdown) && (portBindMaxRetry == 0 || numRetries < portBindMaxRetry)) {
                ss = new ServerSocket();
                ss.setReuseAddress(true);
                if (self.getQuorumListenOnAllIPs()) {
                    int port = self.getElectionAddress().getPort();
                    addr = new InetSocketAddress(port);
                } else {
                    self.recreateSocketAddresses(self.getId());
                    addr = self.getElectionAddress();
                }
                setName(addr.toString());
                ss.bind(addr);
                while (!shutdown) {
                    // Block until another server connects to the election port
                    client = ss.accept();
                    setSockOpts(client);
                    LOG.info("Received connection request " + client.getRemoteSocketAddress());
                    if (quorumSaslAuthEnabled) {
                        receiveConnectionAsync(client);
                    } else {
                        receiveConnection(client);
                    }
                    numRetries = 0;
                }
            }
            LOG.info("Leaving listener");
            if (!shutdown) {
                LOG.error("As I'm leaving the listener thread, "
                        + "I won't be able to participate in leader "
                        + "election any longer: " + self.getElectionAddress());
            } else if (ss != null) {
                try {
                    ss.close();
                } catch (IOException ie) {
                    LOG.debug("Error closing server socket", ie);
                }
            }
        }
        ...
    }
    ...
}
```

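In QuorumPeer's start() method, once loadDataBase() has restored the local data and startServerCnxnFactory() has started the ServerCnxnFactory thread, startLeaderElection() is called to prepare for Leader election; the election itself then runs in the QuorumPeer thread.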

Step 1. Initialize Leader election (initialize the current vote + listen on the election port + start the election daemon threads)

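Leader election is the biggest difference between a cluster-mode zk server and a standalone one. First, QuorumPeer's startLeaderElection() method initializes the current vote with "new Vote()", built from the server ID, the latest transaction ID (ZXID) and the server's current epoch. In other words, while Leader election is being initialized, every server in the cluster votes for itself.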

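After initializing the current vote, startLeaderElection() calls QuorumPeer's createElectionAlgorithm() to initialize the election algorithm, i.e. to listen on the election port (3888 by convention) and start the election daemon threads.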

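Inside createElectionAlgorithm(), the network I/O component needed for Leader election, QuorumCnxManager, is created first. QuorumCnxManager starts a Listener thread that listens on the election port and waits for connection requests from the other servers in the cluster.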

Next, a FastLeaderElection instance is created. zk uses the FastLeaderElection algorithm by default, and every QuorumPeer instance has its own election algorithm instance.

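Then the daemon threads used by FastLeaderElection are started: the sending thread keeps taking messages off the send queue and sending them, and the receiving thread keeps taking messages off the receive queue and processing them.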
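The send-queue/receive-queue pattern just described can be sketched with two daemon threads and `BlockingQueue`s. This is a simplified illustration under stated assumptions, not FastLeaderElection's real sender/receiver code: the class and member names (`VoteMessengerSketch`, `sendQueue`, `wire`, `awaitHandled`) are hypothetical, votes are plain strings, and the in-memory `wire` queue stands in for the socket connections that QuorumCnxManager manages.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class VoteMessengerSketch {
    // Votes produced by the election logic, waiting to be sent
    final BlockingQueue<String> sendQueue = new LinkedBlockingQueue<>();
    // Hypothetical stand-in for the network between peers
    final BlockingQueue<String> wire = new LinkedBlockingQueue<>();
    // Votes the receiving thread has processed
    final List<String> processed = Collections.synchronizedList(new ArrayList<>());
    private volatile boolean stop = false;

    void start() {
        Thread sender = new Thread(() -> {
            try {
                while (!stop) {
                    // Wait briefly for a queued vote, then "send" it
                    String vote = sendQueue.poll(100, TimeUnit.MILLISECONDS);
                    if (vote != null) wire.put(vote);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "sender-sketch");
        Thread receiver = new Thread(() -> {
            try {
                while (!stop) {
                    // Take a received vote off the queue and process it
                    String vote = wire.poll(100, TimeUnit.MILLISECONDS);
                    if (vote != null) processed.add("handled:" + vote);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "receiver-sketch");
        // Daemon threads do not keep the JVM alive on their own
        sender.setDaemon(true);
        receiver.setDaemon(true);
        sender.start();
        receiver.start();
    }

    // Poll until the given vote has been handled or the timeout elapses
    boolean awaitHandled(String vote, long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        while (System.currentTimeMillis() < deadline) {
            if (processed.contains("handled:" + vote)) return true;
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return processed.contains("handled:" + vote);
    }

    void halt() { stop = true; }
}
```

Decoupling producers from the network through queues is what lets the election logic enqueue a vote and move on without blocking on slow or unreachable peers.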

Step 2. Start the QuorumPeer thread to check the current server state

QuorumPeer is itself a thread (it extends ZooKeeperThread). Once startLeaderElection() has initialized Leader election, the QuorumPeer thread is started and keeps checking the current server state in a while loop.

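The core job of the QuorumPeer thread is to keep checking the server's state and act on it. The state switches among LOOKING, LEADING and FOLLOWING/OBSERVING. When a cluster-mode zk server starts, QuorumPeer's initial state is LOOKING, so the QuorumPeer thread concludes that it must update its current vote by running a Leader election.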
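The state-driven loop can be sketched as a switch over an enum. This is a hypothetical, bounded version (`PeerLoopSketch` and its `Supplier`-based election are assumptions), not QuorumPeer.run(): the real loop runs while `running` is true and, in the LEADING/FOLLOWING branches, creates the role instance and blocks in lead() or followLeader(). Here a role branch simply falls back to LOOKING, modelling what happens once leading or following ends.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

class PeerLoopSketch {
    enum ServerState { LOOKING, FOLLOWING, LEADING, OBSERVING }

    private ServerState state = ServerState.LOOKING; // every peer starts out LOOKING
    private final Supplier<ServerState> election;    // hypothetical stand-in for lookForLeader()
    final List<String> trace = new ArrayList<>();     // what each iteration did

    PeerLoopSketch(Supplier<ServerState> election) { this.election = election; }

    // Bounded number of iterations instead of while (running), so the sketch terminates
    void run(int maxIterations) {
        for (int i = 0; i < maxIterations; i++) {
            switch (state) {
                case LOOKING:
                    trace.add("LOOKING");
                    state = election.get(); // the election decides this peer's role
                    break;
                case LEADING:
                    trace.add("LEADING");   // real code: makeLeader(...) then leader.lead()
                    state = ServerState.LOOKING;
                    break;
                case FOLLOWING:
                    trace.add("FOLLOWING"); // real code: makeFollower(...) then followLeader()
                    state = ServerState.LOOKING;
                    break;
                case OBSERVING:
                    trace.add("OBSERVING");
                    state = ServerState.LOOKING;
                    break;
            }
        }
    }
}
```

With an election that always returns FOLLOWING, three iterations record LOOKING, FOLLOWING, LOOKING: the peer only re-enters an election after its role loop has ended.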

Step 3. Run the Leader election

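zk's Leader election is essentially a series of votes exchanged among all machines in the cluster to pick the most suitable one as Leader, while the rest become Followers or Observers. The QuorumPeer thread then initializes each machine according to the role it has been assigned.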

The Leader election algorithm:

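The more up-to-date a machine's data, the more likely it is to become Leader. Servers compare the largest ZXID each has processed to see whose data is newest; if the ZXIDs are equal, the server with the largest SID (server ID) becomes Leader. (Strictly, FastLeaderElection compares the epoch first, then the ZXID, then the SID.)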
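The ordering above can be written as a single comparison. The sketch mirrors the order used by FastLeaderElection's totalOrderPredicate() (epoch, then ZXID, then SID), but the `Vote` class here is a hypothetical minimal record and the quorum-weight check the real method also performs is left out.

```java
class VoteOrderSketch {
    // Minimal vote record; field names are assumptions for this sketch
    static final class Vote {
        final long epoch; // epoch of the proposed leader
        final long zxid;  // last logged ZXID of the proposed leader
        final long sid;   // server id (myid) of the proposed leader

        Vote(long epoch, long zxid, long sid) {
            this.epoch = epoch;
            this.zxid = zxid;
            this.sid = sid;
        }
    }

    // True if the incoming vote should replace the current one:
    // higher epoch wins, then higher ZXID, then higher SID
    static boolean supersedes(Vote incoming, Vote current) {
        if (incoming.epoch != current.epoch) return incoming.epoch > current.epoch;
        if (incoming.zxid != current.zxid) return incoming.zxid > current.zxid;
        return incoming.sid > current.sid;
    }
}
```

For example, with three servers in the same epoch and the same ZXID, each first votes for itself, then switches to server 3's vote, so server 3 becomes Leader; a server holding a larger ZXID would beat it regardless of SID.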

(4) Leader and Follower startup phase

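Step 1. Create the Leader server-side instance and the Follower client-side instance

Step 2. On startup, the Leader creates a LearnerCnxAcceptor to listen for Learner connections

Step 3. On startup, a Learner initiates a connection to the Leader

Step 4. The Leader creates a LearnerHandler for each Learner that connects

Step 5. Once connected, the Learner registers with the Leader by sending LearnerInfo

Step 6. The Leader parses LearnerInfo, works out the epoch and sends LeaderInfo to the Learner

Step 7. On receiving LeaderInfo, the Learner replies with an ackNewEpoch message

Step 8. After receiving ackNewEpoch from a majority of Learners, the Leader starts data synchronization

Step 9. Once a majority of Learners have finished syncing, the server instances bound to the Leader and the Learners are started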

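Once zk has finished Leader election, essentially every server in the cluster knows its role. zk divides the machines in a cluster into three roles, Leader, Follower and Observer, and each plays a different part.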

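The Leader mainly processes transactional requests from clients, such as data changes, and manages and coordinates the Follower servers. A Follower mainly serves non-transactional requests such as reads, forwarding any transactional request it receives to the Leader. An Observer works much like a Follower; the key difference is that it takes no part in Leader election (and does not vote on write proposals either).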

These three roles also behave differently during startup. Below we analyze how the Leader and Follower roles work while starting, i.e. the steps in which they interact with each other.

Startup interaction between the Leader (server side) and the Follower (client side):

Step 1. Create the Leader server-side instance and the Follower client-side instance

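Because the QuorumPeer thread keeps checking the server's state and acting on it, once the QuorumPeer thread and the FastLeaderElection daemon threads have finished the Leader election, each zk server creates the role instance that matches its state in order to carry out data synchronization.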

For example, if a QuorumPeer finds through getPeerState() that its state is LEADING, it calls QuorumPeer's makeLeader() to create the Leader server-side instance. If the state is FOLLOWING, it calls QuorumPeer's makeFollower() to create the Follower client-side instance.

```java
public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    private volatile boolean running = true;
    public Follower follower;
    public Leader leader;
    public Observer observer;
    ...
    public synchronized ServerState getPeerState() {
        return state;
    }

    @Override
    public void run() {
        ...
        while (running) {
            // Check the current server state + run Leader election
            switch (getPeerState()) {
                case LOOKING:
                    LOG.info("LOOKING");
                    ...
                    // Set the current vote
                    setCurrentVote(electionAlg.lookForLeader());
                    break;
                case FOLLOWING:
                    LOG.info("FOLLOWING");
                    //1. Create the Follower client-side instance
                    setFollower(makeFollower(logFactory));
                    // Start the Follower; followLeader() contains a while loop that handles heartbeats etc.
                    follower.followLeader();
                    ...
                    break;
                case LEADING:
                    LOG.info("LEADING");
                    //1. Create the Leader server-side instance
                    setLeader(makeLeader(logFactory));
                    //2. Start the Leader; lead() contains a while loop that handles heartbeats etc.
                    leader.lead();
                    setLeader(null);
                    ...
                    break;
                ...
            }
            ...
        }
    }

    // The Follower client-side instance is bound to a FollowerZooKeeperServer instance
    protected Follower makeFollower(FileTxnSnapLog logFactory) throws IOException {
        return new Follower(this, new FollowerZooKeeperServer(logFactory, this, this.zkDb));
    }

    // The Leader server-side instance is bound to a LeaderZooKeeperServer instance
    protected Leader makeLeader(FileTxnSnapLog logFactory) throws IOException, X509Exception {
        return new Leader(this, new LeaderZooKeeperServer(logFactory, this, this.zkDb));
    }
    ...
}
```

Step 2. On startup, the Leader creates a LearnerCnxAcceptor to listen for Learner connections

After the QuorumPeer thread has created the Leader server-side instance through makeLeader(), it starts that instance by calling Leader's lead() method.

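First, the Leader constructor creates a BIO ServerSocket bound to the quorum port (2888 by convention). Then Leader's lead() method creates the Learner acceptor LearnerCnxAcceptor. lead() also contains a while loop that keeps handling heartbeats and similar traffic with the Learners.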

Every non-Leader role can be called a Learner: Follower (like Observer) extends Learner. The LearnerCnxAcceptor accepts the connection requests of all Learner clients.

```java
public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    ...
    // The Leader server-side instance is bound to a LeaderZooKeeperServer instance
    protected Leader makeLeader(FileTxnSnapLog logFactory) throws IOException, X509Exception {
        return new Leader(this, new LeaderZooKeeperServer(logFactory, this, this.zkDb));
    }
}

public class Leader {
    private final ServerSocket ss; // BIO ServerSocket

    //1. Create the Leader network server-side instance
    Leader(QuorumPeer self, LeaderZooKeeperServer zk) throws IOException {
        this.self = self;
        this.proposalStats = new BufferStats();
        if (self.shouldUsePortUnification() || self.isSslQuorum()) {
            boolean allowInsecureConnection = self.shouldUsePortUnification();
            if (self.getQuorumListenOnAllIPs()) {
                ss = new UnifiedServerSocket(self.getX509Util(), allowInsecureConnection, self.getQuorumAddress().getPort());
            } else {
                ss = new UnifiedServerSocket(self.getX509Util(), allowInsecureConnection);
            }
        } else {
            if (self.getQuorumListenOnAllIPs()) {
                ss = new ServerSocket(self.getQuorumAddress().getPort());
            } else {
                ss = new ServerSocket();
            }
        }
        ss.setReuseAddress(true);
        if (!self.getQuorumListenOnAllIPs()) {
            ss.bind(self.getQuorumAddress());
        }
        this.zk = zk;
        this.learnerSnapshotThrottler = createLearnerSnapshotThrottler(maxConcurrentSnapshots, maxConcurrentSnapshotTimeout);
    }

    //2. Start the Leader network server-side instance
    void lead() throws IOException, InterruptedException {
        ...
        //2. On startup the Leader creates the Learner acceptor LearnerCnxAcceptor
        // Start thread that waits for connection requests from new followers.
        cnxAcceptor = new LearnerCnxAcceptor();
        cnxAcceptor.start();
        ...
    }
    ...
}
```

Step 3. On startup, a Learner initiates a connection to the Leader

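After the QuorumPeer thread has created the Follower client-side instance through makeFollower(), it starts it by calling Follower's followLeader() method. In followLeader(), that is, right after the Learner client instance has been created, findLeader() locates the Leader server from the result of the Leader election, and connectToLeader() then establishes a connection to it. connectToLeader() makes at most 5 attempts, sleeping 1 second after each failed attempt, and the whole retry sequence must finish within initLimit * tickTime. followLeader() also contains a while loop that keeps processing heartbeats and other messages.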

```java
public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    ...
    // The Follower client-side instance is bound to a FollowerZooKeeperServer instance
    protected Follower makeFollower(FileTxnSnapLog logFactory) throws IOException {
        return new Follower(this, new FollowerZooKeeperServer(logFactory, this, this.zkDb));
    }
}

public class Follower extends Learner {
    final FollowerZooKeeperServer fzk;

    //1. Create the Follower network client-side instance
    Follower(QuorumPeer self, FollowerZooKeeperServer zk) {
        this.self = self;
        this.zk = zk;
        this.fzk = zk;
    }

    //2. Start the Follower network client, i.e. start the Learner network client
    void followLeader() throws InterruptedException {
        ...
        //3. Find the Leader network server from the result of the Leader election
        QuorumServer leaderServer = findLeader();
        //3. Establish the connection to the Leader network server
        connectToLeader(leaderServer.addr, leaderServer.hostname);
        ...
        QuorumPacket qp = new QuorumPacket();
        while (this.isRunning()) {
            // Mainly handles heartbeat messages
            readPacket(qp);
            processPacket(qp);
        }
    }
    ...
}

public class Learner {
    ...
    QuorumPeer self;
    LearnerZooKeeperServer zk;
    protected BufferedOutputStream bufferedOutput;
    protected Socket sock;
    protected InputArchive leaderIs;
    protected OutputArchive leaderOs;
    ...
    //3. Find the Leader server from the result of the Leader election
    protected QuorumServer findLeader() {
        QuorumServer leaderServer = null;
        // Find the leader by id
        Vote current = self.getCurrentVote();
        for (QuorumServer s : self.getView().values()) {
            if (s.id == current.getId()) {
                // Ensure we have the leader's correct IP address before attempting to connect.
                s.recreateSocketAddresses();
                leaderServer = s;
                break;
            }
        }
        if (leaderServer == null) {
            LOG.warn("Couldn't find the leader with id = " + current.getId());
        }
        return leaderServer;
    }

    //3. Establish the connection to the Leader server
    protected void connectToLeader(InetSocketAddress addr, String hostname) throws IOException, InterruptedException {
        this.sock = createSocket();
        int initLimitTime = self.tickTime * self.initLimit;
        int remainingInitLimitTime = initLimitTime;
        long startNanoTime = nanoTime();
        for (int tries = 0; tries < 5; tries++) {
            try {
                remainingInitLimitTime = initLimitTime - (int) ((nanoTime() - startNanoTime) / 1000000);
                if (remainingInitLimitTime <= 0) {
                    LOG.error("initLimit exceeded on retries.");
                    throw new IOException("initLimit exceeded on retries.");
                }
                sockConnect(sock, addr, Math.min(self.tickTime * self.syncLimit, remainingInitLimitTime));
                if (self.isSslQuorum()) {
                    ((SSLSocket) sock).startHandshake();
                }
                sock.setTcpNoDelay(nodelay);
                break;
            } catch (IOException e) {
                ...
            }
            Thread.sleep(1000);
        }
        self.authLearner.authenticate(sock, hostname);
        // leaderIs and leaderOs below are built on top of the Socket, so registerWithLeader()
        // can first write to leaderOs and then wait to read from leaderIs
        leaderIs = BinaryInputArchive.getArchive(new BufferedInputStream(sock.getInputStream()));
        bufferedOutput = new BufferedOutputStream(sock.getOutputStream());
        leaderOs = BinaryOutputArchive.getArchive(bufferedOutput);
    }

    protected void sockConnect(Socket sock, InetSocketAddress addr, int timeout) throws IOException {
        sock.connect(addr, timeout);
    }
}
```
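The retry policy in connectToLeader(), a fixed number of tries bounded by an overall time budget, can be isolated into a small helper. This is a hypothetical sketch: `ConnectRetrySketch`, the `Attempt` interface and the parameter names are assumptions, and the budget plays the role of initLimit * tickTime. As in the excerpt, each try gets the smaller of a per-try timeout and whatever budget remains.

```java
import java.io.IOException;

class ConnectRetrySketch {
    // Hypothetical connection attempt: returns normally on success, throws on failure
    interface Attempt {
        void connect(int timeoutMs) throws IOException;
    }

    // Tries up to maxTries times within budgetMs overall, sleeping sleepMs between failures.
    // Returns the number of attempts that were needed.
    static int connectWithRetry(Attempt attempt, int budgetMs, int perTryMs, int maxTries, long sleepMs)
            throws IOException, InterruptedException {
        long start = System.nanoTime();
        IOException last = null;
        for (int tries = 0; tries < maxTries; tries++) {
            int remaining = budgetMs - (int) ((System.nanoTime() - start) / 1_000_000);
            if (remaining <= 0) {
                throw new IOException("initLimit exceeded on retries.");
            }
            try {
                // Never wait longer than what is left of the overall budget
                attempt.connect(Math.min(perTryMs, remaining));
                return tries + 1;
            } catch (IOException e) {
                last = e;
            }
            if (tries + 1 < maxTries) {
                Thread.sleep(sleepMs); // back off before the next try
            }
        }
        throw new IOException("could not connect after " + maxTries + " tries", last);
    }
}
```

The overall budget matters more than the try count: a Leader that is slow to come up cannot make a Learner wait longer than initLimit allows.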

Step 4. The Leader creates a LearnerHandler for each Learner that connects

LearnerCnxAcceptor is also a thread. When the Leader starts, it creates and starts the LearnerCnxAcceptor, whose while loop keeps listening for connections initiated by Learners.

When a Learner sends a connection request, the LearnerCnxAcceptor thread accepts it on the ServerSocket and creates a LearnerHandler instance for it. Each LearnerHandler corresponds to one Leader-Learner connection and is responsible for nearly all message exchange and data synchronization between that Leader and Learner.

LearnerHandler is a thread as well; it uses blocking I/O (BIO) plus a while loop to handle communication, data synchronization and heartbeats with its Learner.

```java
public class Leader {
    private final ServerSocket ss; // BIO ServerSocket

    //1. Create the Leader server-side instance
    Leader(QuorumPeer self, LeaderZooKeeperServer zk) throws IOException {
        this.self = self;
        this.proposalStats = new BufferStats();
        if (self.shouldUsePortUnification() || self.isSslQuorum()) {
            boolean allowInsecureConnection = self.shouldUsePortUnification();
            if (self.getQuorumListenOnAllIPs()) {
                ss = new UnifiedServerSocket(self.getX509Util(), allowInsecureConnection, self.getQuorumAddress().getPort());
            } else {
                ss = new UnifiedServerSocket(self.getX509Util(), allowInsecureConnection);
            }
        } else {
            if (self.getQuorumListenOnAllIPs()) {
                ss = new ServerSocket(self.getQuorumAddress().getPort());
            } else {
                ss = new ServerSocket();
            }
        }
        ss.setReuseAddress(true);
        if (!self.getQuorumListenOnAllIPs()) {
            ss.bind(self.getQuorumAddress());
        }
        this.zk = zk;
        this.learnerSnapshotThrottler = createLearnerSnapshotThrottler(maxConcurrentSnapshots, maxConcurrentSnapshotTimeout);
    }
    ...
    void lead() throws IOException, InterruptedException {
        ...
        //2. On startup the Leader creates the Learner acceptor LearnerCnxAcceptor
        // Start thread that waits for connection requests from new followers.
        cnxAcceptor = new LearnerCnxAcceptor();
        cnxAcceptor.start();
        ...
    }

    class LearnerCnxAcceptor extends ZooKeeperCriticalThread {
        private volatile boolean stop = false;

        public LearnerCnxAcceptor() {
            super("LearnerCnxAcceptor-" + ss.getLocalSocketAddress(), zk.getZooKeeperServerListener());
        }

        @Override
        public void run() {
            while (!stop) {
                Socket s = null;
                boolean error = false;
                ...
                s = ss.accept();
                s.setSoTimeout(self.tickTime * self.initLimit);
                s.setTcpNoDelay(nodelay);
                BufferedInputStream is = new BufferedInputStream(s.getInputStream());
                //4. The Leader creates one LearnerHandler for each Learner client
                LearnerHandler fh = new LearnerHandler(s, is, Leader.this);
                fh.start();
                ...
            }
        }

        public void halt() {
            stop = true;
        }
    }
    ...
}

public class LearnerHandler extends ZooKeeperThread {
    final Leader leader;
    ...
    @Override
    public void run() {
        leader.addLearnerHandler(this);
        tickOfNextAckDeadline = leader.self.tick.get() + leader.self.initLimit + leader.self.syncLimit;
        ia = BinaryInputArchive.getArchive(bufferedInput);
        bufferedOutput = new BufferedOutputStream(sock.getOutputStream());
        oa = BinaryOutputArchive.getArchive(bufferedOutput);
        QuorumPacket qp = new QuorumPacket();
        ia.readRecord(qp, "packet");
        if (qp.getType() != Leader.FOLLOWERINFO && qp.getType() != Leader.OBSERVERINFO) {
            LOG.error("First packet " + qp.toString() + " is not FOLLOWERINFO or OBSERVERINFO!");
            return;
        }
        byte learnerInfoData[] = qp.getData();
        ...
    }
    ...
}
```
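The acceptor-plus-handler structure (one blocking accept() loop, one thread per accepted connection) can be reproduced with plain `java.net` sockets. This is a hypothetical sketch: `AcceptorSketch` binds port 0 (any free port) rather than the configured quorum port, and its `handle()` only counts connections and waits for the peer to close, standing in for the real LearnerHandler protocol.

```java
import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.atomic.AtomicInteger;

class AcceptorSketch {
    private final ServerSocket ss;
    private volatile boolean stop = false;
    // Number of per-connection handler threads started so far
    final AtomicInteger handlersStarted = new AtomicInteger();

    AcceptorSketch() throws IOException {
        ss = new ServerSocket(0); // port 0: the OS picks a free port
    }

    int port() { return ss.getLocalPort(); }

    // Acceptor thread: block in accept(), give each accepted socket its own handler thread
    void start() {
        Thread acceptor = new Thread(() -> {
            while (!stop) {
                try {
                    spawnHandler(ss.accept());
                } catch (IOException e) {
                    break; // socket closed by halt(), or a fatal error: stop accepting
                }
            }
        }, "acceptor-sketch");
        acceptor.setDaemon(true);
        acceptor.start();
    }

    private void spawnHandler(Socket s) {
        Thread handler = new Thread(() -> handle(s), "handler-sketch");
        handler.setDaemon(true);
        handler.start();
    }

    // Stand-in for a per-connection handler: count it, then block until the peer closes
    private void handle(Socket s) {
        handlersStarted.incrementAndGet();
        try (Socket sock = s) {
            sock.getInputStream().read();
        } catch (IOException ignored) {
            // connection dropped; nothing else to clean up in this sketch
        }
    }

    boolean awaitHandlers(int expected, long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        while (handlersStarted.get() < expected && System.currentTimeMillis() < deadline) {
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return handlersStarted.get() >= expected;
    }

    void halt() throws IOException {
        stop = true;
        ss.close(); // makes the blocked accept() throw, ending the acceptor loop
    }
}
```

Thread-per-connection is affordable here because an ensemble has only a handful of Learners, unlike client connections, which ZooKeeper handles through ServerCnxnFactory.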

Step 5. Once connected, the Learner registers with the Leader by sending LearnerInfo

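After the Learner has connected to the Leader through connectToLeader(), it registers with the Leader through registerWithLeader(), i.e. it sends its own basic information to the Leader. The LearnerInfo payload carries the server's SID (plus a protocol version and a config version), while the packet's ZXID field carries makeZxid(acceptedEpoch, 0), i.e. the Learner's accepted epoch. The Learner's latest logged ZXID is sent later, in the ackNewEpoch reply.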

```java
public class Follower extends Learner {
    final FollowerZooKeeperServer fzk;

    // Create the Follower client-side instance
    Follower(QuorumPeer self, FollowerZooKeeperServer zk) {
        this.self = self;
        this.zk = zk;
        this.fzk = zk;
    }

    // Start the Follower client-side instance, i.e. start the Learner client-side instance
    void followLeader() throws InterruptedException {
        ...
        // Find the Leader server from the result of the Leader election
        QuorumServer leaderServer = findLeader();
        try {
            //3. Establish the connection to the Leader server
            connectToLeader(leaderServer.addr, leaderServer.hostname);
            //5. Register with the Leader
            long newEpochZxid = registerWithLeader(Leader.FOLLOWERINFO);
            ...
            syncWithLeader(newEpochZxid);
            QuorumPacket qp = new QuorumPacket();
            while (this.isRunning()) {
                readPacket(qp);
                processPacket(qp);
            }
        } catch (Exception e) {
            LOG.warn("Exception when following the leader", e);
            try {
                sock.close();
            } catch (IOException e1) {
                e1.printStackTrace();
            }
            pendingRevalidations.clear();
        }
    }
    ...
}

public class Learner {
    ...
    // Once connected to the leader, perform the handshake protocol to establish a following / observing connection.
    protected long registerWithLeader(int pktType) throws IOException {
        long lastLoggedZxid = self.getLastLoggedZxid();
        QuorumPacket qp = new QuorumPacket();
        qp.setType(pktType);
        qp.setZxid(ZxidUtils.makeZxid(self.getAcceptedEpoch(), 0));
        // Add sid to payload
        //5. Send the Learner's own basic information (LearnerInfo) to the Leader
        LearnerInfo li = new LearnerInfo(self.getId(), 0x10000, self.getQuorumVerifier().getVersion());
        ByteArrayOutputStream bsid = new ByteArrayOutputStream();
        BinaryOutputArchive boa = BinaryOutputArchive.getArchive(bsid);
        boa.writeRecord(li, "LearnerInfo");
        qp.setData(bsid.toByteArray());
        writePacket(qp, true);
        ...
    }

    void writePacket(QuorumPacket pp, boolean flush) throws IOException {
        synchronized (leaderOs) {
            if (pp != null) {
                leaderOs.writeRecord(pp, "packet");
            }
            if (flush) {
                bufferedOutput.flush();
            }
        }
    }
    ...
}
```
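Why can the Leader read the SID with a bare `getLong()` on the first 8 bytes? A sketch of the byte layout makes it visible. It assumes the binary archive writes the three LearnerInfo fields in declaration order as big-endian primitives (8-byte long, 4-byte int, 8-byte long) with no record header; `LearnerInfoSketch` and its methods are hypothetical, and `-1` as the "too short" result is an assumption of this sketch (the real LearnerHandler falls back to a different id).

```java
import java.nio.ByteBuffer;

class LearnerInfoSketch {
    // Learner side: serverId (long), protocolVersion (int), configVersion (long), big-endian
    static byte[] encode(long serverId, int protocolVersion, long configVersion) {
        return ByteBuffer.allocate(8 + 4 + 8)
                .putLong(serverId)
                .putInt(protocolVersion)
                .putLong(configVersion)
                .array();
    }

    // Leader side: the SID is simply the first 8 bytes of the payload
    static long readSid(byte[] data) {
        return data.length >= 8 ? ByteBuffer.wrap(data).getLong() : -1L;
    }
}
```

For SID 3 and protocol version 0x10000, the payload is 20 bytes and reading back its first long yields 3.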

Step 6. The Leader parses LearnerInfo, works out the epoch and sends LeaderInfo to the Learner

Because the Leader's LearnerCnxAcceptor creates a LearnerHandler for every incoming Learner connection to handle messaging and data synchronization, when a Learner sends its registration through registerWithLeader(), the matching LearnerHandler thread on the Leader receives the LearnerInfo and parses out the Learner's SID and ZXID.

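It first calls ZxidUtils.getEpochFromZxid(), which shifts the Learner's ZXID right by 32 bits to extract the Learner's epoch. It then calls Leader's getEpochToPropose() to compare that epoch with the epoch the Leader intends to propose: if the Learner's epoch is greater than or equal to it, the Leader's epoch is set to the Learner's epoch + 1.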
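A worked example of the ZXID arithmetic: the high 32 bits hold the epoch and the low 32 bits a per-epoch counter, so epoch 5 with counter 7 is `0x0000000500000007`. The sketch reimplements the shifts the way ZxidUtils' makeZxid()/getEpochFromZxid()/getCounterFromZxid() do, plus the epoch-bump rule from getEpochToPropose(); the class name and `proposeEpoch()` are hypothetical.

```java
class ZxidSketch {
    // High 32 bits: epoch; low 32 bits: counter within that epoch
    static long makeZxid(long epoch, long counter) {
        return (epoch << 32L) | (counter & 0xffffffffL);
    }

    static long getEpochFromZxid(long zxid) {
        return zxid >> 32L;
    }

    static long getCounterFromZxid(long zxid) {
        return zxid & 0xffffffffL;
    }

    // Leader-side rule: move past any epoch a registering server has already accepted
    static long proposeEpoch(long currentProposal, long learnerAcceptedEpoch) {
        return learnerAcceptedEpoch >= currentProposal ? learnerAcceptedEpoch + 1 : currentProposal;
    }
}
```

Since the Leader's `epoch` starts at -1, the first registration with accepted epoch 4 moves the proposal to 5, and the LEADERINFO packet then carries `makeZxid(5, 0)`.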

Next, getEpochToPropose() adds the Learner's SID to connectingFollowers, a HashSet. By using connectingFollowers as a monitor and calling its wait() and notifyAll() methods, the Leader can make LearnerHandler threads wait and later wake them up.

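This goes on until a majority of servers (a set that must include the Leader itself) has registered, with the Leader's epoch updated along the way; at that point the Leader can settle the cluster's epoch. In short, Object's wait() and notifyAll() implement a majority gate: threads block while there is no majority, and are notified to continue once a majority is reached.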
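The majority gate can be sketched on its own. This hypothetical `QuorumBarrierSketch` follows the shape of getEpochToPropose()/waitForEpochAck(): a `HashSet` of arrived server ids used as the monitor, a simple-majority check that also requires the Leader's own id, `notifyAll()` on success and a bounded `wait()` otherwise. The simple-majority rule stands in for QuorumVerifier.containsQuorum(), which is an assumption about the configured verifier.

```java
import java.util.HashSet;
import java.util.Set;

class QuorumBarrierSketch {
    private final Set<Long> arrived = new HashSet<>();
    private final long selfId;       // the Leader's own server id
    private final int ensembleSize;  // number of voting members
    private boolean done = false;

    QuorumBarrierSketch(long selfId, int ensembleSize) {
        this.selfId = selfId;
        this.ensembleSize = ensembleSize;
    }

    // Called once per server (the Leader included). Blocks until a majority that
    // contains the Leader has arrived, or throws after timeoutMs.
    void arrive(long sid, long timeoutMs) throws InterruptedException {
        synchronized (arrived) {
            if (done) return;
            arrived.add(sid);
            if (arrived.contains(selfId) && arrived.size() > ensembleSize / 2) {
                done = true;
                arrived.notifyAll(); // release every thread waiting below
                return;
            }
            long now = System.currentTimeMillis();
            long end = now + timeoutMs;
            while (!done && now < end) {
                arrived.wait(end - now); // releases the monitor while waiting
                now = System.currentTimeMillis();
            }
            if (!done) throw new InterruptedException("Timeout while waiting for quorum");
        }
    }
}
```

The `while (!done ...)` loop around `wait()` guards against spurious wake-ups, and the timeout mirrors the initLimit * tickTime bound in the real code.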

Once the cluster epoch is settled, each of the Leader's LearnerHandlers sends its Learner a LeaderInfo message containing that epoch, and then waits in Leader's waitForEpochAck() for a majority of Learners to respond.

```java
public class LearnerHandler extends ZooKeeperThread {
    final Leader leader;
    // ZooKeeper server identifier of this learner
    protected long sid = 0;
    protected final Socket sock;
    private BinaryInputArchive ia;
    private BinaryOutputArchive oa;
    ...
    @Override
    public void run() {
        leader.addLearnerHandler(this);
        tickOfNextAckDeadline = leader.self.tick.get() + leader.self.initLimit + leader.self.syncLimit;
        // Bind ia and oa to the BIO Socket, so that after the Leader sends LeaderInfo
        // to the Learner through oa, it can read the Learner's ackNewEpoch reply through ia
        ia = BinaryInputArchive.getArchive(bufferedInput);
        bufferedOutput = new BufferedOutputStream(sock.getOutputStream());
        oa = BinaryOutputArchive.getArchive(bufferedOutput);
        QuorumPacket qp = new QuorumPacket();
        ia.readRecord(qp, "packet");
        byte learnerInfoData[] = qp.getData();
        ...
        // Parse the Learner's SID from LearnerInfo
        ByteBuffer bbsid = ByteBuffer.wrap(learnerInfoData);
        if (learnerInfoData.length >= 8) {
            this.sid = bbsid.getLong();
        }
        ...
        // Extract the Learner's epoch from its ZXID
        long lastAcceptedEpoch = ZxidUtils.getEpochFromZxid(qp.getZxid());
        long zxid = qp.getZxid();
        // Compare the Learner's epoch with the Leader's epoch:
        // if the Learner's epoch is >= the Leader's, set the Leader's epoch to the Learner's epoch + 1
        long newEpoch = leader.getEpochToPropose(this.getSid(), lastAcceptedEpoch);
        long newLeaderZxid = ZxidUtils.makeZxid(newEpoch, 0);
        ...
        // Send a LeaderInfo message containing this epoch to this handler's Learner
        QuorumPacket newEpochPacket = new QuorumPacket(Leader.LEADERINFO, newLeaderZxid, ver, null);
        oa.writeRecord(newEpochPacket, "packet");
        bufferedOutput.flush();
        // After sending LeaderInfo, wait for the Learner's reply:
        // read the ackNewEpoch response returned by the Learner
        QuorumPacket ackEpochPacket = new QuorumPacket();
        ia.readRecord(ackEpochPacket, "packet");
        ...
        // Wait for a majority of Learners to respond
        leader.waitForEpochAck(this.getSid(), ss);
        ...
    }
    ...
}

public class Leader {
    ...
    long epoch = -1;
    protected final Set<Long> connectingFollowers = new HashSet<Long>();

    public long getEpochToPropose(long sid, long lastAcceptedEpoch) throws InterruptedException, IOException {
        synchronized (connectingFollowers) {
            if (!waitingForNewEpoch) {
                return epoch;
            }
            // Compare the Learner's epoch with the Leader's epoch:
            // if the Learner's epoch is >= the Leader's, set the Leader's epoch to the Learner's epoch + 1
            if (lastAcceptedEpoch >= epoch) {
                epoch = lastAcceptedEpoch + 1;
            }
            if (isParticipant(sid)) {
                connectingFollowers.add(sid);
            }
            QuorumVerifier verifier = self.getQuorumVerifier();
            if (connectingFollowers.contains(self.getId()) && verifier.containsQuorum(connectingFollowers)) {
                waitingForNewEpoch = false;
                self.setAcceptedEpoch(epoch);
                connectingFollowers.notifyAll();
            } else {
                long start = Time.currentElapsedTime();
                long cur = start;
                long end = start + self.getInitLimit() * self.getTickTime();
                while (waitingForNewEpoch && cur < end) {
                    // connectingFollowers (a HashSet) is the monitor: wait() parks this LearnerHandler until notifyAll()
                    connectingFollowers.wait(end - cur);
                    cur = Time.currentElapsedTime();
                }
                if (waitingForNewEpoch) {
                    throw new InterruptedException("Timeout while waiting for epoch from quorum");
                }
            }
            return epoch;
        }
    }
    ...
    protected final Set<Long> electingFollowers = new HashSet<Long>();
    protected boolean electionFinished = false;

    public void waitForEpochAck(long id, StateSummary ss) throws IOException, InterruptedException {
        synchronized (electingFollowers) {
            if (electionFinished) {
                return;
            }
            ...
            if (isParticipant(id)) {
                electingFollowers.add(id);
            }
            QuorumVerifier verifier = self.getQuorumVerifier();
            if (electingFollowers.contains(self.getId()) && verifier.containsQuorum(electingFollowers)) {
                electionFinished = true;
                electingFollowers.notifyAll();
            } else {
                long start = Time.currentElapsedTime();
                long cur = start;
                long end = start + self.getInitLimit() * self.getTickTime();
                while (!electionFinished && cur < end) {
                    electingFollowers.wait(end - cur);
                    cur = Time.currentElapsedTime();
                }
                ...
            }
        }
    }
    ...
}
```

Step 7. On receiving LeaderInfo, the Learner replies with an ackNewEpoch message

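After sending LearnerInfo with writePacket(), the Learner calls readPacket() to receive the Leader's LeaderInfo reply. When the LeaderInfo message from the Leader's LearnerHandler arrives, the Learner extracts the epoch from its ZXID, checks it against its own accepted epoch, and replies to the Leader with an ackNewEpoch (ACKEPOCH) message.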

```java
public class Learner {
    ...
    protected Socket sock;
    protected InputArchive leaderIs;
    protected OutputArchive leaderOs;
    ...
    //3. Establish the connection to the Leader server
    protected void connectToLeader(InetSocketAddress addr, String hostname) throws IOException, InterruptedException {
        this.sock = createSocket();
        int initLimitTime = self.tickTime * self.initLimit;
        int remainingInitLimitTime = initLimitTime;
        long startNanoTime = nanoTime();
        for (int tries = 0; tries < 5; tries++) {
            try {
                remainingInitLimitTime = initLimitTime - (int) ((nanoTime() - startNanoTime) / 1000000);
                if (remainingInitLimitTime <= 0) {
                    LOG.error("initLimit exceeded on retries.");
                    throw new IOException("initLimit exceeded on retries.");
                }
                sockConnect(sock, addr, Math.min(self.tickTime * self.syncLimit, remainingInitLimitTime));
                if (self.isSslQuorum()) {
                    ((SSLSocket) sock).startHandshake();
                }
                sock.setTcpNoDelay(nodelay);
                break;
            } catch (IOException e) {
                ...
            }
            Thread.sleep(1000);
        }
        self.authLearner.authenticate(sock, hostname);
        // leaderIs and leaderOs below are built on the BIO Socket, so registerWithLeader()
        // can first write to leaderOs and then block waiting to read from leaderIs
        leaderIs = BinaryInputArchive.getArchive(new BufferedInputStream(sock.getInputStream()));
        bufferedOutput = new BufferedOutputStream(sock.getOutputStream());
        leaderOs = BinaryOutputArchive.getArchive(bufferedOutput);
    }

    protected long registerWithLeader(int pktType) throws IOException {
        long lastLoggedZxid = self.getLastLoggedZxid();
        QuorumPacket qp = new QuorumPacket();
        qp.setType(pktType);
        qp.setZxid(ZxidUtils.makeZxid(self.getAcceptedEpoch(), 0));
        // Add sid to payload
        //5. Send the Learner's own basic information (LearnerInfo) to the Leader
        LearnerInfo li = new LearnerInfo(self.getId(), 0x10000, self.getQuorumVerifier().getVersion());
        ByteArrayOutputStream bsid = new ByteArrayOutputStream();
        BinaryOutputArchive boa = BinaryOutputArchive.getArchive(bsid);
        boa.writeRecord(li, "LearnerInfo");
        qp.setData(bsid.toByteArray());
        writePacket(qp, true);
        //7. Receive the Leader's LeaderInfo message, which carries the cluster's current epoch
        readPacket(qp);
        final long newEpoch = ZxidUtils.getEpochFromZxid(qp.getZxid());
        if (qp.getType() == Leader.LEADERINFO) {
            leaderProtocolVersion = ByteBuffer.wrap(qp.getData()).getInt();
            byte epochBytes[] = new byte[4];
            final ByteBuffer wrappedEpochBytes = ByteBuffer.wrap(epochBytes);
            if (newEpoch > self.getAcceptedEpoch()) {
                wrappedEpochBytes.putInt((int) self.getCurrentEpoch());
                self.setAcceptedEpoch(newEpoch);
            } else if (newEpoch == self.getAcceptedEpoch()) {
                wrappedEpochBytes.putInt(-1);
            } else {
                throw new IOException("Leaders epoch, " + newEpoch + " is less than accepted epoch, " + self.getAcceptedEpoch());
            }
            //7. After receiving LeaderInfo, reply to the Leader with an ackNewEpoch message
            QuorumPacket ackNewEpoch = new QuorumPacket(Leader.ACKEPOCH, lastLoggedZxid, epochBytes, null);
            writePacket(ackNewEpoch, true);
            return ZxidUtils.makeZxid(newEpoch, 0);
        }
        ...
    }

    // write a packet to the leader
    // @param pp: the proposal packet to be sent to the leader
    void writePacket(QuorumPacket pp, boolean flush) throws IOException {
        synchronized (leaderOs) {
            if (pp != null) {
                leaderOs.writeRecord(pp, "packet");
            }
            if (flush) {
                bufferedOutput.flush();
            }
        }
    }

    // read a packet from the leader
    // @param pp: the packet to be instantiated
    void readPacket(QuorumPacket pp) throws IOException {
        synchronized (leaderIs) {
            leaderIs.readRecord(pp, "packet");
        }
        long traceMask = ZooTrace.SERVER_PACKET_TRACE_MASK;
        if (pp.getType() == Leader.PING) {
            traceMask = ZooTrace.SERVER_PING_TRACE_MASK;
        }
    }
    ...
}
```
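The Learner's three-way decision on a LEADERINFO epoch can be pulled out as a pure function, which makes the cases easy to see. This is a hypothetical sketch (`AckEpochSketch`, `Decision`, `onLeaderInfo()` and `ackPayload()` are not ZooKeeper names); it throws an unchecked `IllegalStateException` where registerWithLeader() throws an `IOException`, and the 4-byte payload mirrors `epochBytes` in the excerpt.

```java
import java.nio.ByteBuffer;

class AckEpochSketch {
    // Outcome of the check: the Learner's new accepted epoch and the int it reports in ACKEPOCH
    static final class Decision {
        final long newAcceptedEpoch;
        final int reportedEpoch;

        Decision(long newAcceptedEpoch, int reportedEpoch) {
            this.newAcceptedEpoch = newAcceptedEpoch;
            this.reportedEpoch = reportedEpoch;
        }
    }

    static Decision onLeaderInfo(long newEpoch, long acceptedEpoch, long currentEpoch) {
        if (newEpoch > acceptedEpoch) {
            // New epoch: accept it and report our current epoch
            return new Decision(newEpoch, (int) currentEpoch);
        } else if (newEpoch == acceptedEpoch) {
            // Epoch already accepted earlier: report -1
            return new Decision(acceptedEpoch, -1);
        }
        // A Leader proposing an older epoch is rejected
        throw new IllegalStateException(
                "Leaders epoch, " + newEpoch + " is less than accepted epoch, " + acceptedEpoch);
    }

    // The ACKEPOCH payload is the reported epoch as a 4-byte big-endian int
    static byte[] ackPayload(Decision d) {
        return ByteBuffer.allocate(4).putInt(d.reportedEpoch).array();
    }
}
```

Rejecting an older epoch is what stops a stale Leader (for example one elected before a partition healed) from taking over Learners that have already moved on.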

Step 8. After receiving ackNewEpoch from a majority of Learners, the Leader starts data synchronization

The Leader starts one LearnerHandler for every Learner connection it accepts. Each LearnerHandler thread first blocks in Leader's getEpochToPropose() until a majority of Learners have registered with the Leader by sending LearnerInfo.

Once a majority has registered, each LearnerHandler thread sends LeaderInfo to its Learner to confirm the epoch, then blocks in Leader's waitForEpochAck() until a majority of Learners respond.

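When the Leader has received ackNewEpoch from a majority of Learners, each LearnerHandler thread starts synchronizing data with its Learner, while the Learner performs its side of the synchronization in Learner's syncWithLeader() method.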

```java
public class LearnerHandler extends ZooKeeperThread {
    final Leader leader;
    // ZooKeeper server identifier of this learner
    protected long sid = 0;
    protected final Socket sock;
    private BinaryInputArchive ia;
    private BinaryOutputArchive oa;
    ...
    @Override
    public void run() {
        leader.addLearnerHandler(this);
        tickOfNextAckDeadline = leader.self.tick.get() + leader.self.initLimit + leader.self.syncLimit;
        // Bind ia and oa to the Socket, so that after the Leader sends LeaderInfo
        // to the Learner through oa, it can read the Learner's ackNewEpoch reply through ia
        ia = BinaryInputArchive.getArchive(bufferedInput);
        bufferedOutput = new BufferedOutputStream(sock.getOutputStream());
        oa = BinaryOutputArchive.getArchive(bufferedOutput);
        QuorumPacket qp = new QuorumPacket();
        ia.readRecord(qp, "packet");
        byte learnerInfoData[] = qp.getData();
        ...
        // Parse the Learner's SID from LearnerInfo
        ByteBuffer bbsid = ByteBuffer.wrap(learnerInfoData);
        if (learnerInfoData.length >= 8) {
            this.sid = bbsid.getLong();
        }
        ...
        // Extract the Learner's epoch from its ZXID
        long lastAcceptedEpoch = ZxidUtils.getEpochFromZxid(qp.getZxid());
        long zxid = qp.getZxid();
        // Compare the Learner's epoch with the Leader's epoch:
        // if the Learner's epoch is >= the Leader's, set the Leader's epoch to the Learner's epoch + 1
        long newEpoch = leader.getEpochToPropose(this.getSid(), lastAcceptedEpoch);
        long newLeaderZxid = ZxidUtils.makeZxid(newEpoch, 0);
        ...
        // Send a LeaderInfo message containing this epoch to this handler's Learner
        QuorumPacket newEpochPacket = new QuorumPacket(Leader.LEADERINFO, newLeaderZxid, ver, null);
        oa.writeRecord(newEpochPacket, "packet");
        bufferedOutput.flush();
        QuorumPacket ackEpochPacket = new QuorumPacket();
        // After sending LeaderInfo, wait for the Learner's reply:
        // read the ackNewEpoch response returned by the Learner
        ia.readRecord(ackEpochPacket, "packet");
        ...
        // Wait for a majority of Learners to respond
        leader.waitForEpochAck(this.getSid(), ss);
        ...
        // Below: data synchronization with the Learner
        peerLastZxid = ss.getLastZxid();
        boolean needSnap = syncFollower(peerLastZxid, leader.zk.getZKDatabase(), leader);
        if (needSnap) {
            long zxidToSend = leader.zk.getZKDatabase().getDataTreeLastProcessedZxid();
            oa.writeRecord(new QuorumPacket(Leader.SNAP, zxidToSend, null, null), "packet");
            bufferedOutput.flush();
            // Dump data to peer
            leader.zk.getZKDatabase().serializeSnapshot(oa);
            oa.writeString("BenWasHere", "signature");
            bufferedOutput.flush();
        }
        LOG.debug("Sending NEWLEADER message to " + sid);
        if (getVersion() < 0x10000) {
            QuorumPacket newLeaderQP = new QuorumPacket(Leader.NEWLEADER, newLeaderZxid, null, null);
            oa.writeRecord(newLeaderQP, "packet");
        } else {
            QuorumPacket newLeaderQP = new QuorumPacket(Leader.NEWLEADER, newLeaderZxid,
                    leader.self.getLastSeenQuorumVerifier().toString().getBytes(), null);
            queuedPackets.add(newLeaderQP);
        }
        bufferedOutput.flush();
        // Start thread that blast packets in the queue to learner
        startSendingPackets();
        // Have to wait for the first ACK, wait until the leader is ready, and only then we can start processing messages.
        qp = new QuorumPacket();
        ia.readRecord(qp, "packet");
        // Block until a majority of Learners have finished syncing; after that the QuorumPeer server instances can be started
        leader.waitForNewLeaderAck(getSid(), qp.getZxid());
        ...
        while (true) {
            // Heartbeat handling between the Leader and the Learner goes here
            ...
        }
    }
    ...
}

public class Leader {
    ...
    protected final Set<Long> electingFollowers = new HashSet<Long>();
    protected boolean electionFinished = false;

    public void waitForEpochAck(long id, StateSummary ss) throws IOException, InterruptedException {
        synchronized (electingFollowers) {
            if (electionFinished) {
                return;
            }
            ...
            if (isParticipant(id)) {
                electingFollowers.add(id);
            }
            QuorumVerifier verifier = self.getQuorumVerifier();
            if (electingFollowers.contains(self.getId()) && verifier.containsQuorum(electingFollowers)) {
                electionFinished = true;
                electingFollowers.notifyAll();
            } else {
                long start = Time.currentElapsedTime();
                long cur = start;
                long end = start + self.getInitLimit() * self.getTickTime();
                while (!electionFinished && cur < end) {
                    electingFollowers.wait(end - cur);
                    cur = Time.currentElapsedTime();
                }
                ...
            }
        }
    }
    ...
}
```

Step 9. Once a majority of Learners have finished syncing, the server instances bound to the Leader and the Learners are started

The Follower client side is bound to a FollowerZooKeeperServer instance, and the Leader server side is bound to a LeaderZooKeeperServer instance.

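In Leader's lead() method, the Leader first creates the LearnerCnxAcceptor to listen for Learner connection requests. lead() then blocks until a majority of Learners have registered with the Leader, then blocks until a majority have returned ackNewEpoch, then blocks until a majority have finished syncing, and finally calls startZkServer() to start the server instance bound to the Leader, i.e. LeaderZooKeeperServer's startup() method.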

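On the Learner side, syncWithLeader(), which performs the Learner's data synchronization, likewise starts the server instance bound to the Learner once syncing is complete, i.e. it calls startup() on the LearnerZooKeeperServer.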

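Starting the server instance bound to the Leader or a Learner mainly means running ZooKeeperServer's startup() method: create and start the session tracker, then set up the server's request processor chain.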

```java
public class QuorumPeer extends ZooKeeperThread implements QuorumStats.Provider {
    ...
    // The Follower client side is bound to a FollowerZooKeeperServer instance
    protected Follower makeFollower(FileTxnSnapLog logFactory) throws IOException {
        return new Follower(this, new FollowerZooKeeperServer(logFactory, this, this.zkDb));
    }

    // The Leader server side is bound to a LeaderZooKeeperServer instance
    protected Leader makeLeader(FileTxnSnapLog logFactory) throws IOException, X509Exception {
        return new Leader(this, new LeaderZooKeeperServer(logFactory, this, this.zkDb));
    }
    ...
}

public class Leader {
    final LeaderZooKeeperServer zk;
    ...
    void lead() throws IOException, InterruptedException {
        ...
        // Create LearnerCnxAcceptor to listen for Learner connection requests
        cnxAcceptor = new LearnerCnxAcceptor();
        cnxAcceptor.start();
        // Block until a majority of Learners have registered with the Leader
        long epoch = getEpochToPropose(self.getId(), self.getAcceptedEpoch());
        ...
        // Block until a majority of Learners have returned ackNewEpoch
        waitForEpochAck(self.getId(), leaderStateSummary);
        ...
        // Block until a majority of Learners have finished syncing
        waitForNewLeaderAck(self.getId(), zk.getZxid());
        ...
        // Start the LeaderZooKeeperServer instance bound to the Leader
        startZkServer();
        ...
    }

    private synchronized void startZkServer() {
        ...
        zk.startup();
        ...
    }
    ...
}

public class ZooKeeperServer implements SessionExpirer, ServerStats.Provider {
    ...
    public synchronized void startup() {
        startupWithServerState(State.RUNNING);
    }

    private void startupWithServerState(State state) {
        // Create and start the session tracker
        if (sessionTracker == null) {
            createSessionTracker();
        }
        startSessionTracker();
        // Set up the server's request processor chain
        setupRequestProcessors();
        registerJMX();
        // Start the thread that monitors JVM pauses
        startJvmPauseMonitor();
        setState(state);
        notifyAll();
    }
    ...
}
```
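The request processor chain set up by setupRequestProcessors() is a chain of responsibility: each processor does its own work and hands the request to the next one. The sketch below is a synchronous, hypothetical model (`ProcessorChainSketch`, `Processor` and `Stage` are not ZooKeeper types). Its three stages echo the standalone wiring PrepRequestProcessor -> SyncRequestProcessor -> FinalRequestProcessor, whereas the real processors run on their own threads with queues, and LeaderZooKeeperServer and the Learner servers override setupRequestProcessors() with longer chains.

```java
import java.util.List;

class ProcessorChainSketch {
    // Minimal stand-in for a request processor (hypothetical, synchronous)
    interface Processor {
        void processRequest(String request);
    }

    static final class Stage implements Processor {
        private final String name;
        private final Processor next; // null marks the last stage
        private final List<String> log;

        Stage(String name, Processor next, List<String> log) {
            this.name = name;
            this.next = next;
            this.log = log;
        }

        @Override
        public void processRequest(String request) {
            log.add(name + ":" + request); // this stage's own work
            if (next != null) {
                next.processRequest(request); // then pass the request down the chain
            }
        }
    }

    // Wired back to front (last stage first) so each stage can hold a reference to its successor
    static Processor build(List<String> log) {
        Processor fin = new Stage("final", null, log);
        Processor sync = new Stage("sync", fin, log);
        return new Stage("prep", sync, log);
    }
}
```

Sending "create /a" through the chain logs `prep:create /a`, `sync:create /a`, `final:create /a` in that order; swapping in a different chain per role is what lets the Leader, Followers and Observers share the same ZooKeeperServer startup path.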