Preface

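- I'm building a CI/CD platform for fun and was missing a web UI for K8s. I asked my teacher, who sent me this tool. There are quite a few K8s dashboard tools, so I decided to look into this one myself.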

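- This post mainly covers installing Kuboard. It assumes you already have a running K8s cluster.

- Because the steps touch multiple machines, I set up Ansible for convenience. It is not required.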

┌──[root@vms81.liruilongs.github.io]-[~]

└─$kubectl get nodes

NAME STATUS ROLES AGE VERSION

vms81.liruilongs.github.io Ready control-plane,master 36d v1.22.2

vms82.liruilongs.github.io Ready <none> 36d v1.22.2

vms83.liruilongs.github.io Ready <none> 36d v1.22.2

┌──[root@vms81.liruilongs.github.io]-[~]

└─$docker ps

Often we give up, thinking it was only a relationship; only at the very end do we realize it was a whole lifetime. (匪我思存, 《佳期如梦》)


I. Brief Introduction

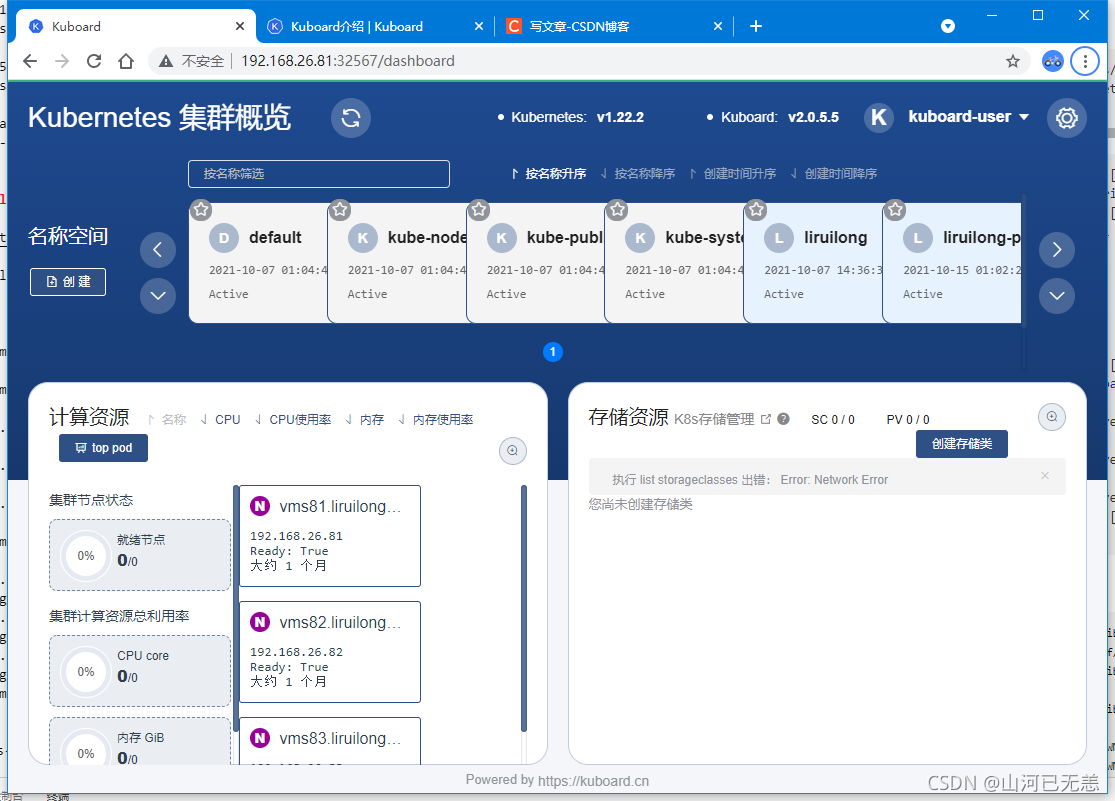

Kuboard is a free graphical management tool for Kubernetes. It aims to help users quickly get microservices running on Kubernetes.

Official site: http://press.demo.kuboard.cn/overview/share-coder.html

II. Installing Kuboard

1. Install metrics-server

To check resource usage, with Docker we can use docker stats. With K8s, we can use metrics-server instead.

The Docker way

┌──[root@vms81.liruilongs.github.io]-[~]

└─$docker stats

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

781c898eea19 k8s_kube-scheduler_kube-scheduler-vms81.liruilongs.github.io_kube-system_5bd71ffab3a1f1d18cb589aa74fe082b_18 0.15% 23.22MiB / 3.843GiB 0.59% 0B / 0B 0B / 0B 7

acac8b21bb57 k8s_kube-controller-manager_kube-controller-manager-vms81.liruilongs.github.io_kube-system_93d9ae7b5a4ccec4429381d493b5d475_18 1.18% 59.16MiB / 3.843GiB 1.50% 0B / 0B 0B / 0B 6

fe97754d3dab k8s_calico-node_calico-node-skzjp_kube-system_a211c8be-3ee1-44a0-a4ce-3573922b65b2_14 4.89% 94.25MiB / 3.843GiB 2.39% 0B / 0B 0B / 4.1kB 40

Download the related image

curl -Ls https://api.github.com/repos/kubernetes-sigs/metrics-server/tarball/v0.3.6 -o metrics-server-v0.3.6.tar.gz

docker pull mirrorgooglecontainers/metrics-server-amd64:v0.3.6

Either option works. Here the image has already been downloaded, so we import it directly, using Ansible to run the commands on all machines.

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m copy -a "src=./metrics-img.tar dest=/root/metrics-img.tar"

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m shell -a "systemctl restart docker "

192.168.26.82 | CHANGED | rc=0 >>

192.168.26.83 | CHANGED | rc=0 >>

192.168.26.81 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m shell -a "docker load -i /root/metrics-img.tar"

192.168.26.83 | CHANGED | rc=0 >>

Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6

192.168.26.81 | CHANGED | rc=0 >>

Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6

192.168.26.82 | CHANGED | rc=0 >>

Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6

Edit metrics-server-deployment.yaml and create the resources

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$mv kubernetes-sigs-metrics-server-d1f4f6f/ metrics

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cd metrics/

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]

└─$ls

cmd deploy hack OWNERS README.md version

code-of-conduct.md Gopkg.lock LICENSE OWNERS_ALIASES SECURITY_CONTACTS

CONTRIBUTING.md Gopkg.toml Makefile pkg vendor

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]

└─$cd deploy/1.8+/

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]

└─$ls

aggregated-metrics-reader.yaml metrics-apiservice.yaml resource-reader.yaml

auth-delegator.yaml metrics-server-deployment.yaml

auth-reader.yaml metrics-server-service.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]

└─$vim metrics-server-deployment.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]

└─$kubectl apply -f .

The edited container section of metrics-server-deployment.yaml:

    - name: metrics-server
      image: k8s.gcr.io/metrics-server-amd64:v0.3.6
      #imagePullPolicy: Always
      imagePullPolicy: IfNotPresent
      command:
      - /metrics-server
      - --metric-resolution=30s
      - --kubelet-insecure-tls
      - --kubelet-preferred-address-types=InternalIP
      volumeMounts:

Confirm that metrics-server was installed successfully in the kube-system namespace

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]

└─$kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-78d6f96c7b-79xx4 1/1 Running 2 3h15m

calico-node-ntm7v 1/1 Running 1 12h

calico-node-skzjp 1/1 Running 4 12h

calico-node-v7pj5 1/1 Running 1 12h

coredns-545d6fc579-9h2z4 1/1 Running 2 3h15m

coredns-545d6fc579-xgn8x 1/1 Running 2 3h16m

etcd-vms81.liruilongs.github.io 1/1 Running 1 13h

kube-apiserver-vms81.liruilongs.github.io 1/1 Running 2 13h

kube-controller-manager-vms81.liruilongs.github.io 1/1 Running 4 13h

kube-proxy-rbhgf 1/1 Running 1 13h

kube-proxy-vm2sf 1/1 Running 1 13h

kube-proxy-zzbh9 1/1 Running 1 13h

kube-scheduler-vms81.liruilongs.github.io 1/1 Running 5 13h

metrics-server-bcfb98c76-gttkh 1/1 Running 0 70m

Quick test

┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]

└─$kubectl top nodes

W1007 14:23:06.102605 102831 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

vms81.liruilongs.github.io 555m 27% 2025Mi 52%

vms82.liruilongs.github.io 204m 10% 595Mi 15%

vms83.liruilongs.github.io 214m 10% 553Mi 14%
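Right after the resources are created, `kubectl top nodes` may fail for a short while until metrics-server has collected its first samples. A minimal sketch of a retry loop, in case you want to script this check; `wait_for_metrics` is a hypothetical helper name, and the default retry count and delay are arbitrary assumptions:

```shell
# Sketch: poll `kubectl top nodes` until it succeeds or we run out of attempts.
# wait_for_metrics is a hypothetical helper, not part of kubectl or metrics-server.
wait_for_metrics() {
  local tries
  local delay
  local i
  tries="${1:-10}"   # assumed default: 10 attempts
  delay="${2:-15}"   # assumed default: 15 seconds between attempts
  i=1
  while [ "$i" -le "$tries" ]; do
    # Success means the metrics API answered; the output itself is discarded.
    if kubectl top nodes >/dev/null 2>&1; then
      echo "metrics available after ${i} attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "metrics still unavailable after ${tries} attempts" >&2
  return 1
}
```

Usage: `wait_for_metrics && kubectl top nodes`.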

2. Download the resource YAML file

wget https://kuboard.cn/install-script/kuboard.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$wget https://kuboard.cn/install-script/kuboard.yaml

--2021-11-12 20:00:01-- https://kuboard.cn/install-script/kuboard.yaml

Resolving kuboard.cn (kuboard.cn)... 122.112.240.69, 119.3.92.138

Connecting to kuboard.cn (kuboard.cn)|122.112.240.69|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2318 (2.3K) [application/octet-stream]

Saving to: ‘kuboard.yaml’

100%[============================================================>] 2,318 --.-K/s in 0s

2021-11-12 20:00:04 (58.5 MB/s) - ‘kuboard.yaml’ saved [2318/2318]

3. Pull the image on all nodes

docker pull eipwork/kuboard:latest

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m ping

192.168.26.81 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.83 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.26.82 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$ansible all -m shell -a "docker pull eipwork/kuboard:latest"

4. Edit kuboard.yaml and change the image pull policy to IfNotPresent

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat kuboard.yaml | grep imagePullPolicy

imagePullPolicy: Always

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat kuboard.yaml | grep Always

imagePullPolicy: Always

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$sed -i s#Always#IfNotPresent#g kuboard.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$cat kuboard.yaml | grep imagePullPolicy

imagePullPolicy: IfNotPresent
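The global replacement is fine for this file, because the grep output shows `Always` only on the imagePullPolicy line. If a manifest also contained another `Always` (for example a `restartPolicy: Always` line), a narrower pattern would be safer. A sketch, assuming GNU sed; `set_pull_policy` is a hypothetical helper name:

```shell
# Sketch: rewrite only the value of imagePullPolicy lines,
# leaving any other occurrence of "Always" untouched.
# set_pull_policy is a hypothetical helper; it assumes GNU sed (-i, -E).
set_pull_policy() {
  local file
  local policy
  file="$1"
  policy="${2:-IfNotPresent}"
  # Capture the indentation and key, then replace whatever value follows.
  sed -i -E "s#^([[:space:]]*imagePullPolicy:[[:space:]]*).*#\1${policy}#" "$file"
}
```

Usage: `set_pull_policy kuboard.yaml`, then re-run the grep to confirm the change.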

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

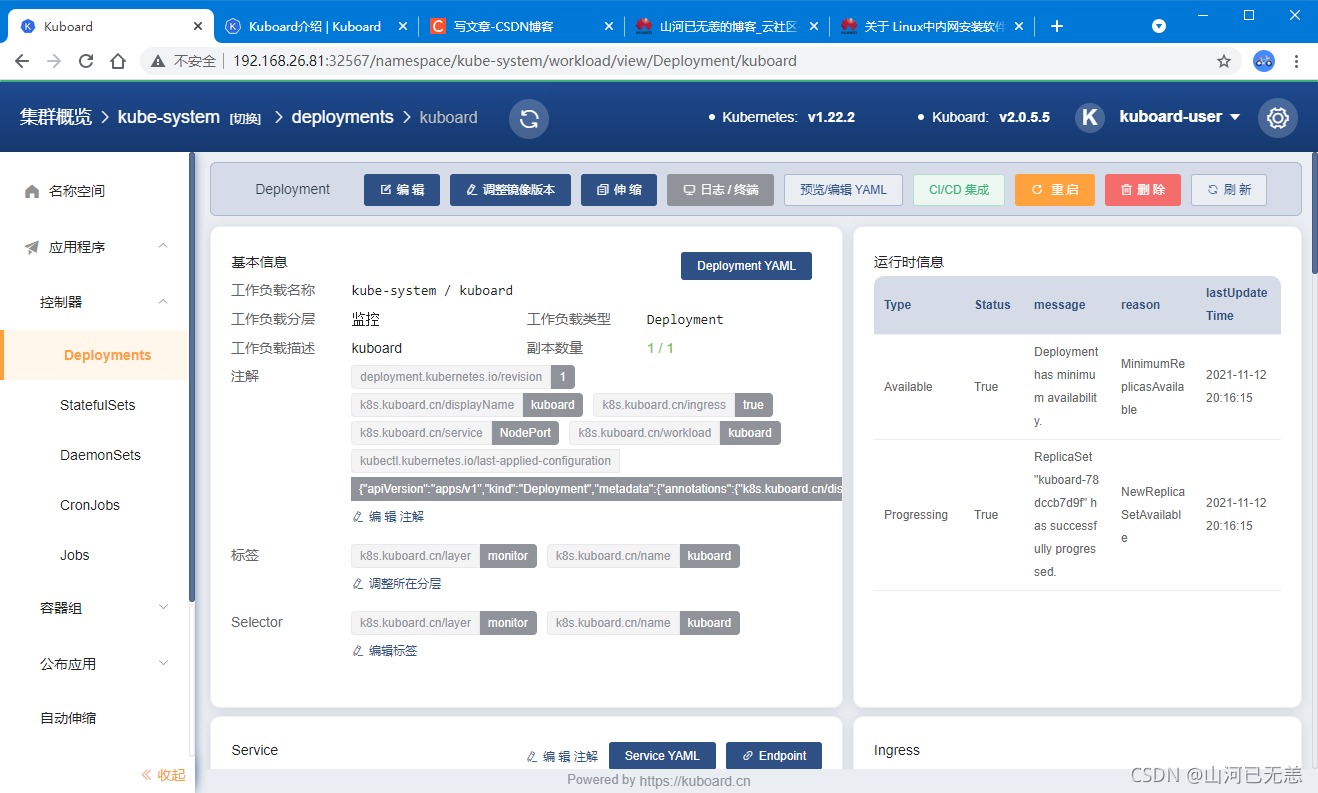

└─$5.创建资源 kubectl apply -f kuboard.yaml

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl apply -f kuboard.yaml

deployment.apps/kuboard created

service/kuboard created

serviceaccount/kuboard-user created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-user created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer created

6. Make sure Kuboard is running: kubectl get pods -n kube-system

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-78d6f96c7b-csdd6 1/1 Running 240 (4m56s ago) 17d

calico-node-ntm7v 1/1 Running 145 (8m22s ago) 36d

calico-node-skzjp 0/1 CrashLoopBackOff 753 (4m30s ago) 36d

calico-node-v7pj5 1/1 Running 169 (4m59s ago) 36d

coredns-7f6cbbb7b8-2msxl 1/1 Running 4 17d

coredns-7f6cbbb7b8-ktm2d 1/1 Running 5 (20h ago) 17d

etcd-vms81.liruilongs.github.io 1/1 Running 7 (7d11h ago) 24d

kube-apiserver-vms81.liruilongs.github.io 1/1 Running 15 (20h ago) 24d

kube-controller-manager-vms81.liruilongs.github.io 1/1 Running 56 (108m ago) 24d

kube-proxy-nzm24 1/1 Running 3 (11h ago) 23d

kube-proxy-p2zln 1/1 Running 3 (14d ago) 24d

kube-proxy-pqhqn 1/1 Running 7 (7d11h ago) 24d

kube-scheduler-vms81.liruilongs.github.io 1/1 Running 60 (108m ago) 24d

kuboard-78dccb7d9f-rsnrp 1/1 Running 0 49s

metrics-server-bcfb98c76-76pg5 1/1 Running 0 20h
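In this listing, calico-node-skzjp is in CrashLoopBackOff, which is easy to miss by eye. A small sketch that filters the output for pods that are not fully ready; `not_ready_pods` is a hypothetical helper name, and it assumes the default column layout (NAME, READY, STATUS, ...):

```shell
# Sketch: read `kubectl get pods` output on stdin and print the names of pods
# whose READY count is incomplete or whose STATUS is not "Running".
# not_ready_pods is a hypothetical helper. Note that pods from finished Jobs
# (STATUS "Completed") would also be listed by this simple check.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")                      # READY column, e.g. "0/1"
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}
```

Usage: `kubectl get pods -n kube-system | not_ready_pods`.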

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$kubectl config set

7. Get the token

echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

┌──[root@vms81.liruilongs.github.io]-[~/ansible]

└─$echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

eyJhbGciOiJSUzI1NiIsImtpZCI6IkZ1NHI1RkhSemVhN2s1OWthS1ZEQ0dueDRmS2RkMDdyR0FZYklkaWFnbmsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tYmY4bjgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzQ4YWYyNTQtZDI5NS00Yjc4LTg3ZWItNmE0ZDFkMjFkZmU4Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.Nzjerrlpw6XcBRkqXPQzDlSmMZrDf89yuVjXkL7vV1nhgWXX0iqZsqF8DPiy7Sjj-2JFYPD_zojgqV0sgOlKV_7Ou6p3F7K6lhu4VI9CGkM8OJxFdPIh-ETKVnIlb7l9s1jN4hvhBWck8geOIx4pnOawUU3jbOH7TQKz43bTnvUx_FACvnxG9gVU6KyQm6GVzs28SDs1YrqpMFWZgnJ_vCAe-KfUrqYChLecIHXM-vuB4JODxrwB4n3z2GtsJdigTIpd_FjeDs9Bl7v3CoWrozMa73rxPZyO58fo8D1bi1XTbJNeRjTjYnQc0-GvSoupQaNAfYloD1pwimmcFnIKxQ
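The one-liner packs two steps together: find the secret that belongs to the kuboard-user service account, then decode its base64-encoded token field. A sketch of the same lookup split into steps; `get_sa_token` is a hypothetical helper name, and it assumes the secret is named `<serviceaccount>-token-...` as in the output above:

```shell
# Sketch: print the decoded token of a service account's token secret.
# get_sa_token is a hypothetical helper, not a kubectl subcommand.
get_sa_token() {
  local ns
  local sa
  local secret
  ns="$1"
  sa="$2"
  # Step 1: find the first secret named "<sa>-token-..." (printed as secret/<name>).
  secret=$(kubectl -n "$ns" get secret -o name | grep "^secret/${sa}-token" | head -n 1)
  if [ -z "$secret" ]; then
    echo "no token secret found for ${sa}" >&2
    return 1
  fi
  # Step 2: .data.token is base64-encoded; decode it to get the login token.
  kubectl -n "$ns" get "$secret" -o go-template='{{.data.token}}' | base64 -d
  echo
}
```

Usage: `get_sa_token kube-system kuboard-user`.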

8. Log in at http://192.168.26.81:32567

Log in with the token obtained by the command above.

If the page stays stuck, refresh it.