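I. Deploying the Kube-Prometheus Stack

1. Ways to deploy Prometheus

- Package installation:

Install directly with the system package manager, such as "yum" or "apt". This means you have to configure the corresponding software repository.

- Binary installation:

Download the release from the official website and install it, which requires writing a startup script. I have already written a one-click deployment script, and in class everyone rewrote it as a one-click Ansible deployment.

- Container installation:

Requires a Docker environment; the Prometheus server runs directly from its Docker image. I demonstrated this in class, so refer to my class notes.

- Helm installation:

Deploy Prometheus from an existing Chart. This requires a K8S environment, because Helm manages the resource manifests in the K8S cluster under the hood.

- Prometheus Operator:

Use the Operator to deploy the Prometheus server as a stateful service.

- Kube-Prometheus Stack:

This project bundles a highly available deployment of the Prometheus toolchain: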

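- Prometheus Operator

- Highly available Prometheus

- Highly available Alertmanager

- Node Exporter for host monitoring

- Prometheus Adapter (custom metrics)

- kube-state-metrics for the state of Kubernetes objects (Deployments, Pods, and so on)

- Grafana for visualization

If you want to save effort, this is indeed the option to deploy with.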

2. Deploying with Kube-Prometheus Stack

GitHub repository:

https://github.com/prometheus-operator/kube-prometheus

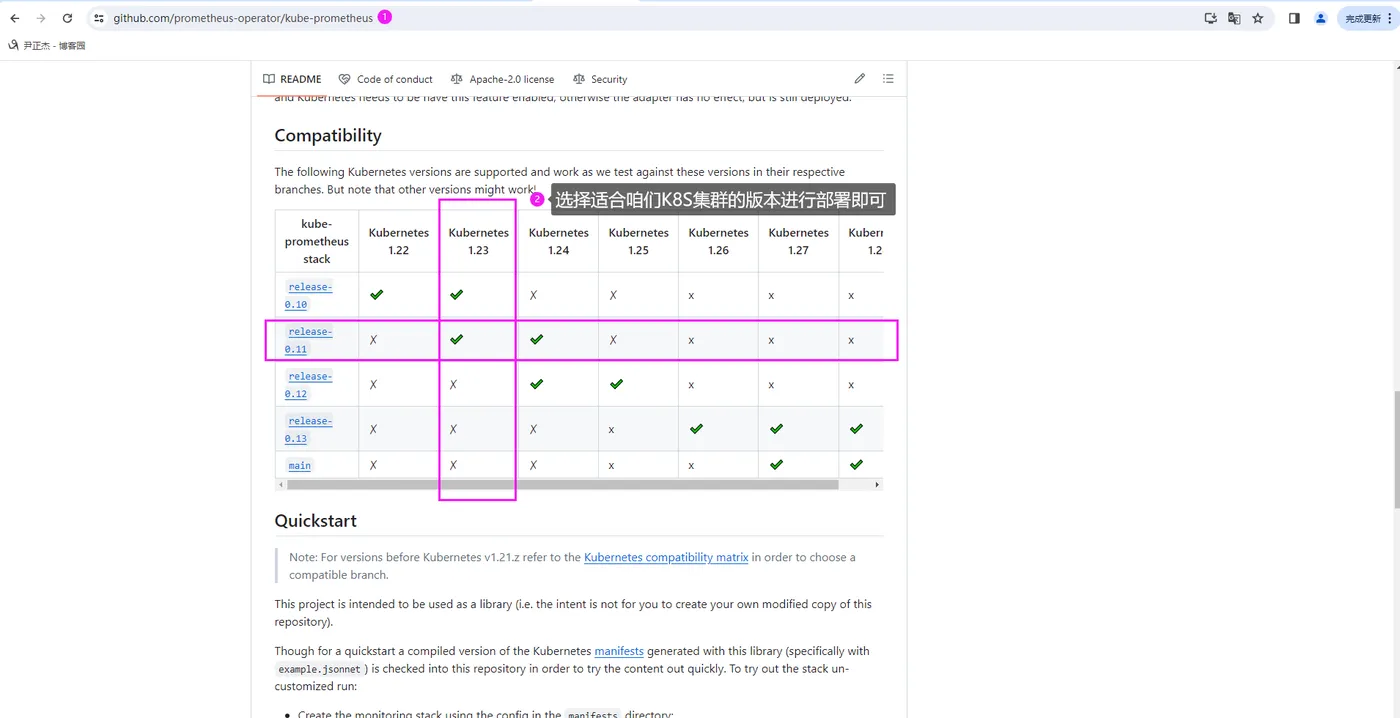

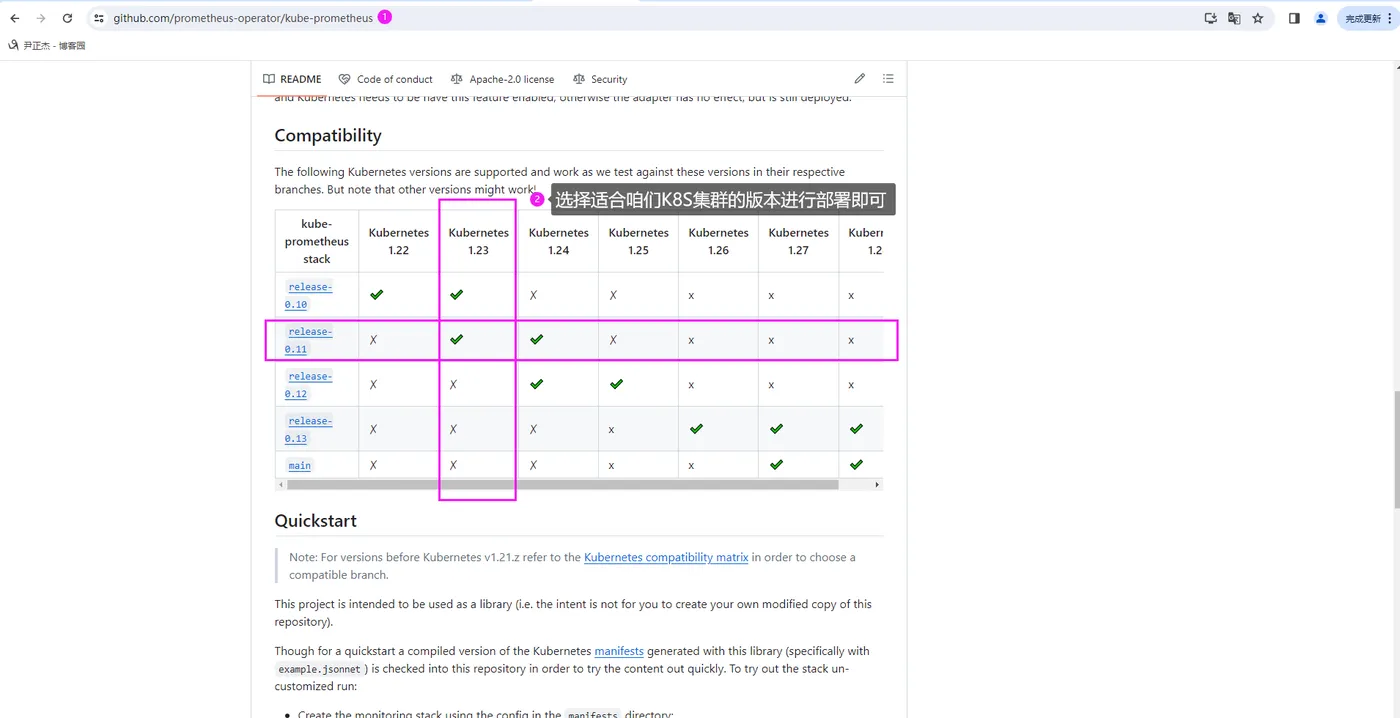

As shown in the figures above, my K8S cluster runs version 1.23.17, so I chose the compatible "0.11" release.

Hands-on example:

1. Download the kube-prometheus source code

[root@master231 yinzhengjie]# yum -y install git

[root@master231 yinzhengjie]# git clone -b release-0.11 https://github.com/prometheus-operator/kube-prometheus.git

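Tip:

If git clone fails, download the archive in a browser and upload it to the server, or fetch it with wget:

[root@master231 yinzhengjie]# wget -O kube-prometheus-0.11.0.tar.gz https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.11.0.tar.gz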

[root@master231 yinzhengjie]# tar xf kube-prometheus-0.11.0.tar.gz

2. Modify the kube-prometheus resource manifests

[root@master231 yinzhengjie]# cd kube-prometheus-0.11.0/

[root@master231 kube-prometheus-0.11.0]#

[root@master231 kube-prometheus-0.11.0]# sed -i 's#k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.5.0#registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/kube-state-metrics:2.5.0#' manifests/kubeStateMetrics-deployment.yaml

[root@master231 kube-prometheus-0.11.0]# sed -i 's#k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.9.1#registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/prometheus-adapter:v0.9.1#' manifests/prometheusAdapter-deployment.yaml
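To confirm the two sed commands left no k8s.gcr.io image behind, a small helper can list whatever remains. This is a sketch written for these notes, not part of kube-prometheus; it assumes GNU grep and that each image is written as `image: <ref>` on a single line, which is how the bundled manifests are laid out.

```shell
# List every image reference under a manifests directory that still points at k8s.gcr.io.
# An empty result means the sed replacements covered everything.
list_gcr_images() {
  local dir="${1:-manifests}"
  # -r: recurse, -h: no file names, -o: print only the match, -E: extended regex
  grep -rhoE 'image:[[:space:]]*k8s\.gcr\.io/[^[:space:]]+' "$dir" \
    | sed -E 's/^image:[[:space:]]*//' \
    | sort -u
}
```

Running `list_gcr_images manifests` from the kube-prometheus-0.11.0 directory should print nothing once every image points at a reachable registry.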

[root@master231 kube-prometheus-0.11.0]# cat manifests/grafana-service.yaml  # Change the grafana Service type to NodePort (an Ingress also works).

...
spec:
  type: NodePort
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30080
...

[root@master231 kube-prometheus-0.11.0]#

[root@master231 kube-prometheus-0.11.0]# cat manifests/prometheus-service.yaml

...
spec:
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30090
...

[root@master231 kube-prometheus-0.11.0]#

[root@master231 yinzhengjie]# cat kube-prometheus-0.11.0/manifests/alertmanager-service.yaml

...
spec:
  type: NodePort
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30070
...

[root@master231 yinzhengjie]#

3. Install the Prometheus Operator and the rest of the stack (the CRDs in manifests/setup first)

[root@master231 kube-prometheus-0.11.0]# kubectl apply --server-side -f manifests/setup

[root@master231 kube-prometheus-0.11.0]#

[root@master231 kube-prometheus-0.11.0]# kubectl apply -f manifests/

[root@master231 kube-prometheus-0.11.0]#
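The second `kubectl apply` only works once the CRDs from manifests/setup have been registered; if it fails with errors such as `no matches for kind "ServiceMonitor"`, wait a moment and run it again. The kube-prometheus README handles this with an `until kubectl get servicemonitors --all-namespaces; do ...; done` loop. The helper below is a hypothetical generalization of that loop with an attempt limit.

```shell
# Retry a command until it succeeds or the attempt limit is reached.
# Usage: retry_until MAX_ATTEMPTS command [args...]
# RETRY_SLEEP (seconds between attempts) defaults to 1.
retry_until() {
  local max="$1" i=1
  shift
  while [ "$i" -le "$max" ]; do
    if "$@"; then
      return 0
    fi
    sleep "${RETRY_SLEEP:-1}"
    i=$((i + 1))
  done
  return 1
}
```

Between the two applies: `retry_until 60 kubectl get servicemonitors --all-namespaces`.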

4. Check the status of the Pods

[root@master231 kube-prometheus-0.11.0]# kubectl get pods -o wide -n monitoring

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-main-0 2/2 Running 0 99s 10.100.1.55 worker232 <none> <none>

alertmanager-main-1 2/2 Running 0 99s 10.100.2.26 worker233 <none> <none>

alertmanager-main-2 2/2 Running 0 99s 10.100.1.54 worker232 <none> <none>

blackbox-exporter-746c64fd88-7z6xk 3/3 Running 0 112s 10.100.1.49 worker232 <none> <none>

grafana-5fc7f9f55d-wwmjt 1/1 Running 0 110s 10.100.1.50 worker232 <none> <none>

kube-state-metrics-5c5cfbf465-4wj47 3/3 Running 0 109s 10.100.1.51 worker232 <none> <none>

node-exporter-s5lgg 2/2 Running 0 109s 10.0.0.231 master231 <none> <none>

node-exporter-trpk2 2/2 Running 0 109s 10.0.0.232 worker232 <none> <none>

node-exporter-zl9f2 2/2 Running 0 109s 10.0.0.233 worker233 <none> <none>

prometheus-adapter-5b479bc754-c9j6d 1/1 Running 0 107s 10.100.2.25 worker233 <none> <none>

prometheus-adapter-5b479bc754-gbp8b 1/1 Running 0 107s 10.100.1.52 worker232 <none> <none>

prometheus-k8s-0 2/2 Running 0 94s 10.100.1.56 worker232 <none> <none>

prometheus-k8s-1 2/2 Running 0 94s 10.100.2.27 worker233 <none> <none>

prometheus-operator-f59c8b954-b9467 2/2 Running 0 107s 10.100.1.53 worker232 <none> <none>

[root@master231 kube-prometheus-0.11.0]#
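Rather than eyeballing the table, the READY and STATUS columns can be checked mechanically. kubectl has a built-in option for waiting (`kubectl wait --for=condition=Ready pods --all -n monitoring --timeout=300s`); the awk sketch below is an alternative that also works on saved output. It assumes the default column layout shown above and treats anything other than `Running` with n/n ready containers as not ready.

```shell
# Read `kubectl get pods` output on stdin and succeed only if every Pod
# is Running with all containers ready (READY shows n/n).
all_pods_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) {
           print "not ready: " $1
           bad = 1
         }
       }
       END { exit bad }'
}
```

Usage: `kubectl get pods -n monitoring | all_pods_ready`.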

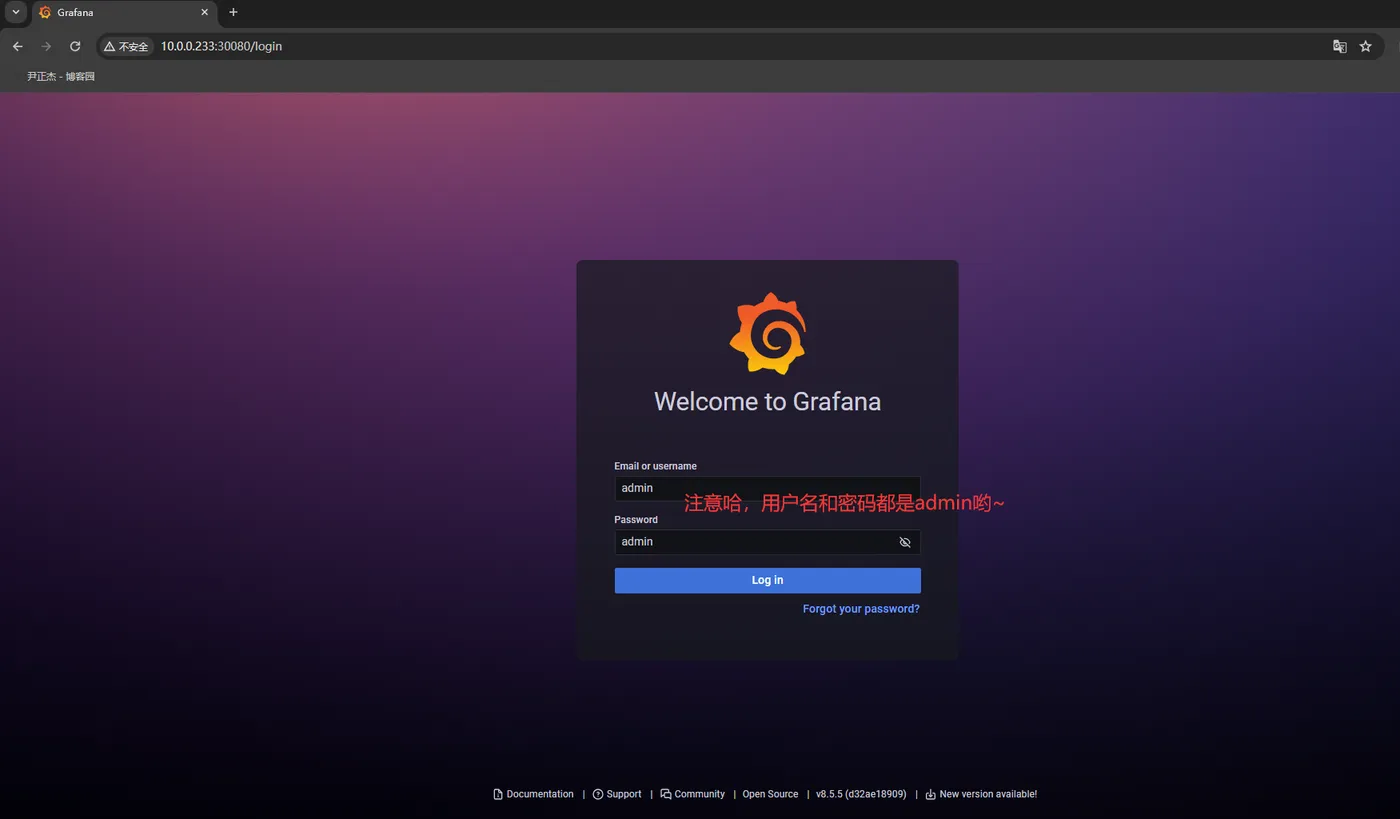

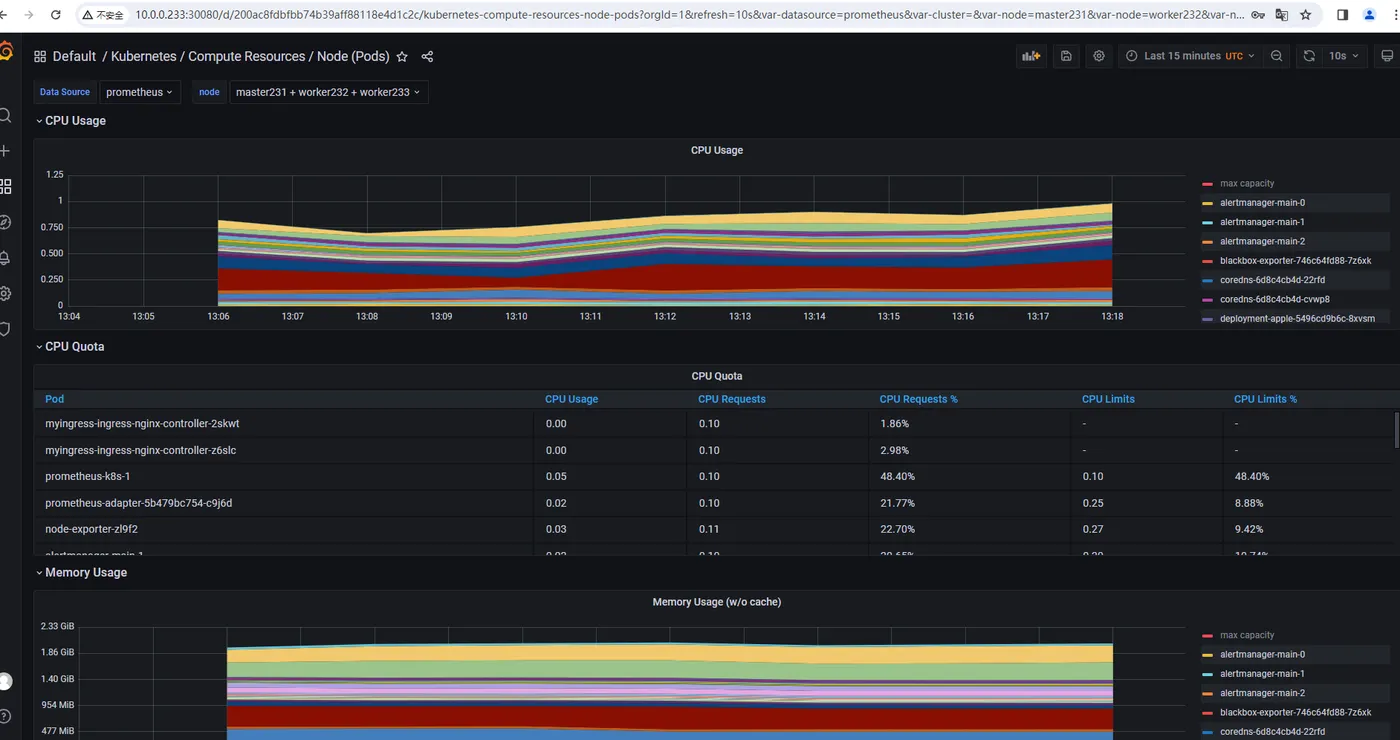

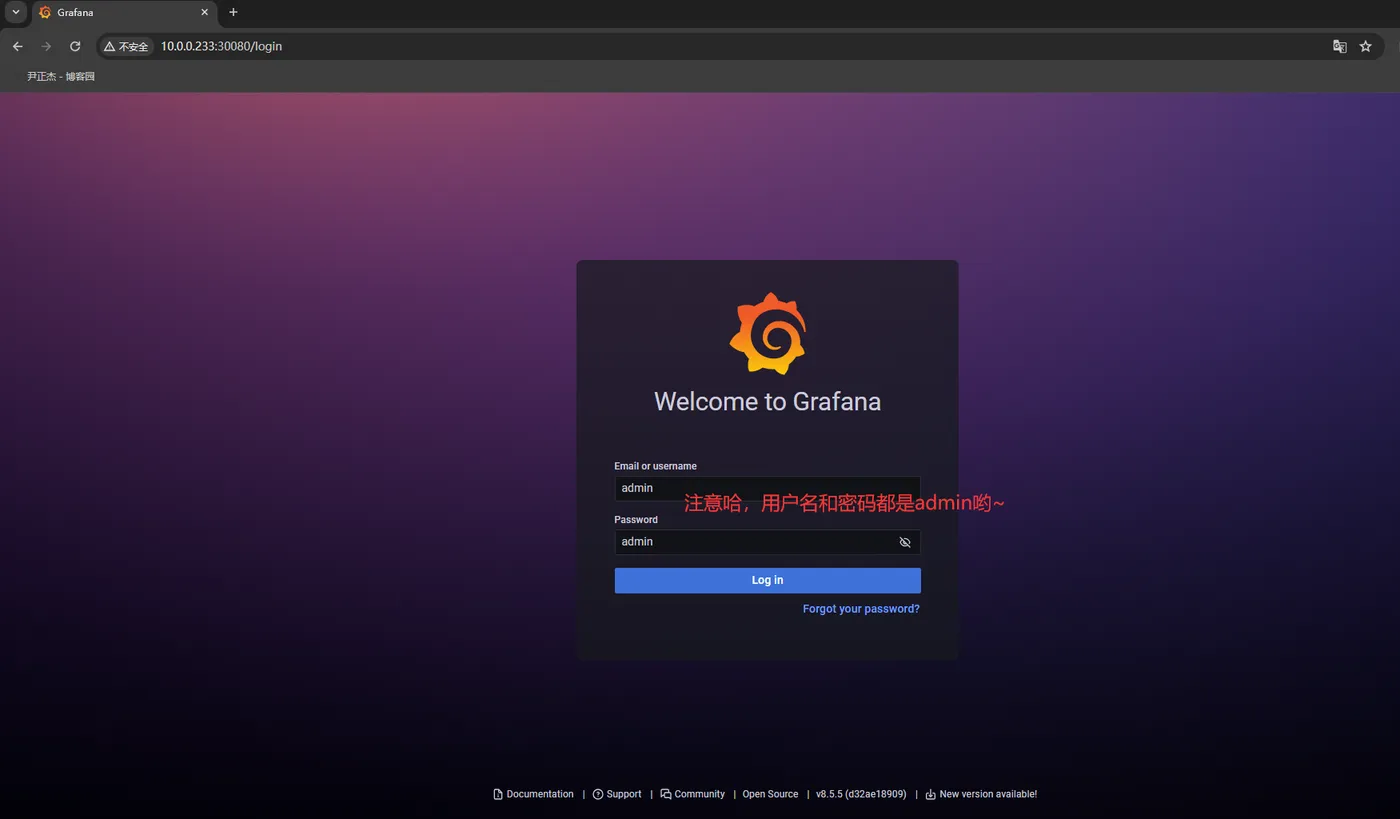

5. As shown in Figure 1 below, open the Grafana WebUI

http://10.0.0.233:30080/login

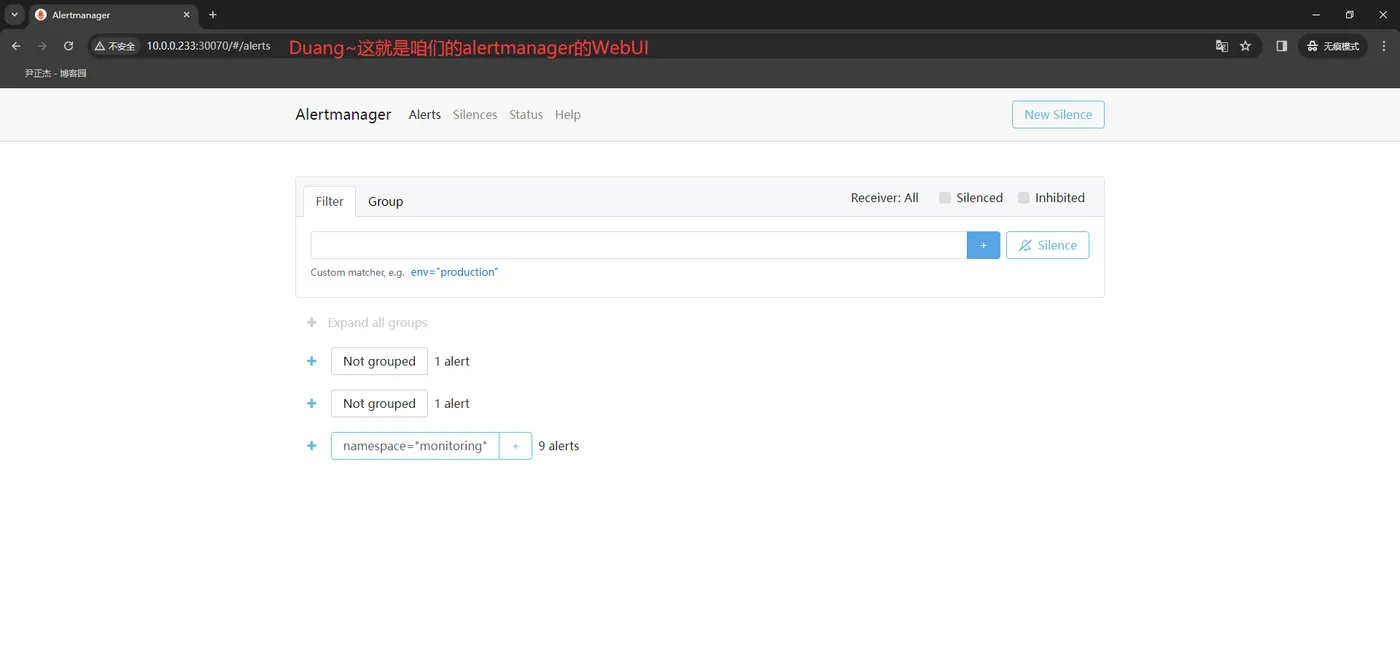

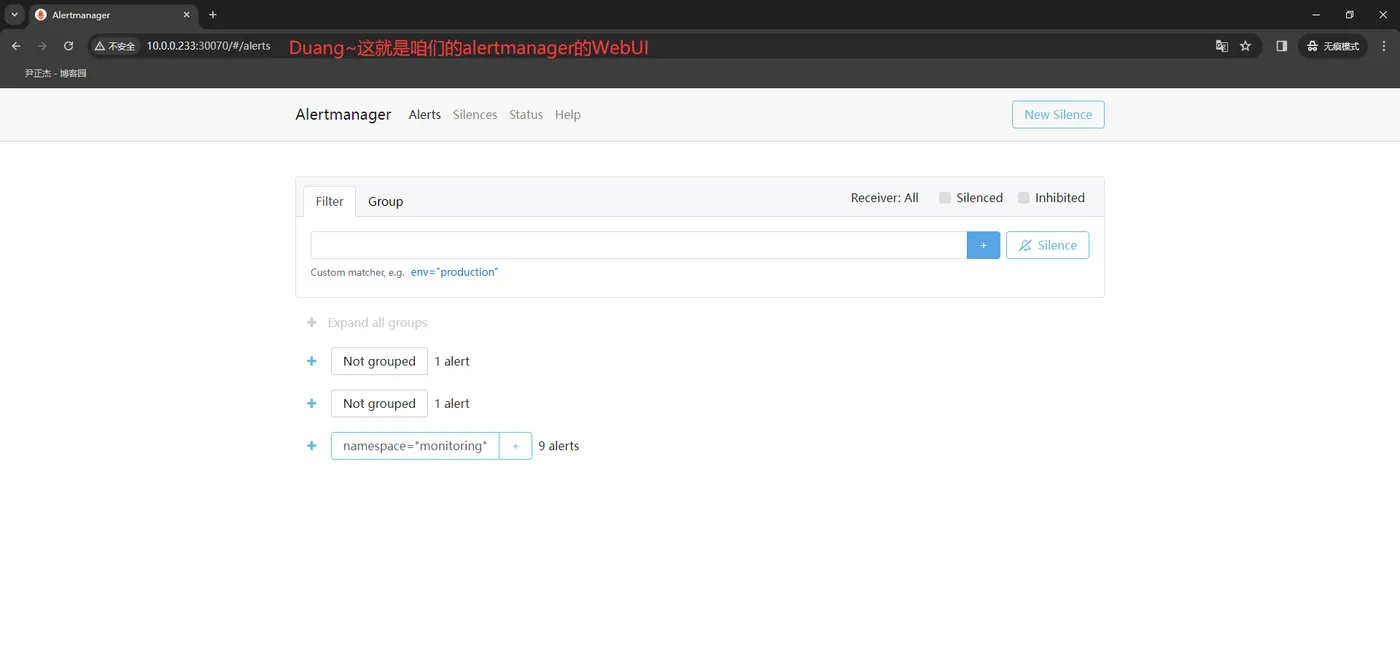

6. As shown in Figure 2 below, open the Alertmanager WebUI

http://10.0.0.233:30070/#/alerts

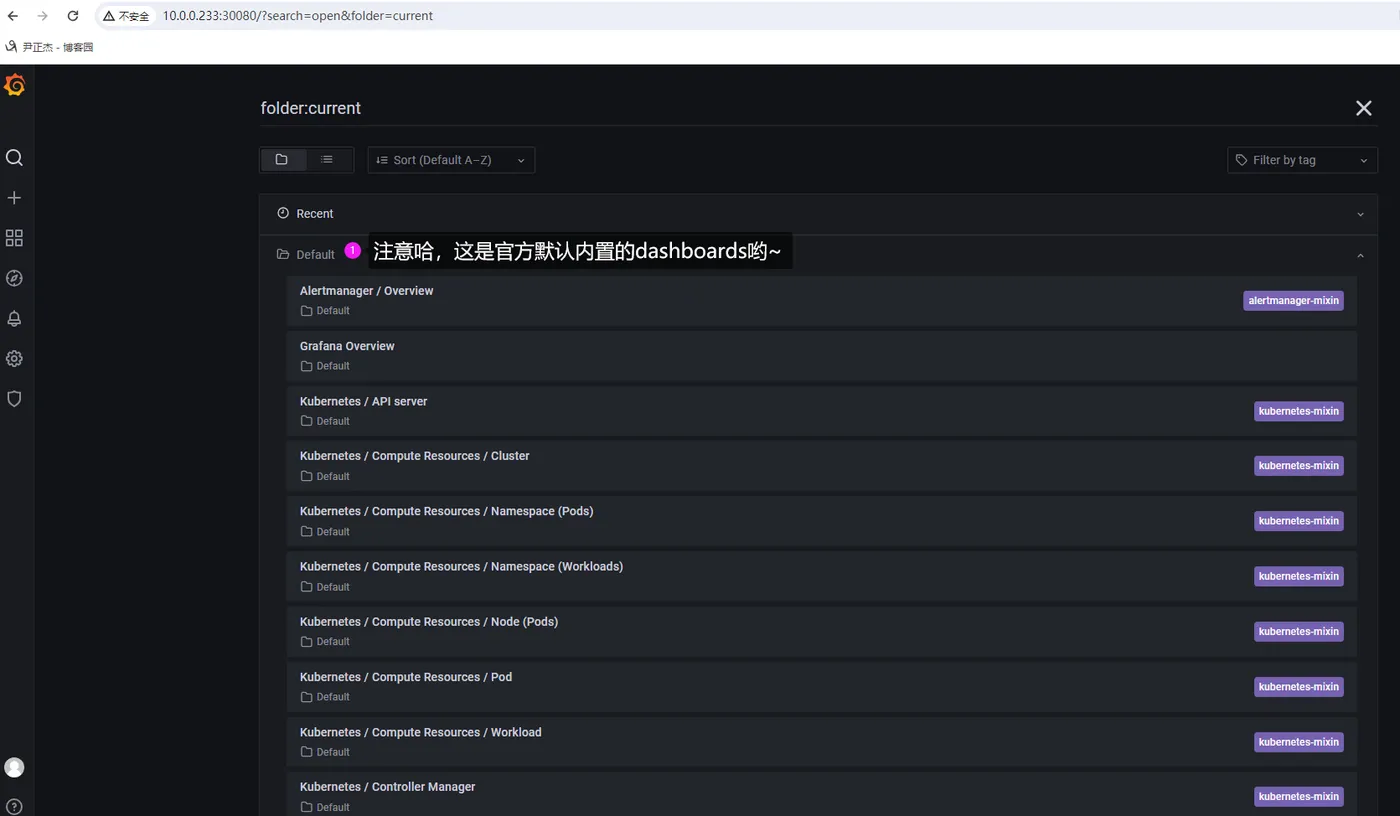

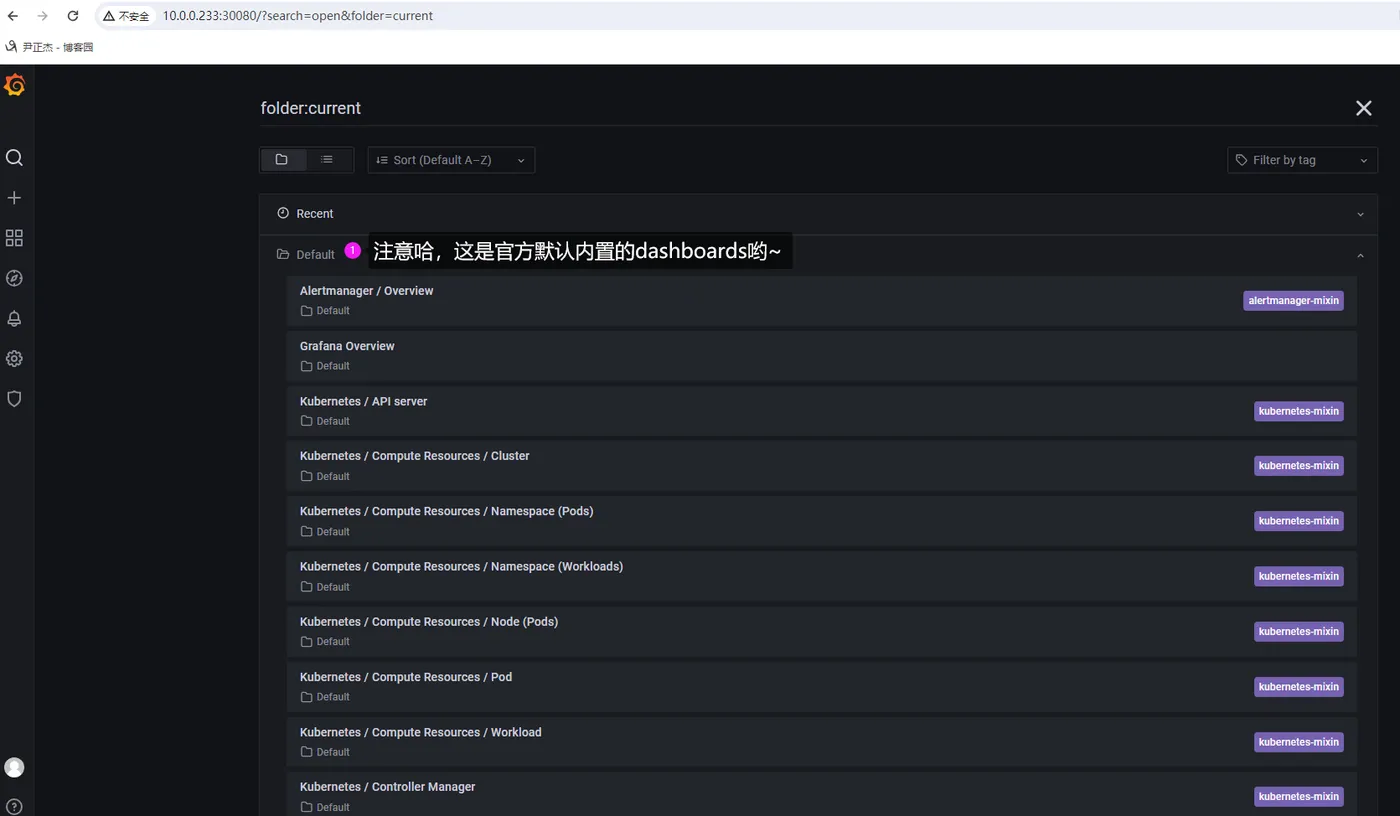
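Besides opening the pages in a browser, each component exposes a health endpoint that can be probed from the command line: Grafana's `/api/health`, and `/-/ready` on Prometheus and Alertmanager. The function below is a sketch that assumes the NodePorts 30080, 30090 and 30070 configured earlier and that `curl` is installed.

```shell
# Probe the health endpoints behind the three NodePorts configured earlier.
# NODE_IP can be the address of any cluster node.
probe_uis() {
  local node_ip="$1" rc=0 entry name path
  for entry in grafana:30080/api/health prometheus:30090/-/ready alertmanager:30070/-/ready; do
    name="${entry%%:*}"   # text before the first colon
    path="${entry#*:}"    # port and path after it
    if curl -fsS --max-time 5 -o /dev/null "http://${node_ip}:${path}"; then
      echo "${name} OK"
    else
      echo "${name} FAILED"
      rc=1
    fi
  done
  return "$rc"
}
```

`probe_uis 10.0.0.233` prints one OK/FAILED line per component and returns non-zero if any probe fails.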

3.查看grafana监控的K8S集群状态

如上图所示,官方为我们提供了很多的模板可以直接使用。

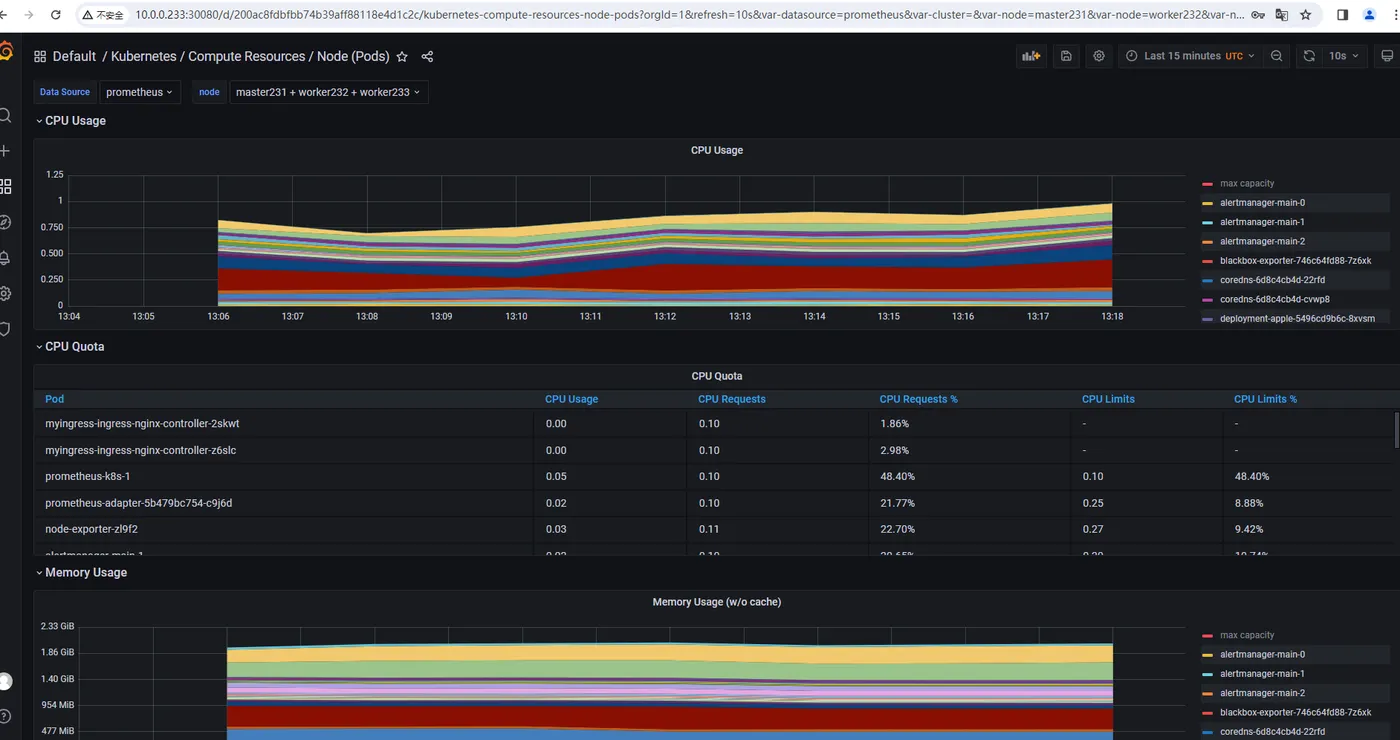

如下图所示,我们可以任意打开一个模板,观察其有相应的模板哟。

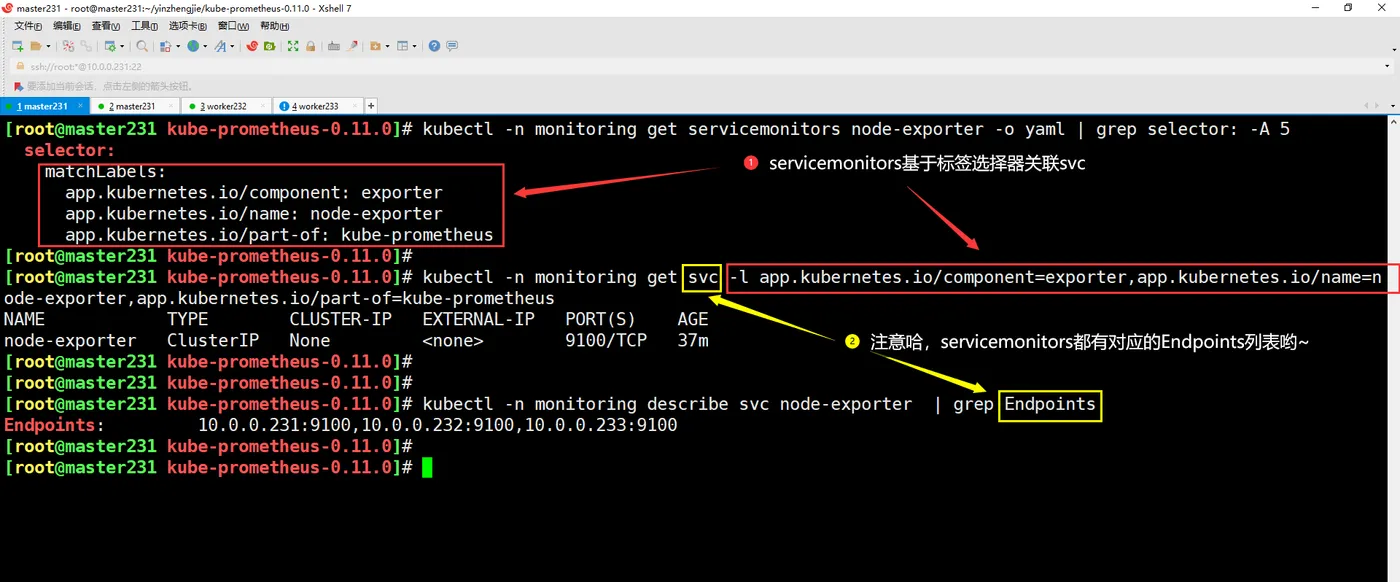

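II. ServiceMonitor explained

1. What is a ServiceMonitor

Traditional deployments define monitoring targets in the "prometheus.yml" configuration file, but as the number of targets grows the file becomes bloated and hard to read.

Various service discovery mechanisms were therefore introduced to cut down the configuration in "prometheus.yml", for example "file_sd_config, consul_sd_config, kubernetes_sd_config, ...".

The "Kube-Prometheus Stack" takes a different approach: it configures targets with a custom resource named "ServiceMonitor".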

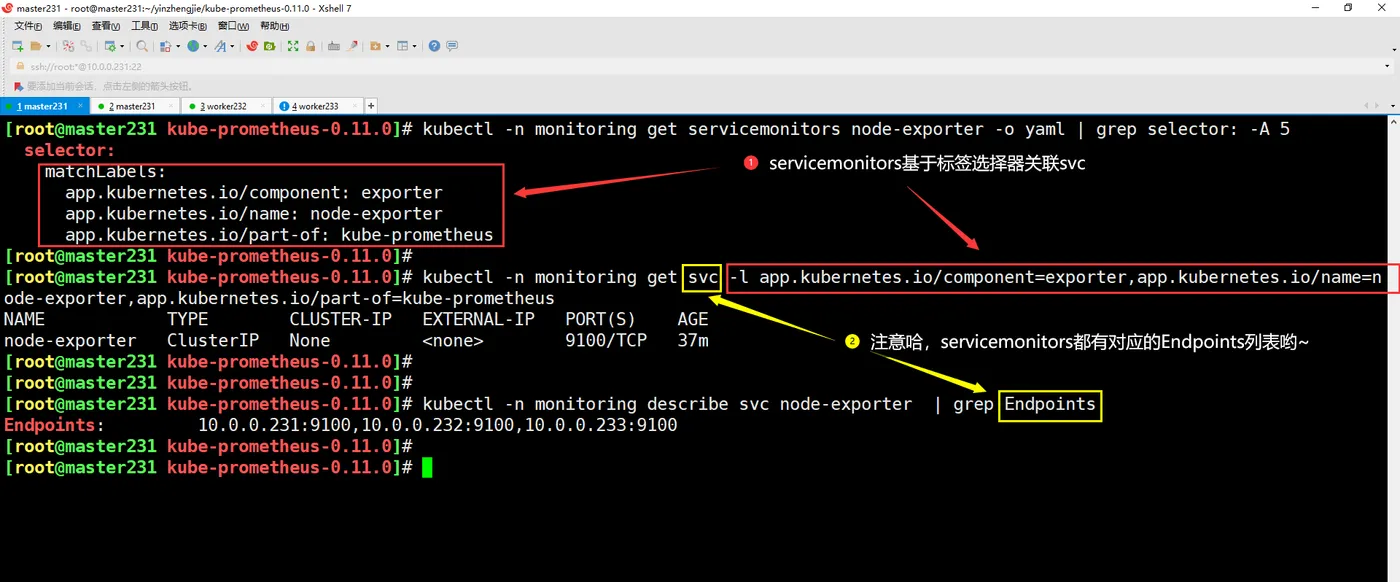

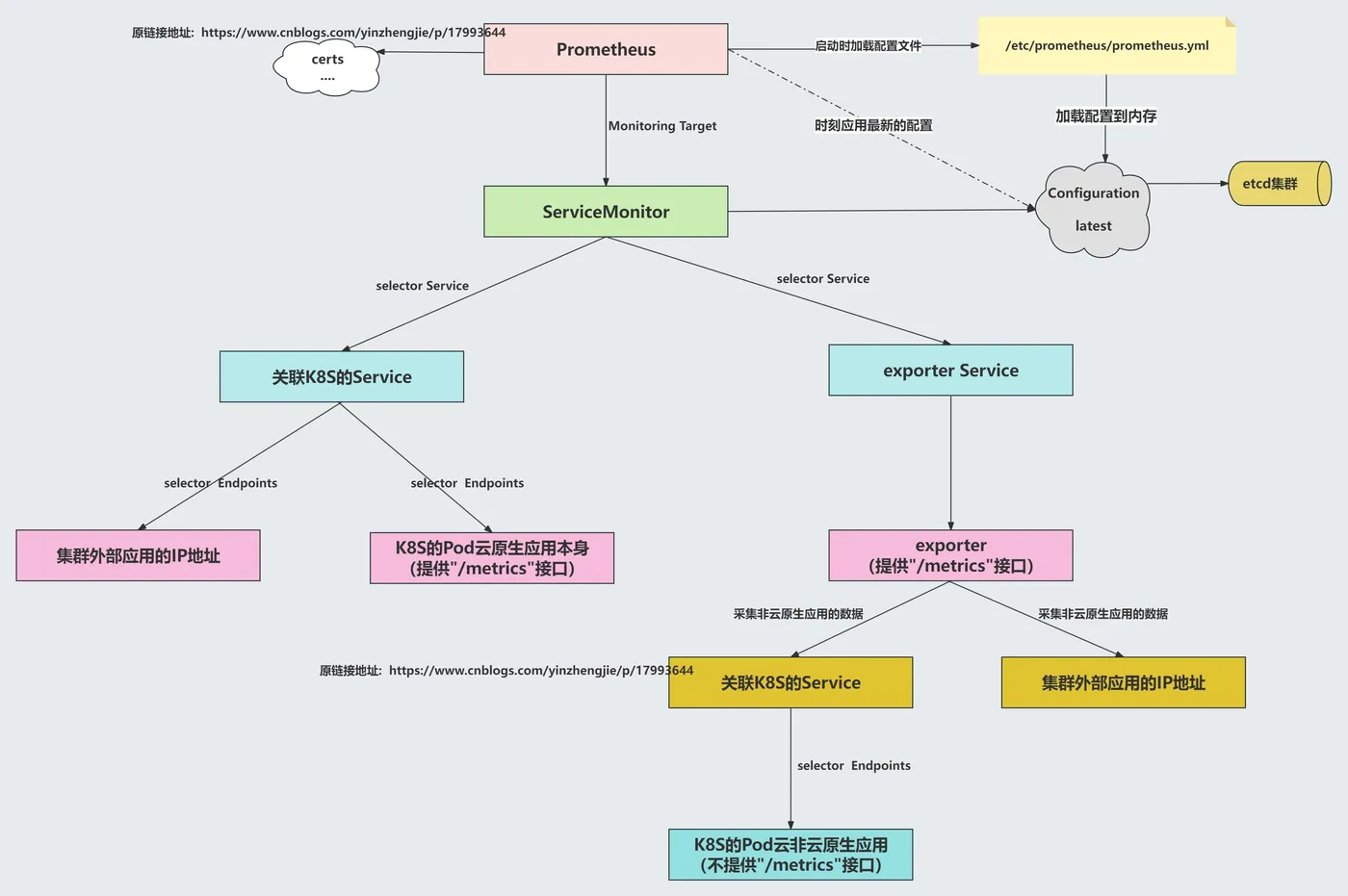

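As shown in the figure above, a ServiceMonitor selects Services by label, each Service's Endpoints list automatically tracks the backend Pods, and the Prometheus Operator then generates the matching scrape configuration block in "prometheus.yml".

Put simply, a ServiceMonitor declares what to monitor; the Operator watches these resources and converts them into configuration that Prometheus understands.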

2. ServiceMonitor fields explained

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: grafana
  namespace: monitoring
spec:
  # How to scrape the backend targets
  endpoints:
  # Scrape interval
  - interval: 15s
    # Name of the Service port that serves metrics
    port: http
    # Path of the metrics endpoint
    path: /metrics
    # Protocol of the metrics endpoint
    scheme: http
  # Namespace(s) in which to look for the target Service
  namespaceSelector:
    matchNames:
    - monitoring
  # Labels of the target Service
  selector:
    matchLabels:
      app.kubernetes.io/name: grafana

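Tip:

The example above is deliberately simple to aid understanding; real configurations can be more elaborate, for example with relabeling rules for labels.

If you come across a field you don't understand, look it up with "kubectl explain ServiceMonitor" (nested fields work too, e.g. "kubectl explain ServiceMonitor.spec.endpoints").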
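Because most ServiceMonitors differ only in a few values, a template helps avoid typos in the label and port name. `gen_smon` below is a hypothetical helper that prints the same structure as the grafana example above (with a 30s interval instead of 15s). The ServiceMonitor itself is created in the monitoring namespace, while `TARGET_NAMESPACE` says where to look for the Service.

```shell
# Print a minimal ServiceMonitor manifest (hypothetical helper, not part of kube-prometheus).
# Usage: gen_smon NAME TARGET_NAMESPACE PORT_NAME LABEL_KEY LABEL_VALUE [SCHEME]
gen_smon() {
  local name="$1" target_ns="$2" port="$3" key="$4" value="$5" scheme="${6:-http}"
  cat <<EOF
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ${name}
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: ${port}
    path: /metrics
    scheme: ${scheme}
  namespaceSelector:
    matchNames:
    - ${target_ns}
  selector:
    matchLabels:
      ${key}: ${value}
EOF
}
```

Usage: `gen_smon grafana monitoring http app.kubernetes.io/name grafana | kubectl apply -f -`.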

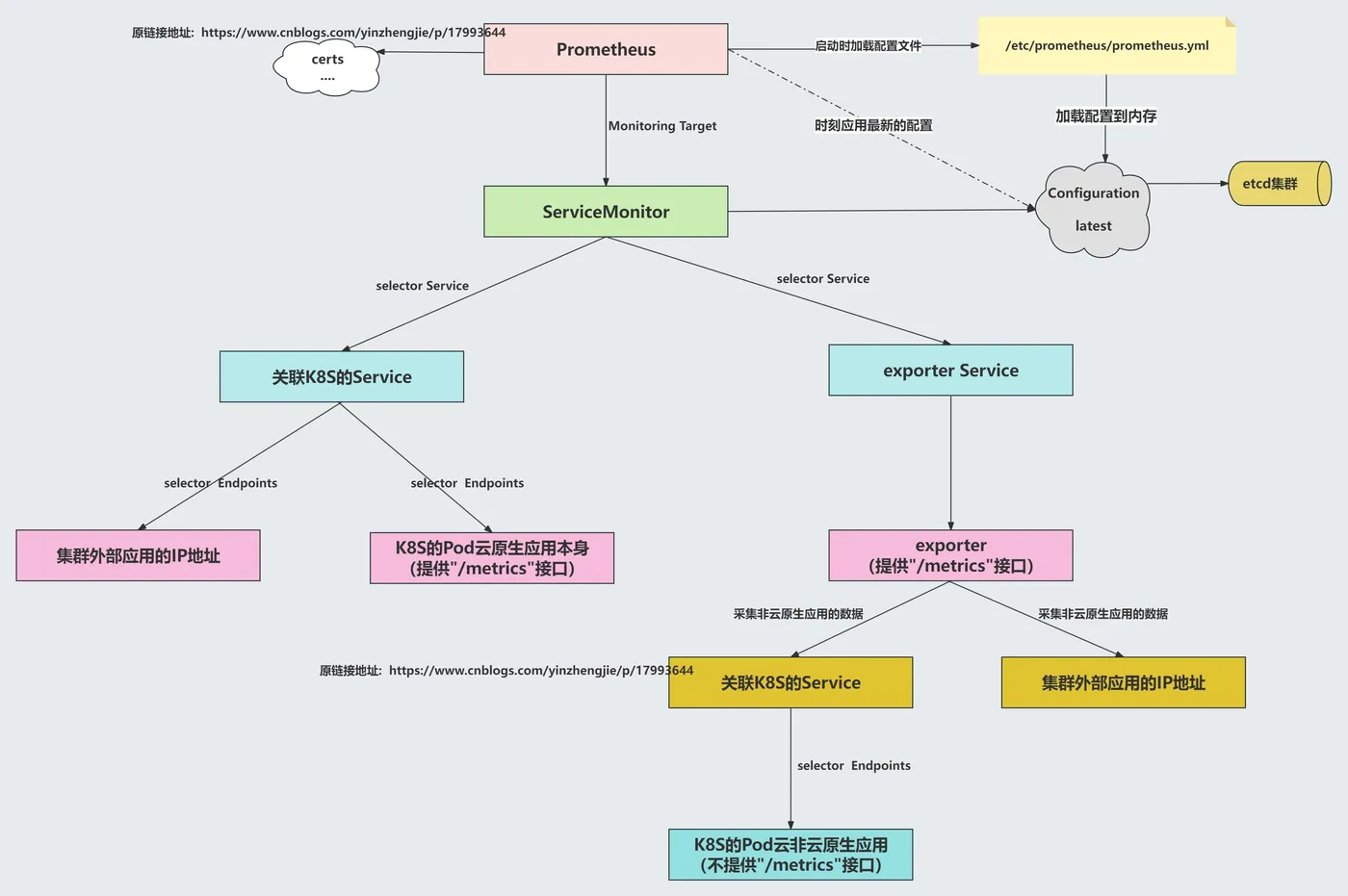

3. Prometheus monitoring workflow

The figure above illustrates the ServiceMonitor monitoring workflow.

III. Monitoring a cloud-native application with Prometheus: etcd

1. Test the etcd metrics endpoint

1. Find where the etcd certificates are stored

[root@master231 yinzhengjie]# egrep "\--key-file|--cert-file" /etc/kubernetes/manifests/etcd.yaml

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --key-file=/etc/kubernetes/pki/etcd/server.key

[root@master231 yinzhengjie]#

2. Query the metrics endpoint using the etcd certificates

[root@master231 yinzhengjie]# curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.0.0.231:2379/metrics -k | tail

# TYPE process_virtual_memory_max_bytes gauge

process_virtual_memory_max_bytes 1.8446744073709552e+19

# HELP promhttp_metric_handler_requests_in_flight Current number of scrapes being served.

# TYPE promhttp_metric_handler_requests_in_flight gauge

promhttp_metric_handler_requests_in_flight 1

# HELP promhttp_metric_handler_requests_total Total number of scrapes by HTTP status code.

# TYPE promhttp_metric_handler_requests_total counter

promhttp_metric_handler_requests_total{code="200"} 4

promhttp_metric_handler_requests_total{code="500"} 0

promhttp_metric_handler_requests_total{code="503"} 0

[root@master231 yinzhengjie]#
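`tail` shows only the last lines; to inspect one specific metric, filter the exposition text by name. The helper below is a minimal sketch. The curl in the usage line swaps `-k` for `--cacert`, which should work as long as the node IP 10.0.0.231 is among the server certificate's SANs, as kubeadm normally arranges. `etcd_server_has_leader` is a standard etcd metric (1 means the member sees a leader).

```shell
# Print the sample lines (no "# HELP" / "# TYPE" comments) for one metric name
# from Prometheus text-format input on stdin.
metric_samples() {
  # A sample line starts with the metric name followed by "{" (labels) or a space.
  grep -E "^$1(\{| )"
}
```

Usage: `curl -s --cacert /etc/kubernetes/pki/etcd/ca.crt --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.0.0.231:2379/metrics | metric_samples etcd_server_has_leader`.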

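2. Create a Service for etcd

1. Create the etcd Service together with a manually defined Endpoints object (the Service has no selector, so Kubernetes attaches the Endpoints object of the same name)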

[root@master231 yinzhengjie]# cat etcd-svc.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: etcd-k8s
  namespace: kube-system
subsets:
- addresses:
  - ip: 10.0.0.231
  ports:
  - name: https-metrics
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: etcd-k8s
  namespace: kube-system
  labels:
    apps: etcd
spec:
  ports:
  - name: https-metrics
    port: 2379
    targetPort: 2379
  type: ClusterIP

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl apply -f etcd-svc.yaml

endpoints/etcd-k8s created

service/etcd-k8s created

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl get svc -n kube-system -l apps=etcd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

etcd-k8s ClusterIP 10.200.33.157 <none> 2379/TCP 36m

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl -n kube-system describe svc etcd-k8s | grep Endpoints

Endpoints: 10.0.0.231:2379

[root@master231 yinzhengjie]#

2. Test connectivity through the new Service

[root@master231 yinzhengjie]# curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.200.33.157:2379/metrics -k | tail -1

promhttp_metric_handler_requests_total{code="503"} 0

[root@master231 yinzhengjie]#
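The pattern used here, a Service without a selector plus an Endpoints object of the same name listing fixed IPs, comes back later for kube-controller-manager. A hypothetical generator keeps the two objects consistent (same name, same port name). It handles a single IP, so a multi-master cluster would need one more `- ip:` entry per node.

```shell
# Print a selector-less Service plus a same-named Endpoints object for a fixed IP.
# Usage: gen_static_svc NAME NAMESPACE LABEL_KEY=LABEL_VALUE PORT_NAME PORT IP
gen_static_svc() {
  local name="$1" ns="$2" label="$3" port_name="$4" port="$5" ip="$6"
  local label_key="${label%%=*}" label_value="${label#*=}"
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
  namespace: ${ns}
subsets:
- addresses:
  - ip: ${ip}
  ports:
  - name: ${port_name}
    port: ${port}
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  namespace: ${ns}
  labels:
    ${label_key}: ${label_value}
spec:
  ports:
  - name: ${port_name}
    port: ${port}
    targetPort: ${port}
    protocol: TCP
  type: ClusterIP
EOF
}
```

`gen_static_svc etcd-k8s kube-system apps=etcd https-metrics 2379 10.0.0.231 | kubectl apply -f -` reproduces etcd-svc.yaml above.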

3. Create a Secret with the etcd certificates and mount it into the Prometheus server

1. Find the paths of the etcd certificate files to mount

[root@master231 yinzhengjie]# egrep "\--key-file|--cert-file|--trusted-ca-file" /etc/kubernetes/manifests/etcd.yaml

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --key-file=/etc/kubernetes/pki/etcd/server.key

- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

[root@master231 yinzhengjie]#

2. Create the Secret from the actual etcd certificate paths

[root@master231 yinzhengjie]# kubectl create secret generic etcd-tls --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n monitoring

secret/etcd-tls created

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl -n monitoring get secrets etcd-tls

NAME TYPE DATA AGE

etcd-tls Opaque 3 12s

[root@master231 yinzhengjie]#

3. Edit the Prometheus resource; its Pods restart automatically after the change

[root@master231 yinzhengjie]# kubectl -n monitoring edit prometheus k8s

...

spec:
  secrets:
  - etcd-tls

...

[root@master231 yinzhengjie]# kubectl -n monitoring get pods -l app.kubernetes.io/component=prometheus -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

prometheus-k8s-0 2/2 Running 0 74s 10.100.1.57 worker232 <none> <none>

prometheus-k8s-1 2/2 Running 0 92s 10.100.2.28 worker233 <none> <none>

[root@master231 yinzhengjie]#
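`kubectl edit` is interactive; in a script the same change can be made with a JSON merge patch. A merge patch replaces the whole `secrets` list, so include any secrets already listed (check with `kubectl -n monitoring get prometheus k8s -o jsonpath='{.spec.secrets}'`). `secrets_patch` is a hypothetical helper that only builds the JSON.

```shell
# Build a JSON merge patch that sets spec.secrets on a Prometheus resource.
# A merge patch replaces the whole list, so pass every secret you want to keep.
secrets_patch() {
  local sep="" s
  printf '{"spec":{"secrets":['
  for s in "$@"; do
    printf '%s"%s"' "$sep" "$s"
    sep=","
  done
  printf ']}}\n'
}
```

Usage: `kubectl -n monitoring patch prometheus k8s --type merge -p "$(secrets_patch etcd-tls)"`.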

4. Check that the certificates were mounted

[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-0 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls

total 0

lrwxrwxrwx 1 root 2000 13 Jan 24 14:07 ca.crt -> ..data/ca.crt

lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.crt -> ..data/server.crt

lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.key -> ..data/server.key

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl -n monitoring exec prometheus-k8s-1 -c prometheus -- ls -l /etc/prometheus/secrets/etcd-tls

total 0

lrwxrwxrwx 1 root 2000 13 Jan 24 14:07 ca.crt -> ..data/ca.crt

lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.crt -> ..data/server.crt

lrwxrwxrwx 1 root 2000 17 Jan 24 14:07 server.key -> ..data/server.key

[root@master231 yinzhengjie]#

4. Create the ServiceMonitor

1. Create a ServiceMonitor that selects the etcd Service

[root@master231 yinzhengjie]# cat etcd-smon.yaml

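apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: yinzhengjie-etcd-smon
  namespace: monitoring
spec:
  # Optional. Names a Service label whose value becomes the job name; if the
  # Service has no such label (as here), the job name defaults to the Service name.
  jobLabel: kubeadm-etcd-k8s-yinzhengjie
  # How to scrape the backend targets
  endpoints:
  # Scrape interval
  - interval: 30s
    # Metrics port; must match the Service's spec.ports[].name
    port: https-metrics
    # Path of the metrics endpoint
    path: /metrics
    # Protocol of the metrics endpoint
    scheme: https
    # Certificates used to connect to etcd
    tlsConfig:
      # CA certificate of etcd
      caFile: /etc/prometheus/secrets/etcd-tls/ca.crt
      # Client certificate (the etcd server certificate is reused)
      certFile: /etc/prometheus/secrets/etcd-tls/server.crt
      # Private key of that certificate
      keyFile: /etc/prometheus/secrets/etcd-tls/server.key
      # Skip server certificate verification: the certificates come from the cluster's own CA, not a public CA
      insecureSkipVerify: true
  # Namespace(s) in which to look for the target Service
  namespaceSelector:
    matchNames:
    - kube-system
  # Labels of the target Service
  selector:
    # Note: these must match the labels on the etcd Service
    matchLabels:
      apps: etcd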

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl apply -f etcd-smon.yaml

servicemonitor.monitoring.coreos.com/yinzhengjie-etcd-smon created

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl get smon -n monitoring yinzhengjie-etcd-smon

NAME AGE

yinzhengjie-etcd-smon 8s

[root@master231 yinzhengjie]#

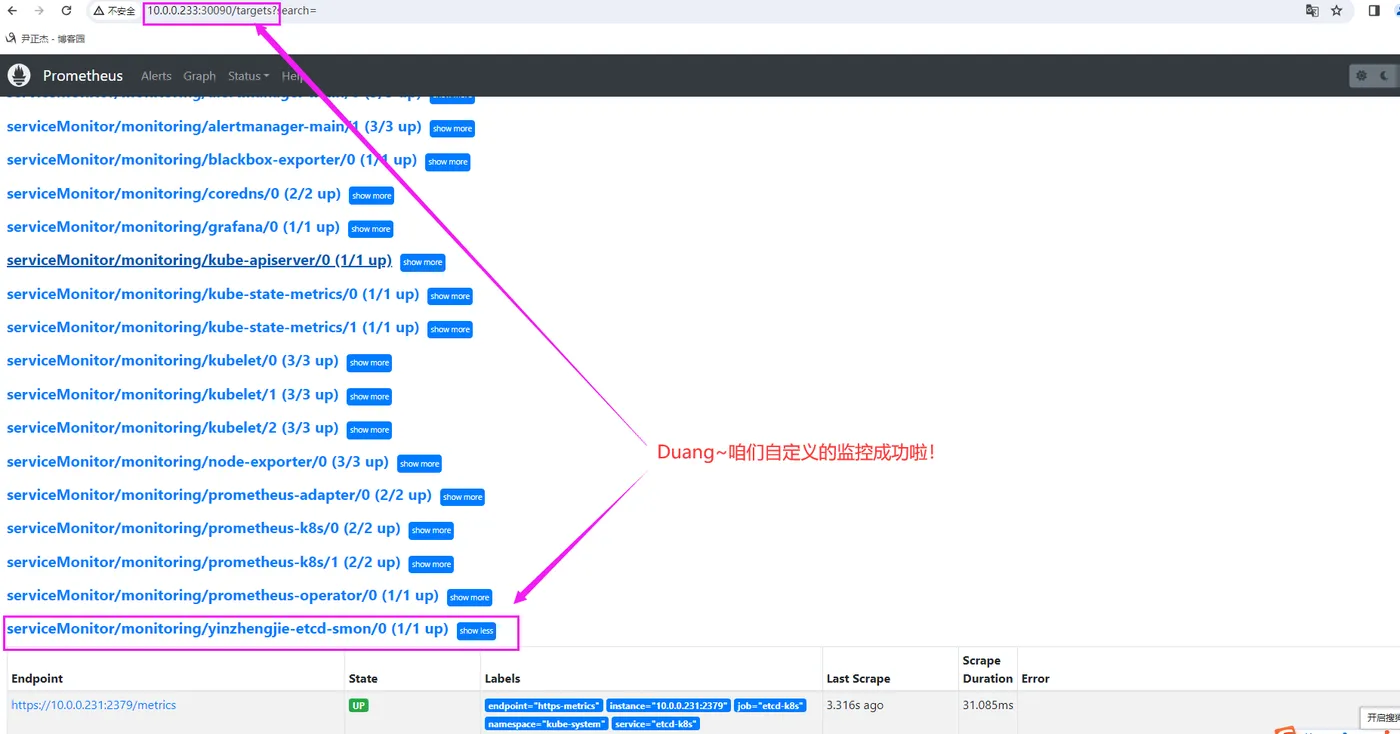

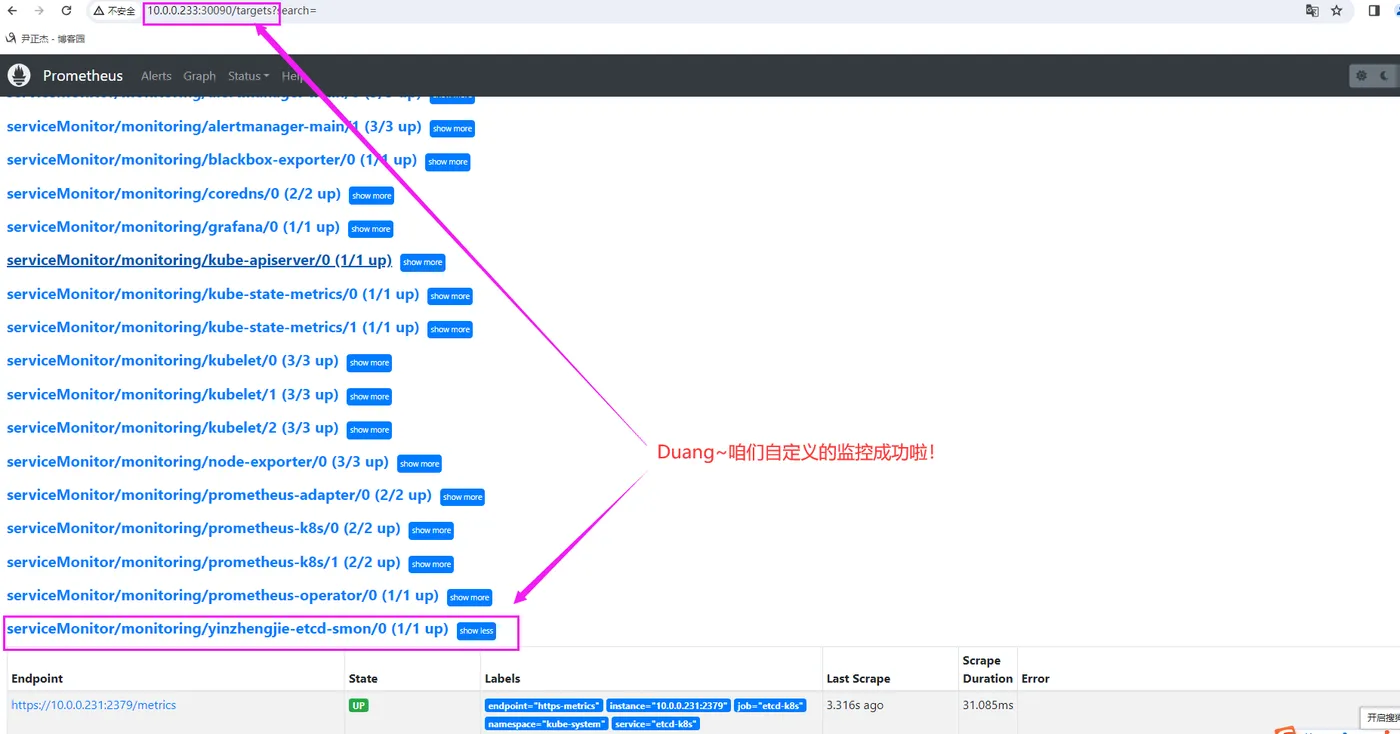

2. Open the Prometheus WebUI

http://10.0.0.233:30090/targets?search=

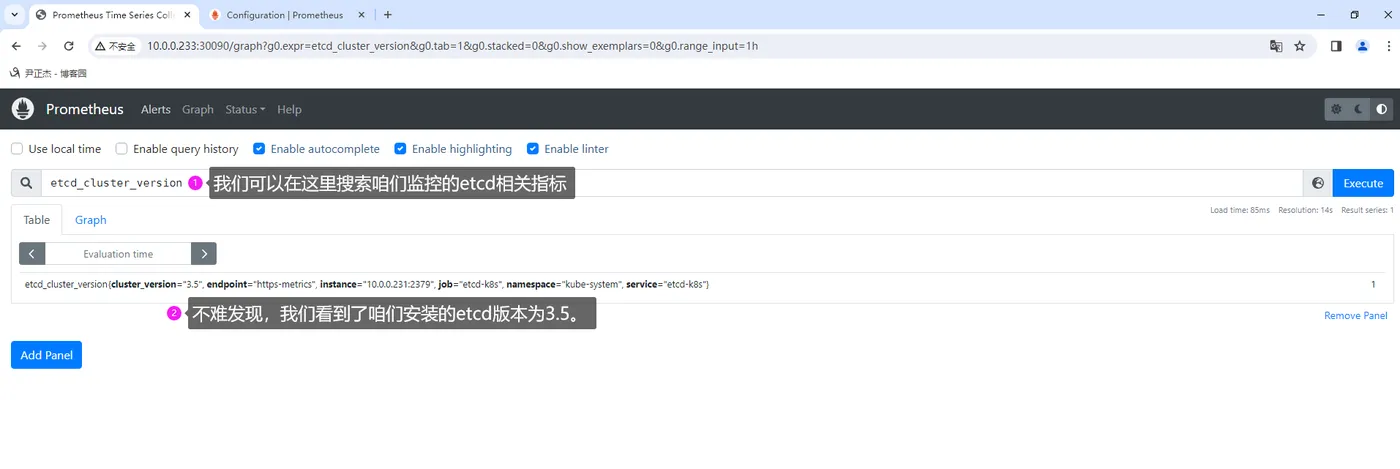

5.查看etcd的数据

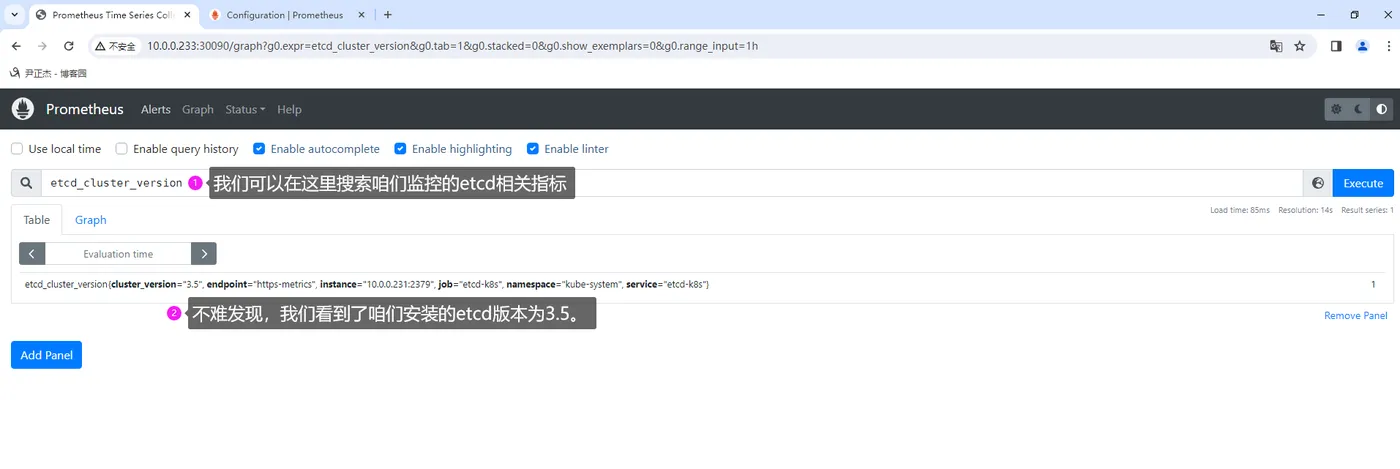

如上图所示,我们搜索"etcd_cluster_version"就可以查询到etcd的数据啦。

6.使用grafana查看etcd数据

导入etcd的模板,建议编号为"3070"。

IV. Monitoring a non-cloud-native application with Prometheus: MySQL

1. Deploy the MySQL service

1. Write the resource manifest

[root@master231 yinzhengjie]# cat deploy-mysql80.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql80-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql80
  template:
    metadata:
      labels:
        apps: mysql80
    spec:
      containers:
      - name: mysql
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/mysql:8.0.36-oracle
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: yinzhengjie
---
apiVersion: v1
kind: Service
metadata:
  name: mysql80-service
spec:
  selector:
    apps: mysql80
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306

[root@master231 yinzhengjie]#

2. Create the resources

[root@master231 yinzhengjie]# kubectl apply -f deploy-mysql80.yaml

deployment.apps/mysql80-deployment created

service/mysql80-service created

[root@master231 yinzhengjie]#

3. Verify that the service is available

[root@master231 yinzhengjie]# kubectl get pods,svc | grep mysql80

pod/mysql80-deployment-846477c784-hmvfw 1/1 Running 0 43s

service/mysql80-service ClusterIP 10.200.95.73 <none> 3306/TCP 43s

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# telnet 10.200.95.73 3306

Trying 10.200.95.73...

Connected to 10.200.95.73.

Escape character is '^]'.

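(binary MySQL handshake packet; the readable parts show server version "8.0.3x" and the authentication plugin "caching_sha2_password", confirming the port is reachable)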
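telnet is often not installed on minimal systems. If bash is available, its `/dev/tcp/HOST/PORT` redirection gives the same connectivity check; the sketch below also assumes coreutils `timeout`.

```shell
# Check whether HOST:PORT accepts TCP connections, using bash's /dev/tcp instead of telnet.
# Usage: port_open HOST PORT [TIMEOUT_SECONDS]
port_open() {
  # Inside bash -c, $0 is the host and $1 the port (passed as separate arguments).
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

Usage: `port_open 10.200.95.73 3306 && echo reachable`.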

2. Create a user for mysql_exporter and grant it privileges

[root@master231 yinzhengjie]# kubectl exec -it mysql80-deployment-846477c784-hmvfw -- mysql -pyinzhengjie

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 10

Server version: 8.0.36 MySQL Community Server - GPL

Copyright (c) 2000, 2024, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

mysql> CREATE USER mysql_exporter IDENTIFIED BY 'yinzhengjie' WITH MAX_USER_CONNECTIONS 3;

Query OK, 0 rows affected (0.04 sec)

mysql> GRANT PROCESS,REPLICATION CLIENT,SELECT ON *.* TO mysql_exporter;

Query OK, 0 rows affected (0.01 sec)

mysql>

3. Deploy mysql_exporter

1. Write the resource manifest

[root@master231 yinzhengjie]# cat deploy-svc-cm-mysql_exporter.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: my.cnf
data:
  .my.cnf: |-
    [client]
    user = mysql_exporter
    password = yinzhengjie
    [client.servers]
    user = mysql_exporter
    password = yinzhengjie
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-exporter-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: mysql-exporter
  template:
    metadata:
      labels:
        apps: mysql-exporter
    spec:
      volumes:
      - name: data
        configMap:
          name: my.cnf
          items:
          - key: .my.cnf
            path: .my.cnf
      containers:
      - name: mysql-exporter
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/mysqld-exporter:v0.15.1
        command:
        - mysqld_exporter
        - --config.my-cnf=/root/my.cnf
        # "yinzhengjie.com" is this cluster's custom DNS domain (the default is "cluster.local")
        - --mysqld.address=mysql80-service.default.svc.yinzhengjie.com:3306
        securityContext:
          runAsUser: 0
        ports:
        - containerPort: 9104
        # Older style kept for reference; mysqld_exporter v0.15+ no longer reads DATA_SOURCE_NAME.
        #env:
        #- name: DATA_SOURCE_NAME
        #  value: mysql_exporter:yinzhengjie@(mysql80-service.default.svc.yinzhengjie.com:3306)
        volumeMounts:
        - name: data
          mountPath: /root/my.cnf
          subPath: .my.cnf
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-exporter-service
  labels:
    apps: mysqld
spec:
  selector:
    apps: mysql-exporter
  ports:
  - protocol: TCP
    port: 9104
    targetPort: 9104
    name: mysql80

[root@master231 yinzhengjie]#

2. Verify that metrics are being collected

[root@master231 yinzhengjie]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.200.0.1 <none> 443/TCP 22d

mysql-exporter-service ClusterIP 10.200.96.244 <none> 9104/TCP 3m57s

mysql80-service ClusterIP 10.200.95.73 <none> 3306/TCP 2d12h

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# curl -s 10.200.96.244:9104/metrics | tail -1

promhttp_metric_handler_requests_total{code="503"} 0

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# curl -s 10.200.96.244:9104/metrics | wc -l

2557

[root@master231 yinzhengjie]#
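A long metrics page only shows that the exporter is running: mysqld_exporter still answers `/metrics` when it cannot log in to MySQL, and reports that through the `mysql_up` gauge (1 = connected, 0 = not). A minimal check, reading the exporter output on stdin:

```shell
# Read mysqld_exporter output on stdin; succeed only if mysql_up reports 1.
mysql_is_up() {
  # Remember the last mysql_up sample; a missing metric counts as "down".
  awk '$1 == "mysql_up" { v = $2 } END { exit !(v == 1) }'
}
```

Usage: `curl -s 10.200.96.244:9104/metrics | mysql_is_up && echo "exporter can reach MySQL"`.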

4. Write a ServiceMonitor for mysql_exporter

[root@master231 yinzhengjie]# cat mysql-smon.yaml

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: yinzhengjie-mysql-smon
spec:
  jobLabel: kubeadm-mysql-k8s-yinzhengjie
  endpoints:
  - interval: 30s
    # "port" must be the *name* of a Service port, not a number. Using the name also
    # means the Service's port number can change without touching this file.
    # To select a port by number, the API offers "targetPort" (the Pod's container port) instead.
    # targetPort: 9104
    port: mysql80
    path: /metrics
    scheme: http
  namespaceSelector:
    matchNames:
    - default
  selector:
    matchLabels:
      apps: mysqld

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl apply -f mysql-smon.yaml

servicemonitor.monitoring.coreos.com/yinzhengjie-mysql-smon created

[root@master231 yinzhengjie]#


[root@master231 yinzhengjie]# kubectl get smon

NAME AGE

yinzhengjie-mysql-smon 8s

[root@master231 yinzhengjie]#

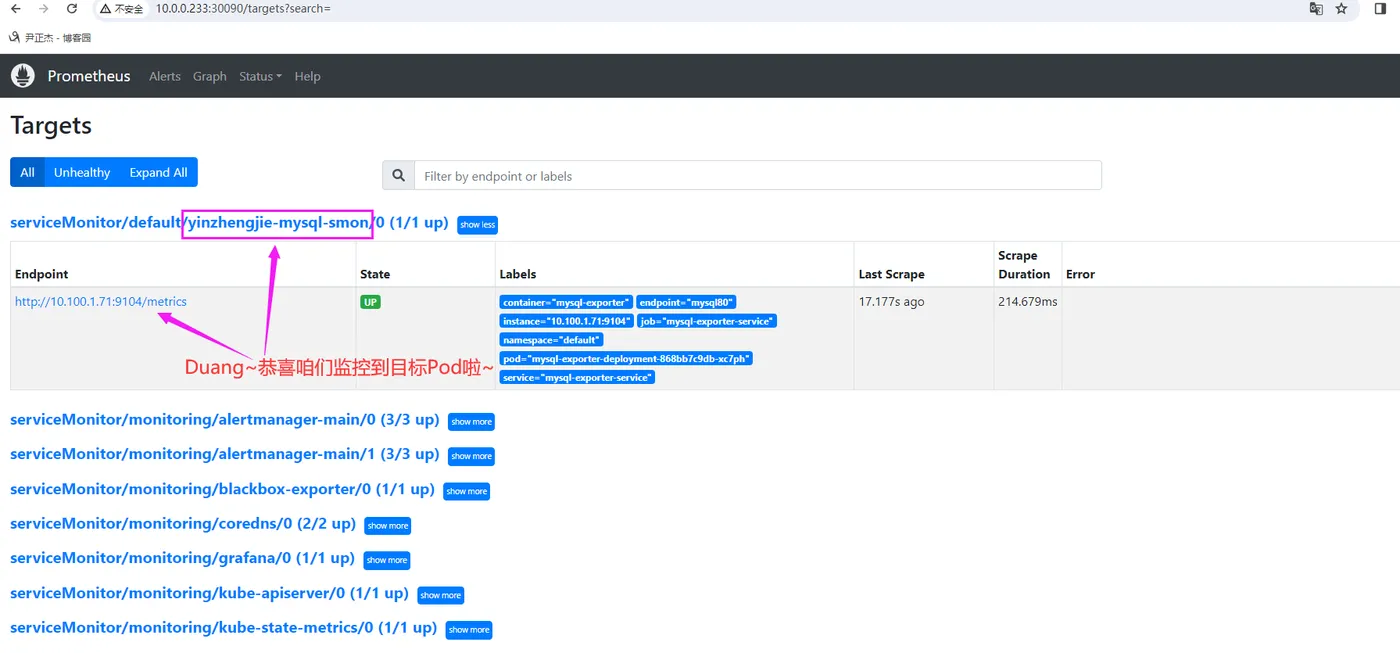

5. Check whether Prometheus has the data

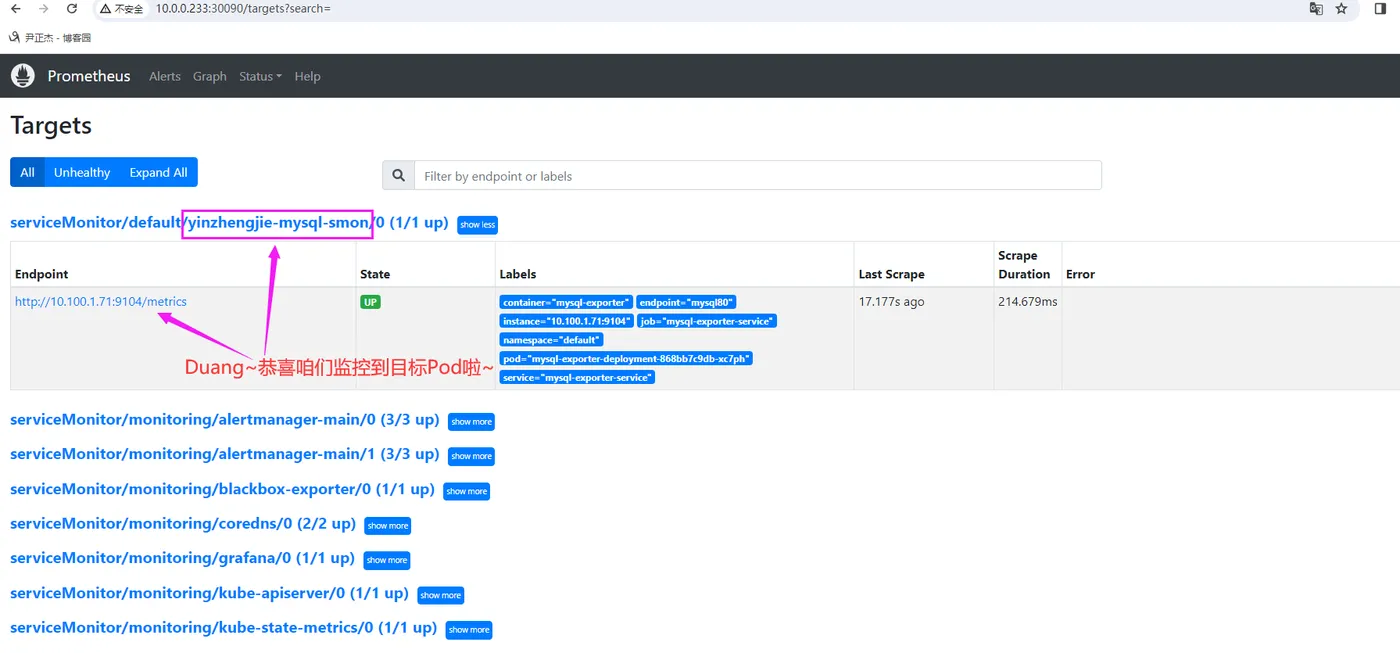

As shown in the figure above, Prometheus is now monitoring mysql_exporter.

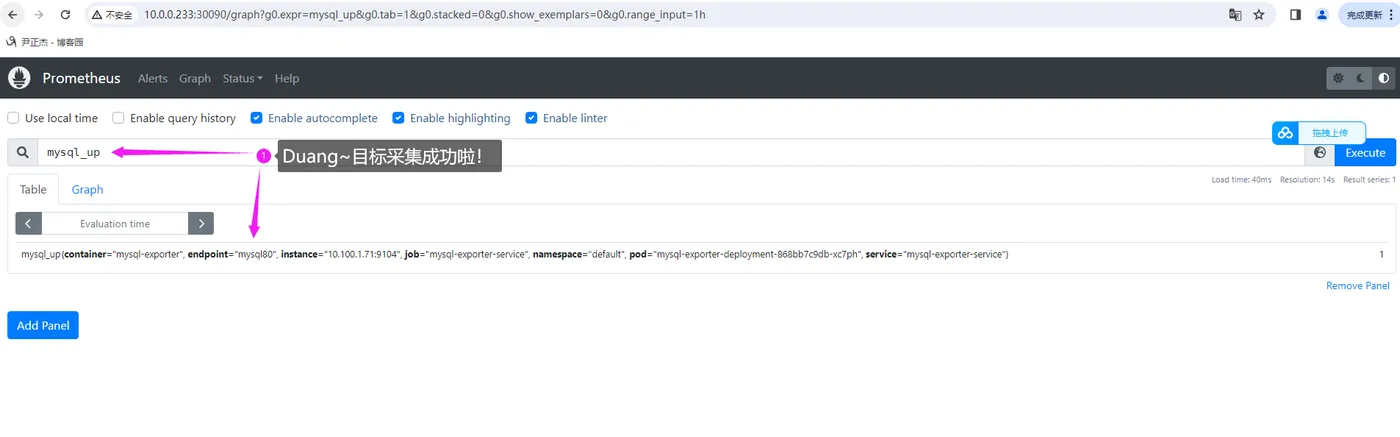

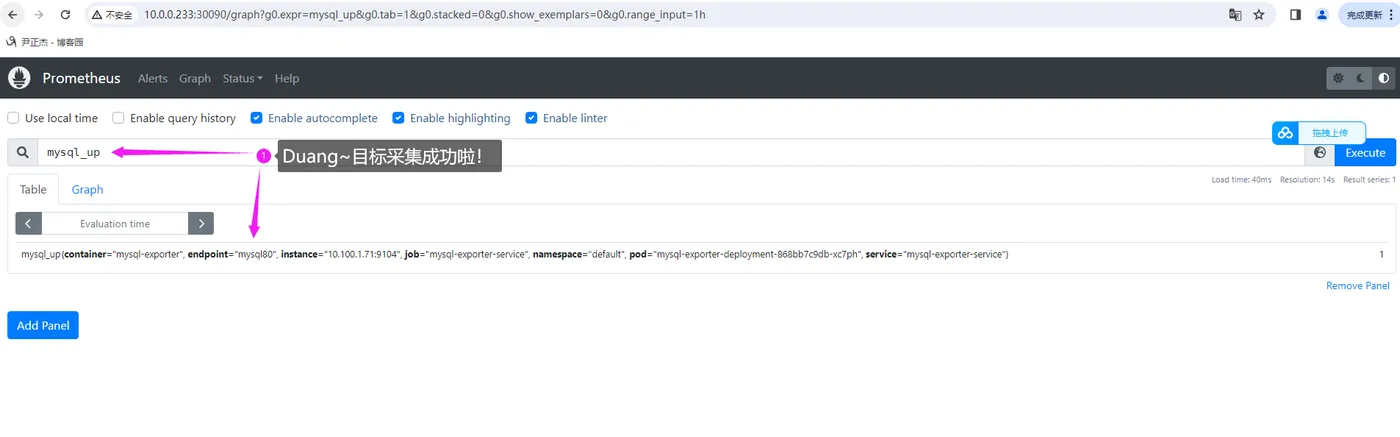

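As shown in the figure below, you can also search for the "mysql_up" metric to check whether data is being collected (a value of 1 means the exporter can reach MySQL).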

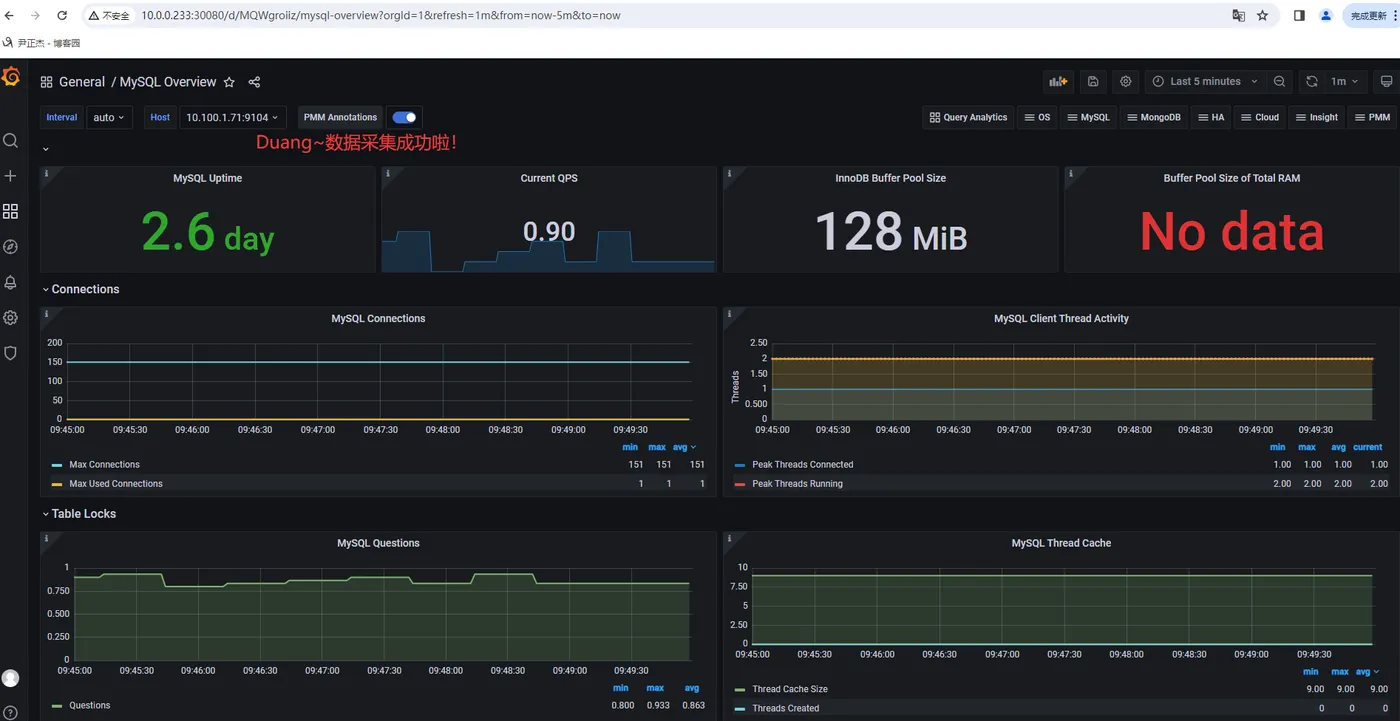

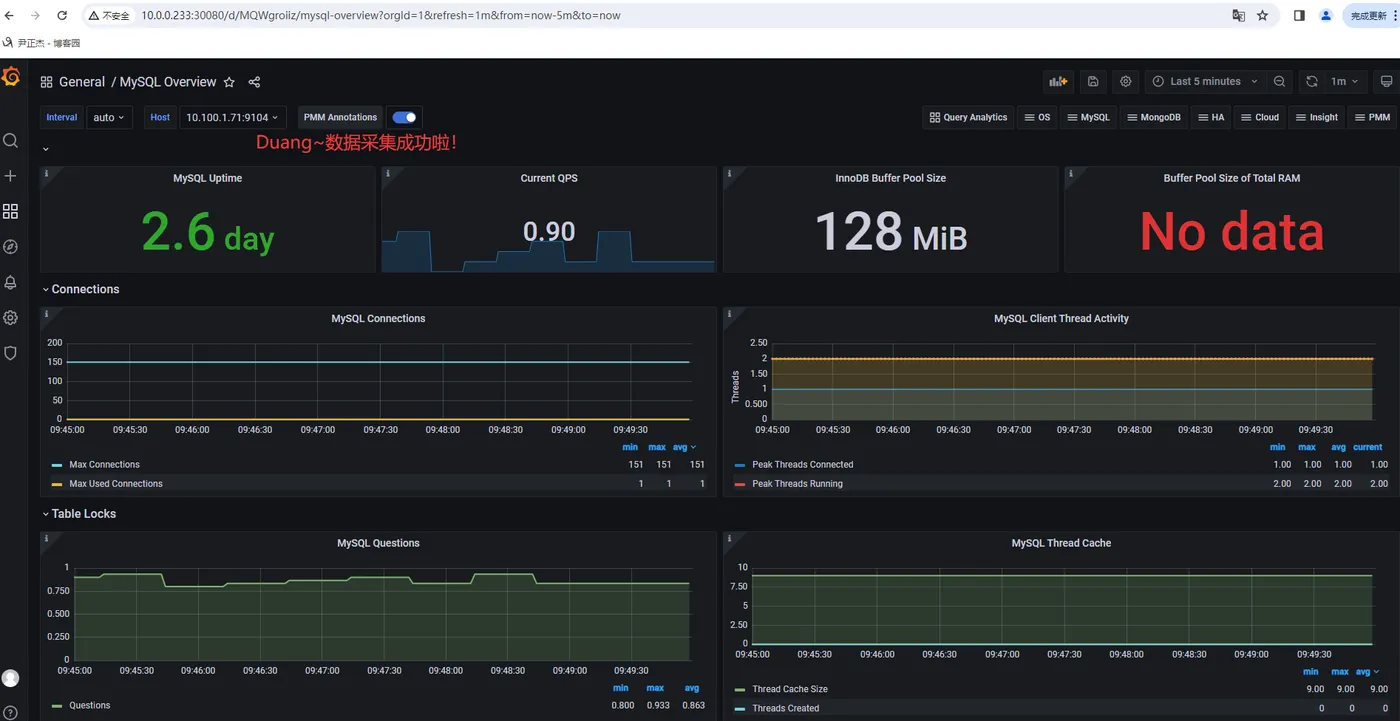

6. View the data in Grafana

Import a dashboard by ID. As shown in the figure above, I used dashboard ID "7362".

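V. Troubleshooting ServiceMonitor failures

1. ServiceMonitor troubleshooting checklist

- 1. Confirm the ServiceMonitor was created successfully

- 2. Confirm the ServiceMonitor's labels and selectors are configured correctly

- 3. Confirm Prometheus has generated the corresponding configuration

- 4. Confirm a Service matching the ServiceMonitor exists

- 5. Confirm the application's "metrics" endpoint is reachable through the Service

- 6. Confirm the Service's port name and scheme match the ServiceMonitor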
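For step 3, the configuration the Operator generated can be inspected directly. In the prometheus-operator versions I am aware of, it is stored gzip-compressed under the key `prometheus.yaml.gz` in a Secret named `prometheus-<name>` (here `prometheus-k8s`), and each ServiceMonitor endpoint becomes a job named `serviceMonitor/<namespace>/<name>/<index>`; treat both names as assumptions to verify on your version. The decoding step itself is just base64 plus gunzip:

```shell
# Decode the configuration generated by the Prometheus Operator.
# It is stored gzip-compressed and base64-encoded in a Secret; pass that value on stdin.
decode_prom_config() {
  base64 -d | gunzip
}
```

Usage: `kubectl -n monitoring get secret prometheus-k8s -o jsonpath='{.data.prometheus\.yaml\.gz}' | decode_prom_config | grep -A 10 'serviceMonitor/default/yinzhengjie-mysql-smon'`. No output suggests the ServiceMonitor was not picked up.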

2.查看prometheus的默认告警规则

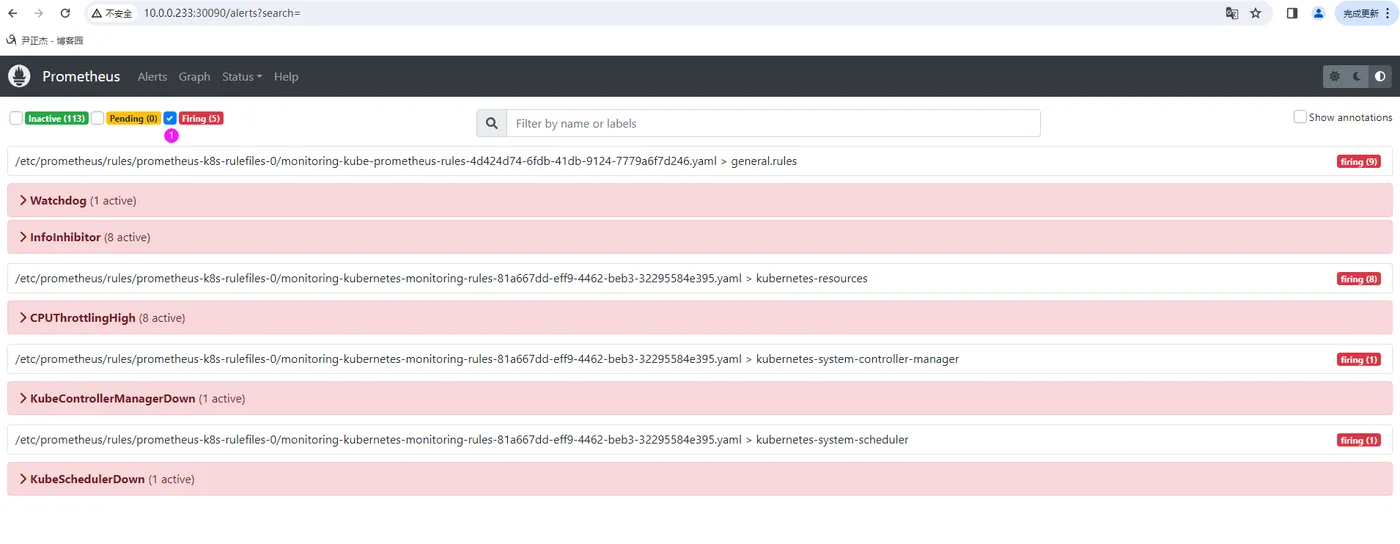

告警类型说明:

Inactive:

已经定义了告警规则但是没有触发。

Pending:

正在产生的告警,可能要产生多次才会触发告警。

Firing:

已经触发了的告警。
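The Pending state comes from a rule's `for` field: when the expression first becomes true the alert is Pending, and it switches to Firing only if the expression stays true for the whole `for` window (without `for`, it fires on the first evaluation). The hypothetical generator below prints a PrometheusRule with such a field. The `prometheus: k8s` / `role: alert-rules` labels are copied from the rules kube-prometheus ships, as an assumption about what its rule selector expects.

```shell
# Print a minimal PrometheusRule with a "for" duration (hypothetical helper).
# Usage: gen_rule NAME ALERT_NAME EXPR FOR
gen_rule() {
  local name="$1" alert="$2" expr="$3" for_duration="$4"
  cat <<EOF
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: ${name}
  namespace: monitoring
  labels:
    prometheus: k8s
    role: alert-rules
spec:
  groups:
  - name: ${name}.rules
    rules:
    - alert: ${alert}
      expr: '${expr}'
      for: ${for_duration}
      labels:
        severity: warning
EOF
}
```

`gen_rule mysql-rules MySQLDown 'mysql_up == 0' 5m | kubectl apply -f -` would keep a MySQLDown alert Pending for five minutes before it fires.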

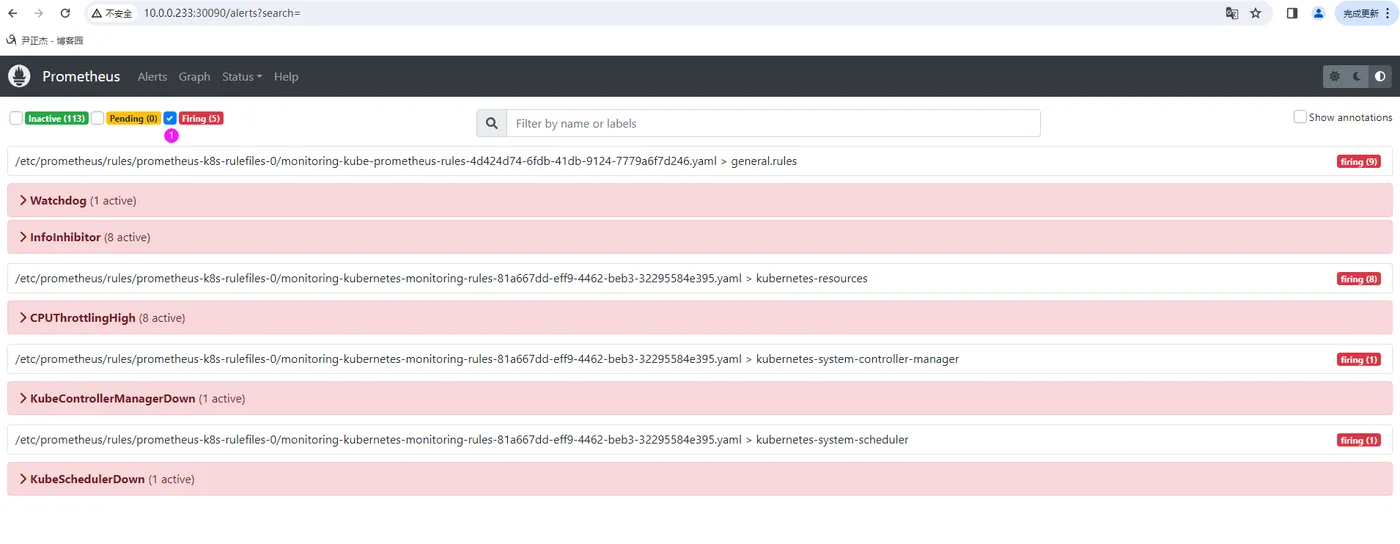

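As shown in the figure above, the following alerts fire by default right after deployment:

- Watchdog

First, note that this alert is expected. If its notifications ever stop arriving, the monitoring pipeline itself has a problem.

If Alertmanager or Prometheus itself goes down, no alerts can be sent at all, so people usually either monitor Prometheus with a second monitoring system or define an alert that fires continuously.

kube-prometheus already takes this into account and ships Watchdog as such a self-check.

If you need to turn it off, delete or comment out the Watchdog rule.

- InfoInhibitor

An "information inhibitor": it fires whenever alerts with severity="info" are firing, and Alertmanager uses it to suppress those info alerts unless a warning or critical alert is firing in the same namespace. It is meant to be routed to a null receiver rather than notify anyone.

- CPUThrottlingHigh

Related to CPU resource management: it fires when containers are heavily CPU-throttled. Many users have complained that this upstream alert is inaccurate, so it can be disabled in production.

Reference:

https://github.com/kubernetes-monitoring/kubernetes-mixin/issues/108

- KubeControllerManagerDown

Fires when Prometheus cannot find a healthy kube-controller-manager target; check whether the Service that its ServiceMonitor selects actually exists.

- KubeSchedulerDown

Fires when Prometheus cannot find a healthy kube-scheduler target; check whether the Service that its ServiceMonitor selects actually exists.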

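3. Case study: fixing KubeControllerManagerDown

1. Change the address kube-controller-manager listens on (by default it listens on the loopback address, which the Prometheus Pods cannot reach over the network, so it cannot be scraped)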

[root@master231 yinzhengjie]# vim /etc/kubernetes/manifests/kube-controller-manager.yaml

...

spec:
  containers:
  - command:
    - kube-controller-manager
    # The default listen address is 127.0.0.1; change it to 0.0.0.0, otherwise Prometheus cannot reach this component.
    # - --bind-address=127.0.0.1
    - --bind-address=0.0.0.0
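The same edit can be made non-interactively with sed. Keep the backup outside /etc/kubernetes/manifests: kubelet treats the files in that directory as static Pod manifests, so a stray backup copy there may be picked up as well. kube-scheduler.yaml carries the same `--bind-address=127.0.0.1` flag, which is relevant to KubeSchedulerDown. A sketch assuming GNU sed:

```shell
# Switch a static Pod manifest from --bind-address=127.0.0.1 to 0.0.0.0 in place (GNU sed -i).
open_bind_address() {
  sed -i 's/--bind-address=127\.0\.0\.1/--bind-address=0.0.0.0/' "$1"
}
```

Usage: `cp /etc/kubernetes/manifests/kube-controller-manager.yaml /root/ && open_bind_address /etc/kubernetes/manifests/kube-controller-manager.yaml`.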

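2. Restart kubelet so the change takes effect (my K8S cluster was deployed with kubeadm; to apply the change quickly you can simply restart kubelet, which reloads all static Pods)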

[root@master231 yinzhengjie]# systemctl restart kubelet

Tip:

On a K8S cluster deployed from binaries there is no need to restart kubelet; just restart the kube-controller-manager service to apply the configuration.
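To confirm the new address is active, check the listening socket on the master: before the change port 10257 is bound to `127.0.0.1`, afterwards it should show a wildcard address (`*`, `0.0.0.0` or `[::]`, depending on how the socket is reported). A sketch that reads `ss -lnt` output on stdin:

```shell
# Read `ss -lnt` output on stdin; succeed if PORT is bound on a wildcard address.
# Column 4 of ss output is "Local Address:Port".
listens_on_all() {
  awk -v p="$1" '
    $4 == "0.0.0.0:" p || $4 == "*:" p || $4 == "[::]:" p { found = 1 }
    END { exit !found }'
}
```

Usage: `ss -lnt | listens_on_all 10257 && echo "listening on all addresses"`.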

3. Check which Service the ServiceMonitor selects

[root@master231 yinzhengjie]# kubectl get smon -n monitoring kube-controller-manager -o yaml | grep matchNames -A 5 -B 2

...
    # Note: port refers to the name of the Service port, so the Service we define must use the same name.
    port: https-metrics
...
  jobLabel: app.kubernetes.io/name
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-controller-manager
...

[root@master231 yinzhengjie]#

4. The Service selected in the previous step does not exist

[root@master231 yinzhengjie]# kubectl -n kube-system get svc -l app.kubernetes.io/name=kube-controller-manager

No resources found in kube-system namespace.

[root@master231 yinzhengjie]#

5. Manually create the matching Service

[root@master231 yinzhengjie]# cat svc-kube-controller-manager.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: kube-controller-manager-k8s
  namespace: kube-system
subsets:
- addresses:
  - ip: 10.0.0.231
  ports:
  - name: https-metrics
    port: 10257
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: kube-controller-manager-k8s
  namespace: kube-system
  # Note: these labels must match the labels selected by the official ServiceMonitor
  labels:
    app.kubernetes.io/name: kube-controller-manager
spec:
  ports:
  # Note: this port name must match the port field of the "kube-controller-manager" ServiceMonitor
  - name: https-metrics
    port: 10257
    targetPort: 10257
    protocol: TCP
  type: ClusterIP

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl apply -f svc-kube-controller-manager.yaml

endpoints/kube-controller-manager-k8s created

service/kube-controller-manager-k8s created

[root@master231 yinzhengjie]#

[root@master231 yinzhengjie]# kubectl -n kube-system get svc -l app.kubernetes.io/name=kube-controller-manager

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-controller-manager-k8s ClusterIP 10.200.92.60 <none> 10257/TCP 22s

[root@master231 yinzhengjie]#

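6. Check connectivity through the Service. The 403 below is what we want to see: the endpoint is reachable over TLS and only rejects the anonymous request. Prometheus scrapes it with its ServiceAccount bearer token, which kube-prometheus allows to read /metrics, so the configuration is fine.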

[root@master231 yinzhengjie]# curl https://10.200.92.60:10257/metrics --insecure

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/metrics\"",
  "reason": "Forbidden",
  "details": {},
  "code": 403
}
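To see the metrics yourself, authenticate the way Prometheus does, with a bearer token. On this 1.23 cluster the prometheus-k8s ServiceAccount should still have an auto-generated token Secret: `kubectl -n monitoring get sa prometheus-k8s -o jsonpath='{.secrets[0].name}'` gives its name, and `kubectl -n monitoring get secret <name> -o jsonpath='{.data.token}' | base64 -d` prints the token. The wrapper below is a minimal sketch; `-k` skips TLS verification just like the test above.

```shell
# Fetch a protected /metrics endpoint with a bearer token, as Prometheus does
# with its ServiceAccount token. -k skips TLS certificate verification.
fetch_metrics_with_token() {
  local url="$1" token="$2"
  curl -sS -k --max-time 10 -H "Authorization: Bearer ${token}" "$url"
}
```

Usage: `fetch_metrics_with_token https://10.200.92.60:10257/metrics "$TOKEN" | head`.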

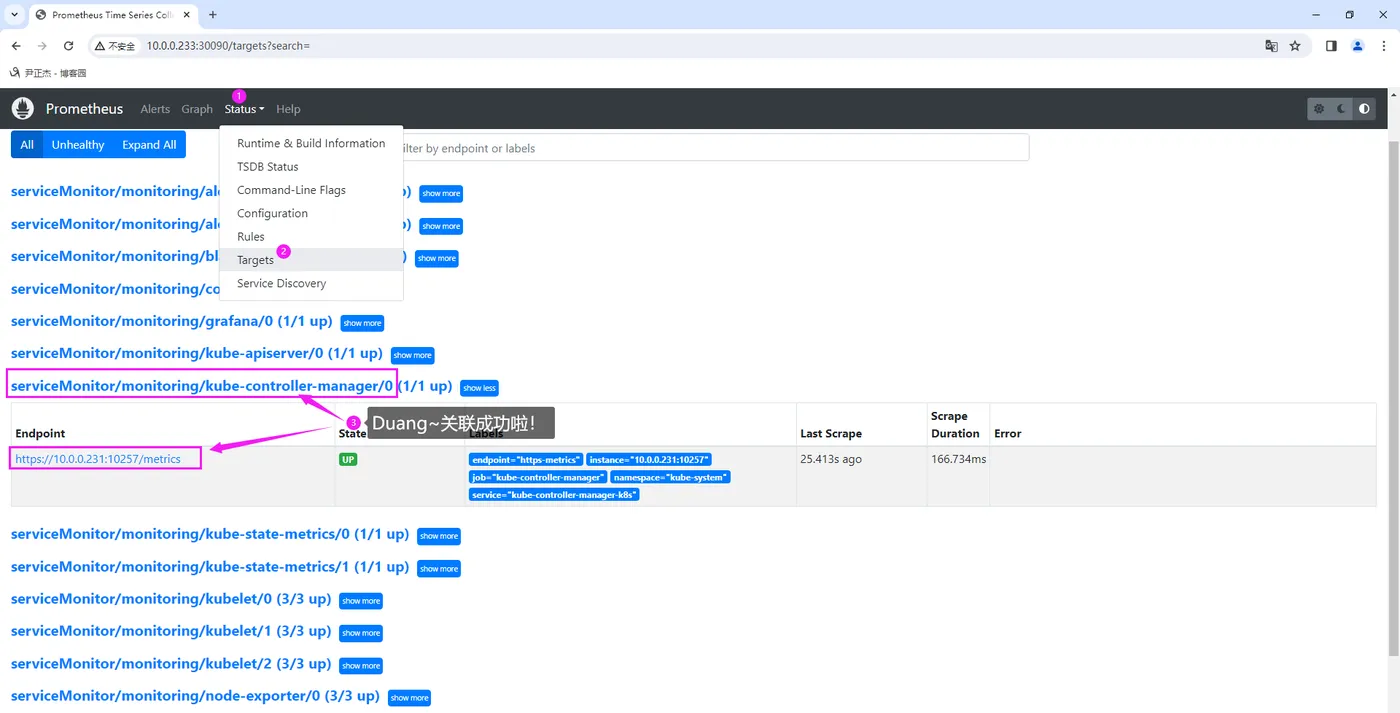

7.访问WebUI

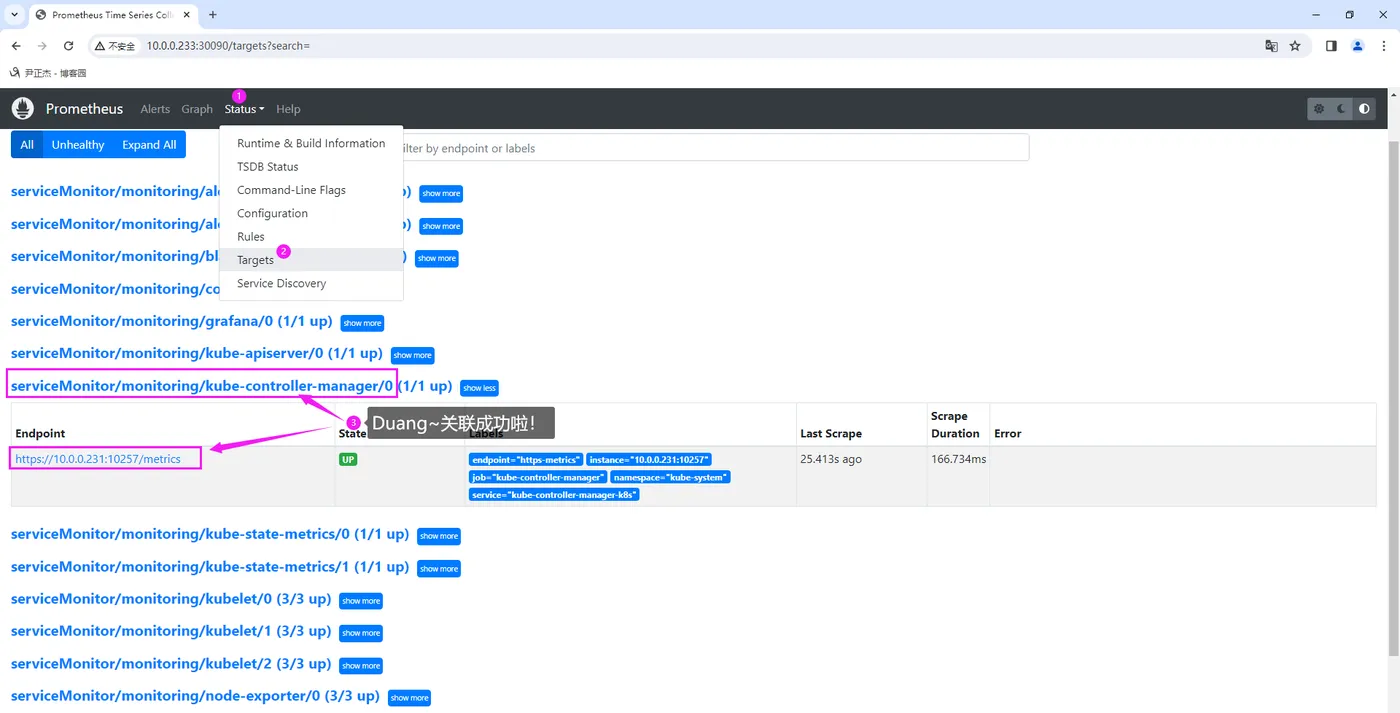

如上图所示,Prometheus的WebUI监控成功啦。

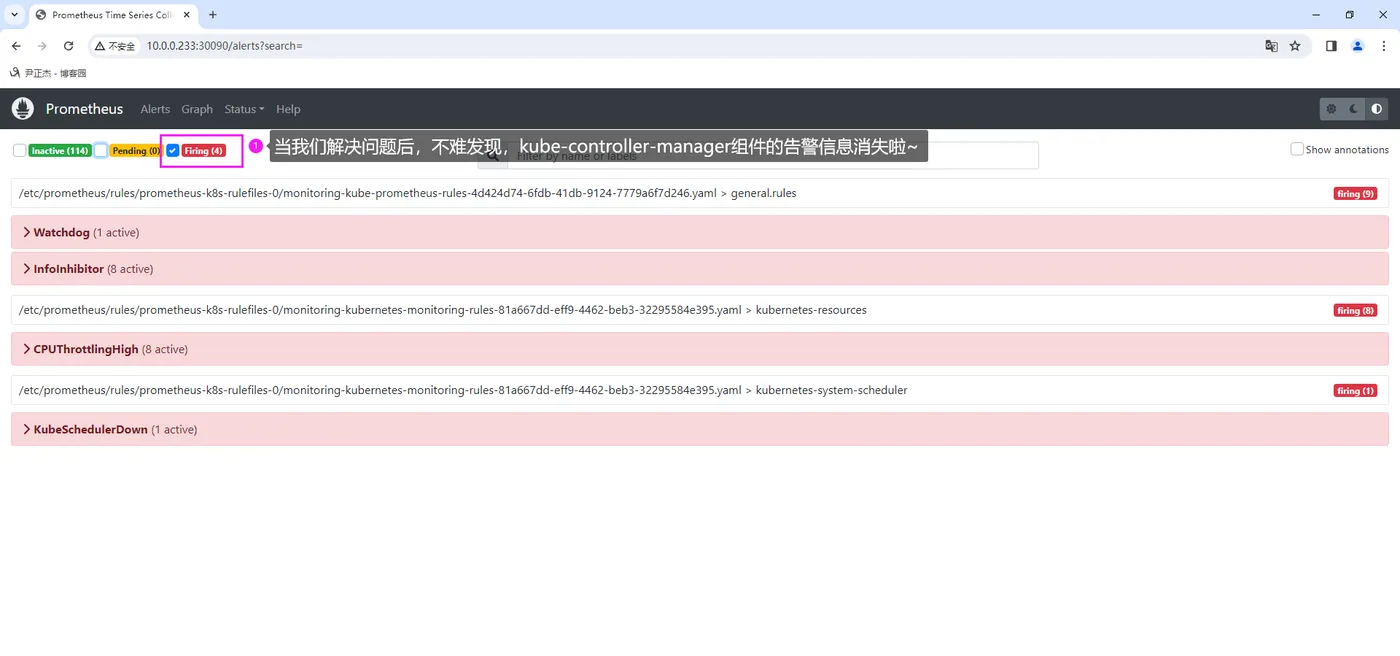

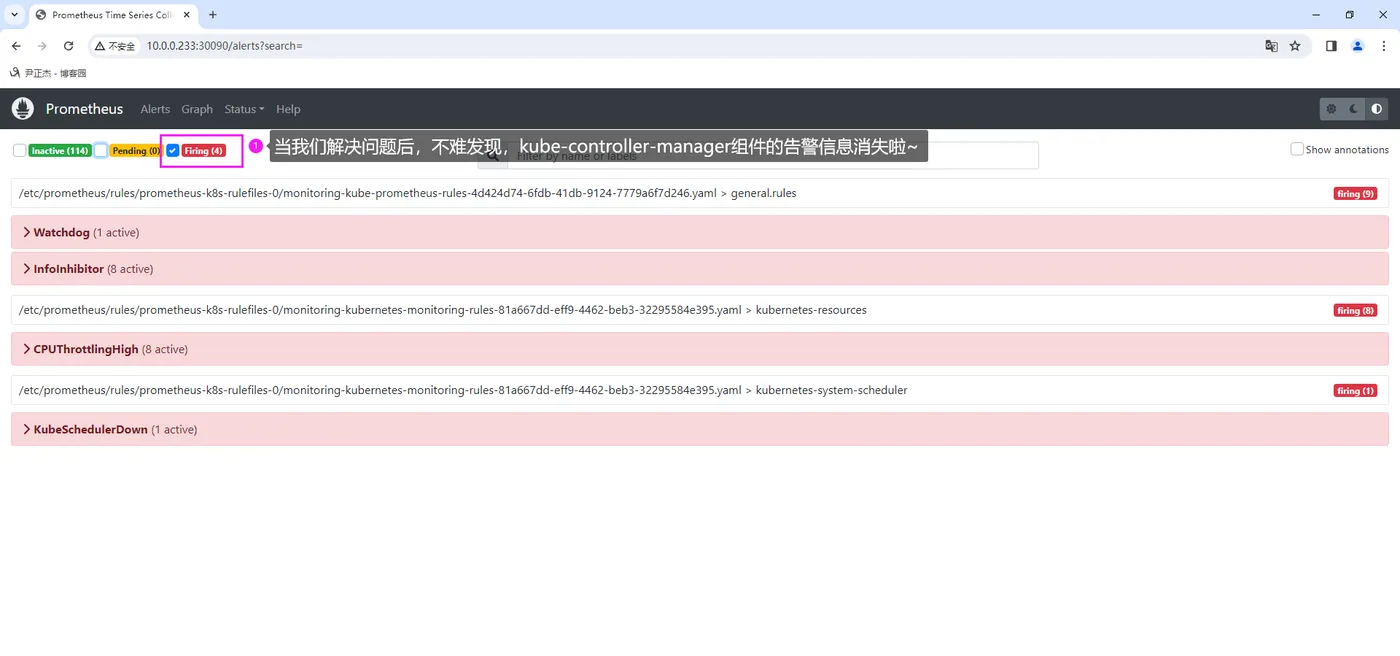

如下图所示,可以看到对应的“KubeControllerManagerDown”告警信息也消失了哟,原本5条告警信息仅剩下4条啦。

课后作业

- 完成课题的所有练习并整理思维导图;

- 请参考上述的处理流程,将其他几个告警都消除;