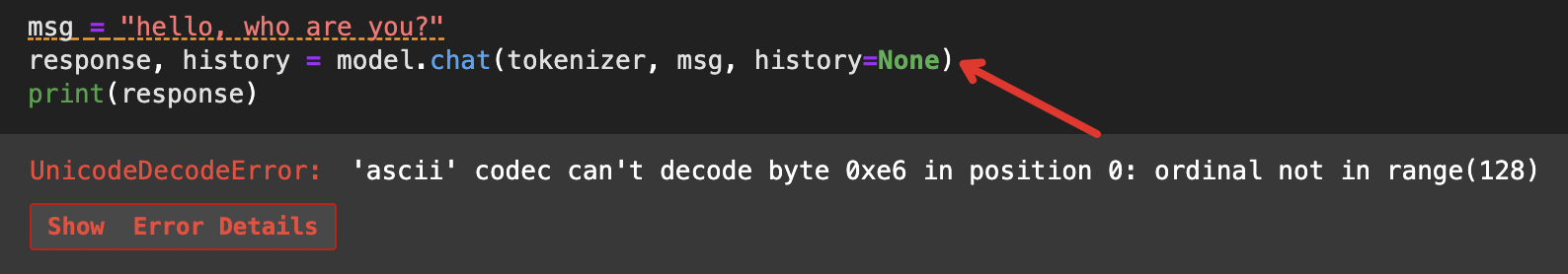

When running inference with the Qwen-1.8B-Chat model through modelscope, I get this error: `UnicodeDecodeError: 'ascii' codec can't decode byte 0xe6 in position 0: ordinal not in range(128)`
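The exact message above can be reproduced outside Qwen by decoding UTF-8 bytes with the ASCII codec. Byte `0xe6` is the lead byte of a three-byte UTF-8 sequence, and many CJK characters start with it. That hints that some UTF-8 text (for example a file containing Chinese) is being read under an ASCII default encoding somewhere in the loading or chat path. This is an assumption; the sketch below does not exercise the modelscope or Qwen code itself.

```python
def reproduce() -> str:
    """Trigger the same UnicodeDecodeError message with plain bytes (not the Qwen code path)."""
    data = "我".encode("utf-8")  # b'\xe6\x88\x91': the UTF-8 encoding starts with 0xe6
    try:
        data.decode("ascii")  # ASCII only covers bytes 0..127, so 0xe6 fails at position 0
    except UnicodeDecodeError as exc:
        return str(exc)
    return ""


if __name__ == "__main__":
    print(reproduce())
    # Decoding the same bytes with the correct codec succeeds.
    print("我".encode("utf-8").decode("utf-8"))
```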

Code:

```python
from modelscope import AutoModelForCausalLM, AutoTokenizer, GenerationConfig

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-1_8B-Chat", revision='master', trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-1_8B-Chat", revision='master', device_map="auto", trust_remote_code=True).eval()

msg = "hello, who are you?"
response, history = model.chat(tokenizer, msg, history=None)
print(response)
```

The error is shown in the screenshot; the arrow marks the line the traceback points to.
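To check whether the environment's default encoding could be involved, a small sketch like the following prints the encodings Python falls back to when none is passed explicitly. Running it in the same shell or container as the failing script is an assumption about where the problem lies, not a confirmed diagnosis.

```python
import locale
import sys


def encoding_report() -> dict:
    """Collect the encodings Python uses when code does not specify one."""
    return {
        # Text-mode open() falls back to this when no encoding= is given.
        "preferred": locale.getpreferredencoding(False),
        "filesystem": sys.getfilesystemencoding(),
        # stdout may be replaced (e.g. by a StringIO) and then report None.
        "stdout": getattr(sys.stdout, "encoding", None),
        "utf8_mode": bool(sys.flags.utf8_mode),
    }


if __name__ == "__main__":
    for key, value in encoding_report().items():
        print(f"{key}: {value}")
```

If `preferred` comes back as an ASCII variant (such as `ANSI_X3.4-1968`), relaunching with `PYTHONUTF8=1` (Python 3.7+) or a UTF-8 locale such as `LANG=C.UTF-8` is a common workaround; whether it fixes this particular traceback is untested here.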