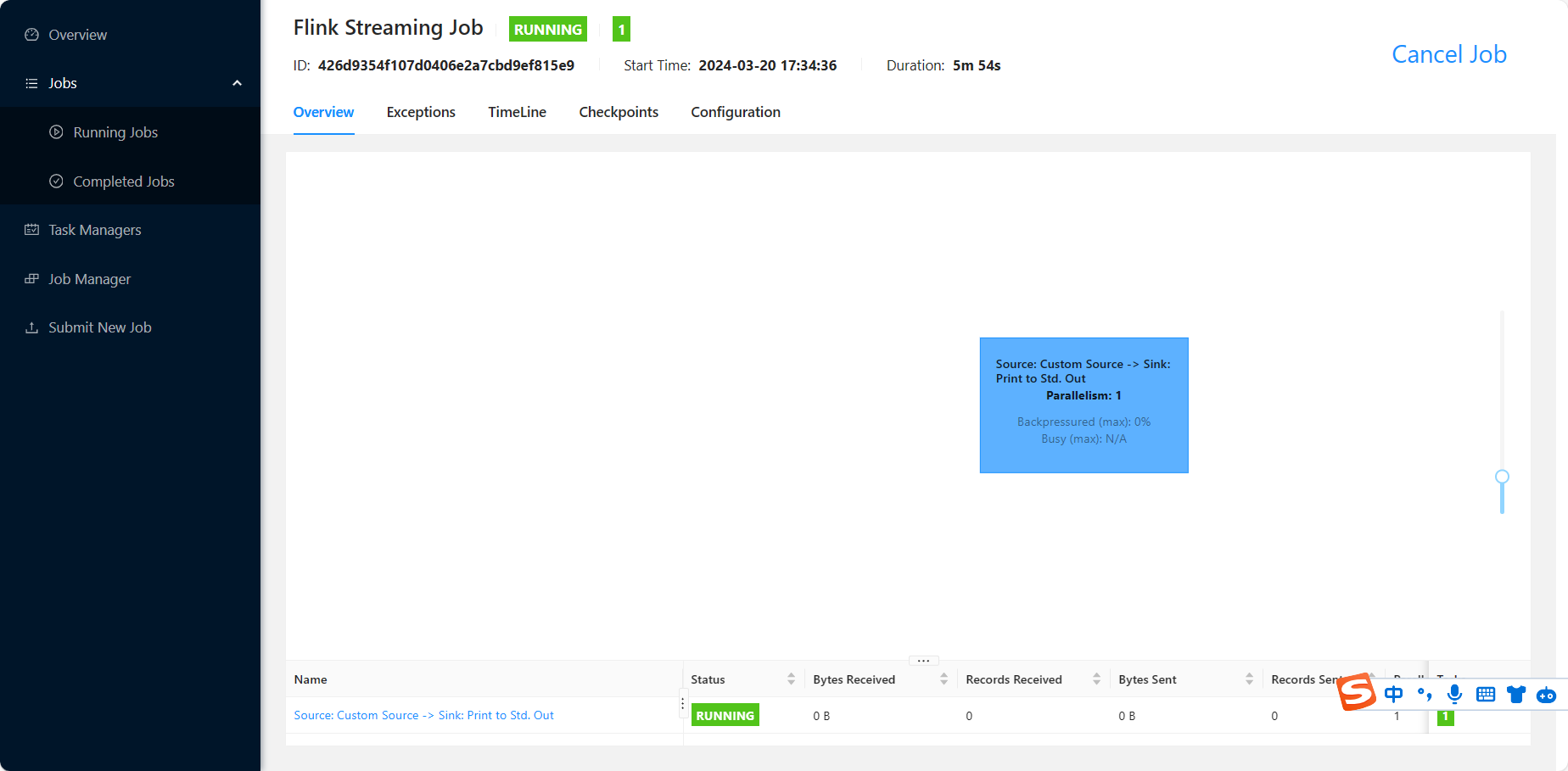

In Flink CDC, I ran the Oracle CDC demo from the official website. Why can't it read any data from Oracle?

Legacy Source Thread - Source: Custom Source -> Sink: Print to Std. Out (1/1)#0 17:46:13.219 WARN org.apache.kafka.connect.runtime.WorkerConfig 334 logPluginPathConfigProviderWarning - Variables cannot be used in the 'plugin.path' property, since the property is used by plugin scanning before the config providers that replace the variables are initialized. The raw value 'null' was used for plugin scanning, as opposed to the transformed value 'null', and this may cause unexpected results.

Legacy Source Thread - Source: Custom Source -> Sink: Print to Std. Out (1/1)#0 17:46:13.219 WARN org.apache.kafka.connect.runtime.WorkerConfig 316 logInternalConverterRemovalWarnings - The worker has been configured with one or more internal converter properties ([internal.key.converter, internal.value.converter]). Support for these properties was deprecated in version 2.0 and removed in version 3.0, and specifying them will have no effect. Instead, an instance of the JsonConverter with schemas.enable set to false will be used. For more information, please visit http://kafka.apache.org/documentation/#upgrade and consult the upgrade notesfor the 3.0 release.

These are the logs printed to stdout. I'm on Flink 1.14 and Flink CDC 2.4. What's going on?


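Copyright notice: The content of this article was voluntarily contributed by a real-name registered Alibaba Cloud user, and the copyright belongs to the original author. The Alibaba Cloud Developer Community does not own the copyright and assumes no corresponding legal liability. For the specific rules, see the "Alibaba Cloud Developer Community User Service Agreement" and the "Alibaba Cloud Developer Community Intellectual Property Protection Guidelines". If you find suspected plagiarism in this community, fill out the infringement complaint form to report it; once verified, the community will remove the infringing content immediately.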
