Question about offline loading with modelscope-funasr. Environment: Linux, python=3.9, torch=2.1.1, funasr=0.8.4, modelscope=1.9.4.

docs:

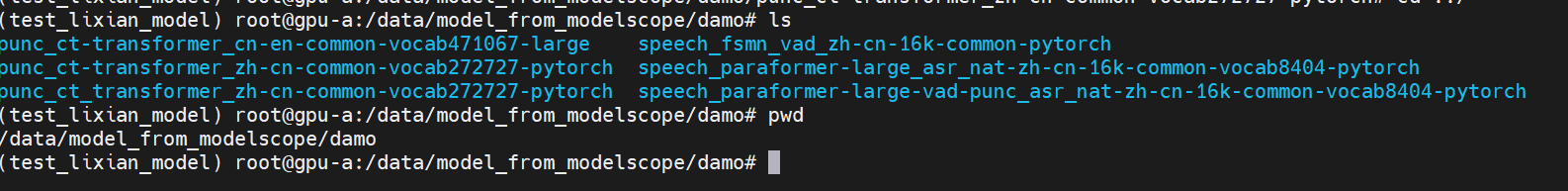

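Question: In an offline environment, the local funasr models fail to load. The program always tries to connect to the ModelScope community and then stays stuck waiting on the request. Why does this happen?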

Code and configuration

code:

    import time

    from modelscope.pipelines import pipeline
    from modelscope.utils.constant import Tasks

    inference_pipeline = pipeline(
        task=Tasks.auto_speech_recognition,
        model='/data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch',
        vad_model='/data/model_from_modelscope/damo/speech_fsmn_vad_zh-cn-16k-common-pytorch',
        punc_model='/data/model_from_modelscope/damo/punc_ct-transformer_cn-en-common-vocab471067-large',
    )

    start_time = time.time()
    wav_name = "./2023110200000949.wav"
    rec_result = inference_pipeline(audio_in=wav_name)
    end_time = time.time()
    print("Elapsed time", end_time - start_time)

The model configuration files are as follows.

In speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch/configuration.json, the model paths were changed as follows:

    {
        "framework": "pytorch",
        "task": "auto-speech-recognition",
        "model": {
            "type": "generic-asr",
            "am_model_name": "model.pb",
            "model_config": {
                "type": "pytorch",
                "code_base": "funasr",
                "mode": "paraformer",
                "lang": "zh-cn",
                "batch_size": 1,
                "am_model_config": "config.yaml",
                "asr_model_config": "decoding.yaml",
                "mvn_file": "am.mvn",
                "model": "/data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch",
                "vad_model": "/data/model_from_modelscope/damo/speech_fsmn_vad_zh-cn-16k-common-pytorch",
                "punc_model": "/data/model_from_modelscope/damo/punc_ct-transformer_zh-cn-common-vocab272727-pytorch"
            }
        },
        "pipeline": {
            "type": "asr-inference"
        }
    }

configuration.json in the VAD model path:

    {
        "framework": "pytorch",
        "task": "voice-activity-detection",
        "model": {
            "type": "generic-asr",
            "am_model_name": "vad.pb",
            "model_config": {
                "type": "pytorch",
                "code_base": "funasr",
                "mode": "offline",
                "lang": "zh-cn",
                "batch_size": 1,
                "vad_model_name": "vad.pb",
                "vad_model_config": "vad.yaml",
                "vad_mvn_file": "vad.mvn",
                "model": "/data/model_from_modelscope/damo/speech_fsmn_vad_zh-cn-16k-common-pytorch"
            }
        },
        "pipeline": {
            "type": "vad-inference"
        }
    }

configuration.json for the punc_ct model:

    {
        "framework": "pytorch",
        "task": "punctuation",
        "model": {
            "type": "generic-punc",
            "punc_model_name": "punc.pb",
            "punc_model_config": {
                "type": "pytorch",
                "code_base": "funasr",
                "mode": "punc",
                "lang": "zh-cn",
                "batch_size": 1,
                "punc_config": "punc.yaml",
                "model": "/data/model_from_modelscope/damo/punc_ct-transformer_zh-cn-common-vocab272727-pytorch"
            }
        },
        "pipeline": {
            "type": "punc-inference"
        }
    }

The run output is as follows:

2023-12-12 09:14:48,215 - modelscope - INFO - PyTorch version 2.1.1 Found.

2023-12-12 09:14:48,215 - modelscope - INFO - Loading ast index from /root/.cache/modelscope/ast_indexer

2023-12-12 09:14:48,298 - modelscope - INFO - Loading done! Current index file version is 1.9.4, with md5 9d02da553bf8b162382a09ae52dc0aef and a total number of 945 components indexed

2023-12-12 09:14:48,935 - modelscope - INFO - initiate model from /data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch

2023-12-12 09:14:48,935 - modelscope - INFO - initiate model from location /data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch.

2023-12-12 09:14:48,936 - modelscope - INFO - initialize model from /data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch

2023-12-12 09:14:48,939 - modelscope - WARNING - No preprocessor field found in cfg.

2023-12-12 09:14:48,939 - modelscope - WARNING - No val key and type key found in preprocessor domain of configuration.json file.

2023-12-12 09:14:48,939 - modelscope - WARNING - Cannot find available config to build preprocessor at mode inference, current config: {'model_dir': '/data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch'}. trying to build by task and model information.

2023-12-12 09:14:48,939 - modelscope - WARNING - No preprocessor key ('generic-asr', 'auto-speech-recognition') found in PREPROCESSOR_MAP, skip building preprocessor.

After these warnings the program hangs and never proceeds. I have just updated funasr to 0.8.7 and the problem is the same.

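Based on the information you provided, the core problem is that, when loading local models in an offline environment, the program keeps trying to connect to the ModelScope community and stalls waiting on the request. Possible causes and solutions follow.

When initializing a model, the ModelScope SDK by default tries to fetch model metadata or configuration files (such as configuration.json) from the remote community. Even when you specify a local path, the SDK may still try to contact the remote service to verify model integrity or fill in missing configuration.

Set environment variables to disable remote access

In an offline environment, you can try setting the environment variables MODELSCOPE_CACHE and MODELSCOPE_DISABLE_REMOTE to make the SDK use the local cache and avoid remote requests. Confirm that your installed modelscope version actually reads MODELSCOPE_DISABLE_REMOTE, since an unrecognized environment variable is silently ignored: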

export MODELSCOPE_CACHE=/data/model_from_modelscope

export MODELSCOPE_DISABLE_REMOTE=1

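If the SDK honors these variables, it should no longer try to reach the remote service.

Check the integrity of the local models

Make sure each local model directory contains all required files (such as config.yaml, decoding.yaml, am.mvn, vad.mvn) and that the paths match those in configuration.json.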
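The integrity check above can be sketched in Python. This is a minimal sketch under stated assumptions: `check_model_dir` and `PATH_KEYS` are hypothetical names, not part of modelscope or funasr, and the list of required files is whatever you pass in. It verifies that a model directory exists, that the expected files are present, and that every absolute path stored in its configuration.json points to an existing directory. Note that the pipeline call in the question passes punc_ct-transformer_cn-en-common-vocab471067-large as punc_model while the configuration files reference punc_ct-transformer_zh-cn-common-vocab272727-pytorch, so it is worth confirming which of the two directories actually exists on disk.

```python
import json
from pathlib import Path

# Keys that hold model locations in the configuration.json files shown in the question.
PATH_KEYS = ("model", "vad_model", "punc_model")


def check_model_dir(model_dir, required_files=("configuration.json",)):
    """Return a list of problems found in a local model directory (empty if none)."""
    root = Path(model_dir)
    if not root.is_dir():
        return [f"model directory does not exist: {root}"]

    # Report every expected file that is absent from the directory.
    problems = [
        f"missing file: {root / name}"
        for name in required_files
        if not (root / name).is_file()
    ]

    config_path = root / "configuration.json"
    if config_path.is_file():
        config = json.loads(config_path.read_text(encoding="utf-8"))
        model_section = config.get("model", {})
        if isinstance(model_section, dict):
            # Nested sections such as "model_config" or "punc_model_config" carry the paths.
            for section in model_section.values():
                if not isinstance(section, dict):
                    continue
                for key in PATH_KEYS:
                    value = section.get(key)
                    if (isinstance(value, str) and Path(value).is_absolute()
                            and not Path(value).is_dir()):
                        problems.append(f"{key} points to a missing directory: {value}")
    return problems
```

For example, calling `check_model_dir(path, required_files=("configuration.json", "config.yaml", "am.mvn"))` on each of the three directories passed to `pipeline(...)` and printing the returned list shows at a glance whether any path is wrong before the SDK is involved.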

The log repeatedly shows the following warnings:

WARNING - No preprocessor field found in cfg.

WARNING - No val key and type key found in preprocessor domain of configuration.json file.

WARNING - Cannot find available config to build preprocessor at mode inference.

This indicates that configuration.json has no preprocessor field, so the SDK cannot build a preprocessor from the configuration and, as the last warning in the log says, skips building it.

Add a preprocessor configuration

Add a preprocessor field to configuration.json, for example:

    "preprocessor": {
        "type": "generic-asr",
        "mode": "inference",
        "lang": "zh-cn",
        "batch_size": 1,
        "am_model_config": "config.yaml",
        "asr_model_config": "decoding.yaml",
        "mvn_file": "am.mvn"
    }

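Make sure the preprocessor field matches what the model actually requires.

Verify the preprocessor mapping

If the warning persists, check whether PREPROCESSOR_MAP contains a mapping for the current task and model type. If it is missing, you can register it manually: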

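    # Assumes PREPROCESSOR_MAP is a plain dict keyed by (model type, task); verify in your modelscope version.
    from modelscope.preprocessors.base import PREPROCESSOR_MAP
    # 'your-custom-preprocessor' is a hypothetical registered preprocessor name.
    PREPROCESSOR_MAP[('generic-asr', 'auto-speech-recognition')] = 'your-custom-preprocessor'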

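You mentioned that you updated FunASR to 0.8.7 and the problem remains. This may be because the FunASR and ModelScope SDK versions are not fully compatible.

Downgrade or upgrade the dependencies

According to the knowledge base, the following version combination is recommended:

funasr==0.8.4

modelscope==1.9.4

torch==2.1.1

If the problem is still not resolved, you can try upgrading to the latest versions: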

pip install --upgrade funasr modelscope
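Before changing versions, it helps to confirm what is actually installed in the environment that runs the script. A minimal sketch using the standard library's `importlib.metadata` follows; `installed_versions` is a hypothetical helper name, and the package list simply mirrors the three packages named above.

```python
from importlib import metadata


def installed_versions(packages=("funasr", "modelscope", "torch")):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}=={version}" if version else f"{name} is not installed")
```

Running this with the same interpreter that runs the pipeline avoids being misled by a `pip` that belongs to a different Python environment.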

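Check FunASR's offline support

Some FunASR features may require online services. Confirm that the model you are using fully supports offline loading. If it does not, you can try another model (such as speech_paraformer-large_asr_nat-zh-cn-16k-common-vocab8404-pytorch).

Even in an offline environment, some system settings (such as a proxy or a firewall) may cause the SDK to attempt to reach the remote service.

Disable the proxy

Check whether a proxy is configured and temporarily disable it: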

unset http_proxy

unset https_proxy
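The `unset` commands above only clear the lowercase variables in the current shell. Many HTTP clients also honor the uppercase forms, so it can be useful to see what the Python process itself inherits. The sketch below is an illustration only: `active_proxy_settings` and `PROXY_VARS` are hypothetical names, and the list of variables is a common convention rather than something the ModelScope SDK documents.

```python
import os

# Common proxy variables; both lowercase and uppercase spellings are checked.
PROXY_VARS = ("http_proxy", "https_proxy", "all_proxy")


def active_proxy_settings(environ=None):
    """Return the proxy-related variables that are set to a non-empty value."""
    environ = os.environ if environ is None else environ
    found = {}
    for base in PROXY_VARS:
        for name in (base, base.upper()):
            if environ.get(name):
                found[name] = environ[name]
    return found
```

Printing `active_proxy_settings()` at the top of the script shows whether any proxy setting survives into the process that builds the pipeline.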

Verify network isolation

Make sure the runtime environment is fully isolated, with no network connectivity at all.
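One way to check isolation from inside Python is to attempt a TCP connection with a short timeout. In the sketch below, `can_reach` is a hypothetical helper built on the standard `socket` module. A quick `False` suggests the host fails fast, whereas a call that takes the full timeout suggests packets are being dropped silently, which is the kind of situation in which a client without a timeout can appear to hang.

```python
import socket


def can_reach(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return False
```

For example, `can_reach("www.modelscope.cn")` probes the hub; the host name here is an assumption, so substitute whatever endpoint your SDK version is configured to use.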

To investigate further, you can add debugging output to the code to identify exactly where it gets stuck.

    import os

    # Set the environment variables before importing modelscope so they are visible at import time.
    # MODELSCOPE_DISABLE_REMOTE is the variable suggested in this answer and is unverified.
    os.environ['MODELSCOPE_CACHE'] = '/data/model_from_modelscope'
    os.environ['MODELSCOPE_DISABLE_REMOTE'] = '1'

    from modelscope.pipelines import pipeline
    from modelscope.utils.constant import Tasks

    # Initialize the inference pipeline
    try:
        inference_pipeline = pipeline(
            task=Tasks.auto_speech_recognition,
            model='/data/model_from_modelscope/damo/speech_paraformer-large-vad-punc_asr_nat-zh-cn-16k-common-vocab8404-pytorch',
            vad_model='/data/model_from_modelscope/damo/speech_fsmn_vad_zh-cn-16k-common-pytorch',
            punc_model='/data/model_from_modelscope/damo/punc_ct-transformer_cn-en-common-vocab471067-large',
        )
        print("Pipeline initialized successfully.")
    except Exception as e:
        print(f"Error during pipeline initialization: {e}")
        raise  # inference_pipeline is undefined if initialization failed, so stop here

    # Test inference
    wav_name = "./2023110200000949.wav"
    try:
        rec_result = inference_pipeline(audio_in=wav_name)
        print("Recognition result:", rec_result)
    except Exception as e:
        print(f"Error during inference: {e}")
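A `try`/`except` block only reports exceptions, so it stays silent when the program hangs. As a complementary sketch, the standard library's `faulthandler` module can dump the Python stack of every thread once a block has run longer than a chosen time, which shows the call that is blocking. `hang_watchdog` is a hypothetical helper name, and the 30-second default is arbitrary.

```python
import faulthandler
import sys
from contextlib import contextmanager


@contextmanager
def hang_watchdog(seconds=30, file=None):
    """Dump all thread stacks every `seconds` while the wrapped block is still running."""
    target = sys.stderr if file is None else file
    faulthandler.dump_traceback_later(seconds, repeat=True, file=target)
    try:
        yield
    finally:
        # Stop the watchdog as soon as the block finishes or raises.
        faulthandler.cancel_dump_traceback_later()


# Usage sketch (commented out because it needs modelscope and the local models):
# with hang_watchdog(30):
#     inference_pipeline = pipeline(task=Tasks.auto_speech_recognition, model=...)
```

If the dumped stack ends inside an HTTP or socket call, the hang is a network request. The frames above it show which model argument triggered the request.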

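With the steps above, you can narrow down and resolve the offline model loading problem step by step. The key points are:

1. Disable remote access: set the environment variable MODELSCOPE_DISABLE_REMOTE, if your modelscope version supports it.

2. Add a preprocessor configuration: make sure configuration.json is complete.

3. Verify version compatibility: use the recommended combination of dependency versions.

4. Check network isolation: make sure no proxy or firewall interferes.

5. Debug the code: locate the exact point of failure.

If the problem is still not resolved, please provide more log output for further analysis.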
