Log:

2017-11-22 19:38:24,939 INFO [pool-8-thread-198]: metastore.HiveMetaStore (HiveMetaStore.java:create_table_core(1447)) - create_table_core default.tests

2017-11-22 19:38:24,939 INFO [pool-8-thread-198]: metastore.HiveMetaStore (HiveMetaStore.java:create_table_core(1478)) - create_table_core preEvent default.tests

2017-11-22 19:38:24,944 INFO [pool-8-thread-198]: metastore.HiveMetaStore (HiveMetaStore.java:create_table_core(1554)) - create_table_core rdbms listeners default.tests

2017-11-22 19:38:24,944 INFO [pool-8-thread-198]: metastore.HiveMetaStore (HiveMetaStore.java:create_table_core(1561)) - create_table_core rdbms listeners done default.tests

2017-11-22 19:38:24,945 ERROR [pool-8-thread-198]: metastore.RetryingHMSHandler (RetryingHMSHandler.java:invokeInternal(199)) - MetaException(message:java.io.IOException: No FileSystem for scheme: oss)

at org.apache.hadoop.hive.ql.security.authorization.AuthorizationPreEventListener.metaException(AuthorizationPreEventListener.java:411)

at org.apache.hadoop.hive.ql.security.authorization.AuthorizationPreEventListener.authorizeCreateTable(AuthorizationPreEventListener.java:272)

at org.apache.hadoop.hive.ql.security.authorization.AuthorizationPreEventListener.onEvent(AuthorizationPreEventListener.java:140)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.firePreEvent(HiveMetaStore.java:2131)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.create_table_core(HiveMetaStore.java:1479)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.create_table_with_environment_context(HiveMetaStore.java:1579)

at sun.reflect.GeneratedMethodAccessor53.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:105)

at com.sun.proxy.$Proxy17.create_table_with_environment_context(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_table_with_environment_context.getResult(ThriftHiveMetastore.java:9399)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_table_with_environment_context.getResult(ThriftHiveMetastore.java:9383)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1866)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.io.IOException: No FileSystem for scheme: oss

at org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider.hiveException(StorageBasedAuthorizationProvider.java:436)

at org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider.authorize(StorageBasedAuthorizationProvider.java:355)

at org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider.authorize(StorageBasedAuthorizationProvider.java:193)

at org.apache.hadoop.hive.ql.security.authorization.AuthorizationPreEventListener.authorizeCreateTable(AuthorizationPreEventListener.java:265)

... 23 more

Caused by: java.io.IOException: No FileSystem for scheme: oss

at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2786)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2793)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:99)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2829)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2811)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:390)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)

at org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider.checkPermissions(StorageBasedAuthorizationProvider.java:371)

at org.apache.hadoop.hive.ql.security.authorization.StorageBasedAuthorizationProvider.authorize(StorageBasedAuthorizationProvider.java:348)

... 25 more

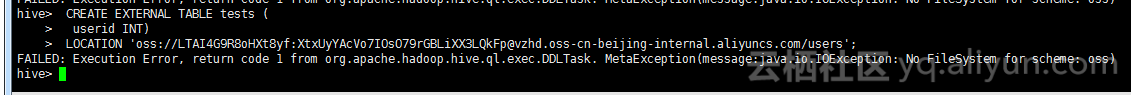

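Judging from the log, Hive fails with MetaException(message:java.io.IOException: No FileSystem for scheme: oss). The stack trace shows that the Hive Metastore, while authorizing a table creation through StorageBasedAuthorizationProvider, called Path.getFileSystem on a path with the oss scheme, and Hadoop could not find a FileSystem implementation registered for that scheme. (OSS is Alibaba Cloud Object Storage Service.)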
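To make the failure mode concrete, here is a simplified Python model of the lookup that fails in FileSystem.getFileSystemClass. It is not Hadoop code: the function name, the exception class, and the two dictionaries are illustrative assumptions. It only mirrors the general idea that Hadoop first consults the fs.<scheme>.impl property, then falls back to implementations discovered on the classpath, and raises the error seen in the log when neither yields a class.

```python
class NoFileSystemError(Exception):
    """Stands in for the java.io.IOException seen in the log."""


def resolve_filesystem_class(scheme, conf, discovered):
    """Return the implementation class name registered for a URI scheme.

    conf       -- dict modelling configuration properties (e.g. core-site.xml)
    discovered -- dict of scheme -> class name, modelling implementations
                  found on the classpath
    """
    # An explicit fs.<scheme>.impl property takes precedence.
    impl = conf.get("fs.%s.impl" % scheme)
    if impl is None:
        # Otherwise fall back to whatever the classpath provides.
        impl = discovered.get(scheme)
    if impl is None:
        raise NoFileSystemError("No FileSystem for scheme: " + scheme)
    return impl
```

In this model, the error in the log corresponds to calling resolve_filesystem_class("oss", {}, {...}) when neither mapping has an entry for oss, which is why the fix involves both a JAR on the classpath and a configuration entry.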

To fix this, Hive must be able to recognize and handle the OSS file system, which usually means configuring the corresponding file system client for Hadoop. Suggested steps:

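Install the Alibaba Cloud Hadoop plugin: make sure your Hadoop environment includes the Alibaba Cloud Hadoop plugin, in particular hadoop-oss.jar, which provides the OSS file system implementation. The JAR must be on the classpath of the Hive Metastore process, since that is where the exception in the log is thrown. If you use the E-MapReduce service, the plugin should already be included. On a self-managed cluster, you need to add it manually.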
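One way to check whether a given JAR really ships the file system class is to look inside it, since a JAR is a zip archive. The sketch below is a minimal helper under that assumption. The function names are hypothetical, and the class name you pass in should be whatever you intend to set as fs.oss.impl.

```python
import zipfile


def jar_contains_class(jar_path, class_name):
    """Return True if the JAR has a .class entry for the fully qualified name."""
    # com.example.Foo is stored in the archive as com/example/Foo.class
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()


def find_jars_with_class(jar_paths, class_name):
    """Return the subset of jar_paths that contain class_name."""
    return [p for p in jar_paths if jar_contains_class(p, class_name)]
```

For example, find_jars_with_class(list_of_jars_in_hive_lib, "<your fs.oss.impl class>") returning an empty list would suggest the implementation is not on that classpath. The argument names here are placeholders, not real paths.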

Configure core-site.xml: add the OSS file system properties to Hadoop's core-site.xml configuration file. For example:

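<property>

<name>fs.oss.impl</name>

<value>com.aliyun.oss.hadoop.OSSFileSystem</value>

</property>

<property>

<name>fs.oss.endpoint</name>

<value>your-oss-endpoint</value>

</property>

<property>

<name>fs.oss.accessKeyId</name>

<value>your-access-key-id</value>

</property>

<property>

<name>fs.oss.accessKeySecret</name>

<value>your-access-key-secret</value>

</property>

Replace your-oss-endpoint, your-access-key-id, and your-access-key-secret with your actual values. The fs.oss.impl value must match the class that is actually shipped in the JAR you installed. For example, the hadoop-aliyun module in Apache Hadoop ships org.apache.hadoop.fs.aliyun.oss.AliyunOSSFileSystem, so verify the class name against your JAR before relying on the value shown here.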
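A quick sanity check of the edited file can catch a missing or empty property before restarting anything. The following is a minimal sketch using only the Python standard library. The function names and the REQUIRED tuple are assumptions made for illustration, based on the four properties listed in this answer, and it expects the full core-site.xml text, which has a single root element wrapping the property entries.

```python
import xml.etree.ElementTree as ET

# The four properties named in this answer.
REQUIRED = (
    "fs.oss.impl",
    "fs.oss.endpoint",
    "fs.oss.accessKeyId",
    "fs.oss.accessKeySecret",
)


def read_properties(xml_text):
    """Parse Hadoop-style XML and return a dict of property name -> value."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name:
            props[name.strip()] = (value or "").strip()
    return props


def missing_oss_properties(xml_text):
    """Return the required property names that are absent or empty."""
    props = read_properties(xml_text)
    return [key for key in REQUIRED if not props.get(key)]
```

Calling missing_oss_properties on the contents of core-site.xml returns an empty list when all four entries are present and non-empty. It reports only property names, so the secret value is never printed.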

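Restart the Hive services: after making these changes, restart Hive so the new configuration takes effect. This includes the Metastore, because the stack trace in the log comes from the Metastore process (HiveMetaStore$HMSHandler).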

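Check network and permissions: make sure the machine running the Hive services can reach OSS, and that the AccessKeyId and AccessKeySecret in use have sufficient permissions to read and write the target OSS bucket.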

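After these steps, Hive should be able to recognize OSS and interact with it correctly. If the problem persists, check the Hadoop and Hive log files for more detailed error messages to narrow down the cause.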