Paper: “Why Should I Trust You?”: Explaining the Predictions of Any Classifier (Translation and Interpretation)

Contents

Desired Characteristics for Explainers

3. LOCAL INTERPRETABLE MODEL-AGNOSTIC EXPLANATIONS

3.1 Interpretable Data Representations

3.2 Fidelity-Interpretability Trade-off

3.3 Sampling for Local Exploration

3.4 Sparse Linear Explanations

3.6 Example 2: Deep networks for images

4. SUBMODULAR PICK FOR EXPLAINING MODELS

5.2 Are explanations faithful to the model?

5.3 Should I trust this prediction?

5.4 Can I trust this model?

6. EVALUATION WITH HUMAN SUBJECTS

6.2 Can users select the best classifier?

6.3 Can non-experts improve a classifier?

6.4 Do explanations lead to insights?

8. CONCLUSION AND FUTURE WORK

Source: arXiv:1602.04938v3 [cs.LG], 9 August 2016. Subjects: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Machine Learning (stat.ML). Cite as: arXiv:1602.04938 [cs.LG] (or arXiv:1602.04938v3 [cs.LG] for this version)

Authors: Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin

Original text:

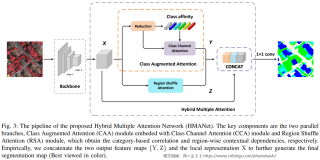

ABSTRACT

Despite widespread adoption, machine learning models remain mostly black boxes. Understanding the reasons behind predictions is, however, quite important in assessing trust, which is fundamental if one plans to take action based on a prediction, or when choosing whether to deploy a new model. Such understanding also provides insights into the model, which can be used to transform an untrustworthy model or prediction into a trustworthy one. In this work, we propose LIME, a novel explanation technique that explains the predictions of any classifier in an interpretable and faithful manner, by learning an interpretable model locally around the prediction. We also propose a method to explain models by presenting representative individual predictions and their explanations in a non-redundant way, framing the task as a submodular optimization problem. We demonstrate the flexibility of these methods by explaining different models for text (e.g. random forests) and image classification (e.g. neural networks). We show the utility of explanations via novel experiments, both simulated and with human subjects, on various scenarios that require trust: deciding if one should trust a prediction, choosing between models, improving an untrustworthy classifier, and identifying why a classifier should not be trusted.

1. INTRODUCTION

Machine learning is at the core of many recent advances in science and technology. Unfortunately, the important role of humans is an oft-overlooked aspect in the field. Whether humans are directly using machine learning classifiers as tools, or are deploying models within other products, a vital concern remains: if the users do not trust a model or a prediction, they will not use it. It is important to differentiate between two different (but related) definitions of trust: (1) trusting a prediction, i.e. whether a user trusts an individual prediction sufficiently to take some action based on it, and (2) trusting a model, i.e. whether the user trusts a model to behave in reasonable ways if deployed. Both are directly impacted by how much the human understands a model’s behaviour, as opposed to seeing it as a black box.

Determining trust in individual predictions is an important problem when the model is used for decision making. When using machine learning for medical diagnosis [6] or terrorism detection, for example, predictions cannot be acted upon on blind faith, as the consequences may be catastrophic. Apart from trusting individual predictions, there is also a need to evaluate the model as a whole before deploying it “in the wild”. To make this decision, users need to be confident that the model will perform well on real-world data, according to the metrics of interest. Currently, models are evaluated using accuracy metrics on an available validation dataset. However, real-world data is often significantly different, and further, the evaluation metric may not be indicative of the product’s goal. Inspecting individual predictions and their explanations is a worthwhile solution, in addition to such metrics. In this case, it is important to aid users by suggesting which instances to inspect, especially for large datasets.

In this paper, we propose providing explanations for individual predictions as a solution to the “trusting a prediction” problem, and selecting multiple such predictions (and explanations) as a solution to the “trusting the model” problem. Our main contributions are summarized as follows.

(1) LIME, an algorithm that can explain the predictions of any classifier or regressor in a faithful way, by approximating it locally with an interpretable model.

(2) SP-LIME, a method that selects a set of representative instances with explanations to address the “trusting the model” problem, via submodular optimization.

(3) Comprehensive evaluation with simulated and human subjects, where we measure the impact of explanations on trust and associated tasks.

In our experiments, non-experts using LIME are able to pick which classifier from a pair generalizes better in the real world. Further, they are able to greatly improve an untrustworthy classifier trained on 20 newsgroups, by doing feature engineering using LIME. We also show how understanding the predictions of a neural network on images helps practitioners know when and why they should not trust a model.

2. THE CASE FOR EXPLANATIONS

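The left side of Figure 1 shows a medical diagnosis model that, given some basic symptoms of a patient, outputs the diagnosis "Flu". After processing by the explainer LIME, we obtain, as shown under the magnifying glass, LIME's explanation of the reasons behind the model's diagnosis. Green marks features that support this result, and red marks features that count against it. The final decision is handed back to the doctor, who can use these "explanations" to judge whether to accept the machine learning prediction.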

Figure 1: Explaining individual predictions. A model predicts that a patient has the flu, and LIME highlights the symptoms in the patient's history that led to the prediction. Sneeze and headache are portrayed as contributing to the “flu” prediction, while “no fatigue” is evidence against it. With these, a doctor can make an informed decision about whether to trust the model's prediction.

By “explaining a prediction”, we mean presenting textual or visual artifacts that provide qualitative understanding of the relationship between the instance’s components (e.g. words in text, patches in an image) and the model’s prediction. We argue that explaining predictions is an important aspect in getting humans to trust and use machine learning effectively, if the explanations are faithful and intelligible.

The process of explaining individual predictions is illustrated in Figure 1. It is clear that a doctor is much better positioned to make a decision with the help of a model if intelligible explanations are provided. In this case, an explanation is a small list of symptoms with relative weights – symptoms that either contribute to the prediction (in green) or are evidence against it (in red). Humans usually have prior knowledge about the application domain, which they can use to accept (trust) or reject a prediction if they understand the reasoning behind it. It has been observed, for example, that providing explanations can increase the acceptance of movie recommendations [12] and other automated systems [8].

Every machine learning application also requires a certain measure of overall trust in the model. Development and evaluation of a classification model often consists of collecting annotated data, of which a held-out subset is used for automated evaluation. Although this is a useful pipeline for many applications, evaluation on validation data may not correspond to performance “in the wild”, as practitioners often overestimate the accuracy of their models [20], and thus trust cannot rely solely on it. Looking at examples offers an alternative method to assess truth in the model, especially if the examples are explained. We thus propose explaining several representative individual predictions of a model as a way to provide a global understanding.

There are several ways a model or its evaluation can go wrong. Data leakage, for example, defined as the unintentional leakage of signal into the training (and validation) data that would not appear when deployed [14], potentially increases accuracy. A challenging example cited by Kaufman et al. [14] is one where the patient ID was found to be heavily correlated with the target class in the training and validation data. This issue would be incredibly challenging to identify just by observing the predictions and the raw data, but much easier if explanations such as the one in Figure 1 are provided, as patient ID would be listed as an explanation for predictions. Another particularly hard to detect problem is dataset shift [5], where training data is different than test data (we give an example in the famous 20 newsgroups dataset later on). The insights given by explanations are particularly helpful in identifying what must be done to convert an untrustworthy model into a trustworthy one – for example, removing leaked data or changing the training data to avoid dataset shift.

Machine learning practitioners often have to select a model from a number of alternatives, requiring them to assess the relative trust between two or more models. In Figure 2, we show how individual prediction explanations can be used to select between models, in conjunction with accuracy. In this case, the algorithm with higher accuracy on the validation set is actually much worse, a fact that is easy to see when explanations are provided (again, due to human prior knowledge), but hard otherwise. Further, there is frequently a mismatch between the metrics that we can compute and optimize (e.g. accuracy) and the actual metrics of interest such as user engagement and retention. While we may not be able to measure such metrics, we have knowledge about how certain model behaviors can influence them. Therefore, a practitioner may wish to choose a less accurate model for content recommendation that does not place high importance in features related to “clickbait” articles (which may hurt user retention), even if exploiting such features increases the accuracy of the model in cross validation. We note that explanations are particularly useful in these (and other) scenarios if a method can produce them for any model, so that a variety of models can be compared.

Figure 2: Explaining individual predictions of competing classifiers trying to determine if a document is about “Christianity” or “Atheism”. The bar chart represents the importance given to the most relevant words, also highlighted in the text. Color indicates which class the word contributes to (green for “Christianity”, magenta for “Atheism”).

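Figure 2 shows a document classification example that decides whether an article is about “Christianity” or “Atheism”. Both classifiers predict the correct class. On closer inspection, however, Algorithm 2 bases its decision mainly on the words “Posting” and “Host”, which have little to do with Atheism itself; although its accuracy is high, it is still not trustworthy. These “explanations” therefore give us a reason to choose, or not choose, a model.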

Desired Characteristics for Explainers

We now outline a number of desired characteristics from explanation methods. An essential criterion for explanations is that they must be interpretable, i.e., provide qualitative understanding between the input variables and the response. We note that interpretability must take into account the user’s limitations. Thus, a linear model [24], a gradient vector [2] or an additive model [6] may or may not be interpretable. For example, if hundreds or thousands of features significantly contribute to a prediction, it is not reasonable to expect any user to comprehend why the prediction was made, even if individual weights can be inspected. This requirement further implies that explanations should be easy to understand, which is not necessarily true of the features used by the model, and thus the “input variables” in the explanations may need to be different than the features. Finally, we note that the notion of interpretability also depends on the target audience. Machine learning practitioners may be able to interpret small Bayesian networks, but laymen may be more comfortable with a small number of weighted features as an explanation.

Another essential criterion is local fidelity. Although it is often impossible for an explanation to be completely faithful unless it is the complete description of the model itself, for an explanation to be meaningful it must at least be locally faithful, i.e. it must correspond to how the model behaves in the vicinity of the instance being predicted. We note that local fidelity does not imply global fidelity: features that are globally important may not be important in the local context, and vice versa. While global fidelity would imply local fidelity, identifying globally faithful explanations that are interpretable remains a challenge for complex models.

While there are models that are inherently interpretable [6, 17, 26, 27], an explainer should be able to explain any model, and thus be model-agnostic (i.e. treat the original model as a black box). Apart from the fact that many state-of-the-art classifiers are not currently interpretable, this also provides flexibility to explain future classifiers.

In addition to explaining predictions, providing a global perspective is important to ascertain trust in the model. As mentioned before, accuracy may often not be a suitable metric to evaluate the model, and thus we want to explain the model. Building upon the explanations for individual predictions, we select a few explanations to present to the user, such that they are representative of the model.

3. LOCAL INTERPRETABLE MODEL-AGNOSTIC EXPLANATIONS

We now present Local Interpretable Model-agnostic Explanations (LIME). The overall goal of LIME is to identify an interpretable model over the interpretable representation that is locally faithful to the classifier.

3.1 Interpretable Data Representations

Before we present the explanation system, it is important to distinguish between features and interpretable data representations. As mentioned before, interpretable explanations need to use a representation that is understandable to humans, regardless of the actual features used by the model. For example, a possible interpretable representation for text classification is a binary vector indicating the presence or absence of a word, even though the classifier may use more complex (and incomprehensible) features such as word embeddings. Likewise for image classification, an interpretable representation may be a binary vector indicating the “presence” or “absence” of a contiguous patch of similar pixels (a super-pixel), while the classifier may represent the image as a tensor with three color channels per pixel. We let $x \in \mathbb{R}^d$ be the original representation of an instance being explained, and we use $x' \in \{0, 1\}^{d'}$ to denote a binary vector for its interpretable representation.
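
For text, the mapping between the original document and its binary interpretable vector can be sketched as follows. This is a minimal illustration, assuming naive whitespace tokenization and assuming that setting a bit to 0 means deleting every occurrence of that word from the raw document (which the classifier would then featurize however it likes). The helper names `to_interpretable` and `from_interpretable` are hypothetical, not part of any LIME library.

```python
def to_interpretable(text):
    """Return the distinct words of `text` (the interpretable components)
    and x', the all-ones binary vector saying every word is present."""
    vocab = []
    for token in text.split():            # naive whitespace tokenization
        if token not in vocab:
            vocab.append(token)
    x_prime = [1] * len(vocab)
    return vocab, x_prime


def from_interpretable(z_prime, vocab, text):
    """Map a binary vector z' back to a raw document by deleting every
    occurrence of the words whose bit is 0."""
    kept = {word for word, bit in zip(vocab, z_prime) if bit == 1}
    return " ".join(t for t in text.split() if t in kept)


doc = "sneeze headache sneeze weight"
vocab, x_prime = to_interpretable(doc)
print(vocab)                                     # ['sneeze', 'headache', 'weight']
print(from_interpretable([1, 0, 1], vocab, doc))  # sneeze sneeze weight
```

Note that $x'$ lives in the small word-presence space while the classifier still receives raw text, so the model's own features never have to be interpretable.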

3.2 Fidelity-Interpretability Trade-off

Formally, we define an explanation as a model $g \in G$, where $G$ is a class of potentially interpretable models, such as linear models, decision trees, or falling rule lists [27], i.e. a model $g \in G$ can be readily presented to the user with visual or textual artifacts. The domain of $g$ is $\{0, 1\}^{d'}$, i.e. $g$ acts over absence/presence of the interpretable components. As not every $g \in G$ may be simple enough to be interpretable, we let $\Omega(g)$ be a measure of complexity (as opposed to interpretability) of the explanation $g \in G$. For example, for decision trees $\Omega(g)$ may be the depth of the tree, while for linear models, $\Omega(g)$ may be the number of non-zero weights.
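
The two example complexity measures can be written out in a few lines. This sketch assumes a linear explanation is stored as a plain list of weights and a decision tree as nested tuples `(feature, left, right)` whose leaves are class labels; both encodings and the function names are hypothetical choices for illustration.

```python
def omega_linear(weights):
    """Complexity of a linear explanation: number of non-zero weights."""
    return sum(1 for w in weights if w != 0)


def omega_tree(tree):
    """Complexity of a decision tree: its depth.
    Internal nodes are (feature, left_subtree, right_subtree); leaves are labels."""
    if not isinstance(tree, tuple):
        return 0                               # a leaf adds no depth
    _, left, right = tree
    return 1 + max(omega_tree(left), omega_tree(right))


print(omega_linear([0.0, 0.7, 0.0, -0.2]))                              # 2
print(omega_tree(("sneeze", ("headache", "flu", "cold"), "healthy")))   # 2
```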

Let the model being explained be denoted $f: \mathbb{R}^d \to \mathbb{R}$. In classification, $f(x)$ is the probability (or a binary indicator) that $x$ belongs to a certain class. We further use $\pi_x(z)$ as a proximity measure between an instance $z$ and $x$, so as to define locality around $x$. Finally, let $\mathcal{L}(f, g, \pi_x)$ be a measure of how unfaithful $g$ is in approximating $f$ in the locality defined by $\pi_x$. In order to ensure both interpretability and local fidelity, we must minimize $\mathcal{L}(f, g, \pi_x)$ while having $\Omega(g)$ be low enough to be interpretable by humans. The explanation produced by LIME is obtained by the following:

$$\xi(x) = \operatorname*{argmin}_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g) \qquad (1)$$

This formulation can be used with different explanation families $G$, fidelity functions $\mathcal{L}$, and complexity measures $\Omega$. Here we focus on sparse linear models as explanations, and on performing the search using perturbations.
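
To make the trade-off in this objective concrete, the sketch below brute-forces the argmin over a small, finite list of candidate linear explanations instead of the whole family $G$. It assumes a proximity-weighted squared error for $\mathcal{L}$ and a "no more than $k$ non-zero weights" penalty for $\Omega$ (the choices the paper settles on in Section 3.4); the sample triples, the candidate list, and the function names are invented for illustration.

```python
import math


def weighted_loss(samples, g):
    """L(f, g, pi_x) as a proximity-weighted squared error.
    Each sample is a triple (z_prime, f_of_z, pi_x_of_z)."""
    return sum(w * (fz - g(zp)) ** 2 for zp, fz, w in samples)


def k_sparsity_penalty(weights, k):
    """Omega(g): 0 if g uses at most k components, infinity otherwise."""
    return math.inf if sum(1 for w in weights if w != 0) > k else 0.0


def linear_model(weights):
    """g(z') = w_g . z' for one candidate weight vector."""
    return lambda zp: sum(w * b for w, b in zip(weights, zp))


def explain(samples, candidates, k):
    """xi(x): the candidate minimising L(f, g, pi_x) + Omega(g)."""
    return min(candidates,
               key=lambda ws: weighted_loss(samples, linear_model(ws))
               + k_sparsity_penalty(ws, k))


# Toy neighbourhood of x: f depends only on the first interpretable component.
samples = [([1, 1], 0.9, 1.0), ([1, 0], 0.9, 0.6), ([0, 1], 0.1, 0.6)]
candidates = [[0.9, 0.0], [0.5, 0.4], [0.8, 0.1]]
print(explain(samples, candidates, k=1))   # [0.9, 0.0]
```

With `k=1`, the two candidates that use both components are ruled out by the infinite penalty, regardless of how well they fit.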

3.3 Sampling for Local Exploration

We want to minimize the locality-aware loss $\mathcal{L}(f, g, \pi_x)$ without making any assumptions about $f$, since we want the explainer to be model-agnostic. Thus, in order to learn the local behavior of $f$ as the interpretable inputs vary, we approximate $\mathcal{L}(f, g, \pi_x)$ by drawing samples, weighted by $\pi_x$. We sample instances around $x'$ by drawing nonzero elements of $x'$ uniformly at random (where the number of such draws is also uniformly sampled). Given a perturbed sample $z' \in \{0, 1\}^{d'}$ (which contains a fraction of the nonzero elements of $x'$), we recover the sample in the original representation $z \in \mathbb{R}^d$ and obtain $f(z)$, which is used as a label for the explanation model. Given this dataset $Z$ of perturbed samples with the associated labels, we optimize Eq. (1) to get an explanation $\xi(x)$.

The primary intuition behind LIME is presented in Figure 3, where we sample instances both in the vicinity of $x$ (which have a high weight due to $\pi_x$) and far away from $x$ (low weight from $\pi_x$). Even though the original model may be too complex to explain globally, LIME presents an explanation that is locally faithful (linear in this case), where the locality is captured by $\pi_x$. It is worth noting that our method is fairly robust to sampling noise since the samples are weighted by $\pi_x$ in Eq. (1). We now present a concrete instance of this general framework.
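
The sampling step can be sketched as below, using Python's `random.Random` for reproducibility: for each sample we first draw how many of the non-zero entries of $x'$ to keep, uniformly between 1 and their total, then draw which ones, uniformly without replacement. The lower bound of 1 (never producing an empty sample) and the name `sample_around` are assumptions for illustration; the text above does not pin these details down.

```python
import random


def sample_around(x_prime, rng):
    """Return one perturbed z' keeping a random subset of the non-zero entries of x'."""
    nonzero = [j for j, bit in enumerate(x_prime) if bit != 0]
    if not nonzero:
        return list(x_prime)                     # nothing to perturb
    n_keep = rng.randint(1, len(nonzero))        # how many entries to keep: uniform
    kept = set(rng.sample(nonzero, n_keep))      # which entries: uniform, no repeats
    return [1 if j in kept else 0 for j in range(len(x_prime))]


rng = random.Random(0)
x_prime = [1, 1, 0, 1, 1]
Z_prime = [sample_around(x_prime, rng) for _ in range(5)]
for z_prime in Z_prime:
    print(z_prime)
```

Because the count is drawn first, samples that keep only a few components (far from $x$) are as likely as samples that keep almost all of them (close to $x$); the kernel $\pi_x$ is what later down-weights the distant ones.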

3.4 Sparse Linear Explanations

For the rest of this paper, we let $G$ be the class of linear models, such that $g(z') = w_g \cdot z'$. We use the locally weighted square loss as $\mathcal{L}$, as defined in Eq. (2), where we let $\pi_x(z) = \exp(-D(x, z)^2 / \sigma^2)$ be an exponential kernel defined on some distance function $D$ (e.g. cosine distance for text, $L_2$ distance for images) with width $\sigma$.

$$\mathcal{L}(f, g, \pi_x) = \sum_{z, z' \in Z} \pi_x(z) \left( f(z) - g(z') \right)^2 \qquad (2)$$
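
The exponential kernel and the locally weighted square loss of Eq. (2) translate almost literally into code. This sketch assumes cosine distance on plain Python vectors (the choice suggested for text) and leaves the width `sigma` to the caller; the value 0.25 used below is arbitrary, not a recommendation from the paper. Cosine distance is undefined for an all-zero vector, so the sketch assumes neither input is all zeros.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity of two vectors (neither may be all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def exponential_kernel(x, z, sigma):
    """pi_x(z) = exp(-D(x, z)^2 / sigma^2) with D = cosine distance."""
    return math.exp(-cosine_distance(x, z) ** 2 / sigma ** 2)


def locally_weighted_square_loss(f_values, g_values, weights):
    """Eq. (2): sum over samples of pi_x(z) * (f(z) - g(z'))^2."""
    return sum(w * (fz - gz) ** 2 for fz, gz, w in zip(f_values, g_values, weights))


x = [1, 1, 1, 1]
print(exponential_kernel(x, x, sigma=0.25))             # 1.0
print(exponential_kernel(x, [1, 0, 0, 0], sigma=0.25))  # exp(-4), about 0.018
```

A smaller `sigma` makes the kernel decay faster, so the fitted explanation is driven by an even tighter neighbourhood of $x$.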

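Here $z'$ is the interpretable version of a perturbed sample $z$ (it can be thought of as the instance with only some of its components kept). For text, the interpretable version is a binary bag-of-words vector; for images, it is a binary vector over super-pixels. $K$ is a constant set by hand that limits how many of these components an explanation may use. The LIME pseudocode is given as Algorithm 1 in the paper.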
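
The loop of Algorithm 1 can be sketched end to end for a text classifier. Several assumptions apply: the black box `f` takes a raw document and returns a probability, $x'$ is all ones, the kernel uses cosine distance computed between $z'$ and $x'$, and, to keep the block short, the fit step is a simplified stand-in for K-LASSO (weighted least squares on all words, keep the $K$ largest weights, refit on those). The function names and the toy "flu" model are hypothetical.

```python
import math
import random


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= factor * m[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def weighted_fit(rows, ys, ws, cols):
    """Weighted least squares with an intercept on the columns in `cols`;
    returns the weights for `cols` (the intercept is dropped)."""
    xs = [[1.0] + [r[j] for j in cols] for r in rows]
    d = len(xs[0])
    ata = [[sum(w * x[i] * x[j] for x, w in zip(xs, ws)) for j in range(d)] for i in range(d)]
    atb = [sum(w * x[i] * y for x, y, w in zip(xs, ys, ws)) for i in range(d)]
    return solve(ata, atb)[1:]


def lime_explain_text(f, text, num_samples, k, sigma, rng):
    """Rough analogue of Algorithm 1 for a text classifier f(str) -> float."""
    words = text.split()
    vocab = sorted(set(words))
    d = len(vocab)
    Z = []                                     # (z', f(z), pi_x(z)) triples
    for _ in range(num_samples):
        kept = set(rng.sample(range(d), rng.randint(1, d)))       # perturb x'
        z_prime = [1 if j in kept else 0 for j in range(d)]
        z = " ".join(t for t in words if vocab.index(t) in kept)  # back to raw text
        dist = 1.0 - math.sqrt(sum(z_prime) / d)   # cosine distance between z' and all-ones x'
        Z.append((z_prime, f(z), math.exp(-dist ** 2 / sigma ** 2)))
    rows, ys, ws = zip(*Z)
    # Simplified stand-in for K-LASSO: fit all words, keep the k largest |w|, refit.
    full = weighted_fit(rows, ys, ws, list(range(d)))
    top = sorted(range(d), key=lambda j: -abs(full[j]))[:k]
    return dict(zip((vocab[j] for j in top), weighted_fit(rows, ys, ws, top)))


def toy_flu_model(doc):
    """Hypothetical black box: 'flu' probability driven by two symptom words."""
    tokens = doc.split()
    return 0.1 + 0.6 * ("sneeze" in tokens) + 0.2 * ("headache" in tokens)


explanation = lime_explain_text(toy_flu_model, "sneeze headache weight age",
                                num_samples=200, k=2, sigma=0.25, rng=random.Random(0))
print(explanation)
```

Because the toy model happens to be exactly linear in word presence, the explanation recovers its weights; for a real non-linear classifier, the weights only describe its behaviour near the explained document.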

For text classification, we ensure that the explanation is interpretable by letting the interpretable representation be a bag of words, and by setting a limit $K$ on the number of words, i.e. $\Omega(g) = \infty \cdot \mathbf{1}[\lVert w_g \rVert_0 > K]$. Potentially, $K$ can be adapted to be as big as the user can handle, or we could have different values of $K$ for different instances. In this paper we use a constant value for $K$, leaving the exploration of different values to future work. We use the same $\Omega$ for image classification, using “super-pixels” (computed using any standard algorithm) instead of words, such that the interpretable representation of an image is a binary vector where 1 indicates the original super-pixel and 0 indicates a grayed out super-pixel. This particular choice of $\Omega$ makes directly solving Eq. (1) intractable, but we approximate it by first selecting $K$ features with Lasso (using the regularization path [9]) and then learning the weights via least squares (a procedure we call K-LASSO in Algorithm 1). Since Algorithm 1 produces an explanation for an individual prediction, its complexity does not depend on the size of the dataset, but instead on time to compute $f(x)$ and on the number of samples $N$. In practice, explaining random forests with 1000 trees using scikit-learn (http://scikit-learn.org) on a laptop with $N = 5000$ takes under 3 seconds without any optimizations such as using GPUs or parallelization. Explaining each prediction of the Inception network [25] for image classification takes around 10 minutes.
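
The K-LASSO step can be approximated in plain Python as below. This is a sketch under stated assumptions, not the paper's exact procedure: instead of computing the exact regularization path (for example with LARS), it runs weighted coordinate-descent Lasso on a decreasing geometric grid of penalties and keeps the largest support that still has at most $K$ features, then refits those weights by weighted least squares. Weighted centring absorbs the intercept. The grid size, tolerances, and function names are illustrative choices.

```python
import itertools
import math


def weighted_center(rows, ys, ws):
    """Subtract weighted means from features and targets (absorbs the intercept)."""
    total = sum(ws)
    d = len(rows[0])
    mx = [sum(w * r[j] for r, w in zip(rows, ws)) / total for j in range(d)]
    my = sum(w * y for y, w in zip(ys, ws)) / total
    return [[r[j] - mx[j] for j in range(d)] for r in rows], [y - my for y in ys]


def lasso_cd(xc, yc, ws, lam, sweeps=50, tol=1e-10):
    """Weighted Lasso by coordinate descent on centred data:
    minimise 0.5 * sum_i w_i (y_i - x_i . beta)^2 + lam * ||beta||_1."""
    d = len(xc[0])
    col_sq = [sum(w * r[j] ** 2 for r, w in zip(xc, ws)) for j in range(d)]
    beta, resid = [0.0] * d, list(yc)
    for _ in range(sweeps):
        biggest_step = 0.0
        for j in range(d):
            if col_sq[j] == 0:
                continue
            # correlation of feature j with the residual that excludes feature j
            rho = sum(w * r[j] * (e + r[j] * beta[j]) for r, e, w in zip(xc, resid, ws))
            new = math.copysign(max(abs(rho) - lam, 0.0), rho) / col_sq[j]  # soft-threshold
            for i, r in enumerate(xc):
                resid[i] -= r[j] * (new - beta[j])
            biggest_step = max(biggest_step, abs(new - beta[j]))
            beta[j] = new
        if biggest_step < tol:
            break
    return beta


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= factor * m[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def k_lasso(rows, ys, ws, k, grid=20):
    """Pick at most k features along a decreasing Lasso penalty grid,
    then refit their weights by weighted least squares."""
    xc, yc = weighted_center(rows, ys, ws)
    d = len(xc[0])
    lam_max = max(abs(sum(w * r[j] * y for r, y, w in zip(xc, yc, ws))) for j in range(d))
    support = []
    for step in range(1, grid + 1):
        lam = lam_max * 0.01 ** (step / grid)          # geometric grid below lam_max
        beta = lasso_cd(xc, yc, ws, lam)
        active = [j for j in range(d) if beta[j] != 0.0]
        if len(active) > k:
            break
        support = active
    # Weighted normal equations restricted to the selected columns.
    ata = [[sum(w * r[a] * r[b] for r, w in zip(xc, ws)) for b in support] for a in support]
    atb = [sum(w * r[a] * y for r, y, w in zip(xc, yc, ws)) for a in support]
    return dict(zip(support, solve(ata, atb))) if support else {}


rows = [list(bits) for bits in itertools.product([0, 1], repeat=4)]
ys = [0.6 * r[0] + 0.05 * r[1] + 0.3 * r[2] for r in rows]
print(k_lasso(rows, ys, [1.0] * len(rows), k=2))
```

The Lasso pass is used only to choose which components enter; the final weights come from the unpenalised refit, so they are not shrunk toward zero.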

Any choice of interpretable representations and $G$ will have some inherent drawbacks. First, while the underlying model can be treated as a black box, certain interpretable representations will not be powerful enough to explain certain behaviors. For example, a model that predicts sepia-toned images to be retro cannot be explained by presence or absence of super-pixels. Second, our choice of $G$ (sparse linear models) means that if the underlying model is highly non-linear even in the locality of the prediction, there may not be a faithful explanation. However, we can estimate the faithfulness of the explanation on $Z$, and present this information to the user. This estimate of faithfulness can also be used for selecting an appropriate family of explanations from a set of multiple interpretable model classes, thus adapting to the given dataset and the classifier. We leave such exploration for future work, as linear explanations work quite well for multiple black-box models in our experiments.
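
One way to estimate the faithfulness of the explanation on $Z$ is a proximity-weighted coefficient of determination ($R^2$) of $g$ against $f$ over the perturbed samples. Using this particular score is an assumption for illustration; the text above does not fix a measure. Values near 1 mean the linear explanation tracks $f$ well in the neighbourhood; values near 0 or below warn the user that $f$ is too non-linear there.

```python
def weighted_r2(f_values, g_values, weights):
    """Proximity-weighted R^2 of the explanation g against the black box f on Z.
    Assumes f is not constant on Z (otherwise the denominator is zero)."""
    total = sum(weights)
    mean_f = sum(w * y for y, w in zip(f_values, weights)) / total
    ss_res = sum(w * (y - g) ** 2 for y, g, w in zip(f_values, g_values, weights))
    ss_tot = sum(w * (y - mean_f) ** 2 for y, w in zip(f_values, weights))
    return 1.0 - ss_res / ss_tot


f_vals = [0.0, 1.0, 1.0, 0.0]
print(weighted_r2(f_vals, f_vals, [1, 1, 1, 1]))                # 1.0: a perfect fit
print(weighted_r2(f_vals, [0.5, 0.5, 0.5, 0.5], [1, 1, 1, 1]))  # 0.0: no better than the mean
```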

Figure 3: Toy example to present intuition for LIME. The black-box model’s complex decision function $f$ (unknown to LIME) is represented by the blue/pink background, which cannot be approximated well by a linear model. The bold red cross is the instance being explained. LIME samples instances, gets predictions using $f$, and weighs them by the proximity to the instance being explained (represented here by size). The dashed line is the learned explanation that is locally (but not globally) faithful.

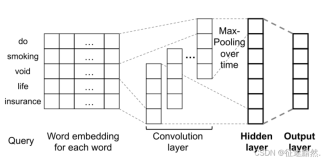

3.6 Example 2: Deep networks for images

When using sparse linear explanations for image classifiers, one may wish to just highlight the super-pixels with positive weight towards a specific class, as they give intuition as to why the model would think that class may be present. We explain the prediction of Google’s pre-trained Inception neural network [25] in this fashion on an arbitrary image (Figure 4a). Figures 4b, 4c, 4d show the super-pixel explanations for the top 3 predicted classes (with the rest of the image grayed out), having set K = 10. What the neural network picks up on for each of the classes is quite natural to humans; Figure 4b in particular provides insight as to why acoustic guitar was predicted to be electric: due to the fretboard. This kind of explanation enhances trust in the classifier (even if the top predicted class is wrong), as it shows that it is not acting in an unreasonable manner.
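
The "show only the positively weighted super-pixels" display can be sketched on a tiny image. Assumptions: the image is a 2-D list of RGB tuples, `segments` is a same-shaped 2-D list giving each pixel's super-pixel id (as a segmentation algorithm would produce), the explanation is a dict from super-pixel id to weight, and "grayed out" means replacing the pixel with a fixed gray value. All names here are hypothetical.

```python
GRAY = (128, 128, 128)


def positive_superpixel_view(image, segments, weights, k):
    """Keep the pixels of the k super-pixels with the largest positive weight;
    replace every other pixel with gray."""
    positive = sorted((s for s, w in weights.items() if w > 0),
                      key=lambda s: weights[s], reverse=True)[:k]
    keep = set(positive)
    return [[px if seg in keep else GRAY for px, seg in zip(img_row, seg_row)]
            for img_row, seg_row in zip(image, segments)]


red, blue = (255, 0, 0), (0, 0, 255)
image = [[red, red, blue],
         [red, blue, blue]]
segments = [[0, 0, 1],
            [2, 1, 1]]
weights = {0: 0.4, 1: -0.3, 2: 0.1}
for row in positive_superpixel_view(image, segments, weights, k=1):
    print(row)
```

Super-pixels with negative weight are always grayed out, even when fewer than `k` positive ones exist.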

Figure 4: Explaining an image classification prediction made by Google's Inception neural network. The top 3 classes predicted are “Electric Guitar” (p = 0.32), “Acoustic guitar” (p = 0.24) and “Labrador” (p = 0.21).

4. SUBMODULAR PICK FOR EXPLAINING MODELS

Although an explanation of a single prediction provides some understanding into the reliability of the classifier to the user, it is not sufficient to evaluate and assess trust in the model as a whole. We propose to give a global understanding of the model by explaining a set of individual instances. This approach is still model agnostic, and is complementary to computing summary statistics such as held-out accuracy. Even though explanations of multiple instances can be insightful, these instances need to be selected judiciously, since users may not have the time to examine a large number of explanations. We represent the time/patience that humans have by a budget B that denotes the number of explanations they are willing to look at in order to understand a model. Given a set of instances X, we define the pick step as the task of selecting B instances for the user to inspect.

The pick step is not dependent on the existence of explanations: one of the main purposes of tools like Modeltracker [1] and others [11] is to assist users in selecting instances themselves, and examining the raw data and predictions. However, since looking at raw data is not enough to understand predictions and get insights, the pick step should take into account the explanations that accompany each prediction. Moreover, this method should pick a diverse, representative set of explanations to show the user, i.e. non-redundant explanations that represent how the model behaves globally.

Given the explanations for a set of instances $X$ ($|X| = n$), we construct an $n \times d'$ explanation matrix $W$ that represents the local importance of the interpretable components for each instance. When using linear models as explanations, for an instance $x_i$ and explanation $g_i = \xi(x_i)$, we set $W_{ij} = |w_{g_{ij}}|$. Further, for each component (column) $j$ in $W$, we let $I_j$ denote the global importance of that component in the explanation space. Intuitively, we want $I$ such that features that explain many different instances have higher importance scores. In Figure 5, we show a toy example $W$, with $n = d' = 5$, where $W$ is binary (for simplicity). The importance function $I$ should score feature f2 higher than feature f1, i.e. $I_2 > I_1$, since feature f2 is used to explain more instances. Concretely, for the text applications we set $I_j = \sqrt{\sum_{i=1}^{n} W_{ij}}$. For images, $I$ must measure something that is comparable across the super-pixels in different images, such as color histograms or other features of super-pixels; we leave further exploration of these ideas for future work.

While we want to pick instances that cover the important components, the set of explanations must not be redundant in the components they show the users, i.e. we should avoid selecting instances with similar explanations. In Figure 5, after the second row is picked, the third row adds no value, as the user has already seen features f2 and f3, while the last row exposes the user to completely new features. Selecting the second and last rows results in the coverage of almost all the features. We formalize this non-redundant coverage intuition in Eq. (3), where we define coverage as the set function $c$ that, given $W$ and $I$, computes the total importance of the features that appear in at least one instance in a set $V$:

$$c(V, W, I) = \sum_{j=1}^{d'} \mathbb{1}_{[\exists i \in V : W_{ij} > 0]} \, I_j \qquad (3)$$

The pick problem, defined in Eq. (4), consists of finding the set $V$, $|V| \le B$, that achieves the highest coverage:

$$\mathrm{Pick}(W, I) = \operatorname*{argmax}_{V,\, |V| \le B} \; c(V, W, I) \qquad (4)$$
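
As a minimal sketch of the quantities described above, the Python below computes the text-domain global importance $I_j = \sqrt{\sum_i W_{ij}}$ and the coverage function $c(V, W, I)$ over a plain list-of-lists explanation matrix. The matrix `W_toy` is a hypothetical binary example in the spirit of Figure 5, not the figure's actual values. The function names are illustrative only.

```python
import math

def global_importance(W):
    """Text-domain importance: I_j = sqrt(sum_i W_ij) for every column j."""
    n_cols = len(W[0])
    return [math.sqrt(sum(row[j] for row in W)) for j in range(n_cols)]

def coverage(V, W, I):
    """c(V, W, I): total importance of the columns (features) that have a
    nonzero weight in at least one picked instance i in V."""
    covered = {j for i in V for j, w in enumerate(W[i]) if w > 0}
    return sum(I[j] for j in covered)

# Hypothetical binary 5 x 5 explanation matrix (rows = instances, cols = f1..f5).
W_toy = [
    [1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
]
I_toy = global_importance(W_toy)
print(I_toy[1] > I_toy[0])  # f2 explains more rows than f1
print(coverage([1, 2], W_toy, I_toy) == coverage([1], W_toy, I_toy))  # third row is redundant
```

Rows 2 and 3 of this toy matrix use the same features. Adding one after the other therefore yields zero extra coverage, which is the redundancy the coverage function is meant to ignore.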

The problem in Eq. (4) is maximizing a weighted coverage function, and is NP-hard [10]. Let $c(V \cup \{i\}, W, I) - c(V, W, I)$ be the marginal coverage gain of adding an instance $i$ to a set $V$. Due to submodularity, a greedy algorithm that iteratively adds the instance with the highest marginal coverage gain to the solution offers a constant-factor approximation guarantee of $1 - 1/e$ to the optimum [15]. We outline this approximation in Algorithm 2, and call it submodular pick.
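
The greedy procedure just described can be sketched in a few lines. This is a hedged illustration of greedy weighted-coverage maximization under a budget, assuming the explanation matrix W has already been computed. It is not the authors' implementation of Algorithm 2, and `W_toy` is hypothetical.

```python
import math

def global_importance(W):
    # I_j = sqrt(sum_i W_ij), the text-domain choice
    return [math.sqrt(sum(row[j] for row in W)) for j in range(len(W[0]))]

def coverage(V, W, I):
    # total importance of features with a nonzero weight in some instance of V
    covered = {j for i in V for j, w in enumerate(W[i]) if w > 0}
    return sum(I[j] for j in covered)

def submodular_pick(W, budget):
    """Greedy weighted-coverage maximization: repeatedly add the instance with
    the largest marginal gain c(V + {i}) - c(V) until |V| = budget."""
    I = global_importance(W)
    V = []
    remaining = list(range(len(W)))
    while len(V) < budget and remaining:
        base = coverage(V, W, I)
        # max() keeps the first maximum, so ties go to the lowest row index
        best = max(remaining, key=lambda i: coverage(V + [i], W, I) - base)
        V.append(best)
        remaining.remove(best)
    return V

W_toy = [  # hypothetical matrix, not the actual Figure 5 values
    [1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
]
print(submodular_pick(W_toy, budget=2))  # [1, 4]: the second and last rows (0-based)
```

For brevity, the loop recomputes coverage from scratch for every candidate. A version meant for large datasets would maintain the covered feature set incrementally.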

Figure 5: Toy example W. Rows represent instances (documents) and columns represent features (words). Feature f2 (dotted blue) has the highest importance. Rows 2 and 5 (in red) would be selected by the pick procedure, covering all but feature f1.

5. SIMULATED USER EXPERIMENTS

In this section, we present simulated user experiments to evaluate the utility of explanations in trust-related tasks. In particular, we address the following questions: (1) Are the explanations faithful to the model? (2) Can the explanations aid users in ascertaining trust in predictions? (3) Are the explanations useful for evaluating the model as a whole? Code and data for replicating our experiments are available at https://github.com/marcotcr/lime-experiments.

5.1 Experiment Setup

We use two sentiment analysis datasets (books and DVDs, 2000 instances each) where the task is to classify product reviews as positive or negative [4]. We train decision trees (DT), logistic regression with L2 regularization (LR), nearest neighbors (NN), and support vector machines with RBF kernel (SVM), all using bag of words as features. We also include random forests (with 1000 trees) trained with the average word2vec embedding [19] (RF), a model that is impossible to interpret without a technique like LIME. We use the implementations and default parameters of scikit-learn, unless noted otherwise. We divide each dataset into train (1600 instances) and test (400 instances).

To explain individual predictions, we compare our proposed approach (LIME) with parzen [2], a method that approximates the black box classifier globally with Parzen windows, and explains individual predictions by taking the gradient of the prediction probability function. For parzen, we take the K features with the highest absolute gradients as explanations. We set the hyper-parameters for parzen and LIME using cross-validation, and set N = 15,000. We also compare against a greedy procedure (similar to Martens and Provost [18]) in which we greedily remove features that contribute the most to the predicted class until the prediction changes (or we reach the maximum of K features), and a random procedure that randomly picks K features as an explanation. We set K to 10 for our experiments.

For experiments where the pick procedure applies, we either do random selection (random pick, RP) or the procedure described in §4 (submodular pick, SP). We refer to pick-explainer combinations by adding RP or SP as a prefix.
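
The greedy baseline described above can be sketched as follows. The sketch assumes a binary bag-of-words instance and a black box that returns the probability of the positive class. `toy_black_box` is a hypothetical logistic model included only to make the example runnable. This is an illustration of the procedure as worded in the text, not the authors' experiment code.

```python
import math

def toy_black_box(x, weights=(2.0, 1.0, -0.5, 0.3), bias=-1.0):
    """Hypothetical black box: P(positive | x) from a small logistic model."""
    logit = bias + sum(w * v for w, v in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-logit))

def greedy_explanation(predict_proba, x, K=10):
    """Greedily remove the present feature whose removal most lowers the
    probability of the originally predicted class, until the prediction
    flips or K features have been removed. Returns removed feature indices."""
    x = list(x)
    predicted_positive = predict_proba(x) >= 0.5

    def predicted_class_prob_without(j):
        z = list(x)
        z[j] = 0
        p = predict_proba(z)
        return p if predicted_positive else 1.0 - p

    explanation = []
    while len(explanation) < K:
        present = [j for j, v in enumerate(x) if v]
        if not present:
            break
        best = min(present, key=predicted_class_prob_without)
        x[best] = 0
        explanation.append(best)
        if (predict_proba(x) >= 0.5) != predicted_positive:
            break  # the prediction changed
    return explanation

print(greedy_explanation(toy_black_box, [1, 1, 1, 1]))
```

Because features are removed one at a time, this procedure can stall on models where no single removal changes the output. The text later gives this as the reason greedy does poorly on decision trees.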

5.2 Are explanations faithful to the model?

Figure 6: Recall on truly important features for two interpretable classifiers on the books dataset.

Figure 7: Recall on truly important features for two interpretable classifiers on the DVDs dataset.

We measure faithfulness of explanations on classifiers that are by themselves interpretable (sparse logistic regression and decision trees). In particular, we train both classifiers such that the maximum number of features they use for any instance is 10, and thus we know the gold set of features that are considered important by these models. For each prediction on the test set, we generate explanations and compute the fraction of these gold features that are recovered by the explanations. We report this recall averaged over all the test instances in Figures 6 and 7. We observe that the greedy approach is comparable to parzen on logistic regression, but is substantially worse on decision trees, since changing a single feature at a time often does not have an effect on the prediction. The overall recall by parzen is low, likely due to the difficulty in approximating the original high-dimensional classifier. LIME consistently provides > 90% recall for both classifiers on both datasets, demonstrating that LIME explanations are faithful to the models.
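
The faithfulness metric can be sketched as follows. For each test instance, recall is the fraction of the model's gold features that the explanation recovers, and the reported number is the average over instances. For a sparse linear model, the sketch assumes that an instance's gold features are the features that are both present in it and carry a nonzero weight. The helper names and numbers are hypothetical.

```python
def gold_features_linear(weights, x):
    """Assumed gold set for a sparse linear model: features present in x
    (nonzero value) that also carry a nonzero model weight."""
    return {j for j, (w, v) in enumerate(zip(weights, x)) if w != 0 and v != 0}

def average_gold_recall(explanations, gold_sets):
    """Mean over instances of |explanation & gold| / |gold|.
    Instances with an empty gold set are skipped (an assumption)."""
    recalls = [
        len(set(expl) & set(gold)) / len(gold)
        for expl, gold in zip(explanations, gold_sets)
        if gold
    ]
    return sum(recalls) / len(recalls) if recalls else 0.0

weights = [0.8, 0.0, -1.2, 0.5]         # hypothetical sparse model
x = [1, 1, 1, 0]                        # feature 3 is absent from this instance
gold = gold_features_linear(weights, x)  # {0, 2}
print(average_gold_recall([[0, 1]], [gold]))  # the explanation recovers 1 of 2 gold features
```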

5.3 Should I trust this prediction?

In order to simulate trust in individual predictions, we first randomly select 25% of the features to be “untrustworthy”, and assume that the users can identify and would not want to trust these features (such as the headers in 20 newsgroups, leaked data, etc.). We thus develop an oracle for “trustworthiness” by labeling test set predictions from a black box classifier as “untrustworthy” if the prediction changes when untrustworthy features are removed from the instance, and “trustworthy” otherwise. In order to simulate users, we assume that users deem predictions untrustworthy from LIME and parzen explanations if the prediction from the linear approximation changes when all untrustworthy features that appear in the explanations are removed (the simulated human “discounts” the effect of untrustworthy features). For greedy and random, the prediction is mistrusted if any untrustworthy features are present in the explanation, since these methods do not provide a notion of the contribution of each feature to the prediction. Thus, for each test set prediction, we can evaluate whether the simulated user trusts it using each explanation method, and compare it to the trustworthiness oracle.

Using this setup, we report the F1 on the trustworthy predictions for each explanation method, averaged over 100 runs, in Table 1. The results indicate that LIME dominates the others (all results are significant at p = 0.01) on both datasets and for all of the black box models. The other methods either achieve lower recall (i.e. they mistrust predictions more than they should) or lower precision (i.e. they trust too many predictions), while LIME maintains both high precision and high recall. Even though we artificially select which features are untrustworthy, these results indicate that LIME is helpful in assessing trust in individual predictions.
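
The following is a minimal sketch of the simulated-trust protocol described above, written with plain Python sets. One assumption, not stated in the text, is that the local linear model's output at the instance equals the intercept plus the sum of the explanation weights, thresholded at 0.5. The words and the `toy_predict` black box are hypothetical.

```python
def oracle_trustworthy(predict, features, untrustworthy):
    """Oracle: a prediction is trustworthy iff it does not change when the
    untrustworthy features are removed from the instance."""
    return predict(features) == predict(features - untrustworthy)

def user_trusts_linear(expl_weights, intercept, untrustworthy, threshold=0.5):
    """Simulated user for LIME/parzen: discount untrustworthy features that appear
    in the explanation and check whether the linear prediction flips.
    Assumes the local model's output at the instance is intercept + sum(weights)."""
    before = intercept + sum(expl_weights.values())
    after = intercept + sum(w for f, w in expl_weights.items() if f not in untrustworthy)
    return (before >= threshold) == (after >= threshold)

def user_trusts_unweighted(expl_features, untrustworthy):
    """Simulated user for greedy/random: mistrust if any untrustworthy feature appears."""
    return not (set(expl_features) & untrustworthy)

def f1_trustworthy(oracle, simulated):
    """F1 with 'trustworthy' as the positive class."""
    tp = sum(o and s for o, s in zip(oracle, simulated))
    fp = sum(s and not o for o, s in zip(oracle, simulated))
    fn = sum(o and not s for o, s in zip(oracle, simulated))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

untrusted = {"posting", "host"}  # hypothetical untrustworthy words

def toy_predict(words):
    # Hypothetical black box: positive if either word is present
    return "god" in words or "posting" in words

print(oracle_trustworthy(toy_predict, {"god", "posting"}, untrusted))
print(user_trusts_linear({"posting": 0.3, "god": 0.1}, 0.2, untrusted))
```

The two simulated users differ only in whether they can weigh a feature's contribution. That difference is what lets a weighted explanation avoid mistrusting predictions that merely mention an untrustworthy feature.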

Table 1: Average F1 of trustworthiness for different explainers on a collection of classifiers and datasets.

Figure 8: Choosing between two classifiers, as the number of instances shown to a simulated user is varied. Averages and standard errors from 800 runs.

5.4 Can I trust this model?

In the final simulated user experiment, we evaluate whether the explanations can be used for model selection, simulating the case where a human has to decide between two competing models with similar accuracy on validation data. For this purpose, we add 10 artificially “noisy” features. Specifically, on the training and validation sets (an 80/20 split of the original training data), each artificial feature appears in 10% of the examples in one class and 20% of the other, while on the test instances, each artificial feature appears in 10% of the examples in each class. This recreates the situation where the models use not only features that are informative in the real world, but also ones that introduce spurious correlations. We create pairs of competing classifiers by repeatedly training pairs of random forests with 30 trees until their validation accuracy is within 0.1% of each other, but their test accuracy differs by at least 5%. Thus, it is not possible to identify the better classifier (the one with higher test accuracy) from the accuracy on the validation data.

The goal of this experiment is to evaluate whether a user can identify the better classifier based on the explanations of B instances from the validation set. The simulated human marks the set of artificial features that appear in the B explanations as untrustworthy, after which we evaluate how many total predictions in the validation set should be trusted (as in the previous section, treating only marked features as untrustworthy). Then, we select the classifier with fewer untrustworthy predictions, and compare this choice to the classifier with higher held-out test set accuracy.
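
The artificial "noisy" features can be sketched as class-dependent random bits. This sketch makes three assumptions not stated in the text. Features are sampled independently, so the 10%/20% proportions hold only in expectation. Class 1 is always the higher-rate class. The balanced labels are hypothetical.

```python
import random

def add_artificial_features(labels, rates, n_features=10, seed=0):
    """Return n_features binary 'noisy' features per instance. Each feature
    fires with probability rates[y] for an instance of class y (Bernoulli
    sampling, an assumption: the text specifies proportions)."""
    rng = random.Random(seed)
    return [[int(rng.random() < rates[y]) for _ in range(n_features)] for y in labels]

def firing_rate(feats, labels, cls, k=0):
    """Fraction of class-`cls` instances in which artificial feature k fires."""
    rows = [f[k] for f, y in zip(feats, labels) if y == cls]
    return sum(rows) / len(rows)

labels = [0] * 5000 + [1] * 5000  # hypothetical balanced labels
train_val = add_artificial_features(labels, rates=(0.10, 0.20))     # spurious: class-dependent
test = add_artificial_features(labels, rates=(0.10, 0.10), seed=1)  # uninformative at test time

print(firing_rate(train_val, labels, 0), firing_rate(train_val, labels, 1))
print(firing_rate(test, labels, 0), firing_rate(test, labels, 1))
```

A model that leans on these features gains a little validation accuracy and loses it at test time. This reproduces the validation/test mismatch the experiment relies on.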

We present the accuracy of picking the correct classifier as B varies, averaged over 800 runs, in Figure 8. We omit SP-parzen and RP-parzen from the figure since they did not produce useful explanations, performing only slightly better than random. LIME is consistently better than greedy, irrespective of the pick method. Further, combining submodular pick with LIME outperforms all other methods; in particular, it is much better than RP-LIME when only a few examples are shown to the users. These results demonstrate that the trust assessments provided by SP-selected LIME explanations are good indicators of generalization, which we validate with human experiments in the next section.

6. EVALUATION WITH HUMAN SUBJECTS

In this section, we recreate three scenarios in machine learning that require trust and understanding of predictions and models. In particular, we evaluate LIME and SP-LIME in the following settings: (1) Can users choose which of two classifiers generalizes better (§6.2)? (2) Based on the explanations, can users perform feature engineering to improve the model (§6.3)? (3) Are users able to identify and describe classifier irregularities by looking at explanations (§6.4)?

6.1 Experiment setup

For experiments in §6.2 and §6.3, we use the “Christianity” and “Atheism” documents from the 20 newsgroups dataset mentioned earlier. This dataset is problematic since it contains features that do not generalize (e.g. very informative header information and author names), and thus validation accuracy considerably overestimates real-world performance.

In order to estimate real-world performance, we create a new religion dataset for evaluation. We download Atheism and Christianity websites from the DMOZ directory and human-curated lists, yielding 819 webpages in each class. High accuracy on this dataset by a classifier trained on 20 newsgroups indicates that the classifier is generalizing using semantic content, instead of placing importance on the data-specific issues outlined above. Unless noted otherwise, we use an SVM with RBF kernel, trained on the 20 newsgroups data with hyper-parameters tuned via cross-validation.

6.2 Can users select the best classifier?

In this section, we want to evaluate whether explanations can help users decide which classifier generalizes better, i.e., which classifier the user would deploy “in the wild”. Specifically, users have to decide between two classifiers: an SVM trained on the original 20 newsgroups dataset, and a version of the same classifier trained on a “cleaned” dataset from which many of the features that do not generalize have been manually removed. The original classifier achieves an accuracy of 57.3% on the religion dataset, while the “cleaned” classifier achieves 69.0%. In contrast, the test accuracy on the original 20 newsgroups split is 94.0% and 88.6%, respectively, suggesting that the worse classifier would be selected if accuracy alone were used as a measure of trust.

We recruit human subjects on Amazon Mechanical Turk: by no means machine learning experts, but instead people with basic knowledge about religion. We measure their ability to choose the better algorithm by showing them side-by-side explanations with the associated raw data (as shown in Figure 2). We restrict both the number of words in each explanation (K) and the number of documents that each person inspects (B) to 6. The position of each algorithm and the order of the instances seen are randomized between subjects. After examining the explanations, users are asked to select which algorithm will perform best in the real world. The explanations are produced by either greedy (chosen as a baseline due to its performance in the simulated user experiment) or LIME, and the instances are selected either by random (RP) or submodular pick (SP). We modify the greedy step in Algorithm 2 slightly so it alternates between explanations of the two classifiers. For each setting, we repeat the experiment with 100 users.

The results are presented in Figure 9. Note that all of the methods are good at identifying the better classifier, demonstrating that the explanations are useful in determining which classifier to trust, whereas using test set accuracy would result in the selection of the wrong classifier. Further, we see that submodular pick (SP) greatly improves the user’s ability to select the best classifier when compared to random pick (RP), with LIME outperforming greedy in both cases.
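
The text says only that the greedy step of Algorithm 2 is modified to alternate between the two classifiers' explanations. One plausible reading is sketched below as an assumption, not the authors' code. The picks alternate between maximizing coverage under classifier A's explanation matrix and under classifier B's. Both operate over the same pool of documents, and no document is picked twice. The matrices are hypothetical.

```python
import math

def global_importance(W):
    # I_j = sqrt(sum_i W_ij), the text-domain choice
    return [math.sqrt(sum(row[j] for row in W)) for j in range(len(W[0]))]

def coverage(V, W, I):
    # total importance of features with a nonzero weight in some instance of V
    covered = {j for i in V for j, w in enumerate(W[i]) if w > 0}
    return sum(I[j] for j in covered)

def alternating_pick(W_a, W_b, budget):
    """Greedy pick over shared documents, alternating which classifier's
    explanation matrix drives the marginal-coverage criterion (assumed reading)."""
    views = [(W_a, global_importance(W_a)), (W_b, global_importance(W_b))]
    picked = []
    remaining = list(range(len(W_a)))
    turn = 0
    while len(picked) < budget and remaining:
        W, I = views[turn]
        base = coverage(picked, W, I)
        best = max(remaining, key=lambda i: coverage(picked + [i], W, I) - base)
        picked.append(best)
        remaining.remove(best)
        turn = 1 - turn  # hand the next pick to the other classifier
    return picked

# Hypothetical explanation matrices for the same 3 documents under two classifiers.
W_a = [[1, 0], [1, 0], [0, 1]]
W_b = [[0, 1], [1, 0], [0, 1]]
print(alternating_pick(W_a, W_b, budget=2))
```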

Figure 9: Average accuracy of human subjects (with standard errors) in choosing between two classifiers.

Figure 10: Feature engineering experiment. Each shaded line represents the average accuracy of subjects in a path starting from one of the initial 10 subjects. Each solid line represents the average across all paths per round of interaction.

6.3 Can non-experts improve a classifier?

If one notes that a classifier is untrustworthy, a common task in machine learning is feature engineering, i.e. modifying the set of features and retraining in order to improve generalization. Explanations can aid in this process by presenting the important features, particularly for removing features that the users feel do not generalize.

We use the 20 newsgroups data here as well, and ask Amazon Mechanical Turk users to identify which words from the explanations should be removed from subsequent training, for the worse classifier from the previous section (§6.2). In each round, the subject marks words for deletion after observing B = 10 instances with K = 10 words in each explanation (an interface similar to Figure 2, but with a single algorithm). As a reminder, the users here are not experts in machine learning and are unfamiliar with feature engineering, and are thus only identifying words based on their semantic content. Further, users do not have any access to the religion dataset; they do not even know of its existence. We start the experiment with 10 subjects. After they mark words for deletion, we train 10 different classifiers, one for each subject (with the corresponding words removed). The explanations for each classifier are then presented to a set of 5 users in a new round of interaction, which results in 50 new classifiers. We do a final round, after which we have 250 classifiers, each with a path of interaction tracing back to the first 10 subjects.

The explanations and instances shown to each user are produced by SP-LIME or RP-LIME. We show the average accuracy on the religion dataset at each interaction round for the paths originating from each of the original 10 subjects (shaded lines), and the average across all paths (solid lines) in Figure 10. It is clear from the figure that the crowd workers are able to improve the model by removing features they deem unimportant for the task. Further, SP-LIME outperforms RP-LIME, indicating that the selection of instances shown to the users is crucial for efficient feature engineering.

Each subject took an average of 3.6 minutes per round of cleaning, resulting in just under 11 minutes to produce a classifier that generalizes much better to real world data. Each path had on average 200 words removed with SP, and 157 with RP, indicating that incorporating coverage of important features is useful for feature engineering. Further, out of an average of 200 words selected with SP, 174 were selected by at least half of the users, while 68 were selected by all the users. Along with the fact that the variance in accuracy decreases across rounds, this high agreement demonstrates that the users are converging to similar correct models. This evaluation is an example of how explanations make it easy to improve an untrustworthy classifier: in this case, easy enough that machine learning knowledge is not required.
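
The agreement statistics above can be computed by simple counting. The sketch below assumes each user's deletions are available as a set of words and that agreement is measured within a given group of users. How the paper grouped users for these counts is not specified here, and the words are hypothetical.

```python
from collections import Counter

def deletion_agreement(selections):
    """selections: one set of deleted words per user.
    Returns (#distinct words deleted, #words deleted by at least half of the
    users, #words deleted by every user)."""
    n_users = len(selections)
    counts = Counter(word for chosen in selections for word in set(chosen))
    at_least_half = sum(1 for c in counts.values() if 2 * c >= n_users)
    by_all = sum(1 for c in counts.values() if c == n_users)
    return len(counts), at_least_half, by_all

users = [{"posting", "nntp", "god"}, {"posting", "nntp"}, {"posting", "edu"}]
print(deletion_agreement(users))  # (4, 2, 1)
```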

6.4 Do explanations lead to insights?

Often, artifacts of data collection can induce undesirable correlations that the classifiers pick up during training. These issues can be very difficult to identify just by looking at the raw data and predictions. In an effort to reproduce such a setting, we take the task of distinguishing between photos of Wolves and Eskimo Dogs (huskies). We train a logistic regression classifier on a training set of 20 images, hand-selected such that all pictures of wolves had snow in the background, while pictures of huskies did not. As the features for the images, we use the first max-pooling layer of Google’s pre-trained Inception neural network [25]. On a collection of 60 additional images, the classifier predicts “Wolf” if there is snow (or a light background at the bottom), and “Husky” otherwise, regardless of animal color, position, pose, etc. We trained this bad classifier intentionally, to evaluate whether subjects are able to detect it.

The experiment proceeds as follows: we first present a balanced set of 10 test predictions (without explanations), where one wolf is not in a snowy background (and thus the prediction is “Husky”) and one husky is (and is thus predicted as “Wolf”). We show the “Husky” mistake in Figure 11a. The other 8 examples are classified correctly. We then ask the subject three questions: (1) Do they trust this algorithm to work well in the real world? (2) Why? (3) How do they think the algorithm is able to distinguish between these photos of wolves and huskies? After getting these responses, we show the same images with the associated explanations, such as in Figure 11b, and ask the same questions.

Since this task requires some familiarity with the notions of spurious correlations and generalization, the subjects for this experiment were graduate students who had taken at least one machine learning course. After gathering the responses, we had 3 independent evaluators read their reasoning and determine whether each subject mentioned snow, the background, or an equivalent as a feature the model may be using. We take the majority to decide whether the subject was correct about the insight, and report these numbers before and after showing the explanations in Table 2.

Before observing the explanations, more than a third of the subjects trusted the classifier, and a little less than half mentioned the snow pattern as something the neural network was using, although all speculated on other patterns. After examining the explanations, however, almost all of the subjects identified the correct insight, with much more certainty that it was a determining factor. Further, trust in the classifier also dropped substantially. Although our sample size is small, this experiment demonstrates the utility of explaining individual predictions for getting insights into classifiers, knowing when not to trust them and why.

Figure 11: Raw data and explanation of a bad model's prediction in the “Husky vs Wolf” task.

Table 2: “Husky vs Wolf” experiment results.

7. RELATED WORK

The problems with relying on validation set accuracy as the primary measure of trust have been well studied. Practitioners consistently overestimate their model’s accuracy [20], propagate feedback loops [23], or fail to notice data leaks [14]. In order to address these issues, researchers have proposed tools like Gestalt [21] and Modeltracker [1], which help users navigate individual instances. These tools are complementary to LIME in terms of explaining models, since they do not address the problem of explaining individual predictions. Further, our submodular pick procedure can be incorporated in such tools to aid users in navigating larger datasets.

Some recent work aims to anticipate failures in machine learning, specifically for vision tasks [3, 29]. Letting users know when the systems are likely to fail can lead to an increase in trust, by avoiding “silly mistakes” [8]. These solutions either require additional annotations and feature engineering that is specific to vision tasks or do not provide insight into why a decision should not be trusted. Furthermore, they assume that the current evaluation metrics are reliable, which may not be the case if problems such as data leakage are present. Other recent work [11] focuses on exposing users to different kinds of mistakes (our pick step). Interestingly, the subjects in their study did not notice the serious problems in the 20 newsgroups data even after looking at many mistakes, suggesting that examining raw data is not sufficient. Note that Groce et al. [11] are not alone in this regard; many researchers in the field have unwittingly published classifiers that would not generalize for this task. Using LIME, we show that even non-experts are able to identify these irregularities when explanations are present. Further, LIME can complement these existing systems, and allow users to assess trust even when a prediction seems “correct” but is made for the wrong reasons.

Recognizing the utility of explanations in assessing trust, many have proposed using interpretable models [27], especially for the medical domain [6, 17, 26]. While such models may be appropriate for some domains, they may not apply equally well to others (e.g. a supersparse linear model [26] with 5 to 10 features is unsuitable for text applications). Interpretability, in these cases, comes at the cost of flexibility, accuracy, or efficiency. For text, EluciDebug [16] is a full human-in-the-loop system that shares many of our goals (interpretability, faithfulness, etc.). However, it focuses on an already interpretable model (Naive Bayes). In computer vision, systems that rely on object detection to produce candidate alignments [13] or attention [28] are able to produce explanations for their predictions. These are, however, constrained to specific neural network architectures or incapable of detecting “non object” parts of the images. Here we focus on general, model-agnostic explanations that can be applied to any classifier or regressor that is appropriate for the domain, even ones that are yet to be proposed.

A common approach to model-agnostic explanation is learning a potentially interpretable model on the predictions of the original model [2, 7, 22]. Having the explanation be a gradient vector [2] captures a similar locality intuition to that of LIME. However, interpreting the coefficients on the gradient is difficult, particularly for confident predictions (where the gradient is near zero). Further, these explanations approximate the original model globally, so maintaining local fidelity becomes a significant challenge, as our experiments demonstrate. In contrast, LIME solves the much more feasible task of finding a model that approximates the original model locally. The idea of perturbing inputs for explanations has been explored before [24], where the authors focus on learning a specific contribution model, as opposed to our general framework. None of these approaches explicitly take cognitive limitations into account, and thus they may produce non-interpretable explanations, such as gradients or linear models with thousands of non-zero weights. The problem becomes worse if the original features are nonsensical to humans (e.g. word embeddings). In contrast, LIME incorporates interpretability both in the optimization and in our notion of interpretable representation, such that domain- and task-specific interpretability criteria can be accommodated.
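
The following NumPy sketch illustrates the local-versus-global contrast. It fits a proximity-weighted linear surrogate around one instance, in the spirit of LIME. Several simplifying assumptions make it a rough illustration only: it is neither the `lime` package API nor the paper's exact procedure.

- The instance's interpretable representation is the all-ones binary vector.
- Each perturbation switches components on or off independently with probability 0.5.
- Proximity is an exponential kernel on cosine distance with an arbitrary width.
- The feature-selection step that keeps LIME explanations sparse is omitted.

```python
import numpy as np

def local_surrogate(predict_fn, n_features, num_samples=2000, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear model around the all-ones instance.

    predict_fn maps a (num_samples, n_features) 0/1 array to one probability
    per row for the class being explained. Returns (intercept, weights)."""
    rng = np.random.default_rng(seed)
    Z = rng.integers(0, 2, size=(num_samples, n_features))  # random on/off masks
    Z[0] = 1                                                 # include the instance itself
    y = np.asarray(predict_fn(Z), dtype=float)

    # Cosine distance between each perturbation z' and x' = (1, ..., 1).
    norms = np.maximum(np.linalg.norm(Z, axis=1), 1e-12)
    cosine = Z.sum(axis=1) / (norms * np.sqrt(n_features))
    distance = 1.0 - cosine
    pi = np.exp(-(distance ** 2) / kernel_width ** 2)       # locality weights

    # Weighted least squares: scale rows by sqrt(pi), then solve ordinary least squares.
    A = np.hstack([np.ones((num_samples, 1)), Z])
    sw = np.sqrt(pi)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]

def and_black_box(Z):
    # Hypothetical nonlinear black box: fires only if features 0 AND 1 are on.
    return (Z[:, 0] * Z[:, 1]).astype(float)

intercept, weights = local_surrogate(and_black_box, n_features=4)
print(np.round(weights, 2))
```

Shrinking `kernel_width` concentrates weight on perturbations close to the instance, which is the "local" part. A very wide kernel approaches an unweighted global linear fit, which the paragraph above argues is hard to keep faithful.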

8. CONCLUSION AND FUTURE WORK

In this paper, we argued that trust is crucial for effective human interaction with machine learning systems, and that explaining individual predictions is important in assessing trust. We proposed LIME, a modular and extensible approach to faithfully explain the predictions of any model in an interpretable manner. We also introduced SP-LIME, a method to select representative and non-redundant predictions, providing a global view of the model to users. Our experiments demonstrated that explanations are useful for a variety of models in trust-related tasks in the text and image domains, with both expert and non-expert users: deciding between models, assessing trust, improving untrustworthy models, and getting insights into predictions.
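The pick step of SP-LIME can be sketched in a few lines. The version below follows our reading of Section 4: `W` is an n × d' matrix of absolute explanation weights (one row per explained instance), each interpretable feature gets a global importance I_j = sqrt(sum_i |W_ij|), and instances are added greedily by their marginal gain in coverage (the total importance of features that appear in at least one picked explanation) until the budget is reached. Coverage of this kind is monotone submodular, so the standard greedy algorithm has a (1 - 1/e) approximation guarantee [15]. The function names and the toy matrix are hypothetical; this is a sketch, not the released code.

```python
import numpy as np

def global_importance(W):
    """I_j = sqrt(sum_i |W_ij|): global importance of interpretable feature j."""
    return np.sqrt(np.abs(W).sum(axis=0))

def coverage(V, W, I):
    """Total importance of features used by at least one explanation in V."""
    if not V:
        return 0.0
    covered = np.any(np.abs(W[V]) > 0, axis=0)
    return float(I[covered].sum())

def submodular_pick(W, budget):
    """Greedily pick up to `budget` instances, maximizing coverage gain at each step."""
    I = global_importance(W)
    V = []
    for _ in range(min(budget, W.shape[0])):
        candidates = [i for i in range(W.shape[0]) if i not in V]
        gains = [coverage(V + [i], W, I) - coverage(V, W, I) for i in candidates]
        V.append(candidates[int(np.argmax(gains))])  # ties go to the lowest index
    return V

# Toy explanation matrix (made up): rows = instances, columns = interpretable features.
W = np.array([
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],  # same explanation as instance 0 (redundant)
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.5],
])
print(submodular_pick(W, budget=2))  # → [0, 2]
```

Instances 0 and 1 have identical explanations, so once instance 0 is picked, instance 1 adds no coverage and the greedy step moves on to instance 2; this is how the coverage objective steers the pick away from redundant explanations.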


There are a number of avenues of future work that we would like to explore. Although we describe only sparse linear models as explanations, our framework supports the exploration of a variety of explanation families, such as decision trees; it would be interesting to see a comparative study on these with real users. One issue that this work does not address is how to perform the pick step for images, and we would like to tackle this limitation in the future. The domain and model agnosticism enables us to explore a variety of applications, and we would like to investigate potential uses in speech, video, and medical domains, as well as recommendation systems. Finally, we would like to explore theoretical properties (such as the appropriate number of samples) and computational optimizations (such as parallelization and GPU processing), in order to provide the accurate, real-time explanations that are critical for any human-in-the-loop machine learning system.


Acknowledgements

We would like to thank Scott Lundberg, Tianqi Chen, and Tyler Johnson for helpful discussions and feedback. This work was supported in part by ONR awards #W911NF-13-1-0246 and #N00014-13-1-0023, and in part by TerraSwarm, one of six centers of STARnet, a Semiconductor Research Corporation program sponsored by MARCO and DARPA.


REFERENCES

[1] S. Amershi, M. Chickering, S. M. Drucker, B. Lee, P. Simard, and J. Suh. ModelTracker: Redesigning performance analysis tools for machine learning. In Human Factors in Computing Systems (CHI), 2015.

[2] D. Baehrens, T. Schroeter, S. Harmeling, M. Kawanabe, K. Hansen, and K.-R. Müller. How to explain individual classification decisions. Journal of Machine Learning Research, 11, 2010.

[3] A. Bansal, A. Farhadi, and D. Parikh. Towards transparent systems: Semantic characterization of failure modes. In European Conference on Computer Vision (ECCV), 2014.

[4] J. Blitzer, M. Dredze, and F. Pereira. Biographies, bollywood, boom-boxes and blenders: Domain adaptation for sentiment classification. In Association for Computational Linguistics (ACL), 2007.

[5] J. Q. Candela, M. Sugiyama, A. Schwaighofer, and N. D. Lawrence. Dataset Shift in Machine Learning. MIT Press, 2009.

[6] R. Caruana, Y. Lou, J. Gehrke, P. Koch, M. Sturm, and N. Elhadad. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. In Knowledge Discovery and Data Mining (KDD), 2015.

[7] M. W. Craven and J. W. Shavlik. Extracting tree-structured representations of trained networks. In Neural Information Processing Systems (NIPS), pages 24–30, 1996.

[8] M. T. Dzindolet, S. A. Peterson, R. A. Pomranky, L. G. Pierce, and H. P. Beck. The role of trust in automation reliance. Int. J. Hum.-Comput. Stud., 58(6), 2003.

[9] B. Efron, T. Hastie, I. Johnstone, and R. Tibshirani. Least angle regression. Annals of Statistics, 32:407–499, 2004.

[10] U. Feige. A threshold of ln n for approximating set cover. J. ACM, 45(4), July 1998.

[11] A. Groce, T. Kulesza, C. Zhang, S. Shamasunder, M. Burnett, W.-K. Wong, S. Stumpf, S. Das, A. Shinsel, F. Bice, and K. McIntosh. You are the only possible oracle: Effective test selection for end users of interactive machine learning systems. IEEE Trans. Softw. Eng., 40(3), 2014.

[12] J. L. Herlocker, J. A. Konstan, and J. Riedl. Explaining collaborative filtering recommendations. In Conference on Computer Supported Cooperative Work (CSCW), 2000.

[13] A. Karpathy and F. Li. Deep visual-semantic alignments for generating image descriptions. In Computer Vision and Pattern Recognition (CVPR), 2015.

[14] S. Kaufman, S. Rosset, and C. Perlich. Leakage in data mining: Formulation, detection, and avoidance. In Knowledge Discovery and Data Mining (KDD), 2011.

[15] A. Krause and D. Golovin. Submodular function maximization. In Tractability: Practical Approaches to Hard Problems. Cambridge University Press, February 2014.

[16] T. Kulesza, M. Burnett, W.-K. Wong, and S. Stumpf. Principles of explanatory debugging to personalize interactive machine learning. In Intelligent User Interfaces (IUI), 2015.

[17] B. Letham, C. Rudin, T. H. McCormick, and D. Madigan. Interpretable classifiers using rules and Bayesian analysis: Building a better stroke prediction model. Annals of Applied Statistics, 2015.

[18] D. Martens and F. Provost. Explaining data-driven document classifications. MIS Q., 38(1), 2014.

[19] T. Mikolov, I. Sutskever, K. Chen, G. S. Corrado, and J. Dean. Distributed representations of words and phrases and their compositionality. In Neural Information Processing Systems (NIPS), 2013.

[20] K. Patel, J. Fogarty, J. A. Landay, and B. Harrison. Investigating statistical machine learning as a tool for software development. In Human Factors in Computing Systems (CHI), 2008.

[21] K. Patel, N. Bancroft, S. M. Drucker, J. Fogarty, A. J. Ko, and J. Landay. Gestalt: Integrated support for implementation and analysis in machine learning. In User Interface Software and Technology (UIST), 2010.

[22] I. Sanchez, T. Rocktaschel, S. Riedel, and S. Singh. Towards extracting faithful and descriptive representations of latent variable models. In AAAI Spring Symposium on Knowledge Representation and Reasoning (KRR): Integrating Symbolic and Neural Approaches, 2015.

[23] D. Sculley, G. Holt, D. Golovin, E. Davydov, T. Phillips, D. Ebner, V. Chaudhary, M. Young, and J.-F. Crespo. Hidden technical debt in machine learning systems. In Neural Information Processing Systems (NIPS), 2015.

[24] E. Strumbelj and I. Kononenko. An efficient explanation of individual classifications using game theory. Journal of Machine Learning Research, 11, 2010.

[25] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich. Going deeper with convolutions. In Computer Vision and Pattern Recognition (CVPR), 2015.

[26] B. Ustun and C. Rudin. Supersparse linear integer models for optimized medical scoring systems. Machine Learning, 2015.

[27] F. Wang and C. Rudin. Falling rule lists. In Artificial Intelligence and Statistics (AISTATS), 2015.

[28] K. Xu, J. Ba, R. Kiros, K. Cho, A. Courville, R. Salakhutdinov, R. Zemel, and Y. Bengio. Show, attend and tell: Neural image caption generation with visual attention. In International Conference on Machine Learning (ICML), 2015.

[29] P. Zhang, J. Wang, A. Farhadi, M. Hebert, and D. Parikh. Predicting failures of vision systems. In Computer Vision and Pattern Recognition (CVPR), 2014.