A Few Useful Things to Know About Machine Learning

Machine learning systems automatically learn programs from data. This is often a very attractive alternative to manually constructing them, and in the last decade the use of machine learning has spread rapidly throughout computer science and beyond. Machine learning is used in Web search, spam filters, recommender systems, ad placement, credit scoring, fraud detection, stock trading, drug design, and many other applications. A recent report from the McKinsey Global Institute asserts that machine learning (a.k.a. data mining or predictive analytics) will be the driver of the next big wave of innovation [15]. Several fine textbooks are available to interested practitioners and researchers (for example, Mitchell [16] and Witten et al. [24]). However, much of the “folk knowledge” that is needed to successfully develop machine learning applications is not readily available in them. As a result, many machine learning projects take much longer than necessary or wind up producing less-than-ideal results. Yet much of this folk knowledge is fairly easy to communicate. This is the purpose of this article.

Key Insights

Machine learning algorithms can figure out how to perform important tasks by generalizing from examples. This is often feasible and cost-effective where manual programming is not. As more data becomes available, more ambitious problems can be tackled.

Machine learning is widely used in computer science and other fields. However, developing successful machine learning applications requires a substantial amount of “black art” that is difficult to find in textbooks.

This article summarizes 12 key lessons that machine learning researchers and practitioners have learned. These include pitfalls to avoid, important issues to focus on, and answers to common questions.

Many different types of machine learning exist, but for illustration purposes I will focus on the most mature and widely used one: classification. Nevertheless, the issues I will discuss apply across all of machine learning. A classifier is a system that inputs (typically) a vector of discrete and/or continuous feature values and outputs a single discrete value, the class. For example, a spam filter classifies email messages into “spam” or “not spam,” and its input may be a Boolean vector $x = (x_1, \ldots, x_j, \ldots, x_d)$, where $x_j = 1$ if the $j$th word in the dictionary appears in the email and $x_j = 0$ otherwise. A learner inputs a training set of examples $(x_i, y_i)$, where $x_i = (x_{i,1}, \ldots, x_{i,d})$ is an observed input and $y_i$ is the corresponding output, and outputs a classifier. The test of the learner is whether this classifier produces the correct output $y_t$ for future examples $x_t$ (for example, whether the spam filter correctly classifies previously unseen email messages as spam or not spam).
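To make the spam-filter input concrete, here is a minimal Python sketch of the Boolean feature vector just described. The four-word dictionary, the function name `boolean_features`, and the lowercase-and-split tokenizer are all hypothetical choices made for illustration. A real filter would use a much larger dictionary and a more careful tokenizer.

```python
def boolean_features(message: str, dictionary: list[str]) -> list[int]:
    """Return x = (x_1, ..., x_d) with x_j = 1 iff the j-th dictionary word occurs in the message."""
    # Hypothetical tokenizer: lowercase and split on whitespace.
    words = set(message.lower().split())
    return [1 if word in words else 0 for word in dictionary]


# Hypothetical dictionary with d = 4 words (the text imagines 100,000).
DICTIONARY = ["free", "meeting", "money", "tomorrow"]

print(boolean_features("Free money now", DICTIONARY))  # [1, 0, 1, 0]
```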

Learning = Representation + Evaluation + Optimization

Suppose you have an application that you think machine learning might be good for. The first problem facing you is the bewildering variety of learning algorithms available. Which one to use? There are literally thousands available, and hundreds more are published each year. The key to not getting lost in this huge space is to realize that it consists of combinations of just three components. The components are:

Representation. A classifier must be represented in some formal language that the computer can handle. Conversely, choosing a representation for a learner is tantamount to choosing the set of classifiers that it can possibly learn. This set is called the hypothesis space of the learner. If a classifier is not in the hypothesis space, it cannot be learned. A related question, that I address later, is how to represent the input, in other words, what features to use.

Evaluation. An evaluation function (also called objective function or scoring function) is needed to distinguish good classifiers from bad ones. The evaluation function used internally by the algorithm may differ from the external one that we want the classifier to optimize, for ease of optimization and due to the issues I will discuss.

Optimization. Finally, we need a method to search among the classifiers in the language for the highest-scoring one. The choice of optimization technique is key to the efficiency of the learner, and also helps determine the classifier produced if the evaluation function has more than one optimum. It is common for new learners to start out using off-the-shelf optimizers, which are later replaced by custom-designed ones.
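As a toy illustration of how the three components fit together, the sketch below uses a deliberately tiny hypothesis space: every rule of the form "predict $x_j$" or "predict NOT $x_j$" for a single Boolean feature. Evaluation is training accuracy, and optimization is exhaustive search. These choices and all function names are assumptions made for illustration, not recommendations.

```python
from itertools import product


# Representation: the hypothesis space is every rule (j, negate) meaning
# "predict x_j" (negate=False) or "predict 1 - x_j" (negate=True).
def hypothesis_space(d):
    return product(range(d), (False, True))


def predict(rule, x):
    j, negate = rule
    return 1 - x[j] if negate else x[j]


# Evaluation: fraction of training examples the rule classifies correctly.
def accuracy(rule, examples):
    return sum(predict(rule, x) == y for x, y in examples) / len(examples)


# Optimization: exhaustive search for the highest-scoring rule
# (feasible only because this hypothesis space is tiny).
def learn(examples):
    d = len(examples[0][0])
    return max(hypothesis_space(d), key=lambda rule: accuracy(rule, examples))


train = [((0, 1, 0), 1), ((1, 1, 0), 1), ((0, 0, 1), 0), ((1, 0, 0), 0)]
best = learn(train)
print(best, accuracy(best, train))  # (1, False) 1.0
```

If several rules tied for the best score, `max` would return the first one in iteration order. This is a small instance of the point above: the optimizer helps decide which classifier is returned when the evaluation function has more than one optimum.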

The accompanying table shows common examples of each of these three components. For example, $k$-nearest neighbor classifies a test example by finding the $k$ most similar training examples and predicting the majority class among them. Hyperplane-based methods form a linear combination of the features per class and predict the class with the highest-valued combination. Decision trees test one feature at each internal node, with one branch for each feature value, and have class predictions at the leaves. Algorithm 1 (above) shows a bare-bones decision tree learner for Boolean domains, using information gain and greedy search [20]. InfoGain($x_j$, $y$) is the mutual information between feature $x_j$ and the class $y$. MakeNode($x$, $c_0$, $c_1$) returns a node that tests feature $x$ and has $c_0$ as the child for $x = 0$ and $c_1$ as the child for $x = 1$.
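Algorithm 1 does not appear in this section, so the following is only a minimal Python sketch of a learner in its spirit: Boolean features, information gain, and greedy top-down search. The names `entropy`, `info_gain`, `make_leaf`, `learn_dt`, and `classify`, and the tuple encoding of trees, are hypothetical. `make_node(j, c0, c1)` follows the description of MakeNode above.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def info_gain(examples, j):
    """Mutual information between Boolean feature x_j and the class y, estimated from examples."""
    remainder = 0.0
    for v in (0, 1):
        subset = [y for x, y in examples if x[j] == v]
        if subset:
            remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([y for _, y in examples]) - remainder


def make_leaf(c):
    return ("leaf", c)


def make_node(j, c0, c1):
    """Node testing x_j, with c0 as the child for x_j = 0 and c1 as the child for x_j = 1."""
    return ("node", j, c0, c1)


def learn_dt(examples):
    labels = [y for _, y in examples]
    if len(set(labels)) == 1:                       # all examples share one class
        return make_leaf(labels[0])
    d = len(examples[0][0])
    gains = [info_gain(examples, j) for j in range(d)]
    best = max(range(d), key=gains.__getitem__)     # greedy: most informative feature right now
    if gains[best] <= 1e-12:                        # no feature helps: predict the majority class
        return make_leaf(Counter(labels).most_common(1)[0][0])
    ts0 = [(x, y) for x, y in examples if x[best] == 0]
    ts1 = [(x, y) for x, y in examples if x[best] == 1]
    return make_node(best, learn_dt(ts0), learn_dt(ts1))


def classify(tree, x):
    while tree[0] == "node":
        _, j, c0, c1 = tree
        tree = c1 if x[j] == 1 else c0
    return tree[1]


# Hypothetical data: y = x0 AND x1, with x2 an irrelevant feature.
train = [((a, b, c), a & b) for a in (0, 1) for b in (0, 1) for c in (0, 1)]
tree = learn_dt(train)
print(tree)  # ('node', 0, ('leaf', 0), ('node', 1, ('leaf', 0), ('leaf', 1)))
```

Because the search is greedy, the sketch gives up immediately on a target such as $x_0$ XOR $x_1$. There, no single feature has positive information gain at the root, so it returns a single majority-class leaf.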

Of course, not all combinations of one component from each column of the table make equal sense. For example, discrete representations naturally go with combinatorial optimization, and continuous ones with continuous optimization. Nevertheless, many learners have both discrete and continuous components, and in fact the day may not be far when every single possible combination has appeared in some learner!

Most textbooks are organized by representation, and it is easy to overlook the fact that the other components are equally important. There is no simple recipe for choosing each component, but I will touch on some of the key issues here. As we will see, some choices in a machine learning project may be even more important than the choice of learner.

It’s Generalization that Counts

The fundamental goal of machine learning is to generalize beyond the examples in the training set. This is because, no matter how much data we have, it is very unlikely that we will see those exact examples again at test time. (Notice that, if there are 100,000 words in the dictionary, the spam filter described above has $2^{100{,}000}$ possible different inputs.) Doing well on the training set is easy (just memorize the examples). The most common mistake among machine learning beginners is to test on the training data and have the illusion of success. If the chosen classifier is then tested on new data, it is often no better than random guessing. So, if you hire someone to build a classifier, be sure to keep some of the data to yourself and test the classifier they give you on it. Conversely, if you have been hired to build a classifier, set some of the data aside from the beginning, and only use it to test your chosen classifier at the very end, followed by learning your final classifier on the whole data.
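Below is a minimal sketch of that protocol in plain Python, assuming examples are `(x, y)` pairs in a list. `train_fn` and `accuracy_fn` stand in for whatever learner and metric you actually use, and the memorizing learner and coin-flip data are hypothetical. Together they show how testing on the training data creates the illusion of success.

```python
import random


def holdout_protocol(examples, train_fn, accuracy_fn, test_fraction=0.2, seed=0):
    """Hold out a test set once, score the chosen classifier on it once, then refit on all the data."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    test, train = shuffled[:n_test], shuffled[n_test:]
    model = train_fn(train)                    # any tuning must use `train` only
    test_accuracy = accuracy_fn(model, test)   # the held-out data is used once, at the very end
    final_model = train_fn(examples)           # final classifier learned on the whole data
    return final_model, test_accuracy


def train_memorizer(train):
    """Hypothetical learner that simply memorizes its training set."""
    table = dict(train)
    return lambda x: table.get(x, 0)           # unseen inputs default to class 0


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


# Hypothetical data whose labels are coin flips, so nothing real can be learned.
rng = random.Random(1)
data = [(i, rng.randint(0, 1)) for i in range(1000)]

print(accuracy(train_memorizer(data), data))   # 1.0: the illusion of success
final_model, test_acc = holdout_protocol(data, train_memorizer, accuracy)
print(test_acc)                                # close to 0.5, i.e. random guessing
```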

Contamination of your classifier by test data can occur in insidious ways, for example, if you use test data to tune parameters and do a lot of tuning. (Machine learning algorithms have lots of knobs, and success often comes from twiddling them a lot, so this is a real concern.) Of course, holding out data reduces the amount available for training. This can be mitigated by doing cross-validation: randomly dividing your training data into (say) 10 subsets, holding out each one while training on the rest, testing each learned classifier on the examples it did not see, and averaging the results to see how well the particular parameter setting does.
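A minimal sketch of the cross-validation procedure just described. It assumes a hypothetical interface in which `train_fn(training, param)` returns a model and `accuracy_fn(model, data)` scores it. The function scores one parameter setting, so you call it once per candidate setting and keep the best. The 1-D nearest-neighbor learner and the noisy threshold data are illustrative assumptions.

```python
import random


def cross_val_score(examples, train_fn, accuracy_fn, param, k=10, seed=0):
    """Average held-out accuracy of one parameter setting under k-fold cross-validation."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]          # k disjoint, nearly equal subsets
    scores = []
    for i in range(k):
        held_out = folds[i]
        training = [ex for j, fold in enumerate(folds) if j != i for ex in fold]
        model = train_fn(training, param)                # learn on the other k - 1 folds
        scores.append(accuracy_fn(model, held_out))      # test on examples it did not see
    return sum(scores) / k


def train_knn(training, n_neighbors):
    """Hypothetical 1-D nearest-neighbor learner; n_neighbors is the knob being tuned."""
    def model(x):
        nearest = sorted(training, key=lambda ex: abs(ex[0] - x))[:n_neighbors]
        return int(2 * sum(y for _, y in nearest) > n_neighbors)   # majority vote
    return model


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


# Hypothetical data: class 1 iff x > 0.5, with 10% of labels flipped as noise.
rng = random.Random(42)
data = []
for _ in range(200):
    x = rng.random()
    y = int(x > 0.5)
    data.append((x, 1 - y if rng.random() < 0.1 else y))

scores = {n: cross_val_score(data, train_knn, accuracy, n) for n in (1, 5, 15)}
best = max(scores, key=scores.get)
print(scores, "best n_neighbors:", best)
```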

In the early days of machine learning, the need to keep training and test data separate was not widely appreciated. This was partly because, if the learner has a very limited representation (for example, hyperplanes), the difference between training and test error may not be large. But with very flexible classifiers (for example, decision trees), or even with linear classifiers with a lot of features, strict separation is mandatory.

Notice that generalization being the goal has an interesting consequence for machine learning. Unlike in most other optimization problems, we do not have access to the function we want to optimize! We have to use training error as a surrogate for test error, and this is fraught with danger. (How to deal with it is addressed later.) On the positive side, since the objective function is only a proxy for the true goal, we may not need to fully optimize it; in fact, a local optimum returned by simple greedy search may be better than the global optimum.

Data Alone Is Not Enough

Generalization being the goal has another major consequence: Data alone is not enough, no matter how much of it you have. Consider learning a Boolean function of (say) 100 variables from a million examples. There are $2^{100} - 10^6$ examples whose classes you do not know. How do you figure out what those classes are? In the absence of further information, there is just no way to do this that beats flipping a coin. This observation was first made (in somewhat different form) by the philosopher David Hume over 200 years ago, but even today many mistakes in machine learning stem from failing to appreciate it. Every learner must embody some knowledge or assumptions beyond the data it is given in order to generalize beyond it. This notion was formalized by Wolpert in his famous “no free lunch” theorems, according to which no learner can beat random guessing over all possible functions to be learned [25].
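The coin-flip argument can be checked exhaustively on a tiny domain. The sketch below illustrates the idea under assumed sizes and is not Wolpert's formal theorem. It enumerates all 256 Boolean functions of three variables, trains on a fixed half of the 8 inputs, and averages accuracy on the other half. Both hypothetical learners come out at exactly 1/2. The reason is that the learner's predictions depend only on the training labels, and every labeling of the unseen inputs is equally likely.

```python
from fractions import Fraction
from itertools import product

INPUTS = list(product((0, 1), repeat=3))     # the 8 possible inputs of 3 Boolean variables
TRAIN_X, TEST_X = INPUTS[:4], INPUTS[4:]     # hypothetical fixed split: 4 seen, 4 unseen


def majority_learner(train):
    """Predict the majority training label everywhere (ties go to 1)."""
    ones = sum(y for _, y in train)
    label = int(2 * ones >= len(train))
    return lambda x: label


def nearest_neighbor_learner(train):
    """Predict the label of the training input at smallest Hamming distance (first wins ties)."""
    def model(x):
        return min(train, key=lambda ex: sum(a != b for a, b in zip(ex[0], x)))[1]
    return model


def average_off_training_accuracy(learner):
    functions = list(product((0, 1), repeat=len(INPUTS)))   # all 256 target functions
    total = Fraction(0)
    for labels in functions:
        f = dict(zip(INPUTS, labels))
        model = learner([(x, f[x]) for x in TRAIN_X])
        total += Fraction(sum(model(x) == f[x] for x in TEST_X), len(TEST_X))
    return total / len(functions)


for learner in (majority_learner, nearest_neighbor_learner):
    print(learner.__name__, average_off_training_accuracy(learner))   # both print 1/2
```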

This seems like rather depressing news. How then can we ever hope to learn anything? Luckily, the functions we want to learn in the real world are not drawn uniformly from the set of all mathematically possible functions! In fact, very general assumptions—like smoothness, similar examples having similar classes, limited dependences, or limited complexity—are often enough to do very well, and this is a large part of why machine learning has been so successful. Like deduction, induction (what learners do) is a knowledge lever: it turns a small amount of input knowledge into a large amount of output knowledge. Induction is a vastly more powerful lever than deduction, requiring much less input knowledge to produce useful results, but it still needs more than zero input knowledge to work. And, as with any lever, the more we put in, the more we can get out.

A corollary of this is that one of the key criteria for choosing a representation is which kinds of knowledge are easily expressed in it. For example, if we have a lot of knowledge about what makes examples similar in our domain, instance-based methods may be a good choice. If we have knowledge about probabilistic dependencies, graphical models are a good fit. And if we have knowledge about what kinds of preconditions are required by each class, “IF … THEN …” rules may be the best option. The most useful learners in this regard are those that do not just have assumptions hardwired into them, but allow us to state them explicitly, vary them widely, and incorporate them automatically into the learning (for example, using first-order logic [21] or grammars [6]).

In retrospect, the need for knowledge in learning should not be surprising. Machine learning is not magic; it cannot get something from nothing. What it does is get more from less. Programming, like all engineering, is a lot of work: we have to build everything from scratch. Learning is more like farming, which lets nature do most of the work. Farmers combine seeds with nutrients to grow crops. Learners combine knowledge with data to grow programs.

Overfitting Has Many Faces

What if the knowledge and data we have are not sufficient to completely determine the correct classifier? Then we run the risk of just hallucinating a classifier (or parts of it) that is not grounded in reality, and is simply encoding random quirks in the data. This problem is called overfitting, and is the bugbear of machine learning. When your learner outputs a classifier that is 100% accurate on the training data but only 50% accurate on test data, when in fact it could have output one that is 75% accurate on both, it has overfit.

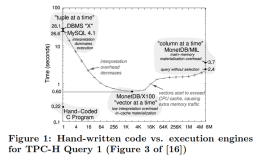

Everyone in machine learning knows about overfitting, but it comes in many forms that are not immediately obvious. One way to understand overfitting is by decomposing generalization error into bias and variance [9]. Bias is a learner’s tendency to consistently learn the same wrong thing. Variance is the tendency to learn random things irrespective of the real signal. Figure 1 illustrates this by an analogy with throwing darts at a board. A linear learner has high bias, because when the frontier between two classes is not a hyperplane the learner is unable to induce it. Decision trees do not have this problem because they can represent any Boolean function, but on the other hand they can suffer from high variance: decision trees learned on different training sets generated by the same phenomenon are often very different, when in fact they should be the same. Similar reasoning applies to the choice of optimization method: beam search has lower bias than greedy search, but higher variance, because it tries more hypotheses. Thus, contrary to intuition, a more powerful learner is not necessarily better than a less powerful one.

Figure 2 illustrates this (footnote a). Even though the true classifier is a set of rules, with up to 1,000 examples naive Bayes is more accurate than a rule learner. This happens despite naive Bayes’s false assumption that the frontier is linear! Situations like this are common in machine learning: strong false assumptions can be better than weak true ones, because a learner with the latter needs more data to avoid overfitting.
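The bias and variance described above can be estimated by simulation. Draw many training sets from the same phenomenon, fit the same learner to each, and then measure how far the average prediction is from the truth (bias) and how widely the individual predictions scatter around that average (variance). The sketch below uses squared loss and polynomial regression rather than classification, because that is where the decomposition is simplest. The target $\sin(2\pi x)$, the noise level, the sample sizes, and the degrees compared are illustrative assumptions. It relies only on NumPy's `polyfit` and `polyval`.

```python
import numpy as np


def bias_variance(degree, n_trials=500, n_train=10, noise=0.3, seed=0):
    """Estimate average squared bias and variance of a degree-`degree` polynomial fit."""
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0.0, 1.0, n_train)          # same inputs in every trial
    x_test = np.linspace(0.05, 0.95, 50)
    truth = np.sin(2 * np.pi * x_test)
    preds = np.empty((n_trials, x_test.size))
    for t in range(n_trials):
        # A fresh training set from the same phenomenon: same true function, new noise.
        y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, noise, n_train)
        coeffs = np.polyfit(x_train, y_train, degree)
        preds[t] = np.polyval(coeffs, x_test)
    mean_pred = preds.mean(axis=0)
    bias_sq = np.mean((mean_pred - truth) ** 2)       # systematic error of the average model
    variance = np.mean(preds.var(axis=0))             # spread of models across training sets
    return bias_sq, variance


for degree in (1, 3):
    b, v = bias_variance(degree)
    print(f"degree {degree}: bias^2 = {b:.3f}, variance = {v:.3f}")
```

The straight line plays the role of the high-bias learner, since it cannot represent the curved target. The cubic has lower bias but spreads more across training sets.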

Cross-validation can help to combat overfitting, for example by using it to choose the best size of decision tree to learn. But it is no panacea, since if we use it to make too many parameter choices it can itself start to overfit [17].

Besides cross-validation, there are many methods to combat overfitting. The most popular one is adding a regularization term to the evaluation function. This can, for example, penalize classifiers with more structure, thereby favoring smaller ones with less room to overfit. Another option is to perform a statistical significance test like chi-square before adding new structure, to decide whether the distribution of the class really is different with and without this structure. These techniques are particularly useful when data is very scarce. Nevertheless, you should be skeptical of claims that a particular technique “solves” the overfitting problem. It is easy to avoid overfitting (variance) by falling into the opposite error of underfitting (bias). Simultaneously avoiding both requires learning a perfect classifier, and short of knowing it in advance there is no single technique that will always do best (no free lunch).
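Below is a minimal sketch of the chi-square check mentioned above, applied to a candidate split on a Boolean feature with a Boolean class. It builds the 2×2 table of feature value versus class and compares Pearson's statistic with 3.841, the 5% critical value for one degree of freedom. The 5% level, the function names, and the pure-Python implementation are illustrative choices. In practice a statistics library would normally supply the p-value.

```python
def chi_square_2x2(examples, j):
    """Pearson chi-square statistic for independence of Boolean feature x_j and Boolean class y."""
    counts = [[0, 0], [0, 0]]                  # counts[feature value][class]
    for x, y in examples:
        counts[x[j]][y] += 1
    n = len(examples)
    rows = [sum(r) for r in counts]
    cols = [counts[0][c] + counts[1][c] for c in (0, 1)]
    stat = 0.0
    for v in (0, 1):
        for c in (0, 1):
            expected = rows[v] * cols[c] / n
            if expected > 0:                   # an empty row/column contributes nothing
                stat += (counts[v][c] - expected) ** 2 / expected
    return stat


CRITICAL_5PCT_1DF = 3.841   # chi-square critical value: 1 degree of freedom, alpha = 0.05


def split_is_significant(examples, j):
    """Add the split on x_j only if the class distribution differs significantly across it."""
    return chi_square_2x2(examples, j) > CRITICAL_5PCT_1DF


informative = [((0,), 0)] * 20 + [((1,), 1)] * 20
uninformative = [((0,), 0)] * 10 + [((0,), 1)] * 10 + [((1,), 0)] * 10 + [((1,), 1)] * 10
print(chi_square_2x2(informative, 0), split_is_significant(informative, 0))      # 40.0 True
print(chi_square_2x2(uninformative, 0), split_is_significant(uninformative, 0))  # 0.0 False
```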

A common misconception about overfitting is that it is caused by noise, like training examples labeled with the wrong class. This can indeed aggravate overfitting, by making the learner draw a capricious frontier to keep those examples on what it thinks is the right side. But severe overfitting can occur even in the absence of noise. For instance, suppose we learn a Boolean classifier that is just the disjunction of the examples labeled “true” in the training set. (In other words, the classifier is a Boolean formula in disjunctive normal form, where each term is the conjunction of the feature values of one specific training example.) This classifier gets all the training examples right and every positive test example wrong, regardless of whether the training data is noisy or not.
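The noise-free failure is easy to reproduce. The sketch below implements the disjunction of positive training examples described above. It applies the classifier to a hypothetical target, $y = x_0 \lor x_1$ over four Boolean features, with perfectly clean labels. The target, the feature count, and the every-other-input split are assumptions made for illustration.

```python
from itertools import product


def learn_dnf_of_positives(train):
    # Each positive example becomes one conjunction of all its feature values;
    # the classifier is the disjunction (OR) of those conjunctions.
    terms = {x for x, y in train if y == 1}
    return lambda x: int(x in terms)


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


def target(x):
    return int(x[0] or x[1])                   # hypothetical true concept; labels are noise-free


examples = [(x, target(x)) for x in product((0, 1), repeat=4)]
train, test = examples[::2], examples[1::2]    # hypothetical split: every other input
model = learn_dnf_of_positives(train)
positives = [(x, y) for x, y in test if y == 1]

print(accuracy(model, train))       # 1.0
print(accuracy(model, positives))   # 0.0: every positive test example is wrong
print(accuracy(model, test))        # 0.25
```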

The problem of multiple testing [13] is closely related to overfitting. Standard statistical tests assume that only one hypothesis is being tested, but modern learners can easily test millions before they are done. As a result what looks significant may in fact not be. For example, a mutual fund that beats the market 10 years in a row looks very impressive, until you realize that, if there are 1,000 funds and each has a 50% chance of beating the market on any given year, it is quite likely that one will succeed all 10 times just by luck. This problem can be combatted by correcting the significance tests to take the number of hypotheses into account, but this can also lead to underfitting. A better approach is to control the fraction of falsely accepted non-null hypotheses, known as the false discovery rate [3].
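Both halves of this paragraph can be checked in a few lines. The first computation assumes, as in the example, 1,000 independent funds that each beat the market with probability 1/2 per year. The second part compares two corrections. A Bonferroni-style correction divides the significance level by the number of tests. The Benjamini-Hochberg step-up procedure is a standard way to control the false discovery rate. The p-values are made up for illustration.

```python
def prob_some_fund_streaks(n_funds=1000, years=10, p_beat=0.5):
    p_one = p_beat ** years                  # one given fund wins every year by luck
    return 1 - (1 - p_one) ** n_funds        # at least one of the funds does so


def bonferroni(p_values, alpha=0.05):
    """Reject a hypothesis only if its p-value clears alpha divided by the number of tests."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, q=0.05):
    """Step-up procedure controlling the false discovery rate at level q (independent tests)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k = rank                          # largest rank that passes its threshold
    rejected = set(order[:k])
    return [i in rejected for i in range(m)]


print(round(prob_some_fund_streaks(), 2))   # 0.62
p = [0.01, 0.02, 0.03, 0.5]
print(bonferroni(p))           # [True, False, False, False]
print(benjamini_hochberg(p))   # [True, True, True, False]
```

On these p-values, the Bonferroni-style correction keeps only one finding. The false-discovery-rate procedure keeps three, which illustrates why the former can lead to underfitting.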
