Introduction

This article discusses the steps involved in performing data write operations with MongoDB, focusing on the roles of the Journal and Oplog applications. Journal is a concept on the MongoDB storage engine layer while plog is a capped collection on the MongoDB master-slave replication layer.

MongoDB Journal

All data read and write operations in MongoDB require calling the interface on the storage engine layer to store and read data. The journal is an auxiliary mechanism for the storage engine to store data. Currently, MongoDB supports MMAPv1, WiredTiger, MongoRocks, and other storage engines, and all of them support the configuration of the journal.

To illustrate this, consider how WiredTiger functions. WiredTiger does not immediately store data written to it unless the configuration of the journal is complete. Instead, it performs a full-data checkpoint (storage.syncPeriodSecs configuration item) once every minute by default to make all the data persistent. If the server goes down in the middle of the process, data restoration is possible for data dating back to the most recent checkpoint.

It is often said that the enablement of journal is imperative. Upon enabling the journal, each write operation (reconstruction of the written data in the journal) is recorded in an operation log. As a result, if a fault occurs on the server after starting WiredTiger, WiredTiger can restore data from the most recent checkpoint, and the subsequent journal operation logs will be played back to restore the remaining data.

Two parameters control the actions of the journal in MongoDB. The storage.journal.enabled parameter determines whether to enable the journal and the storage.journal.commitInternalMs parameter determines the interval of the journal flushing to the disk, which has a default value of 100 ms. You can set the writeConcern to {j: true} during writing to ensure that journal flushes the disk at every write.

MongoDB Oplog

Through oplog, you can synchronize data between nodes in the replication set. The client writes data to the primary node, and the primary node records an oplog after writing the data. The secondary node pulls the oplog from the primary node (or other secondary nodes) to ensure each node in the replication set stores the same data. For the storage engine, oplog is part of the ordinary data.

One-Time Write with MongoDB

When writing a document to the MongoDB replication set, perform the following steps:

- Write the document data to the corresponding set

- Update the set's index information

- Write an oplog for synchronization

The steps above must succeed completely, or fail completely, to avoid the following instances:

- If data write is successful but the index write fails, some data may be readable in full-table scans but unreadable through indexes.

- If data write and index write are successful but the oplog write fails, the synchronization of the write operation to the secondary node will not be possible. This leads to data inconsistency between the master and slave nodes.

When MongoDB writes data, it puts the above three operations into a WiredTiger transaction to ensure the atomicity of the operations.

beginTransaction();

writeDataToColleciton();

writeCollectionIndex();

writeOplog();

commitTransaction();

Performing a transaction with WiredTiger initializes all application changes, with all the operations written to a journal operation log. The background will frequently set checkpoints to make the changes persistent and remove useless journals.

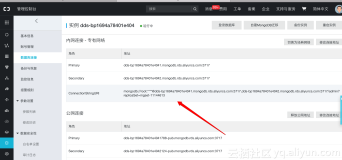

In terms of the data layout, the relationship between oplog and journal is as follows:

Conclusion

In this article, we discussed how MongoDB performs data write operations, specifically looking at the roles of oplog and journal in the process. Oplog and journal are concepts that represent the different layers of MongoDB. Since oplog is a common set in MongoDB, oplog writes and common set writes are identical. One write will change the corresponding data, index, and oplog, and these changes correspond to a journal operation log.